December 2015 snapshots: Hyperspectral and multispectral imaging, underwater robots, 3D imaging

Researchers develop inexpensive hyperspectral camera

A team of computer science and electrical engineers from the University of Washington (Seattle, WA, USA; www.washington.edu) and Microsoft Research (Redmond, WA, USA; www.research.microsoft.com) has developed an affordable hyperspectral camera that uses visible and near-infrared light.

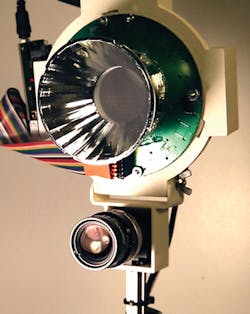

HyperCam costs approximately $800 and is based on an FL3-U3-13Y3M-C USB 3.0 camera from Point Grey (Richmond, BC, Canada; www.ptgrey.com). The camera features a 1.3 MPixel ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com) VITA1300 CMOS image sensor that can achieve frame rates of up to 150 fps and is sensitive from 350 to 1080 nm, with peak quantum efficiency at 560 nm. The camera uses 17 spectral bands created with narrow-band, off-the-shelf LEDs arranged in a ring. The LED wavelengths range from 450 to 990 nm and were selected to cover the camera's sensitivity range.

If an application needs all 17 of HyperCam's spectral bands, the camera's effective frame rate is about 9 fps (150/17). Because each LED in the ring illuminates the scene from a different direction and along a different path, glare and shadows differ from one wavelength to the next. The team compensated for this by using an integrating hemisphere to diffuse the light and minimize this directional non-uniformity.
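The frame-rate penalty is simple division: each band needs its own exposure, so one full spectral cube costs one sensor frame per LED. A minimal sketch, assuming exactly one frame per band and no LED-switching overhead (an idealization; the function name is illustrative):

```python
def effective_cube_rate(sensor_fps: float, n_bands: int) -> float:
    """Full hyperspectral cubes per second when every band needs its own frame.

    Assumes one exposure per LED band and zero switching overhead.
    """
    if n_bands < 1:
        raise ValueError("need at least one band")
    return sensor_fps / n_bands


if __name__ == "__main__":
    # Figures from the article: 150 fps sensor, 17 LED bands.
    rate = effective_cube_rate(150, 17)
    print(f"{rate:.2f} cubes/s")  # 8.82, i.e. roughly 9 fps
```

Applications that need only a subset of bands can trade spectral coverage for speed: with four bands, for instance, the same sensor would deliver 37.5 cubes/s under the same assumptions.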

Light from each LED strikes the integrating hemisphere and is reflected through an opening at its center. Although this reduces light intensity, the team found the resulting intensities satisfactory for most applications.

Point Grey's USB3 camera has a GPIO interface for power, triggering, serial I/O, pulse width modulation, and strobing. Camera control is performed through this GPIO interface using a PSoC 3 (CY8C38) programmable system-on-chip from Cypress (San Jose, CA, USA; www.cypress.com), and the team programmed state machines in its on-board EEPROM to switch rapidly between LEDs. All image processing was performed on a computer connected to the CY8C38 and to the camera over separate USB connections, with the camera sending frames directly to the computer over USB 3.0.
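The article does not publish the PSoC firmware, but the idea of a frame-synchronized LED state machine can be illustrated with a toy model: each camera trigger advances the state to the next LED in the ring, so exactly one narrow-band LED is lit per exposure. The class name and the wavelength list below are hypothetical placeholders, not HyperCam's actual design or band set.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class LedSequencer:
    """Toy model of a frame-synchronized LED ring sequencer (hypothetical design)."""

    wavelengths_nm: list[int]
    index: int = -1  # -1 is the idle state: no LED lit yet

    def on_trigger(self) -> int:
        """Advance to the next LED in the ring and return the wavelength now lit."""
        self.index = (self.index + 1) % len(self.wavelengths_nm)
        return self.wavelengths_nm[self.index]

    def capture_cube(self) -> list[int]:
        """Fire one trigger per band and return the band order of one full cube."""
        return [self.on_trigger() for _ in self.wavelengths_nm]


if __name__ == "__main__":
    # Placeholder wavelengths only; HyperCam's 17-band list is not given here.
    seq = LedSequencer([450, 600, 850, 990])
    print(seq.capture_cube())  # [450, 600, 850, 990]
    print(seq.on_trigger())    # 450: the ring wraps back to the first LED
```

Running the sequencing on a microcontroller rather than the host PC keeps LED switching in lockstep with the camera trigger, independent of USB and operating-system latency.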

HyperCam required two calibration steps. The first ensures that the setup has a flat spectral response: the intensity of each LED was calibrated by examining the camera's spectral reflectance response to a Macbeth color chart at each wavelength. The second accounts for differing lighting conditions: before each session, the team captured an ambient lighting image (i.e., an image without any LED illumination), which is then subtracted from all subsequent images.
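The two steps can be sketched in software. HyperCam equalized the LED intensities themselves, so the per-band gain below is an assumed software analogue of that hardware adjustment, not the team's procedure; images are flattened to plain lists of pixel values, and all function names are illustrative.

```python
def subtract_ambient(frame, ambient):
    """Remove the session's ambient image pixel by pixel, clamping negatives to zero."""
    return [max(p - a, 0.0) for p, a in zip(frame, ambient)]


def band_gains(reference_means, target=1.0):
    """Per-band gains that make a neutral reference patch read `target` in every band.

    `reference_means` holds the mean ambient-corrected value of a neutral
    (gray or white) chart patch, one entry per band. A zero entry raises
    ZeroDivisionError, since that band carries no usable signal.
    """
    return [target / m for m in reference_means]


def calibrate(frames, ambient, gains):
    """Ambient-subtract each band's frame, then scale it by that band's gain."""
    return [
        [g * v for v in subtract_ambient(frame, ambient)]
        for frame, g in zip(frames, gains)
    ]


if __name__ == "__main__":
    ambient = [10.0, 10.0, 10.0]      # ambient image captured once per session, 3 pixels
    frames = [
        [60.0, 110.0, 10.0],          # band A: bright LED
        [35.0, 60.0, 10.0],           # band B: LED half as bright
    ]
    gains = band_gains([50.0, 25.0])  # neutral-patch readings after ambient removal
    print(calibrate(frames, ambient, gains))  # both bands now read about [1.0, 2.0, 0.0]
```

After calibration, the same scene point reads the same value in both bands, so any remaining band-to-band differences reflect the object's reflectance rather than uneven LED output.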

In preliminary testing, the researchers showed that HyperCam can be used in biometric applications, distinguishing between hand images of 25 users with 99% accuracy. In another test, the team took hyperspectral images of 10 different fruits, from strawberries to mangoes to avocados, over the course of a week. The captured images predicted the relative ripeness of the fruits with 94% accuracy, compared with 64% for a standard RGB camera. Further information can be found at: http://bit.ly/1N9NRy0

www.vision-systems.com Vision Systems Design December 2015

Underwater robot seeks and destroys harmful starfish

Designed to seek out and eliminate the Great Barrier Reef's crown-of-thorns starfish (COTS), the COTSbot vision-guided underwater robot from the Queensland University of Technology (QUT, Brisbane, Australia; www.qut.edu.au) has recently completed its first sea trials.

"Human divers are doing an incredible job of eradicating this starfish from targeted sites but there just are not enough divers to cover all the COTS hotspots across the Great Barrier Reef," says Dr. Matthew Dunbabin from QUT's Institute for Future Environments. "We see the COTSbot as a first responder for ongoing eradication programs - deployed to eliminate the bulk of COTS, with divers following a few days later to eliminate the remaining COTS."

The COTSbot can search the reef for up to eight hours at a time, delivering more than 200 lethal doses of bile salts with a pneumatic injection arm. The vehicle navigates autonomously using a vision system based on a downward-looking stereo camera pair and a single forward-looking camera. The downward cameras detect the starfish, while the forward-facing camera is used for navigation. The cameras are CM3-U3-13S2C-CS Chameleon 3 color cameras from Point Grey (Richmond, BC, Canada; www.ptgrey.com), enclosed 44 x 35 x 19.5 mm USB 3.0 cameras that feature ICX445 CCD image sensors from Sony (Tokyo, Japan; www.sony.com). The ICX445 is a 1/3 in., 1.3 MPixel CCD sensor with a 3.75 μm pixel size that can achieve frame rates of 30 fps.

All image processing is performed on board the robot by a GPU. According to Dunbabin, the software is built around the Robot Operating System (ROS) and is optimized to exploit the GPU.

"Using this system we can process the images for COTS at greater than 7Hz, which is sufficient for real-time detection of COTS and feeding back the detected positions for controlling the underwater robot and the manipulator," he says.

QUT roboticists spent the past six months developing the robot and training it to recognize COTS among coral, using thousands of still images of the reef and videos taken by COTS-eradicating divers. Dr. Feras Dayoub, who designed the detection software, says the robot will continue to learn from its experiences in the field.

"If the robot is unsure that something is actually a COTS, it takes a photo of the object to be later verified by a human, and that human feedback is incorporated into the system," says Dayoub.

Further testing at the Great Barrier Reef will see the COTSbot in its first trials on living targets. In these trials, a human will verify each COTS identification the robot makes before the robot is allowed to inject it.
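The workflow Dayoub describes, where uncertain detections are photographed for later human labeling and, during the live-target trials, every injection waits for human approval, can be sketched as a confidence-based triage. The thresholds, class names, and functions below are hypothetical illustrations; the article does not describe QUT's actual decision logic.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    IGNORE = "ignore"                              # confidently not a COTS
    FLAG_FOR_REVIEW = "flag_for_review"            # unsure: save a photo for human labeling
    REQUEST_CONFIRMATION = "request_confirmation"  # likely COTS: ask a human before injecting


@dataclass
class Detection:
    object_id: int
    cots_score: float  # detector confidence in [0, 1]


# Hypothetical thresholds; the article does not give QUT's actual values.
REJECT_BELOW = 0.3
ACCEPT_ABOVE = 0.9


def triage(det: Detection) -> Action:
    """Route a detection according to which confidence band it falls in."""
    if det.cots_score < REJECT_BELOW:
        return Action.IGNORE
    if det.cots_score < ACCEPT_ABOVE:
        return Action.FLAG_FOR_REVIEW
    return Action.REQUEST_CONFIRMATION


def may_inject(det: Detection, human_confirmed: bool) -> bool:
    """Trial rule from the text: inject only after a human verifies the identification."""
    return triage(det) is Action.REQUEST_CONFIRMATION and human_confirmed


def add_human_label(training_set: list, det: Detection, is_cots: bool) -> None:
    """Store a reviewer's verdict so the detector can later be retrained on it."""
    training_set.append((det.object_id, is_cots))


if __name__ == "__main__":
    labels: list = []
    unsure = Detection(object_id=7, cots_score=0.6)
    print(triage(unsure))                             # Action.FLAG_FOR_REVIEW
    add_human_label(labels, unsure, is_cots=True)
    print(labels)                                     # [(7, True)]
    likely = Detection(object_id=8, cots_score=0.95)
    print(may_inject(likely, human_confirmed=False))  # False
```

Keeping the review queue separate from the injection gate means the human-confirmation rule can be relaxed after the trials without changing how uncertain cases feed back into training.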

3D cameras ensure accurate development of custom shoe insoles

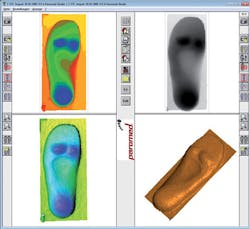

Foot impression foams help orthopedists develop shoe insoles that provide customers with additional support for their feet. To create accurate measurements of these foams, foot fitting company paromed (Neubeuern, Germany; www.paromed.biz) has developed a portable 3D measurement system.

The portable system is based on an Ensenso N10 3D camera from IDS Imaging Development Systems (Obersulm, Germany; www.ids-imaging.com). Ensenso N10 stereo cameras feature two 752 x 480 pixel global shutter CMOS image sensors and a USB interface. The N10 also includes an infrared pattern projector that casts a random dot pattern onto the object being captured, enhancing structures that are not visible, or only faintly visible, on the surface. This is necessary because stereo matching requires identifiable interest points in each image.
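Why projected texture matters can be shown with a minimal one-dimensional sketch of sum-of-absolute-differences (SAD) block matching, a common stereo correspondence technique used here purely for illustration; it is not a description of Ensenso's own matching algorithm. On a textured scanline the matching cost has a single clear minimum at the true disparity; on a uniform surface every candidate disparity fits equally well.

```python
def sad_costs(left, right, x, half_window, max_disparity):
    """Sum-of-absolute-differences cost for each candidate disparity at column x.

    Rectified stereo convention: a point at column x in the left scanline
    appears at column x - d in the right scanline.
    """
    costs = []
    for d in range(max_disparity + 1):
        cost = 0
        for k in range(-half_window, half_window + 1):
            cost += abs(left[x + k] - right[x + k - d])
        costs.append(cost)
    return costs


def best_disparities(costs):
    """All disparities sharing the minimum cost; more than one means the match is ambiguous."""
    lowest = min(costs)
    return [d for d, c in enumerate(costs) if c == lowest]


if __name__ == "__main__":
    # Textured scanline (as if lit by a random dot pattern), true disparity 3.
    left = [5, 9, 2, 7, 1, 8, 3, 6, 4, 0, 9, 2, 5, 7, 1, 3, 8, 6, 2, 4]
    right = left[3:] + [0, 0, 0]
    print(best_disparities(sad_costs(left, right, 10, 2, 5)))  # [3]

    # Uniform, featureless scanline: every disparity matches equally well.
    flat = [5] * 20
    print(best_disparities(sad_costs(flat, flat, 10, 2, 5)))   # [0, 1, 2, 3, 4, 5]
```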

The object is captured by both image sensors, and once corresponding interest points have been identified in the two images, 3D coordinates are reconstructed for each pixel by triangulation. As a result, a 3D image can be generated even of parts with a relatively monotone surface. Customers who need an individual footprint for the production of insoles step barefoot onto the foot impression foam, which deforms to match the physiological characteristics of their feet. The N10 3D camera then captures a 3D image of the footprint in the foam box.
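For a rectified stereo pair, triangulation reduces to the standard pinhole relation Z = f·B/d, where f is the focal length in pixels, B the baseline between the two sensors, and d the disparity in pixels. A minimal sketch follows; the focal length and baseline in the example are hypothetical round numbers, not Ensenso N10 specifications, and the principal point is simply taken as the center of the 752 x 480 image.

```python
def triangulate(u, v, disparity_px, focal_px, baseline_mm, cx, cy):
    """Back-project a left-image pixel with known disparity into 3D (millimeters).

    Rectified pinhole stereo: Z = f * B / d, X = (u - cx) * Z / f, Y = (v - cy) * Z / f.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    z = focal_px * baseline_mm / disparity_px
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z


if __name__ == "__main__":
    # Hypothetical optics: f = 600 px, B = 100 mm; principal point at the image center.
    print(triangulate(u=436, v=240, disparity_px=60,
                      focal_px=600, baseline_mm=100, cx=376, cy=240))
    # (100.0, 0.0, 1000.0)
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs more depth accuracy at long range than at short range, which suits close-range work such as imaging a foam box.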

From there, the 3D point clouds are converted to an internal file format, filtered, and displayed. A CAD system for individual modeling of shoe insoles processes the resulting data, which is then used to shape the insoles either from blanks or with a 3D printer.
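The article does not say which filters paromed applies. One common, simple step is a pass-through crop that keeps only valid points inside the volume of interest, here the foam box. The sketch below assumes invalid reconstructions are marked as NaN and uses hypothetical box bounds in millimeters.

```python
import math


def crop_to_box(points, lo, hi):
    """Keep finite (x, y, z) points inside the axis-aligned box [lo, hi].

    Points with any non-finite coordinate are treated as failed
    reconstructions and dropped.
    """
    kept = []
    for p in points:
        if not all(math.isfinite(c) for c in p):
            continue  # invalid point from the stereo reconstruction
        if all(l <= c <= h for c, l, h in zip(p, lo, hi)):
            kept.append(p)
    return kept


if __name__ == "__main__":
    cloud = [
        (0.0, 0.0, 500.0),           # inside the foam box
        (10.0, 20.0, 510.0),         # inside the foam box
        (float("nan"), 0.0, 500.0),  # invalid reconstruction
        (400.0, 0.0, 500.0),         # outside the box, e.g. the floor beside it
    ]
    # Hypothetical foam-box bounds in millimeters.
    print(crop_to_box(cloud, lo=(-200, -200, 450), hi=(200, 200, 600)))
    # [(0.0, 0.0, 500.0), (10.0, 20.0, 510.0)]
```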

Multispectral imaging used in feature film

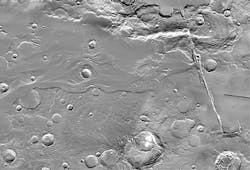

Images of Mars taken by THEMIS (Thermal Emission Imaging System), a NASA multispectral imaging camera, have been used in the recent motion picture The Martian. The 2015 science fiction film tells the story of an astronaut (Matt Damon) who is incorrectly presumed dead and left behind on Mars. THEMIS images and mosaics appear briefly in a few of the film's scenes.

Designed at Arizona State University (ASU, Tempe, AZ, USA; www.asu.edu), THEMIS is a multispectral imaging camera on NASA's Mars Odyssey spacecraft, designed to yield information on the physical and thermal properties of the Martian surface. THEMIS consists of infrared and visible multispectral imagers that have independent power and data interfaces to provide system redundancy.

The infrared imager features a 320 x 240 element uncooled microbolometer array produced commercially by Raytheon's Santa Barbara Research Center (Waltham, MA, USA; www.raytheon.com) under license from Honeywell (Morris Plains, NJ, USA; www.honeywell.com). Although the microbolometer is an uncooled type, the array is thermoelectrically cooled to stabilize the focal plane temperature to ±0.001 K. The imager has ten stripe filters that produce 1 μm-wide bands at nine separate wavelengths from 6.78 to 14.88 μm, with two of the stripes sharing the same wavelength. These bands include eight surface-sensing wavelengths and one atmospheric wavelength.

Additionally, THEMIS has a 1024 x 1024 KAI-1001 CCD image sensor from ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com) with a 9 μm pixel size and a filter plate mounted directly over the area-array detector on the focal plane. The plate carries multiple narrow-band filter strips, each covering the entire cross-track width of the detector but only a fraction of its along-track extent. The visible imager uses five such stripes, each 192 pixels wide, to acquire multispectral coverage, with bands centered near 425, 550, 650, 750, and 860 nm. The entire detector is read out every 1.3 s, and band selection is accomplished by transmitting only the selected portions of each frame to the spacecraft computer.
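Band selection on a striped filter plate amounts to keeping only the detector rows under the wanted stripes. A minimal sketch, assuming (hypothetically) that the five 192-pixel stripes are contiguous, start at row 0, and are ordered by wavelength; the actual placement of the stripes on the THEMIS filter plate is not given here.

```python
def select_bands(frame, stripe_rows, stripe_height, wanted):
    """Keep only the detector rows lying under the requested filter stripes.

    frame:        full readout as a list of rows (along-track index first)
    stripe_rows:  mapping of band center (nm) -> first detector row of that stripe
    wanted:       band centers to keep for transmission
    """
    selected = {}
    for band in wanted:
        start = stripe_rows[band]
        selected[band] = frame[start:start + stripe_height]
    return selected


if __name__ == "__main__":
    ROWS, COLS, STRIPE = 1024, 1024, 192
    # Synthetic frame: every pixel stores its own row index.
    frame = [[r] * COLS for r in range(ROWS)]
    # Hypothetical layout: five contiguous stripes from row 0, ordered by wavelength.
    bands_nm = [425, 550, 650, 750, 860]
    stripe_rows = {b: i * STRIPE for i, b in enumerate(bands_nm)}
    out = select_bands(frame, stripe_rows, STRIPE, wanted=[550, 860])
    for band, rows in out.items():
        print(band, len(rows), "rows starting at detector row", rows[0][0])
```

Under this layout, sending two of the five bands means transmitting 384 of the 1024 rows per readout, which is the data-volume saving that selective readout buys.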

THEMIS' telescope is a three-mirror anastigmat with a 12 cm effective aperture and a speed of f/1.6. A calibration flag, the only moving part in the instrument, provides thermal calibration and protects the detectors from direct illumination by the Sun.
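The stated aperture and speed fix the focal length (f = N·D = 1.6 x 12 cm, about 19.2 cm). A back-of-envelope sketch combines this with the visible imager's 9 μm pixel pitch to estimate the ground footprint of one pixel at nadir. The roughly 400 km orbital altitude is an assumption not given in the article, so treat the result as an order-of-magnitude check rather than a published specification.

```python
def focal_length_mm(f_number, aperture_mm):
    """Focal length from speed and aperture: f = N * D."""
    return f_number * aperture_mm


def ground_sample_m(pixel_um, focal_mm, altitude_km):
    """Ground footprint of one pixel at nadir, using the small-angle approximation."""
    ifov_rad = (pixel_um * 1e-3) / focal_mm  # pixel pitch (mm) / focal length (mm)
    return ifov_rad * altitude_km * 1e3      # angle (rad) * range (m)


if __name__ == "__main__":
    f_mm = focal_length_mm(1.6, 120.0)       # f/1.6, 12 cm aperture: about 192 mm
    gsd = ground_sample_m(9.0, f_mm, 400.0)  # 9 um VIS pixel; 400 km altitude is assumed
    print(f"focal length ~{f_mm:.0f} mm, VIS pixel footprint ~{gsd:.1f} m")
```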

According to ASU Professor Philip Christensen, THEMIS has achieved a longer run of observations of Mars than any previous instrument. In addition, NASA (Washington, D.C.; www.nasa.gov) says that Odyssey, which carries THEMIS, has enough propellant left on board to orbit the Red Planet for another decade.