Sharply Focused

Novel lenses and optics make light-field cameras viable

Obtaining high-contrast, sharply focused images is key in the development of every machine-vision system. Because of this, many designers use fixed-focus lenses that feature fewer moving parts and less chromatic aberration than their zoom-lens counterparts. And because these lenses usually have a larger maximum aperture than zoom lenses, they can be used in lower-light applications. With such lenses, however, developers must ensure that the parts being inspected are in focus before any image-processing functions are performed on the images.

Recently, however, researchers developing new types of cameras based on novel light-field designs promise to overcome this limitation. “Currently, digital consumer cameras make decisions about what the correct focus should be before the picture is taken, which engineering-wise can be very difficult,” says Ren Ng, a computer science graduate student in the lab of Pat Hanrahan, the Canon USA professor in the school of engineering at Stanford University. Similarly, choosing a fixed-focus lens for machine vision relies on the expertise of the system integrator.

“However,” says Ng, “with a light-field camera, one exposure can be taken, more information about the scene captured, and focusing decisions made after the image is taken.” In a conventional camera, rays of light pass through the camera’s main lens and converge onto a photosensor behind it. Each point on the resulting 2-D image is the sum of all the light rays striking that location, so the direction from which each ray arrived is lost.

Light-field cameras, often referred to as plenoptic cameras, can use a number of different methods to separate these light rays into subimages that are then captured by the image sensor. Because multiple images of a scene are taken, the relationship between aperture size and depth of field that constrains traditional camera and lens design is decoupled. But because these multiple images are taken at once, custom postprocessing software must be used to compute sharp images focused at different depths. Today, advances in semiconductor imagers, photolithography techniques, and hardware-assisted image-processing techniques are making these cameras more viable and more affordable.
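One common way to compute an image focused at a chosen depth from a set of subimages is shift-and-add: each subimage is shifted in proportion to its viewpoint offset and the results are averaged. The sketch below illustrates that idea only; it is not the software used by any camera described here. The function name `refocus`, the `(U, V, H, W)` array layout, and the `alpha` parameter are assumptions made for illustration, and shifts are rounded to whole pixels for simplicity.

```python
import numpy as np


def refocus(views, alpha):
    """Shift-and-add refocusing over a grid of subimages (illustrative sketch).

    views: array of shape (U, V, H, W), one grayscale subimage per viewpoint.
    alpha: pixels of shift per unit of viewpoint offset; changing it moves
           the plane that appears in focus.
    """
    U, V, H, W = views.shape
    cu, cv = (U - 1) / 2.0, (V - 1) / 2.0  # central viewpoint
    out = np.zeros((H, W), dtype=np.float64)
    for u in range(U):
        for v in range(V):
            # Whole-pixel shift proportional to the offset from the center view.
            dy = int(round(alpha * (u - cu)))
            dx = int(round(alpha * (v - cv)))
            # np.roll wraps around at the borders; acceptable for a sketch.
            out += np.roll(views[u, v], shift=(dy, dx), axis=(0, 1))
    return out / (U * V)
```

Objects whose apparent displacement between neighboring views matches `alpha` line up and appear sharp, while objects at other depths are smeared across several pixels. Calling `refocus` repeatedly with different `alpha` values yields a stack of images focused at different depths from a single exposure.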

One of the earliest modern prototypes of a light-field camera was built by Jason Yang, then of the Massachusetts Institute of Technology, who in 1999 developed a low-cost light-field camera based on an adapted UMAX Astra 2000P flatbed scanner with a 2-D assembly of 88 lenses in an 8 × 11 grid. Using TWAIN software supplied by the scanner manufacturer, images were captured into a host computer. By taking a series of images from different viewpoints on the image plane, new views, not just those restricted to the original plane, can be generated. Since this initial prototype, a number of other researchers and organizations have developed light-field cameras.

Engineers at Point Grey Research have developed a light-field camera with a PCI Express interface, the ProFUSION 25, that uses multiple low-cost MT9V022 wide-VGA CMOS sensors from Micron Technology to reduce the cost of the system (see Vision Systems Design, January 2008, p. 48). Like Yang’s prototype, the ProFUSION uses custom ray-tracing software to compute various images at different depths.

“While traditional light-field imaging uses an array of cameras taking multiple pictures of the same scene and there is an increase in sensor resolution now being offered by Kodak and others, it is becoming possible to capture all of the radiance information into one single image of high resolution,” says Todor Georgiev of Adobe Systems. Rather than use multiple cameras, Georgiev’s design uses a large main lens and an array of negative lenses placed behind it (see Fig. 1). This produces virtual images that are formed on the other side of the main lens and then captured by a camera using a 16-Mpixel sensor from Kodak. “This,” says Georgiev, “produces refocused output images of 700 × 700.” A movie showing the effects of digitally reconstructed refocusing can be found at www.tgeorgiev.net/Juggling.wmv.

Alternative designs

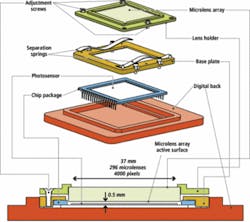

While such techniques use multiple lens elements to achieve a light-field camera design, others, notably those at Stanford University and Mitsubishi Electric Research Labs (MERL), are looking at ways to miniaturize the designs. Rather than use large multiple lens elements, Ng and his colleagues at Stanford have developed a microlens array built by Adaptive Optics Associates that has 296 × 296 lenslets that are 125 µm wide. This microlens array is mounted on a Kodak KAF-16802CE color sensor, which then forms the digital back of a Megavision FB4040 digital camera (see Fig. 2). In the design, Ng matched the relative apertures (f-numbers) of the main lens and the microlenses so that the image formed under each microlens is as large as possible without overlapping its neighbors.
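With a microlens array, each lenslet covers a small block of sensor pixels, and each pixel within that block records light from a different part of the main-lens aperture. Collecting the same pixel position from every block gives one subimage per viewpoint. The sketch below shows that rearrangement under a simplifying assumption: every lenslet covers exactly `n` × `n` pixels and is aligned to the pixel grid. The value of `n` and the function name `subaperture_views` are hypothetical, and a real camera would first need calibration and resampling to satisfy that assumption.

```python
import numpy as np


def subaperture_views(raw, n):
    """Rearrange a lenslet image into per-viewpoint subimages (sketch).

    raw: 2-D sensor image in which each lenslet covers an n x n pixel block.
    n:   assumed number of pixels per lenslet along each axis.
    Returns an array of shape (n, n, Ny, Nx) where
    views[u, v, y, x] == raw[y * n + u, x * n + v].
    """
    H, W = raw.shape
    ny, nx = H // n, W // n
    raw = raw[: ny * n, : nx * n]        # drop any partial blocks at the edges
    blocks = raw.reshape(ny, n, nx, n)   # axes: (y, u, x, v)
    return blocks.transpose(1, 3, 0, 2)  # axes: (u, v, y, x)
```

Each subimage has one pixel per lenslet, so in this simplified model the spatial resolution of a view equals the lenslet count and the number of views equals the number of pixels under each lenslet.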

Figure 2. Ren Ng and his colleagues at Stanford University have developed a microlens array that has 296 × 296 lenslets that are 125 µm wide. The microlens array is mounted on a Kodak KAF-16802CE color sensor, which then forms the back of a digital camera.

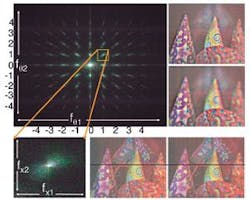

These benefits have not been overlooked by researchers at MERL, who are using a very different technique to produce similar effects. There, Ramesh Raskar and his colleagues have developed a light-field camera that does not use multiple lenses or lens arrays. Instead, the method exploits an optical version of heterodyning, in which a baseband (usually radio) signal is modulated by a high-frequency carrier signal so that it can be transmitted over long distances without noticeable energy loss. A receiver is then used to demodulate the transmitted signal and recover the baseband signal. In essence, Raskar and his colleagues have achieved this optically, developing an optical 2-D mask that modulates the angular variations in the light field to higher frequencies that are detected by a 2-D photosensor.

To achieve this, a 2-D cosine mask consisting of four harmonics in both dimensions was used. As light passes through the mask, it is optically modulated, resulting in a fine shadow-like pattern. By compensating for this shadowing effect, a full 2-D image of the scene that was in focus can be recovered. Taking the magnitude of the 2-D Fourier transform of the image reveals the spectral tiles that correspond to the 81 images in the light field, all of which are in focus (see Fig. 3). Fourier domain decoding also can be used to recover all 81 images. Using a 1629 × 2052 imager results in 81 focused images at 181 × 228 resolution.
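The figures quoted above fit together arithmetically. A cosine mask with four harmonics contributes a zero-frequency term plus four positive and four negative frequencies, or 2 × 4 + 1 = 9 spectral copies per dimension and 9 × 9 = 81 tiles overall; dividing the 1629 × 2052 imager by 9 along each axis gives 181 × 228. The sketch below reproduces that arithmetic and cuts the centered 2-D spectrum of a sensor image into such tiles. It is only an illustration of the tiling, not the MERL decoder; the function name `spectral_tiles` and the row/column orientation are assumptions.

```python
import numpy as np

HARMONICS = 4
TILES_PER_AXIS = 2 * HARMONICS + 1   # DC term plus +/- four harmonics = 9
SENSOR_SHAPE = (1629, 2052)          # imager size quoted in the article


def spectral_tiles(sensor, tiles_per_axis):
    """Split the centered 2-D spectrum of an image into a grid of tiles.

    Returns a complex array of shape
    (tiles_per_axis, tiles_per_axis, tile_height, tile_width).
    """
    H, W = sensor.shape
    th, tw = H // tiles_per_axis, W // tiles_per_axis
    cropped = sensor[: th * tiles_per_axis, : tw * tiles_per_axis]
    # fftshift moves the zero-frequency term to the middle of the spectrum,
    # so the central tile holds the unmodulated (baseband) copy.
    spectrum = np.fft.fftshift(np.fft.fft2(cropped))
    tiles = spectrum.reshape(tiles_per_axis, th, tiles_per_axis, tw)
    return tiles.transpose(0, 2, 1, 3)


tile_shape = (SENSOR_SHAPE[0] // TILES_PER_AXIS, SENSOR_SHAPE[1] // TILES_PER_AXIS)
print(TILES_PER_AXIS ** 2, tile_shape)
```

A complete decoder would go further, reassembling the tiles into a 4-D spectrum and inverting it to recover the individual images; that step is omitted here.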

Extending the depth of field while maintaining a wide aperture may provide significant benefits to industries such as security surveillance. Often mounted in crowded or dimly lit areas, such as airport security lines and backdoor exits, monitoring cameras notoriously produce grainy, indiscernible images. “Let’s say it’s nighttime and the security camera is trying to focus on something,” said Ng. “If someone is moving around, the camera will have trouble tracking them. Or if there are two people, whom does it choose to track? The typical camera will close down its aperture to try capturing a sharp image of both people, but the small aperture will produce video that is dark and grainy.” Today, such developments remain research projects. Within the next few years, however, expect developments to emerge into mainstream consumer and industrial cameras.


Websites of interest

graphics.stanford.edu/papers/lfcamera/lfcamera-150dpi.pdf

www.time4.com/time4/microsites/popsci/howitworks/lightfield_camera.html

groups.csail.mit.edu/graphics/pubs/thesis_jcyang.pdf

www.merl.com/people/raskar/Talks/07ACCV/raskarCodedPhotographyACCV2007.pdf

www.merl.com/people/raskar/

www.digitalcamerainfo.com/content/Mitsubishi-Electric-Develops-Camera-to-Refocus-Photos.htm

www-bcs.mit.edu/people/jyawang/demos/plenoptic/plenoptic.html

www.merl.com/people/raskar/Mask/

www.tgeorgiev.net/LightFieldCamera.pdf

www.tgeorgiev.net/IntegralView.pdf

www.merl.com/people/raskar/Mask/Sig07CodedApertureOpticalHeterodyningLowRes.pdf

news-service.stanford.edu/news/2005/november9/camera-110205.html