Synthesizer adds pattern recognition

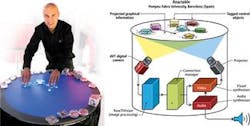

One of the highlights of the Vision 2007 show in Stuttgart, Germany, was a demonstration of a music synthesizer that uses a new type of human-machine interface made possible by pattern-recognition techniques. Known as the Reactable, the instrument was developed by researchers at the Pompeu Fabra University (Barcelona, Spain; http://mtg.upf.edu/reactable) using an off-the-shelf camera, computer projector, PC, and a custom-built light table (see figure).

To synthesize music, different Plexiglas objects are placed on the table and moved in relation to one another. These objects perform different functions based upon their geometric shape. For example, square elements generate basic tones, while round objects act as sound filters that modulate these basic tones. The symbol on each selected element determines the type of basic tone or filter, and the spatial relationship of the objects to each other determines the extent to which one element affects another.

To meet requirements for object recognition, a special collection of symbols, known as amoeba, was developed to code each individual Plexiglas object. To recognize each object, images of the table are captured with a Guppy FireWire camera from Allied Vision Technologies (Stadtroda, Germany; www.alliedvisiontec.com), and each image is converted to black and white using an adaptive thresholding algorithm. This image is then segmented into a region adjacency graph that reflects the structure of the alternating black-and-white regions of the amoeba. This graph is searched for the unique tree structures that are encoded into the symbols on each object. The identified trees are matched to a dictionary to retrieve the unique marker identification of the symbols.
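The recognition steps described above can be sketched in a few lines of Python. This is a minimal illustration and not the Reactable's actual code: the function names, the tiny test marker, the neighborhood-mean threshold, and the parenthesis string used as a tree code are all assumptions made for the example.

```python
from collections import deque

def adaptive_threshold(img, radius=1):
    """Return 1 where a pixel is brighter than the mean of its neighborhood."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = 1 if img[y][x] > sum(window) / len(window) else 0
    return out

def label_regions(binary):
    """Give every 4-connected black or white region its own integer label."""
    h, w = len(binary), len(binary[0])
    labels = [[-1] * w for _ in range(h)]
    next_label = 0
    for y in range(h):
        for x in range(w):
            if labels[y][x] != -1:
                continue
            labels[y][x] = next_label
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < h and 0 <= nx < w and labels[ny][nx] == -1
                            and binary[ny][nx] == binary[y][x]):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels

def adjacency_graph(labels):
    """Connect two regions whenever they share a pixel border."""
    h, w = len(labels), len(labels[0])
    graph = {}
    for y in range(h):
        for x in range(w):
            a = labels[y][x]
            graph.setdefault(a, set())
            for ny, nx in ((y + 1, x), (y, x + 1)):
                if ny < h and nx < w and labels[ny][nx] != a:
                    b = labels[ny][nx]
                    graph[a].add(b)
                    graph.setdefault(b, set()).add(a)
    return graph

def canonical_tree(graph, root, parent=None):
    """Order-independent code for the nesting tree below root.

    Assumes the regions are strictly nested, so the graph is a tree.
    """
    children = sorted(canonical_tree(graph, c, root)
                      for c in graph[root] if c != parent)
    return "(" + "".join(children) + ")"

# Stripes under uneven lighting: a single global threshold would fail here.
ramp = [[0, 50, 20, 70, 40, 90, 60, 110]]
stripes = adaptive_threshold(ramp)

# Hypothetical marker: a black blob with two white holes, one holding a black dot.
MARKER = [
    "1" + "1" * 9 + "1",
    "1" + "0" * 9 + "1",
    "10111011101",
    "10101011101",
    "10111011101",
    "1" + "0" * 9 + "1",
    "1" + "1" * 9 + "1",
]
binary = [[int(c) for c in row] for row in MARKER]
labels = label_regions(binary)
graph = adjacency_graph(labels)
code = canonical_tree(graph, root=labels[1][1], parent=labels[0][0])

DICTIONARY = {"((())())": 7}      # hypothetical tree code -> marker ID
marker_id = DICTIONARY.get(code)  # None if the tree is not a known marker
```

Because the code depends only on how regions nest inside one another, it does not change when the marker is moved or rotated on the table.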

The Reactable hardware is based on a translucent, round multitouch surface. The camera beneath the table continuously analyzes the surface, tracking the player’s fingertips and the nature, position, and orientation of physical objects that are distributed on its surface. These objects represent the components of a classic modular synthesizer. The musician can move these objects, changing their distance, orientation, and the relation to each other. These actions directly control the topological structure and parameters of the sound synthesizer. A Sanyo projector, also underneath the table, draws dynamic animations on its surface, providing visual feedback of the state, activity, and main characteristics of the sounds produced by the synthesizer.

The Reactable projects markings onto the surface of the table to make the instrument easy to operate. These markings confirm to the musician that an object has been recognized and provide additional information regarding the status of the generated tone and its interaction with neighboring objects, allowing the artist to see the connections and a dynamic graphic presentation of the generated sound waves on the table. The musician can change individual sound parameters by touching the projected information with a finger.

After images are captured by the Guppy camera, the image of each Plexiglas object is analyzed and its position and orientation are measured. Corresponding sound information is then generated and transferred to the PC’s loudspeakers as an audio signal, as well as being graphically projected onto the tabletop.

At Vision 2007, Günter Geiger, a senior researcher at Pompeu Fabra University, demonstrated how each object could be used to synthesize sound (see photo). First, an object representing a sine-tone generator was placed on the table. By rotating the object, the frequency of the sound generated could be modulated. Next, an object representing a bandpass filter was placed on the table. After the PC recognized the object, the bandpass functions of the object were linked with the sine generator and displayed graphically.

To change the resonant frequency of this filter, the object can again be rotated. In a similar manner, a third object, in this case an audio delay, can also be placed in the pipeline and its delay length modified. The result is a graphical representation of the sound path built from real-world physical objects. Other objects, such as low-pass filters, can be added to the table as required.
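The signal path in this demonstration (sine generator, bandpass filter, delay) can be approximated with the following Python sketch. It is an illustration under stated assumptions, not the Reactable's synthesis engine: the mapping from rotation angle to frequency, the sample rate, the filter Q, and the delay mix are all hypothetical values, and the filter is a standard biquad bandpass from the widely used RBJ audio EQ cookbook.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed)

def angle_to_frequency(angle_deg, f_min=110.0, octaves=3.0):
    """Hypothetical mapping: one full turn sweeps `octaves` octaves above f_min."""
    return f_min * 2.0 ** (octaves * (angle_deg % 360.0) / 360.0)

def sine(freq, n):
    """Generate n samples of a unit-amplitude sine tone."""
    return [math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n)]

def bandpass(signal, center, q=5.0):
    """Biquad bandpass with unity gain at the center frequency."""
    w0 = 2 * math.pi * center / SAMPLE_RATE
    alpha = math.sin(w0) / (2 * q)
    b0, b2 = alpha, -alpha                     # b1 is zero for this filter
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out

def delay(signal, seconds, mix=0.5):
    """Add a single echo of the signal, `seconds` later, scaled by `mix`."""
    d = round(seconds * SAMPLE_RATE)
    return [s + mix * (signal[i - d] if i >= d else 0.0)
            for i, s in enumerate(signal)]

# Rotating the generator a third of a turn selects the tone frequency.
freq = angle_to_frequency(120)
tone = sine(freq, SAMPLE_RATE)                  # one second of audio

# The filter object is rotated to the same frequency, so the tone passes.
filtered = bandpass(tone, center=angle_to_frequency(120))

# Rotating the filter to a much higher frequency attenuates the tone.
rejected = bandpass(tone, center=1760.0)

# A third object adds a quarter-second echo at the end of the chain.
echoed = delay(filtered, seconds=0.25)
```

Each function corresponds to one object on the table, and chaining the calls mirrors the order in which the objects are linked.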

“With a camera and a constant bandwidth, we can monitor the entire surface, regardless of how many objects are on the table. In addition, image processing determines not only the position of the objects but also their orientation (rotation) and can recognize human fingers on the tabletop. With this, the optical solution offers many more fine-tuning possibilities and nuances than alternative technologies and places almost no limits on musical creativity,” says Sergi Jordà, director of the project.

Image transfer and processing speed were critical in the design of the system, as the instrument must react quickly to the commands of the musician. “With the digital FireWire cameras, the data rate allows 60 frames/s to be captured and processed in real time. To lessen sensitivity to light, the Guppy camera was equipped with a near-IR filter,” explains Jordà.
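To put the 60 frames/s figure in perspective, the short calculation below estimates the time available per frame and the resulting camera data rate. Only the frame rate comes from the article; the 640 x 480 resolution, the 8-bit monochrome pixel format, and the nominal 400-Mbit/s bus speed are assumptions made for illustration.

```python
FRAME_RATE = 60                      # frames/s, as quoted in the article

# Time available to capture, analyze, and respond to one frame.
frame_budget_ms = 1000 / FRAME_RATE  # about 16.7 ms

# Assumed image format (not stated in the article).
WIDTH, HEIGHT, BITS_PER_PIXEL = 640, 480, 8
data_rate_mbit = WIDTH * HEIGHT * BITS_PER_PIXEL * FRAME_RATE / 1e6

# Assumed nominal FireWire bus speed in Mbit/s.
BUS_MBIT = 400
bus_share = data_rate_mbit / BUS_MBIT

print(f"{frame_budget_ms:.1f} ms per frame")
print(f"{data_rate_mbit:.1f} Mbit/s, {bus_share:.0%} of the bus")
```

Under these assumptions the whole recognition and synthesis loop has roughly 17 ms per frame, and the data rate is fixed by the image format and not by the number of objects on the table.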