Image-capture boards speed vision tasks

Combining FPGAs, embedded processors, and real-time operating systems, PCI boards offload image-processing functions from the host PC.

By Andrew Wilson, Editor

System integrators wishing to perform complex image-processing tasks at high speed must look beyond the numerous PC-based frame-grabber boards currently available. While these boards provide interfaces to analog and digital camera standards, even the simplest image-processing operations are left to the host PC. When large amounts of data must be captured and analyzed rapidly, even the fastest 3-GHz host computers cannot process the data quickly enough for real-time machine-vision tasks.

To address this, several board vendors offer complete image-capture and processing boards that often feature on-board or optional display capabilities. Although designing these boards into machine-vision systems demands more from the system integrator, the benefits are often worthwhile. Before purchasing such products, however, the system integrator must carefully evaluate the tasks to be performed, because the choice of board will be application-specific.

Today’s high-performance add-in image-processing boards are not simple frame grabbers. Because they incorporate on-board digital and analog camera interfaces, look-up tables (LUTs), FPGAs, image memory, RISC processors, DSPs, or CPUs, and often display capability, they resemble stand-alone image processors more than frame grabbers or image accelerators. Together, these features allow system integrators to partition their image-processing or machine-vision algorithms between on-board dataflow hardware and von-Neumann architectures.

“No one wants to purchase an expensive board unless they have to,” says Philip Colet, vice president of marketing at Dalsa Coreco, “because in many applications, a simple frame grabber will suffice. However, in some applications, the data rate may be too high, the algorithm may be too complex, or the application may be mission critical.” In these applications, he says, the PC must not be burdened with complex image-processing responsibilities, since it may be required to perform other functions.

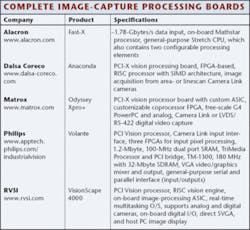

Several companies, such as Alacron, Dalsa Coreco, Matrox, RVSI Acuity CiMatrix, and Philips, offer add-in image-processing boards (see Fig. 1 and table). With on-board LUTs, FPGAs, and CPUs, these boards avoid the limitations of the host computer’s operating system (Windows or Linux), which in some cases make the host unsuitable for real-time distributed image-processing and machine-vision applications.

By understanding the nature of the task to be performed and the architecture required to perform it, system integrators can optimize their machine-vision applications. For some applications, it may be important to adjust the contrast characteristics of the image. This is best done with LUTs that remap the original pixel values to a new set of values, as determined by a contrast curve set by the operator. By adjusting the curve, and thus the values in the LUT, the contrast of the image can be increased or decreased. LUTs can also be used for functions such as windowing, which allows the programmer to display and enhance the contrast of the image within a selected segment of the total pixel-value range. When the window is set to cover the lower segment of the total pixel-value range, good contrast becomes apparent in the darker areas of the image (see Fig. 2).
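As a minimal sketch of LUT-based windowing, the Python below builds a 256-entry table that stretches a chosen segment of an 8-bit pixel range across the full output range and then remaps an image through it. It assumes grayscale images stored as lists of rows, with 0 as black; the function names are hypothetical and do not correspond to any vendor's board API, where the table would instead be loaded into LUT hardware.

```python
def build_window_lut(low, high, levels=256):
    """Build a windowing LUT mapping pixel values in [low, high] onto [0, levels - 1].

    Values at or below the window clip to 0 and values at or above it clip to
    levels - 1, so the whole output range is spent on the selected segment.
    """
    if not 0 <= low < high <= levels - 1:
        raise ValueError("window must satisfy 0 <= low < high <= levels - 1")
    lut = []
    for v in range(levels):
        if v <= low:
            lut.append(0)
        elif v >= high:
            lut.append(levels - 1)
        else:
            # Linear contrast curve inside the window.
            lut.append(round((v - low) * (levels - 1) / (high - low)))
    return lut


def apply_lut(image, lut):
    """Remap every pixel with a single table lookup, as LUT hardware does."""
    return [[lut[p] for p in row] for row in image]


# Window the darkest quarter of the 8-bit range (assumes 0 = black).
dark_window = build_window_lut(0, 63)
print(apply_lut([[10, 20], [200, 0]], dark_window))  # [[40, 81], [255, 0]]
```

Two dark pixels that started 10 levels apart end up 41 levels apart, while everything brighter than the window saturates. A linear curve inside the window is only one option; a gamma or S-shaped contrast curve would be loaded into the same table.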

Unsharp masking is another image-processing function that can increase the contrast of objects within images. Here again, LUTs combined with on-board memory in a dataflow architecture can perform it rapidly. First, the original image is blurred by replacing each pixel value with the average of the pixel values in its neighborhood. This blurred image is then subtracted from the original using pipelined hardware, leaving an image in which small objects and fine structures are enhanced; adding a scaled version of this difference back to the original produces a sharpened image. To perform these functions in real time, however, LUTs must be combined with image storage and pipelined hardware (often FPGAs). If the image processor is not designed this way, captured images must be transferred to the PC, processed, and redisplayed, which could slow the system by an order of magnitude compared with a pipelined approach.
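The blur, subtract, and add-back steps of unsharp masking can be sketched in Python as follows, assuming an 8-bit grayscale image stored as a list of rows. The 3 × 3 neighborhood, the `amount` parameter, and clipping to 0 to 255 are illustrative assumptions; a board would run the same steps in pipelined hardware rather than in nested loops.

```python
def box_blur(image):
    """3 x 3 mean filter; at the borders only the neighbors that exist are averaged."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0, 0
            for yy in range(max(y - 1, 0), min(y + 2, h)):
                for xx in range(max(x - 1, 0), min(x + 2, w)):
                    total += image[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def unsharp_mask(image, amount=1.0, levels=256):
    """Subtract the blurred image to isolate fine detail, then add it back scaled."""
    blurred = box_blur(image)
    result = []
    for row, blurred_row in zip(image, blurred):
        out_row = []
        for p, b in zip(row, blurred_row):
            detail = p - b                      # high-pass "mask": original minus blur
            value = round(p + amount * detail)  # boost the detail
            out_row.append(min(max(value, 0), levels - 1))  # clip to the valid range
        result.append(out_row)
    return result


# A bright point on a gray background: the point is pushed brighter and its
# immediate neighbors darker, which is what makes small structures stand out.
spot = [[20] * 5 for _ in range(5)]
spot[2][2] = 110
print(unsharp_mask(spot)[2])  # [20, 10, 190, 10, 20]
```

Flat regions pass through unchanged because their detail term is zero; only pixels near an intensity change are altered.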

NEIGHBORHOOD OPERATORS

In many machine-vision applications, altering the contrast of a captured image is only a first step. Next, image filtering may be required to smooth or sharpen the image and find relevant edges on which further computations can be based. These neighborhood operations are generally performed by applying a convolution kernel over the image. Such kernels determine each pixel value in the output image by applying an algorithm or a matrix of weights to the corresponding neighborhood of pixels in the input image. Convolution kernels implement functions such as spatial filters and feature detectors.
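A minimal Python sketch of a neighborhood operator, assuming images stored as lists of rows and computing only the "valid" region where the kernel fits entirely inside the image. The Sobel kernel used here is a standard edge detector that responds to vertical edges. Like most image-processing code, the loop does not flip the kernel (strictly, it computes correlation); for the antisymmetric Sobel kernel, flipping would only invert the sign of the result.

```python
def convolve(image, kernel):
    """Slide a weight matrix over the image and multiply/accumulate at each position.

    Only positions where the kernel fits entirely inside the image are computed,
    so the output shrinks by (kernel size - 1) in each dimension.
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    out = []
    for y in range(h - kh + 1):
        row = []
        for x in range(w - kw + 1):
            acc = 0
            for j in range(kh):
                for i in range(kw):
                    acc += kernel[j][i] * image[y + j][x + i]
            row.append(acc)
        out.append(row)
    return out


# Sobel kernel that responds to horizontal intensity changes (vertical edges).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

step_edge = [[0, 0, 0, 10, 10, 10] for _ in range(3)]
print(convolve(step_edge, SOBEL_X))  # [[0, 40, 40, 0]]
```

Each output pixel costs kh × kw multiply/accumulate operations, 64 for an 8 × 8 kernel, which is why arrays of dedicated multiplier/accumulators in the data path pay off.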

To pipeline these functions, an array of multiplier/accumulators must be designed into the data path of the image-processing board. Before the advent of FPGAs, bipolar fixed-data-path multiplier/accumulators were used. Because these were custom devices, they were limited to performing specific functions across the image, usually 8 × 8 convolution kernels. Now, by incorporating FPGAs within reconfigurable processor pipelines, board vendors can offer greater flexibility and higher data throughput. This hardware allows functions such as the Hough transform (HT) to be implemented rapidly using pipelined multiplier/accumulators and on-board image memory.
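The Hough transform's inner loop suits pipelined multiplier/accumulators because every edge pixel votes, independently, for each line that could pass through it. Below is a minimal Python sketch for straight lines in the (rho, theta) parameterization, assuming edge points have already been extracted; the 1° angular resolution and the function names are illustrative choices, not those of any particular board.

```python
import math


def hough_lines(edge_points, width, height, n_theta=180):
    """Accumulate votes for lines rho = x*cos(theta) + y*sin(theta).

    edge_points: iterable of (x, y) coordinates already marked as edges.
    Returns the accumulator (rows indexed by rho + diag, columns by theta step)
    and diag, the offset that turns negative rho values into valid indices.
    """
    diag = math.ceil(math.hypot(width, height))
    acc = [[0] * n_theta for _ in range(2 * diag + 1)]
    trig = [(math.cos(math.pi * t / n_theta), math.sin(math.pi * t / n_theta))
            for t in range(n_theta)]
    for x, y in edge_points:
        for t, (c, s) in enumerate(trig):
            rho = round(x * c + y * s)  # two multiplies and an add per angle
            acc[rho + diag][t] += 1
    return acc, diag


def strongest_line(acc, diag, n_theta=180):
    """Return (rho, theta in degrees, votes) for the accumulator peak."""
    votes, r, t = max((v, r, t) for r, row in enumerate(acc)
                      for t, v in enumerate(row))
    return r - diag, 180.0 * t / n_theta, votes


# A vertical line of edge pixels at x = 5 in a 100 x 100 image.
points = [(5, y) for y in range(100)]
acc, diag = hough_lines(points, 100, 100)
print(strongest_line(acc, diag))  # (5, 0.0, 100)
```

All 100 points agree only at theta = 0 and rho = 5, so the peak identifies the line; in hardware, the accumulator lives in on-board image memory.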

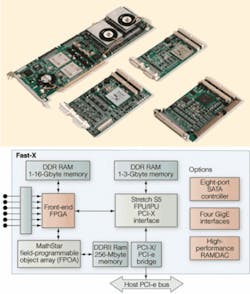

One example is the Fast-X board from Alacron. It provides high-speed data input (~1.78 Gbytes/s), a front-end interface to preprocess the data, a MathStar chip with two 128-bit DDRAM memory systems, and a general-purpose processor from Stretch that also contains two configurable processing elements, which can be customized for a specific application pipeline. “With this kind of hardware,” says Joseph Sgro, Alacron president, “8 × 8 convolutions used by algorithms such as contrast-limited adaptive histogram equalization (CLAHE) can be performed in real time at more than 250 Mpixels/s. Alternatively, HTs can be performed at more than 500 Mpixels/s” (see Fig. 3).
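Full CLAHE divides the image into tiles, equalizes each tile's histogram under a clip limit, and bilinearly interpolates the resulting mappings between neighboring tiles. The Python sketch below shows only the per-tile, contrast-limited equalization step, assuming an 8-bit tile stored as a list of rows and a clip limit expressed as a raw count per histogram bin. Tiling and interpolation are omitted, and sending leftover clipped counts to the lowest bins is a simplification; implementations differ on that detail.

```python
def clip_limited_equalize(tile, clip_limit, levels=256):
    """Contrast-limited histogram equalization of a single tile.

    Counts above clip_limit are clipped and redistributed, which caps the
    slope of the mapping and therefore how strongly contrast is stretched.
    """
    hist = [0] * levels
    for row in tile:
        for p in row:
            hist[p] += 1
    # Clip each bin and collect the excess counts.
    excess = sum(max(h - clip_limit, 0) for h in hist)
    hist = [min(h, clip_limit) for h in hist]
    # Spread the excess evenly; leftover counts go to the lowest bins.
    bonus, leftover = divmod(excess, levels)
    hist = [h + bonus + (1 if i < leftover else 0) for i, h in enumerate(hist)]
    # The cumulative histogram, scaled to the output range, is the mapping LUT.
    total = sum(hist)
    lut, running = [], 0
    for h in hist:
        running += h
        lut.append(round((levels - 1) * running / total))
    return [[lut[p] for p in row] for row in tile]


tile = [[50] * 4, [50] * 4, [60] * 4, [60] * 4]
print(clip_limited_equalize(tile, clip_limit=1000))  # no clipping: 50 -> 128, 60 -> 255
print(clip_limited_equalize(tile, clip_limit=4))     # clipped: 50 -> 191, 60 -> 255
```

Without clipping, the two gray levels end up 127 levels apart; with the clip limit, only 64, showing how the limit restrains the contrast stretch in each tile.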

The hardware combination used on the Alacron board allows the two algorithms to run in real time, whereas in the past, when implemented on general-purpose hardware, they were limited to less than 25 Mpixels/s (CLAHE) and about 5 Mpixels/s (HT). The advantage of these higher-speed processing elements is that more complex pattern-recognition algorithms can be implemented in real time at high resolutions, improving classification and reducing false positives and false negatives.
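The quoted throughput figures translate into the speedups below and, for an assumed 1024 × 1024 image with 1 Mpixel taken as 10^6 pixels (the image size is chosen only for illustration; the article does not specify one), into the frame rates shown. Because the figures are quoted as bounds or approximations (“more than,” “less than,” “~”), the results are approximate.

```latex
\begin{align*}
\text{speedup (CLAHE)} &= \frac{250\ \text{Mpixels/s}}{25\ \text{Mpixels/s}} = 10\times \\
\text{speedup (HT)} &= \frac{500\ \text{Mpixels/s}}{5\ \text{Mpixels/s}} = 100\times \\
\text{pixels per frame} &= 1024 \times 1024 = 1{,}048{,}576 \\
\text{CLAHE frame rate} &\approx \frac{250 \times 10^{6}}{1{,}048{,}576} \approx 238\ \text{frames/s}
  \quad (\text{vs. } 23.8\ \text{frames/s at } 25\ \text{Mpixels/s}) \\
\text{HT frame rate} &\approx \frac{500 \times 10^{6}}{1{,}048{,}576} \approx 477\ \text{frames/s}
  \quad (\text{vs. } 4.8\ \text{frames/s at } 5\ \text{Mpixels/s})
\end{align*}
```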

FEATURE EXTRACTION

While point and neighborhood functions can perform a variety of image-processing and machine-vision algorithms, they are not the best choice when image segmentation, feature extraction, or interpretation is required. Although preprocessing stages using LUTs and FPGAs can highlight specific features within an image, segmentation, feature extraction, and interpretation require more complex functions that are best performed by von-Neumann-like architectures. The primary reason is that many of these operations follow an “if, else if” programming model, in which the next step depends on the data being processed, and such branching cannot be accomplished in a pipelined fashion.

To segment objects within an image, for example, watershed segmentation algorithms are often used (see Fig. 4). These algorithms map high-intensity regions within the image as “tall” areas and low-intensity regions as “low-lying” areas, so that the objects in the image can be treated as features of a landscape within a flood plain.

By slowly raising the level of “water” in the flood plain, tall, or high-intensity, objects remain above the surface, while those of low intensity become submerged and are thus obscured from the final image. Using these algorithms, the image can be segmented at different scales. The operator must then determine which scale level best highlights the structures of interest within the image.
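The flooding idea can be illustrated with a simplified Python sketch that is not a full watershed transform: at a given water level, it labels the connected regions that remain above the surface, and sweeping the level shows how the segmentation changes with scale. A true watershed algorithm additionally tracks where separate basins meet and builds dividing lines there. Grayscale images stored as lists of rows and 4-connectivity are assumptions, and the function name is hypothetical.

```python
from collections import deque


def regions_above_water(image, level):
    """Label 4-connected regions whose intensity is above the water level.

    Returns (labels, count): labels holds 0 for submerged pixels and
    1..count for each region still above water.
    """
    h, w = len(image), len(image[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if image[y][x] > level and labels[y][x] == 0:
                count += 1                      # found a new "island"
                labels[y][x] = count
                queue = deque([(y, x)])
                while queue:                    # breadth-first flood fill
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if (0 <= ny < h and 0 <= nx < w
                                and image[ny][nx] > level and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


# Two peaks (80 and 90) joined by a saddle of 40.
terrain = [[10, 10, 10, 10, 10],
           [10, 80, 40, 90, 10],
           [10, 10, 10, 10, 10]]
for level in (30, 50, 85, 95):
    print(level, regions_above_water(terrain, level)[1])
```

At a water level of 30 the two peaks form one object; at 50 the saddle is submerged and they separate into two; at 85 only the taller peak remains, and at 95 everything is under water. Choosing among these is the scale decision left to the operator.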

OCR/OCV (optical character recognition/verification) can also be computationally intensive. Before recognition is accomplished, preprocessing stages threshold the image to produce a binary image that segments the text from the background. Then, disconnected elements are joined by a series of morphological operators that grow these regions into more recognizable characters. After this is complete, the characters are segmented, and a feature-vector set of each captured character is compared with that of a known good example to provide a statistical measure of how well the character matches. While the thresholding and morphological stages lend themselves to pipelined point and neighborhood operations, feature-vector comparison and statistical analysis may be better suited to a general-purpose processor, so OCR is best served by a combination of processing methods.
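A stripped-down Python sketch of the OCR stages described above, assuming dark text on a light 8-bit background stored as lists of rows. The zoning feature vector and the mean-absolute-difference score are illustrative stand-ins for the statistical fit a production OCR/OCV tool would compute; all function names are hypothetical.

```python
def threshold(image, t):
    """Binary image: 1 where the pixel is darker than t (dark text on a light background)."""
    return [[1 if p < t else 0 for p in row] for row in image]


def dilate(binary):
    """3 x 3 binary dilation: a pixel is set if any neighbor is set, bridging small gaps."""
    h, w = len(binary), len(binary[0])
    return [[1 if any(binary[yy][xx]
                      for yy in range(max(y - 1, 0), min(y + 2, h))
                      for xx in range(max(x - 1, 0), min(x + 2, w))) else 0
             for x in range(w)]
            for y in range(h)]


def zone_features(binary, zones=2):
    """Feature vector: fraction of set pixels in each cell of a zones x zones grid.

    Assumes the character image is at least zones pixels in each dimension.
    """
    h, w = len(binary), len(binary[0])
    features = []
    for zy in range(zones):
        for zx in range(zones):
            cells = [binary[y][x]
                     for y in range(zy * h // zones, (zy + 1) * h // zones)
                     for x in range(zx * w // zones, (zx + 1) * w // zones)]
            features.append(sum(cells) / len(cells))
    return features


def match_score(features, reference):
    """Similarity in [0, 1]: one minus the mean absolute feature difference."""
    return 1 - sum(abs(a - b) for a, b in zip(features, reference)) / len(reference)


stroke = threshold([[30, 200, 30]], 128)  # [[1, 0, 1]]: a stroke broken by one pixel
print(dilate(stroke))                     # [[1, 1, 1]]: the break is bridged
```

The first two functions are point and neighborhood operations that map naturally onto pipelined hardware; the last two involve the kind of per-character bookkeeping and comparison that suits a general-purpose processor.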

“Wafer inspection is suited to a balanced-coprocessor approach,” says Dalsa Coreco’s Colet, “because extremely high spatial resolutions coupled with large object size combine to create huge amounts of data that must be processed in real time.” In a typical wafer-inspection application, 200- and 300-mm bump wafers are often inspected using 4K TDI linescan cameras that produce 160 Mbytes/s.
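Assuming 8-bit (1-byte) pixels, a 4096-pixel line, and decimal megabytes (none of which the article states), the 160-Mbyte/s figure implies roughly the following line rate and data volume:

```latex
\begin{align*}
\text{line rate} &= \frac{160 \times 10^{6}\ \text{bytes/s}}
  {4096\ \text{pixels/line} \times 1\ \text{byte/pixel}} \approx 39{,}063\ \text{lines/s} \\
\text{data per minute} &= 160\ \text{Mbytes/s} \times 60\ \text{s} = 9.6\ \text{Gbytes}
\end{align*}
```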

The standard approach is to compare the acquired image with an ideal version of the inspected object (a golden image). Any differences between the two indicate the presence of defects on the wafer. However, because the wafer-positioning mechanism and acquisition process induce spatial drift and rotation, fiducial marks must first be found in the acquired image, and a rotation/translation step must then be performed to bring the acquired image into alignment with the golden image. “What makes Coreco’s Anaconda ideal for this type of application,” says Colet, “is that by incorporating both an FPGA and PowerPC, compute-intensive searches can be off-loaded to the PowerPC while the FPGA performs functions such as translation and rotation and masked subtraction of the new image from the golden image.”
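A translation-only Python sketch of the golden-image workflow, assuming small grayscale images stored as lists of rows. The rotation step is omitted for brevity, the fiducial search is a brute-force sum-of-absolute-differences match, and all names and values are illustrative. In the division of labor Colet describes, the search would run on the PowerPC and the shift and masked subtraction in the FPGA.

```python
def find_fiducial(image, template):
    """Exhaustive template search: (row, col) with the minimum sum of absolute differences."""
    th, tw = len(template), len(template[0])
    h, w = len(image), len(image[0])
    best = None
    for y in range(h - th + 1):
        for x in range(w - tw + 1):
            sad = sum(abs(image[y + j][x + i] - template[j][i])
                      for j in range(th) for i in range(tw))
            if best is None or sad < best[0]:
                best = (sad, y, x)
    return best[1], best[2]


def shift(image, dy, dx, fill=0):
    """Translate an image by (dy, dx) pixels; uncovered pixels take the fill value."""
    h, w = len(image), len(image[0])
    return [[image[y - dy][x - dx] if 0 <= y - dy < h and 0 <= x - dx < w else fill
             for x in range(w)]
            for y in range(h)]


def find_defects(aligned, golden, mask, tolerance):
    """Masked subtraction: list pixels inside the mask that differ by more than tolerance."""
    return [(y, x)
            for y, row in enumerate(aligned)
            for x, p in enumerate(row)
            if mask[y][x] and abs(p - golden[y][x]) > tolerance]


# Golden image: a 2 x 2 fiducial mark of value 9 with its top-left corner at (1, 1).
golden = [[0] * 6 for _ in range(6)]
for y, x in ((1, 1), (1, 2), (2, 1), (2, 2)):
    golden[y][x] = 9
fiducial = [[9, 9], [9, 9]]

# Acquired image: the same scene drifted one pixel down and right, plus a defect.
acquired = shift(golden, 1, 1)
acquired[4][5] = 7

fy, fx = find_fiducial(acquired, fiducial)  # (2, 2)
aligned = shift(acquired, 1 - fy, 1 - fx)   # undo the drift
mask = [[1] * 6 for _ in range(6)]          # inspect every pixel
print(find_defects(aligned, golden, mask, tolerance=3))  # [(3, 4)]
```

Without the alignment step, every pixel of the drifted fiducial would be flagged as a defect; after it, only the genuine difference remains. The mask lets regions that are expected to vary be excluded from the comparison.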

“The advantage of these coprocessors,” says Alacron’s Sgro, “is that they are designed to perform multiple operations with minimum CPU intervention. As a result, these boards can outperform the PC for imaging and machine-vision applications.”

Company Info

Alacron

Nashua, NH, USA

www.alacron.com

Dalsa Coreco

St.-Laurent, QC, Canada

www.imaging.com

MathStar

Minneapolis, MN, USA

www.mathstar.com

Matrox Imaging

Dorval, QC, Canada

www.matrox.com

Philips

Eindhoven, The Netherlands

www.apptech.philips.com/industrialvision

RVSI Acuity CiMatrix

Nashua, NH, USA

www.rvsi.com

Stretch

Mountain View, CA, USA

www.stretchinc.com