GIGABIT ETHERNET OFFERS BLAZING SPEED

By John Vieth, Director of Research and Development, Digital Cinema Unit, Dalsa Corp., Waterloo, ON, Canada; www.dalsa.com

In the context of machine-vision applications, a digital camera converts spatial light patterns into a real-time stream of bits. The camera is often a peripheral of the overall vision system and, therefore, requires a medium to transfer the bits to another device for processing. The camera interface connects a camera to an image processor over some distance using a cable to exchange image data and control signals. It comprises two primary elements—a detachable physical interconnection and a signaling protocol. Some implementations of the camera interface may allow multiple devices to share a common physical medium, although this provides tangible benefits in only a few applications.

Several methods are available to implement the camera interface. The primary design considerations are

- Speed and latency of data transfer to support the throughput and timing requirements of the intended application.

- Cost of implementation with affordable components to be competitive with alternative interfaces supporting similar performance.

- Compatibility and interoperability with equipment from a variety of suppliers interconnected and operated in a variety of combinations.

- Availability of components on demand from multiple sources to implement the interface.

- Ease of installation and maintenance calling for a minimum of training and special equipment to attach, configure, and debug the connections.

- Fault tolerance and noise immunity sufficient to sustain operating requirements in the environment of the intended application.

- Reliability in the operating environment of the intended application with few, if any, failures due to the interface.

These considerations favor a mature technology based on established computer-industry standards that are widely supported by multiple equipment suppliers. One such mature technology is Gigabit Ethernet, the IEEE 802.3ab standard 1000Base-T interface. It is a higher-bandwidth version of the ubiquitous local-area-network medium for computers. It uses five-level pulse amplitude modulation (PAM5) signal encoding. It supports 1000 million bits/s over four unshielded twisted pairs of Category-5 wiring up to 100 meters between transceivers. It uses 8-pin (RJ-45) connectors.
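As a rough illustration of how the 1000 million bits/s figure arises from four pairs and PAM5 signaling, the sketch below multiplies out the per-pair numbers. The symbol rate (125 million symbols/s per pair) and the two data bits carried per PAM5 symbol are taken from the 1000Base-T specification rather than from this article, and the variable names are illustrative only.

```python
# Back-of-the-envelope line rate for 1000Base-T.
# Assumptions (from the 1000Base-T specification, not stated in this article):
#   - each of the four pairs signals at 125 million symbols/s
#   - each PAM5 symbol carries 2 data bits (the fifth level adds coding redundancy)
PAIRS = 4
SYMBOLS_PER_S_PER_PAIR = 125_000_000
DATA_BITS_PER_SYMBOL = 2

line_rate_bits_per_s = PAIRS * SYMBOLS_PER_S_PER_PAIR * DATA_BITS_PER_SYMBOL
line_rate_mbytes_per_s = line_rate_bits_per_s / 8 / 1e6

print(f"{line_rate_bits_per_s / 1e6:.0f} Mbits/s = {line_rate_mbytes_per_s:.0f} Mbytes/s")
```

This is the raw signaling rate; the payload actually available to a camera is somewhat lower once framing and protocol overhead are accounted for.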

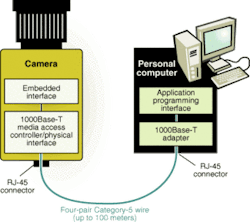

Consider a typical application in which a camera is connected to a PC (see figure). Application software controls the camera and acquires image data via the application programming interface in the PC. Many standard PC configurations include the 1000Base-T interface hardware.

Currently, many machine-vision applications require high-performance digital cameras that can provide output data rates from 40 to 100 Mbytes/s. For example, a 1-Mpixel camera that captures 75 frames/s at 10 bits/pixel must transfer about 94 Mbytes/s. Easily meeting this challenge, Gigabit Ethernet can carry 80 million 10-bit pixels/s (100 Mbytes/s) of camera output data over up to 100 meters of cable. Its cable and connectors are readily available components that are used widely in computer-network installations. Containing eight conductors, the cables are flexible, and the connectors are small.
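The data-rate figures above follow from simple arithmetic, which can be sketched as below. The function name and the comparison against the raw 1000 million bits/s line rate are illustrative assumptions; protocol overhead is deliberately not modeled.

```python
def camera_rate_mbytes_per_s(pixels_per_s: float, bits_per_pixel: int) -> float:
    """Return the raw camera output rate in Mbytes/s (1 Mbyte = 1e6 bytes)."""
    return pixels_per_s * bits_per_pixel / 8 / 1e6

# Raw Gigabit Ethernet line rate: 1000 million bits/s, before any protocol overhead.
LINK_MBYTES_PER_S = 1000e6 / 8 / 1e6

# 1-Mpixel camera at 75 frames/s and 10 bits/pixel (the example in the text).
example_rate = camera_rate_mbytes_per_s(1_000_000 * 75, 10)

# 80 million 10-bit pixels/s (the carrying capacity quoted in the text).
quoted_rate = camera_rate_mbytes_per_s(80_000_000, 10)

for label, rate in [("1 Mpixel @ 75 frames/s", example_rate),
                    ("80 Mpixels/s", quoted_rate)]:
    verdict = "fits" if rate <= LINK_MBYTES_PER_S else "exceeds"
    print(f"{label}: {rate:.2f} Mbytes/s ({verdict} the raw link rate)")
```

Both cases come in under the raw link rate of 125 Mbytes/s, which leaves some headroom for packet headers and control traffic.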

Ethernet signaling protocols provide more-than-adequate noise immunity and reliability, as proven over decades of use in a variety of environments. Because the Gigabit Ethernet interface is standard equipment on PCs, the electronic components required to implement it in digital cameras are readily available at favorable prices.

Competitive analysis

From this analysis it appears that Gigabit Ethernet satisfies the primary requirements of an interface for applications using high-performance digital cameras. But how does it compare to alternative interfaces such as IEEE 1394b (FireWire), USB 2.0, or Camera Link? Like USB and FireWire, it requires only standard PC hardware. In operation, Gigabit Ethernet supports faster data transfer rates over copper wires than do FireWire and USB, and over much longer distances. In addition, it offers simpler and less expensive cabling that can be prefabricated or readily assembled in the field. In sum, Gigabit Ethernet offers the lowest potential cost of implementation.

However, standard Gigabit Ethernet provides only the physical and media access layers of the camera interface. With the cooperation of camera manufacturers throughout the Automated Imaging Association (AIA; Ann Arbor, MI; www.machinevisiononline.org), an industry standard interface for high-performance digital cameras over Gigabit Ethernet can achieve the same level of plug-and-play interoperability that USB and FireWire provide for PC peripherals today. But unlike the de facto USB and FireWire implementations, the Gigabit Ethernet camera interface will sustain the full gigabit bandwidth of data transfer with little sacrifice of image processing speed in the PC.

An examination of the camera interfaces shows that even the best of them cannot effectively support the full range of machine-vision applications. The AIA standard Camera Link interface remains the best platform for camera applications demanding higher data rates or lower latency than Gigabit Ethernet can support. Although some applications using the Camera Link interface may eventually migrate to the Gigabit Ethernet interface, Camera Link will retain a substantial installed base and will support new applications demanding high performance.