An Expert System Uses Multispectral Inspection to Assess Meat


By F. Francini, G. Longobardi, V. Guarnieri, and S. Livi

Manufactured objects are often inspected by comparing captured images with reference images or data to reach a pass/fail decision. When inspecting natural products such as meat carcasses, however, quality is more subjective, so expert knowledge must be built into the system. Because this knowledge concerns both the internal and external characteristics of the product, the vision system needs multispectral sensors. Combining visible, x-ray, and infrared (IR) sensors allows a complete profile of each piece of meat to be built and then analyzed by an expert system.

Multispectral vision

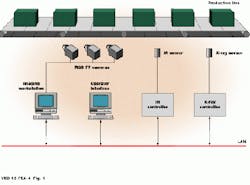

At the Istituto Nazionale di Ottica (Florence, Italy), researchers have developed a system that inspects meat carcasses using a combination of visible, x-ray, and IR detectors (see Fig. 1). Images of each hanging meat sample are taken from three viewpoints spaced 120° apart, giving a complete 360° view of the carcass. To detect defects that are not visible under ordinary light, IR detectors operating in the 3-to-5-µm band reveal subcutaneous blisters. For internal inspection, x-ray sources and detectors are used.

To extract features from the carcass images, the images are digitized into a VME-based workstation built around a TSVME 959 Sun-compatible SPARC board from Themis Computer (Fremont, CA). RGB, x-ray, and IR images are transferred to this imaging workstation over an Ethernet local-area network. Using Khoros image-processing software from Khoral Research (Albuquerque, NM), the system subtracts the background from each sample image, segments the sample into subparts, and determines meat features and defects using a custom-built VME board carrying multiple C40 digital signal processors (DSPs).
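The background-subtraction step can be illustrated with a minimal Python sketch. It assumes grayscale images stored as nested lists, a background frame captured with no sample present, and a hypothetical threshold level; the article does not say how Khoros or the DSP board implement these steps, and the bounding box below is only a crude stand-in for segmenting the sample into subparts.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]


def subtract_background(image: Image, background: Image) -> Image:
    """Absolute per-pixel difference between a sample image and an empty-scene background."""
    return [[abs(p - b) for p, b in zip(row, brow)]
            for row, brow in zip(image, background)]


def threshold(image: Image, level: int) -> Image:
    """Binary mask: 1 where the pixel value exceeds `level`, otherwise 0."""
    return [[1 if p > level else 0 for p in row] for row in image]


def bounding_box(mask: Image) -> Optional[Tuple[int, int, int, int]]:
    """(top, left, bottom, right) of the foreground pixels, or None if the mask is empty.

    A crude stand-in for segmentation: it only locates the sample in the frame.
    """
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    if not rows:
        return None
    return rows[0], cols[0], rows[-1], cols[-1]


# Example: a 3 x 3 frame with a bright sample in the lower right.
background = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
sample = [[10, 10, 10], [10, 200, 210], [10, 190, 10]]
mask = threshold(subtract_background(sample, background), level=50)  # hypothetical level
print(mask)                # [[0, 0, 0], [0, 1, 1], [0, 1, 0]]
print(bounding_box(mask))  # (1, 1, 2, 2)
```

Taking the absolute difference makes the sketch indifferent to whether the sample is brighter or darker than the background, which is a convenient assumption when the same code is reused across RGB, IR, and x-ray channels.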

Isolating chicken bones

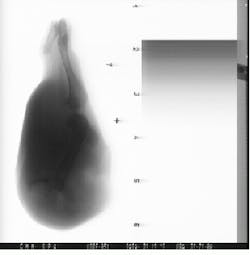

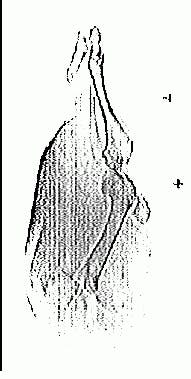

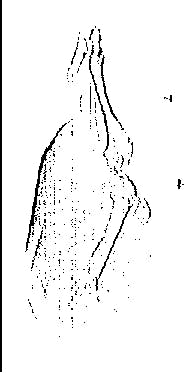

To isolate bone from the surrounding image, bone edges are first highlighted with a 7 × 1 top-hat convolution kernel (see Fig. 2). The result is then filtered to reduce noise. A further convolution with an 11 × 3 matrix enlarges the contours in the vertical direction. This result is then ANDed with a thresholded version of the original image to eliminate any spurious edges outside the leg's boundaries.
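A minimal Python sketch of this edge-isolation chain follows. The kernel weights are assumptions, since the article gives only the kernel sizes: the 7 × 1 top-hat is taken as one row of seven taps with a raised three-tap center and a negative brim summing to zero, and the 11 × 3 matrix as a box of ones 11 rows tall and 3 columns wide. The noise filter is replaced by a simple threshold on the top-hat response, and both threshold levels are hypothetical.

```python
from typing import List

Image = List[List[int]]

# Assumed weights: raised center, negative brim, zero sum so flat regions give 0.
TOPHAT_7X1 = [[-3, -3, 4, 4, 4, -3, -3]]
# Assumed 11-row x 3-column box of ones; its height spreads contours vertically.
BOX_11X3 = [[1, 1, 1] for _ in range(11)]


def convolve2d(image: Image, kernel: Image) -> Image:
    """Zero-padded, same-size 2-D filtering.

    This computes correlation (no kernel flip); for the symmetric kernels
    used here, that is identical to convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    yy, xx = y + i - oy, x + j - ox
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[i][j] * image[yy][xx]
            out[y][x] = acc
    return out


def binarize(image: Image, level: int) -> Image:
    """1 where the value exceeds `level`, otherwise 0."""
    return [[1 if v > level else 0 for v in row] for row in image]


def bone_edge_mask(image: Image, edge_level: int, tissue_level: int) -> Image:
    # Highlight narrow bright ridges with the top-hat kernel, then keep only
    # strong responses (a stand-in for the article's noise filter).
    edges = binarize(convolve2d(image, TOPHAT_7X1), edge_level)
    # Enlarge the surviving contours, mostly in the vertical direction.
    grown = binarize(convolve2d(edges, BOX_11X3), 0)
    # AND with a threshold of the original image to drop anything outside the leg.
    leg = binarize(image, tissue_level)
    return [[g & t for g, t in zip(grow_row, leg_row)]
            for grow_row, leg_row in zip(grown, leg)]


# Example: tissue (100) with a bright 3-pixel vertical stripe (200); row 0 lies outside the leg.
frame = [[0] * 15] + [[200 if 6 <= x <= 8 else 100 for x in range(15)] for _ in range(14)]
mask = bone_edge_mask(frame, edge_level=800, tissue_level=50)
```

In this example only the stripe's center column passes the top-hat threshold; the box convolution widens it back to columns 6 to 8 and also spreads it into row 0, where the AND with the tissue threshold removes it again.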

To thicken the contours and extract the region containing bone, a morphological operation called binary region growing is applied. This iterative process, similar to morphological dilation, separates the connected regions with the largest area (which contain the bones) from spurious elements such as dilated impulse noise and contour fragments not removed by the AND operation.
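The region-selection step can be sketched in Python as follows. Using plain 4-connected flood fill (breadth-first search) in place of the authors' binary region growing is an assumption: growing a region from a seed pixel until it stops changing is equivalent to repeating a dilation constrained to the foreground mask. The `keep` parameter, the number of largest regions retained, is hypothetical.

```python
from collections import deque
from typing import List, Tuple

Image = List[List[int]]


def grow_region(mask: Image, seed: Tuple[int, int], visited: List[List[bool]]) -> List[Tuple[int, int]]:
    """Collect all 4-connected foreground pixels reachable from `seed`."""
    h, w = len(mask), len(mask[0])
    region = []
    queue = deque([seed])
    visited[seed[0]][seed[1]] = True
    while queue:
        y, x = queue.popleft()
        region.append((y, x))
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not visited[ny][nx]:
                visited[ny][nx] = True
                queue.append((ny, nx))
    return region


def keep_largest_regions(mask: Image, keep: int = 1) -> Image:
    """Return a mask containing only the `keep` connected regions with the largest area."""
    h, w = len(mask), len(mask[0])
    visited = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not visited[y][x]:
                regions.append(grow_region(mask, (y, x), visited))
    regions.sort(key=len, reverse=True)
    out = [[0] * w for _ in range(h)]
    for region in regions[:keep]:
        for y, x in region:
            out[y][x] = 1
    return out


# Example: one 5-pixel region plus two isolated noise pixels.
mask = [[1, 1, 0, 0, 1],
        [1, 1, 0, 0, 0],
        [1, 0, 0, 1, 0],
        [0, 0, 0, 0, 0]]
print(keep_largest_regions(mask))
# [[1, 1, 0, 0, 0], [1, 1, 0, 0, 0], [1, 0, 0, 0, 0], [0, 0, 0, 0, 0]]
```

Ranking regions by pixel count mirrors the article's criterion of keeping the regions with the largest area; isolated noise pixels form tiny regions and fall away.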

Dilation and morphological closing are then applied to smooth the subregion, eliminating indentations and protrusions, and the contours of interest are extracted. The final image is the binary arithmetic sum of the initial subimage and the extracted contours of the region of interest.
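The smoothing and overlay steps can be sketched with elementary binary morphology in Python. Assumptions: a square (2r + 1) × (2r + 1) structuring element, pixels outside the frame ignored, contours taken as the region minus its one-pixel erosion, and the "binary arithmetic addition" read as adding the contour, scaled to full brightness, to an 8-bit grayscale subimage with saturation at 255.

```python
from typing import List

Image = List[List[int]]


def _window(mask: Image, y: int, x: int, r: int) -> List[int]:
    """Pixels in the (2r+1) x (2r+1) square around (y, x), clipped to the frame."""
    h, w = len(mask), len(mask[0])
    return [mask[yy][xx]
            for yy in range(max(0, y - r), min(h, y + r + 1))
            for xx in range(max(0, x - r), min(w, x + r + 1))]


def dilate(mask: Image, r: int = 1) -> Image:
    return [[1 if any(_window(mask, y, x, r)) else 0 for x in range(len(mask[0]))]
            for y in range(len(mask))]


def erode(mask: Image, r: int = 1) -> Image:
    return [[1 if all(_window(mask, y, x, r)) else 0 for x in range(len(mask[0]))]
            for y in range(len(mask))]


def close(mask: Image, r: int = 1) -> Image:
    """Morphological closing: dilation then erosion fills small holes and indentations."""
    return erode(dilate(mask, r), r)


def smooth(mask: Image, r: int = 1) -> Image:
    """Dilation followed by closing, as described in the text."""
    return close(dilate(mask, r), r)


def contour(mask: Image) -> Image:
    """Boundary pixels: foreground pixels that do not survive a one-pixel erosion."""
    inner = erode(mask)
    return [[m & (1 - i) for m, i in zip(m_row, i_row)]
            for m_row, i_row in zip(mask, inner)]


def overlay(image: Image, edges: Image, value: int = 255) -> Image:
    """Saturating addition of a binary contour (scaled to `value`) onto an 8-bit image."""
    return [[min(255, p + value * e) for p, e in zip(p_row, e_row)]
            for p_row, e_row in zip(image, edges)]


# Example: closing fills a one-pixel hole in an otherwise solid region.
holed = [[1] * 5 for _ in range(5)]
holed[2][2] = 0
print(close(holed) == [[1] * 5 for _ in range(5)])  # True
```

Treating out-of-frame pixels as absent makes dilation behave as if padded with 0 and erosion as if padded with 1, so closing never shrinks a region that touches the image border.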

Expert systems

To grade the inspected meat, an expert system evaluates the extracted image features. Knowledge from slaughterhouse experts was coded as rules, which can be adjusted until the computer's grades agree with those of human graders. A dedicated inference engine then evaluates these rules.
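An inference engine of this kind can be as small as a forward-chaining loop. The Python sketch below is illustrative only: the feature names (`blister_count`, `bruise_area_pct`, `bone_fracture`), the thresholds, and the grades are hypothetical and are not the slaughterhouse rules used by the authors.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Facts = Dict[str, object]


@dataclass
class Rule:
    name: str
    condition: Callable[[Facts], bool]
    conclusion: Facts


def infer(initial: Facts, rules: List[Rule]) -> Tuple[Facts, List[str]]:
    """Forward chaining: repeatedly fire rules whose conditions hold, adding their
    conclusions to the fact base, until a full pass fires nothing new.
    Each rule fires at most once; rules are checked in list order."""
    facts = dict(initial)
    fired: List[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.name not in fired and rule.condition(facts):
                facts.update(rule.conclusion)
                fired.append(rule.name)
                changed = True
    return facts, fired


# Hypothetical rules: image features -> defect facts -> grade.
# Grade rules come last so every defect fact exists before a grade is assigned.
RULES = [
    Rule("subcutaneous_blister", lambda f: f.get("blister_count", 0) >= 1,
         {"surface_defect": True}),
    Rule("large_bruise", lambda f: f.get("bruise_area_pct", 0.0) > 5.0,
         {"surface_defect": True}),
    Rule("broken_bone", lambda f: bool(f.get("bone_fracture", False)),
         {"structural_defect": True}),
    Rule("grade_reject", lambda f: "grade" not in f and bool(f.get("structural_defect", False)),
         {"grade": "reject"}),
    Rule("grade_B", lambda f: "grade" not in f and bool(f.get("surface_defect", False))
         and not f.get("structural_defect", False), {"grade": "B"}),
    Rule("grade_A", lambda f: "grade" not in f and not f.get("surface_defect", False)
         and not f.get("structural_defect", False), {"grade": "A"}),
]

facts, fired = infer({"blister_count": 2, "bruise_area_pct": 1.0, "bone_fracture": False}, RULES)
print(facts["grade"], fired)  # B ['subcutaneous_blister', 'grade_B']
```

Keeping the rules as data rather than hard-coded branches is what makes the tuning described above practical: adjusting a threshold such as the bruise-area limit changes the grading without touching the engine.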

Using freely available software coupled with off-the-shelf and custom-built VME hardware, the system can grade 100% of chicken and pig legs. Because RGB, IR, and x-ray analysis are combined in a single multispectral process, subsurface features and defects can also be graded in real time.

FIGURE 1. This VME-based multispectral imaging system for inspecting poultry products captures x-ray, IR, and visible images and fuses the data in an imaging workstation linked over Ethernet.

FIGURE 2. Finding bone edges in chicken carcasses is a seven-step process. After an image is digitized (a), edges are found (b) and the resulting image is filtered (c); a further convolution (d) enlarges the contours and reduces noise. The result is ANDed with a threshold of the original image (e), and a morphological operator is used to find the bone regions (f). Dilation and morphological closing then define the edge of the leg bone (g), which is superimposed on the original image (h).

F. Francini and G. Longobardi are with the Istituto Nazionale di Ottica (Florence, Italy). V. Guarnieri is with the Centro di Eccellenza Optronica (Florence, Italy). S. Livi is with Officine Galileo SpA (Campi Bisenzio, Italy).