INFRARED IMAGING: 'Super-framing' increases the dynamic range of thermal imagers

Although the human visual system (HVS) can perceive objects at very low and very high levels of illumination, discerning objects at both illumination extremes simultaneously is impossible. However, in many industrial vision, military, and security applications, the ability to image objects in scenes where illumination levels may range from very bright to very dark is of great importance.

Luckily, developers of CCD and CMOS image sensors and the cameras based around them have implemented a number of temporal and spatial techniques to accomplish this task (see "Dynamic Design," Vision Systems Design, October 2009). In each case, the dynamic range of the image sensor or camera system must be dramatically increased so that object details remain visible in both the brightest and darkest regions of the image.

Perhaps the most commonly used method to increase this dynamic range is a temporal approach, in which multiple exposures of a scene are captured with different integration times. Shorter integration times capture the bright regions of the scene without saturating, while longer integration times capture the dark regions. Although this method maintains the signal-to-noise ratio (SNR) over the extended dynamic range and allows the image sensor to exhibit a linear response, multiple images must first be captured and then combined to form a high-dynamic-range image.

In the past, such techniques have been limited to visible imagers and camera systems. Now, Austin Richards, PhD, and his colleagues at FLIR Systems (Wilsonville, OR, USA; www.flir.com) have applied this technique to the company's SC6000 and SC8000 series of InSb-based infrared (IR) cameras. The technique, often called "super-framing," extends the effective scene brightness range of a thermal image while maintaining its thermal contrast.

"Although thermal cameras can produce high-contrast images showing small temperature differences, they can only do so within a defined temperature range," says Richards. "By setting an IR camera to a typical temperature range of -20°C to +50°C, for example, all the objects with a temperature beyond this range are saturated while those below will generally be very noisy.

"So, if the object temperature of interest is +100°C, a range of +20°C to +120°C must be selected. In this case, the camera will present a good image of the +100°C object, but the contrast of the room-temperature objects in the image will not be as good as when displayed in a -20°C to +50°C range," Richards explains.

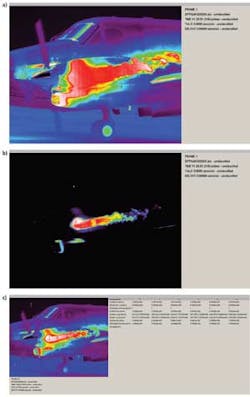

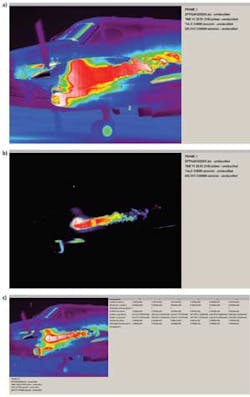

When extreme temperatures appear within a scene, the hottest parts of the image will be saturated and the coldest parts will appear black or noisy, resulting in a loss of image detail and invalid temperature measurements. This is particularly problematic in R&D applications that capture high-speed digital video of scenes with large temperature differences, such as engine monitoring, rocket launches, or explosions.

Super-framing addresses this problem. The camera captures a set of typically four images (sub-frames) of the scene at progressively shorter exposure times in very rapid succession, then repeats the cycle. The sub-frames from each cycle are merged into a single "super-frame" that combines the best features of the four differently exposed sub-frames. In this way, the super-frame image is both high in contrast and wide in temperature range (see figure).

To accomplish this, post-processing software determines whether each pixel in the first sub-frame is saturated. If it is not, that pixel is used. If it is, the corresponding pixel from the next sub-frame is examined: if that pixel is satisfactory, it is selected; if not, the corresponding pixel in the following sub-frame is examined, and so on. After the super-frame is computed, all pixel values are converted into temperature or radiance units.
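
The per-pixel selection rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not FLIR's implementation: the function name `merge_superframe`, the `saturation_level` threshold, and the sample counts are hypothetical, and "satisfactory" is taken to mean simply "below the saturation threshold." Sub-frames are assumed to be ordered from longest to shortest exposure, and a pixel that is saturated in every sub-frame falls back to the shortest exposure, a case the description above does not address. Three sub-frames are used instead of four to keep the example short.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]  # one sub-frame: rows of raw detector counts


def merge_superframe(
    subframes: Sequence[Frame], saturation_level: int
) -> Tuple[Frame, Frame]:
    """Merge sub-frames, ordered longest to shortest exposure, pixel by pixel.

    Returns the merged counts and, for each pixel, the index of the
    sub-frame the value was taken from.
    """
    rows, cols = len(subframes[0]), len(subframes[0][0])
    merged = [[0] * cols for _ in range(rows)]
    source = [[0] * cols for _ in range(rows)]
    last = len(subframes) - 1

    for r in range(rows):
        for c in range(cols):
            for k, frame in enumerate(subframes):
                # Accept the first unsaturated value; if every sub-frame is
                # saturated, fall back to the shortest exposure (assumption).
                if frame[r][c] < saturation_level or k == last:
                    merged[r][c] = frame[r][c]
                    source[r][c] = k
                    break
    return merged, source


# Hypothetical 2x2 scene, three sub-frames, 14-bit counts (maximum 16383).
subframes = [
    [[16383, 5000], [16383, 16383]],  # longest exposure
    [[9000, 1200], [16383, 16383]],   # medium exposure
    [[2200, 300], [7000, 16383]],     # shortest exposure
]
merged, source = merge_superframe(subframes, saturation_level=16000)
print(merged)  # [[9000, 5000], [7000, 16383]]
print(source)  # [[1, 0], [2, 2]]
```

The sketch also returns a map of which sub-frame supplied each pixel. That map is an addition made here on the assumption that the later conversion to temperature or radiance units needs to know the exposure time behind each raw value.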