Image sensors expand machine vision applications

Just as CMOS sensors have replaced CCD devices, the emergence of newer, niche-based imagers is expanding the functionality of machine vision applications.

Andrew Wilson, European Editor

According to Yole Développement (Lyon, France; www.yole.fr), the machine vision camera market will grow from $2 billion in 2017 to approximately $4 billion in 2023, a compound annual growth rate (CAGR) of 12%. In its technology and market report entitled Machine Vision for Industry & Automation, Dr. Alexis Debray of Yole says that the need for quality control has boosted the use of machine vision in the automotive, electronics, semiconductor, food and packaging industries.
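A quick check of Yole's figures: growing from $2 billion to $4 billion over the six years from 2017 to 2023 is a doubling, which corresponds to a CAGR of (4/2)^(1/6) - 1, or about 12.2%, consistent with the reported 12%. The short Python sketch below does this arithmetic; the function name `cagr` is our own illustration, not something taken from the report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Yole's figures: $2 billion in 2017 to roughly $4 billion in 2023 (six years).
rate = cagr(2.0, 4.0, 2023 - 2017)
print(f"Implied CAGR: {rate:.1%}")
```

Because the 2023 figure is approximate, the result is best read as a consistency check rather than a more precise growth rate.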

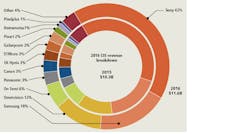

Figure 1: Of the major CMOS Image Sensor (CIS) companies, the leader Sony has a market share of 42% (courtesy of Yole Développement).

Now, machine vision systems can be found in agricultural applications, on roadways for license plate recognition, and, more recently, in autonomous cars. “This has created a highly dynamic market,” comments Pierre Cambou at Yole, “in which, from 2014 to 2017, merger and acquisition activity has accelerated for both image sensor and camera companies.”

Examples include Teledyne Technologies (Thousand Oaks, CA, USA; www.teledyne.com) acquiring e2v in 2017, FLIR (Wilsonville, OR, USA; www.flir.com) acquiring Point Grey in 2016, the acquisition of CMOSIS by ams (Premstaetten, Austria; www.ams.com) in 2015 and the consolidation of Aptina and Truesense by ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com) in 2014. This has left only a few major CMOS Image Sensor (CIS) companies, with the leader, Sony (Tokyo, Japan; www.sony-semicon.co.jp), holding a market share of 42%, according to Yole (Figure 1).

Sony’s market dominance is reflected in the number of camera companies that use its devices. “Sony’s Pregius is the most promising line of CMOS imagers,” says Petko Dinev, CEO and President of Imperx (Boca Raton, FL, USA; www.imperx.com). Indeed, his company has developed several cameras based on 3.2 to 12.0 MPixel devices, with interfaces that include GigE Vision, Camera Link and SDI, and intends to add 16 and 20 MPixel versions as well as support for 10GigE and CoaXPress interfaces.

“Our customers are leaning towards Sony imagers mostly due to their smaller size (2/3-in. for a 5 MPixel imager, for example) and higher signal-to-noise ratio,” adds Doug Erlemann, Business Development Manager at Baumer (Southington, CT, USA; www.baumer.com).

Sony’s imagers are also used by numerous other companies, such as Basler (Ahrensburg, Germany; www.baslerweb.com), which uses second-generation Pregius devices in its ace camera models. Useful videos and comparison charts on these and emerging third-generation devices can be found on the website of FLIR Integrated Imaging Solutions (Richmond, BC, Canada; www.ptgrey.com) at http://bit.ly/VSD-FLIRMV.

For companies developing cameras at the high end of the market, only a few commercial sensor companies can fulfill their needs. These include ams, with devices such as the 47.5 MPixel CMV50000 CMOS imager; ON Semiconductor, with its 16 MPixel and 25 MPixel Python devices; and Canon (Tokyo, Japan; www.canon.com), with its 120 MPixel 120MXS CMOS sensor.

Figure 2: Panasonic’s 8k, 60 fps CMOS image sensor is based on an organic photoconductive film (OPF). Changing the voltage applied to the OPF changes the sensitivity of the device, allowing both high-sensitivity and high-saturation modes. In high-saturation mode, it is possible to capture fine structures such as the windings of lamp filaments, which cannot be resolved when the sensor is used in high-sensitivity mode.

“Canon has instituted a program, where every customer wanting the 120 MPixel rolling shutter-based sensor must sign a non-disclosure agreement,” says Rusty Ponce de Leon, President of Phase 1 Technologies (Deer Park, NY, USA; www.phase1vision.com), Canon’s distributor in the United States.

Imperx has evaluated the 120 MPixel device, but Petko Dinev says that its small 2.2 µm pixels give it a relatively small dynamic range and that using the imager limits camera developers to Canon lenses.
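Dinev's point about dynamic range follows from a common rule of thumb: a sensor's dynamic range is often quoted as 20·log10(full-well capacity / read noise), and full-well capacity tends to shrink with pixel area. The sketch below compares a 2.2 µm pixel with a larger, hypothetical 5.5 µm pixel under deliberately simple assumptions (full-well capacity proportional to area at a made-up 1,000 e-/µm², and the same 2 e- read noise for both). The numbers are illustrative only, not measured data for the Canon device or any other sensor.

```python
import math


def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range in dB: 20 * log10(full-well capacity / read noise)."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


# Hypothetical assumptions, not vendor data: full-well capacity scales with
# pixel area at 1,000 electrons per square micrometre, and read noise is 2 e-.
ELECTRONS_PER_UM2 = 1000.0
READ_NOISE_E = 2.0

for pitch_um in (2.2, 5.5):
    full_well = ELECTRONS_PER_UM2 * pitch_um ** 2
    print(f"{pitch_um} um pixel: full well ~{full_well:,.0f} e-, "
          f"DR ~{dynamic_range_db(full_well, READ_NOISE_E):.1f} dB")
```

Under these assumptions, a 2.5x larger pitch gives 6.25x the full-well capacity, or roughly 16 dB more dynamic range; real sensors also differ in read noise and pixel design.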

Stacked sensors

Driven by the demand to reduce the size of imagers for mobile phones, stacked CMOS image sensors first came to the fore in 2012, when Sony announced the world’s first such device for consumer electronics, according to Ray Fontaine of Chipworks (Ottawa, ON, Canada; www.chipworks.com) in “The State of the Art of Mainstream CMOS Image Sensors” (http://bit.ly/VSD-MCIS).

Today, stacked image sensors are being produced by companies such as Canon, OmniVision Technologies (Santa Clara, CA, USA; www.ovt.com), ON Semiconductor, Panasonic (Osaka, Japan; https://industrial.panasonic.com), Samsung Electronics (Suwon, South Korea; www.samsung.com), Sony, and STMicroelectronics (Geneva, Switzerland; www.st.com).

In these stacked CIS designs, image sensing and digital processing are performed on separate ICs connected by through-silicon vias (TSVs). One example is OmniVision’s PureCel-S OV13860, a 13 MPixel backside-illuminated image sensor that separates the imaging array from the image processing pipeline in a stacked die structure. This allows additional functionality to be implemented on the sensor while keeping the die smaller than that of a comparable non-stacked sensor. The OV13860’s features include a full-resolution frame rate of 30 fps, a 1.3 µm pixel size and 720p image capture at 120 fps.

Building on two-layer stacked sensors, which use separate silicon layers for image capture and image processing, companies such as Sony and Samsung have now developed three-layer devices that place memory between the capture and processing layers to enable high-speed image capture.

At last December’s International Electron Devices Meeting (IEDM), for example, Sony presented a three-layer, stacked, thinned, backside-illuminated 19.3 MPixel imager with 1 Gbit of DRAM and readout electronics that can run at 120 fps (see “IEDM 2017: Sony’s 3-layer stacked CMOS image sensor technology,” http://bit.ly/VSD-IEDM).

Samsung has also introduced a three-layer stacked device, the ISOCELL Fast 2L3, which combines a 12 MPixel sensor with 2 Gbit of integrated DRAM and readout electronics. Because the device can temporarily store a larger number of high-speed images in its DRAM, it can record images at 960 fps.
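The value of the stacked DRAM is that frames can be read off the pixel array faster than they can be sent over the sensor's output interface: a burst is captured into memory at full speed and streamed out afterwards. How long such a burst can last is simple arithmetic, sketched below in Python. The 1280 × 720 readout and 10-bit depth are hypothetical values chosen for illustration, not Samsung's specifications, and 2 Gbit is taken as 2 × 2^30 bits.

```python
def frames_in_buffer(buffer_bits: int, width: int, height: int,
                     bits_per_pixel: int) -> int:
    """Number of whole raw frames that fit in an on-sensor frame buffer."""
    frame_bits = width * height * bits_per_pixel
    return buffer_bits // frame_bits


# Assumptions for illustration only: 2 Gbit = 2 * 2**30 bits, and a
# hypothetical 1280 x 720 readout at 10 bits per pixel during high-speed capture.
BUFFER_BITS = 2 * 2**30
FPS = 960

n = frames_in_buffer(BUFFER_BITS, 1280, 720, 10)
print(f"{n} frames buffered, about {n / FPS:.2f} s of capture at {FPS} fps")
```

Under these assumptions, the buffer holds 233 frames, roughly a quarter of a second at 960 fps, which illustrates why buffered high-speed capture is limited to short bursts.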

Just as sensor vendors are reducing the form factor of their devices to meet the needs of portable imaging applications, so too are they developing image sensors with novel architectures that broaden their applications. In February this year, for example, Panasonic described an 8k, 60 fps CMOS image sensor based on an organic photoconductive film (OPF). In this design, the OPF performs photoelectric conversion, while the circuit area performs charge storage and signal readout. By changing the voltage applied to the OPF, the sensitivity of the device can be changed, allowing both high-sensitivity and high-saturation modes to be accomplished with the same pixel structure (http://bit.ly/VSD-PAN). In high-saturation mode, for example, it is possible to capture the fine winding structures of lamp filaments, which cannot be resolved when the sensor is used in high-sensitivity mode (Figure 2).
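The benefit of a switchable-sensitivity pixel can be seen with a toy model in which each pixel responds linearly until it clips at a saturation level. In the sketch below, the scene values and both mode settings are hypothetical, in arbitrary units; they do not describe the actual behaviour of Panasonic's OPF sensor. In the high-sensitivity setting, the bright gaps between filament turns and the coil itself both clip to the same value, so the winding structure is lost; with lower sensitivity and a higher saturation level, the two remain distinct.

```python
def pixel_signal(photons: float, sensitivity: float, saturation: float) -> float:
    """Idealized pixel: linear response clipped at the saturation level."""
    return min(sensitivity * photons, saturation)


# Hypothetical scene brightness (arbitrary units) around a lamp filament.
scene = {"background": 50, "gap between turns": 4000, "filament coil": 9000}

# Hypothetical mode settings; these are not Panasonic's specifications.
modes = {
    "high sensitivity": {"sensitivity": 1.0, "saturation": 2000},
    "high saturation": {"sensitivity": 0.2, "saturation": 10000},
}

for name, params in modes.items():
    out = {region: pixel_signal(level, **params) for region, level in scene.items()}
    print(name, out)
```

The trade-off in this model is that the high-saturation setting gives the dim background a much smaller signal, which is why having both modes in one pixel structure is useful.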

Physical stress

Novel architectures are also being applied to CMOS devices for applications ranging from measuring physical stress in transparent and semi-transparent fluids and solids to waste separation and food analysis.

One recent development for stress measurement has been the introduction of CMOS imagers designed to replace the polariscopes used in existing applications.

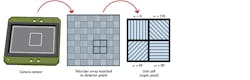

Figure 3: 4D Technology bonds a micro-polarizer to a CMOS sensor in its PolarCam camera to perform the task of stress analysis in transparent materials and fluids.

Six years ago, for example, researchers at the Fraunhofer Institute for Integrated Circuits (Erlangen, Germany; www.iis.fraunhofer.de) developed a camera dubbed Polka that used a custom 560 × 256 pixel CMOS image sensor overlaid with a 2 × 2 matrix of polarizing filters (see “Smart camera measures stress in plastics and glass,” Vision Systems Design, January 2012; http://bit.ly/VSD-SMCA).

4D Technology (Tucson, AZ, USA; www.4DTechnology.com) used a similar principle in its PolarCam camera, which bonds a micro-polarizer to the sensor (Figure 3).

In addition to 4D Technology, other camera manufacturers have used area sensors to perform the task of stress analysis in transparent materials and fluids.

Photron (San Diego, CA, USA; www.photron.com), for example, has used a 1 MPixel CMOS image sensor with a 20 µm pixel size and a polarizer affixed directly to the sensor in its Crysta 2D polarization camera. The polarizer covers groups of four square pixels, each with a different polarization axis (0°, 45°, 90° and 135°), providing polarization measurements at 7,000 fps.

Similarly, Lucid Vision Labs (Richmond, BC, Canada; www.thinklucid.com) has developed a polarized version of its Phoenix camera, which was shown for the first time at Photonics West in San Francisco this year.

The PHX050S-P camera is based on the Sony Pregius IMX250 CMOS monochrome sensor, with polarizing filters at four different angles (0°, 90°, 45° and 135°) over each group of four pixels.

Both the intensity and the polarization angle of each image pixel are then output over a GigE Vision interface.
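With 0°, 45°, 90° and 135° polarizers over each group of four pixels, intensity and polarization angle can be computed from the linear Stokes parameters of each 2 × 2 block. The Python sketch below shows this standard calculation and also returns the degree of linear polarization. The position of each filter within the block is an assumption here (layouts differ between sensors, so check the datasheet), raw values are assumed to be linear and dark-corrected, and the function names are hypothetical rather than part of any vendor SDK.

```python
import math

# Assumed 2 x 2 polarizer layout (filter angle in degrees at each position).
# This arrangement is illustrative; check the actual sensor datasheet.
LAYOUT = ((90, 45),
          (135, 0))


def superpixel_polarization(block):
    """Return (intensity S0, degree of linear polarization, angle in degrees)
    for one 2 x 2 block of raw values arranged as in LAYOUT."""
    i = {LAYOUT[r][c]: float(block[r][c]) for r in range(2) for c in range(2)}
    s0 = 0.5 * (i[0] + i[45] + i[90] + i[135])      # total intensity
    s1 = i[0] - i[90]                               # 0 deg vs 90 deg component
    s2 = i[45] - i[135]                             # 45 deg vs 135 deg component
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aolp = 0.5 * math.degrees(math.atan2(s2, s1))   # angle in -90..90 deg
    return s0, dolp, aolp


def polarization_image(raw):
    """Process a raw mosaic (list of rows, even dimensions) block by block."""
    return [[superpixel_polarization([row[c:c + 2] for row in raw[r:r + 2]])
             for c in range(0, len(raw[0]), 2)]
            for r in range(0, len(raw), 2)]


# Light fully polarized at 0 deg: I0 = 1, I45 = I135 = 0.5, I90 = 0 (Malus's law).
print(polarization_image([[0.0, 0.5], [0.5, 1.0]]))
```

Each output value here covers one 2 × 2 block, so this simple approach halves the resolution in each direction; a camera may instead interpolate to produce per-pixel values.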

Such developments have not gone unnoticed by others wishing to enter such specialized markets.

Last September, for example, Teledyne DALSA (Waterloo, ON, Canada; www.teledynedalsa.com) introduced its Piranha4 line-scan polarization camera, which uses a quad-linear CMOS image sensor with micro-polarizer filters to output independent images of the 0° (s), 90° (p) and 135° polarization states, plus an unfiltered channel.
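Even without a 45° channel, the linear Stokes parameters can be recovered from 0°, 90° and 135° channels plus an unfiltered one. An ideal linear polarizer at angle θ transmits I(θ) = ½(S0 + S1·cos 2θ + S2·sin 2θ), so I(135°) = ½(S0 - S2), and the unfiltered channel supplies S0 directly. The sketch below assumes ideal filters and an unfiltered channel whose gain matches the filtered ones; in practice, each channel would need calibration. The function names are hypothetical and not part of Teledyne DALSA's software.

```python
import math


def stokes_from_line_channels(i0, i90, i135, unfiltered):
    """Linear Stokes parameters from 0, 90 and 135 deg channels plus an
    unfiltered channel, assuming ideal polarizers and matched channel gains."""
    s0 = float(unfiltered)        # total intensity
    s1 = i0 - i90                 # I(0) - I(90) = S1
    s2 = s0 - 2.0 * i135          # from I(135) = 0.5 * (S0 - S2)
    return s0, s1, s2


def dolp_aolp(s0, s1, s2):
    """Degree of linear polarization and its angle in degrees."""
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    return dolp, 0.5 * math.degrees(math.atan2(s2, s1))


def process_line(ch0, ch90, ch135, ch_unfiltered):
    """Apply the calculation to every pixel of one line-scan line."""
    return [dolp_aolp(*stokes_from_line_channels(a, b, c, d))
            for a, b, c, d in zip(ch0, ch90, ch135, ch_unfiltered)]


# Pixel 1: light polarized at 0 deg; pixel 2: unpolarized light.
print(process_line([1.0, 0.5], [0.0, 0.5], [0.5, 0.5], [1.0, 1.0]))
```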

“Polarization brings vision technology to the next level for many industrial applications. It detects material properties such as birefringence and stress that are not detectable using conventional imaging,” says Xing-Fei He, Senior Product Manager at Teledyne DALSA.

Hyperspectral imaging

Monolithically integrating specialized filters onto imagers on a per-pixel basis can also be used to build different types of hyperspectral sensors. In his review of many of the cameras used in hyperspectral imaging, Telmo Adão of the Institute for Systems and Computer Engineering, Technology and Science (INESC-TEC; Porto, Portugal; www.utad.pt) points out that while multispectral imagery generally employs 5 to 12 spectral bands, hyperspectral imagery consists of hundreds or even thousands of bands, each with a narrower bandwidth.
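One way to arrange per-pixel filters is as a small repeating tile, so that each pixel in an n × n block sees a different band, much like a Bayer color filter extended to more wavelengths. Recovering a spectral image cube from such a raw frame is then a matter of regrouping pixels by their position in the tile, as in the Python sketch below. The tile size, band ordering and function name are hypothetical; real sensors document their own filter layouts. Note the trade-off: each band image has 1/n of the frame's resolution in each direction.

```python
def mosaic_to_cube(raw, n):
    """Split a raw frame with an n x n repeating per-pixel filter mosaic into
    n * n band images of size (rows // n) x (cols // n).

    Band b = r * n + c holds the pixels at offset (r, c) within each tile
    (an assumed ordering for illustration).
    """
    rows, cols = len(raw), len(raw[0])
    if rows % n or cols % n:
        raise ValueError("frame dimensions must be multiples of the mosaic size")
    cube = []
    for r in range(n):
        for c in range(n):
            # Take every n-th row starting at r and every n-th column starting at c.
            cube.append([row[c::n] for row in raw[r::n]])
    return cube


# Example: a 4 x 4 frame with a hypothetical 2 x 2 tile gives four 2 x 2 band images.
frame = [[(r % 2) * 2 + (c % 2) for c in range(4)] for r in range(4)]
bands = mosaic_to_cube(frame, 2)
print(len(bands), "bands of", len(bands[0]), "x", len(bands[0][0]), "pixels")
```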

Developments in hyperspectral imaging have resulted in smaller sensors that can now be integrated into unmanned aircraft systems (UASs) for both scientific and commercial purposes (see “A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry,” which examines hyperspectral imagers from fourteen different companies; http://bit.ly/VSD-HYP).

Cameras built using multispectral devices are also useful in waste sorting, food inspection, and surveillance and mapping applications. One of the first organizations to develop such devices was IMEC (Leuven, Belgium; www.imec-int.com), which now offers a number of hyperspectral image sensors based on the CMV2000 image sensor from ams.

These hyperspectral image sensors are being used by a range of camera companies in different line-scan and area-array sensor configurations and are offered with a variety of camera-to-computer interfaces to suit various applications (see “Vision landscape expands with the rise of hyperspectral imaging technology,” Vision Systems Design, December 2017, http://bit.ly/VSD-HYP2). An extensive list of these hyperspectral cameras with links to their individual specifications can be found on the IMEC website at http://bit.ly/VSD-HYP3.

While the mobile phone market will continue to drive developments in stacked CIS devices, these will also find other uses in drones, robotics and surveillance applications. At the same time, many security, automotive and medical imaging applications will demand CIS devices and cameras with greater sensitivity. In the future, also expect the use of more novel custom-filter arrays to expand the color fidelity of such devices and their use in multispectral, hyperspectral and materials testing applications.

Companies mentioned

4D Technology

Tucson, AZ, USA

www.4DTechnology.com

ams

Antwerp, Belgium

www.ams.com

Basler

Ahrensburg, Germany

www.baslerweb.com

Baumer

Southington, CT, USA

www.baumer.com

Canon

Tokyo, Japan

www.canon.com

Chipworks

Ottawa, ON, Canada

www.chipworks.com

FLIR

Wilsonville, OR, USA

www.flir.com

FLIR Integrated Imaging Solutions

Richmond, BC, Canada

www.ptgrey.com

Fraunhofer Institute for Integrated Circuits

Erlangen, Germany

www.iis.fraunhofer.de

IMEC

Leuven, Belgium

www.imec-int.com

INESC-TEC

Porto, Portugal

www.utad.pt

Imperx

Boca Raton, FL, USA

www.imperx.com

Lucid Vision Labs

Richmond, BC, Canada

www.thinklucid.com

OmniVision Technologies

Santa Clara, CA, USA

www.ovt.com

ON Semiconductor

Phoenix, AZ, USA

www.onsemi.com

Panasonic

Osaka, Japan

https://industrial.panasonic.com

Phase 1 Technologies

Deer Park, NY, USA

www.phase1vision.com

Photron

San Diego, CA, USA

www.photron.com

Sony

Tokyo, Japan

www.sony-semicon.co.jp

Samsung Electronics

Suwon, South Korea

www.samsung.com

STMicroelectronics

Geneva, Switzerland

www.st.com

Teledyne DALSA

Waterloo, ON, Canada

www.teledynedalsa.com

Teledyne Technologies

Thousand Oaks, CA, USA

www.teledyne.com

Yole Développement

Lyon, France

www.yole.fr

For more information about image sensor companies and products, visit Vision Systems Design’s Buyer’s Guide at buyersguide.vision-systems.com