Video for Voice

C.G. Masi, Contributing Editor

Many qualities of a human voice result from subtle differences in vocal-fold dynamics—which in turn depend on mechanical properties of tissues forming the human glottis where the vocal cords are located. In the past, limitations on image-capture sensitivity, resolution, and frame rate have made it impossible to obtain true real-time measurements of vocal-fold dynamics that can be compared to acoustical measurements.

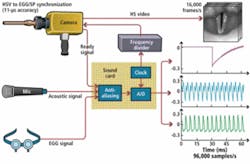

To enable these measurements, researchers have now combined a high-speed, high-sensitivity machine-vision camera with Gigabit Ethernet and data acquisition to create a system capable of simultaneously capturing real-time video of vocal folds along with audio recordings of voice waveforms and additional sensor measurements. A high-speed video (HSV) system feeds this information into a data server that clinicians and researchers can use to post-process the data for their studies.

The researchers are Dimitar Deliyski, associate professor with the Department of Communication Sciences and Disorders in the Arnold School of Public Health at the University of South Carolina, working in collaboration with Steven Zeitels and Robert Hillman in the Center for Laryngeal Surgery and Voice Rehabilitation at Massachusetts General Hospital.

The glottis is the space through which air expelled from the lungs passes through the larynx when a human utters vocalizations (see Fig. 1). To make sounds, two muscles called vocal folds (vocal cords) constrict the glottis, raising the pressure differential through the larynx and powering audio-frequency vibrations in the vocal-fold tissues. The voice’s timbre and quality depend on the mechanical properties of the vocal-fold tissues as well as the strength and evenness of the folds’ muscle tension.

“We are trying to find subtle abnormalities that occur in the vibrations of the vocal cords that may explain voice disorders,” says Deliyski. “On a deeper scientific level we are trying to explain better how they function. What drives that vibration? What affects it?”

Voice of reason

Voice research studies beginning in the early 1900s used high-speed motion photography to record glottal and epiglottal movements. Later, combining digital video and data acquisition techniques made it possible to compare glottal variations with acoustic waveforms. These studies, however, were severely limited by technology. Specifically, high-speed photography and video require high illumination to attain proper exposure levels during the short exposure times consistent with high frame rates.

The required illumination, however, would be enough to damage the epiglottal tissues, essentially causing sunburn in the subject’s larynx. This practical limitation meant that researchers were constrained to illumination levels too low for cameras to reach speeds allowing the required detail to be captured.

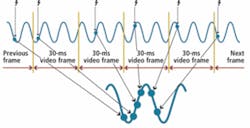

The only solution to this problem has been to time-slice images captured at lower frame rates, consistent with practical illumination levels (see Fig. 2). This technique, known as videostroboscopy, is similar to equivalent-time sampling of repetitive waveforms by waveform digitizers. In it, images taken at slightly later stages of the vibration on different cycles of the audio waveform are combined into a motion picture simulating a higher frame rate.
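The equivalent-time sampling idea behind videostroboscopy can be illustrated with a short Python sketch. The 201-Hz vibration and 25-frames/s camera rate are hypothetical numbers chosen for illustration, not values from the studies described here, and the function names are invented for this example.

```python
def frame_phases(vibration_hz, frame_hz, n_frames):
    """Phase (0.0 to 1.0) of the vibration cycle seen by each frame.

    Each frame lands on a different cycle of the vibration, slightly
    later in phase than the previous frame.
    """
    return [((k / frame_hz) * vibration_hz) % 1.0 for k in range(n_frames)]


def apparent_frequency(vibration_hz, frame_hz):
    """Frequency of the simulated slow-motion sequence.

    This is the distance from the vibration frequency to the nearest
    integer multiple of the frame rate.
    """
    nearest_multiple = round(vibration_hz / frame_hz) * frame_hz
    return abs(vibration_hz - nearest_multiple)


# Hypothetical example: a 201-Hz vibration filmed at 25 frames/s.
phases = frame_phases(201, 25, 25)
print(apparent_frequency(201, 25))  # 1 (Hz): one apparent cycle per second
```

Because every frame in the sequence comes from a different cycle, the reconstruction is only faithful when the vibration repeats exactly from cycle to cycle.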

While these studies could provide information about motions that persisted from cycle to cycle, they could not capture important intercycle variations, nor could they capture motions occurring on time scales shorter than the expanded exposure times. There was also a problem accurately matching the series of images with acoustic waveforms and other instrumentation inputs, such as electroglottography, airflow, and intra-oral pressure measurements.

The ability to collect a high-dynamic-range image depends on the number of photons captured per pixel at the image sensor. The ability to form an image is thus proportional to the illumination level multiplied by the exposure integration time divided by the number of pixels in the image. For a given maximum practical illumination level, there is thus a tradeoff between image resolution and exposure time.
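The proportionality described above can be written as a small Python sketch. Units are arbitrary, the function names are invented for illustration, and the 1/4000-s and 1/8000-s exposure times are assumed values, not figures from the article.

```python
def signal_per_pixel(illumination, exposure_s, n_pixels):
    """Relative photon signal per pixel, in arbitrary units."""
    return illumination * exposure_s / n_pixels


def max_pixels(illumination, exposure_s, required_signal):
    """Largest pixel count that still delivers required_signal per pixel."""
    return illumination * exposure_s / required_signal


# Assumed baseline: 600 x 800 pixels at a 1/4000-s exposure.
baseline = signal_per_pixel(1.0, 1 / 4000, 600 * 800)

# Halving the exposure at the same illumination halves the pixel count
# that can be supported at the same per-pixel signal.
print(max_pixels(1.0, 1 / 8000, baseline))
```

With illumination capped by tissue safety, the only remaining lever in this simple model is the signal each pixel needs, which is where sensor sensitivity comes in.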

The only way out of this dilemma is to reduce the number of photons needed to achieve adequate dynamic range and capture the required details. Deliyski’s system makes use of recently introduced high-sensitivity CMOS image sensors that simultaneously provide high resolution and dynamic range wide enough to capture subtle details.

Vocal design

The current HSV system designed by Deliyski incorporates a Vision Research Phantom V7.3 camera with a JedMed Model 49-4072 rigid laryngoscope (borescope) having a 10-mm diameter and a 70° angular field of view. The camera uses a self-resetting CMOS image sensor with maximum resolution of 600 × 800 pixels. A counterbalanced crane carries the camera’s weight, providing stability for the researcher or clinician to accurately aim the laryngoscope at the subject’s glottis during the examination (see Fig. 3).

At full resolution, the camera is capable of speeds up to 6668 frames/s. Achieving higher speed requires compromising resolution. “Most of the time, we don’t need full resolution,” Deliyski reports. “We need more like 500 × 500.” At that resolution, the camera can reach speeds faster than 10,000 frames/s needed for real-time capture of vocal-fold vibrations.
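A rough plausibility check of these figures is possible if one assumes the sensor reads out pixels at a constant rate regardless of window size. That is a simplification introduced here, not a statement about how this camera actually scales; real sensors have per-row and per-frame overheads.

```python
FULL_WIDTH, FULL_HEIGHT = 600, 800   # full resolution quoted in the article
FULL_RES_FPS = 6668                  # full-resolution frame rate quoted above


def estimated_fps(width, height):
    """Estimate frame rate at a reduced window, assuming constant pixel throughput."""
    pixels_per_second = FULL_WIDTH * FULL_HEIGHT * FULL_RES_FPS
    return pixels_per_second / (width * height)


print(estimated_fps(500, 500))  # about 12,800 frames/s under this assumption
```

The estimate is consistent with the statement that a 500 × 500 window allows speeds faster than 10,000 frames/s.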

Deliyski counts the camera’s synchronization features as very important as well: “It is essential for basic science on voice to be able to synchronize images with other signals, such as voice acoustics and what is called electroglottography (EGG). If we record at, say, 16,000 frames/s and have five more channels recording at 96,000 samples/s, we can precisely determine which six samples correspond to each frame.”
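The bookkeeping Deliyski describes can be sketched in a few lines of Python. The sketch assumes that both rates derive from one shared clock and that frame 0 begins exactly at sample 0; the function names are hypothetical.

```python
def samples_for_frame(frame_index, frame_rate=16_000, sample_rate=96_000):
    """Return the range of DAQ sample indices recorded during one video frame."""
    if sample_rate % frame_rate != 0:
        raise ValueError("sample rate must be an integer multiple of frame rate")
    per_frame = sample_rate // frame_rate
    start = frame_index * per_frame
    return range(start, start + per_frame)


def frame_for_sample(sample_index, frame_rate=16_000, sample_rate=96_000):
    """Return the index of the video frame during which a sample was taken."""
    return sample_index // (sample_rate // frame_rate)


print(list(samples_for_frame(2)))  # [12, 13, 14, 15, 16, 17]
print(frame_for_sample(17))        # 2
```

The mapping is exact only because the sample rate is an integer multiple of the frame rate, which is why the two are locked to a common clock.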

The HSV acquisition system includes a trigger button attached to the camera, which allows the user to control the beginning and end of acquisition. A computer includes an Avid Technologies Model M-Audio Delta 1010LT data acquisition card (DAQ) that triggers the camera’s synchronization input and captures various channels of data in real time. All studies collect at least an audio channel from a headset microphone that the subject wears. Additional channels such as for EGG, airflow, and intra-oral pressure can be collected as needed. Once an acquisition has been made, the computer uploads the data over the facility’s LAN to a data server capable of storing 34 Tbytes of data (see Fig. 4).

The camera has an 8-Gbyte internal circular buffer that stores video frames locally during acquisition. When the user releases the camera trigger, the camera:

- Completes acquisition of last image;

- Sends acquisition-stop “ready” signal to DAQ computer; and

- Uploads image sequence to DAQ computer via Ethernet connection.
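The general behavior of a circular frame buffer followed by an upload on trigger release can be sketched as follows. This is a generic illustration, not the camera's firmware: the class name is invented, and the overwrite-oldest policy is the usual property of a circular buffer rather than a documented detail of this camera.

```python
from collections import deque


class FrameRingBuffer:
    """Fixed-capacity buffer that keeps only the most recent frames."""

    def __init__(self, capacity_frames):
        # A deque with maxlen silently discards the oldest item when full.
        self._frames = deque(maxlen=capacity_frames)

    def push(self, frame):
        """Store one frame, overwriting the oldest if the buffer is full."""
        self._frames.append(frame)

    def drain(self):
        """Return all buffered frames in capture order and empty the buffer."""
        frames = list(self._frames)
        self._frames.clear()
        return frames


buffer = FrameRingBuffer(capacity_frames=3)
for frame_number in range(5):   # capture five frames into a three-frame buffer
    buffer.push(frame_number)

print(buffer.drain())  # [2, 3, 4]: only the three most recent frames remain
```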

The DAQ computer packages all the data for that acquisition into an archive, which it can upload to the data server over the LAN at the end of the session. In real time, however, the DAQ computer provides images and waveforms to the user via two monitor screens that form the local human-machine interface (HMI) mounted on the electronics cart. One screen provides video from the camera, while the other displays real-time waveforms from whatever sensors are being used.

Light from a 300-W constant xenon light source travels via optical fiber to the laryngoscope, where it episcopically illuminates the laryngoscope’s field of view. The laryngoscope has a central image-handling periscope optical system to deliver the image to the image sensor. Periscope optical systems such as this one use an arrangement of lenses to reduce vignetting during the transfer of images from one end of a long tube to the other. This allows the periscope to achieve an angular field of view that would be impossible otherwise. The manual trigger signal also goes back to the illumination-source power supply to minimize exposure of the epiglottal tissues to potentially damaging light.

To keep the camera frames aligned with samples in the other DAQ channels, the camera uses an external clock obtained from the DAQ computer. The DAQ computer puts out a sampling-clock signal, and the camera runs at a frame rate several times lower (typically six) than the DAQ sampling rate. A separate divide-by counter, also mounted on the electronics cart, divides the sampling signal accordingly and feeds it to the camera.
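A divide-by counter of this kind can be modeled in software as a simple tick counter. The sketch below is a behavioral illustration only: the hardware on the cart is not described in detail, and emitting the pulse on every sixth tick (rather than the first) is an arbitrary choice made here.

```python
def divide_by_n(clock_ticks, n=6):
    """Yield True on every n-th input clock tick and False otherwise."""
    count = 0
    for _ in range(clock_ticks):
        count += 1
        if count == n:
            count = 0
            yield True    # emit one frame-clock pulse to the camera
        else:
            yield False


# One second of a 96,000-Hz sampling clock divided by six.
frame_pulses = sum(divide_by_n(96_000, n=6))
print(frame_pulses)  # 16000 frame pulses per second
```

Because the frame clock is derived from the sampling clock instead of running independently, the two cannot drift apart over the course of a recording.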

Sound results

“In the clinic,” Deliyski points out, “we are trying to provide more reliable information about several aspects of the vibration. First, what is called the mucosal wave. The vocal folds are covered with mucosa, which is very pliable tissue. The pliability of this tissue is essential for producing quality voice and to protect the health of the tissue because if your voice is not working well, you tend to force the tissue, and that is abusive to the tissue.”

“Everything has been working out beautifully so far,” he adds. “We’ve learned so much about the voice that we didn’t know before. I’m constantly being asked by researchers who study voice across the world to work together because nobody has a system like it.”

Deliyski has built three systems. One is at Massachusetts General Hospital. The other two are in his lab, where he works with several clinics to perform HSV studies. Deliyski’s Voice and Speech Lab has been in close collaboration with Terri Treman Gerlach of the Charlotte Eye Ear Nose and Throat Associates, and Bonnie Martin-Harris of the Medical University of South Carolina, Charleston, SC.

For the future, he and his collaborators hope to extend their work in two areas. The first is to replace the rigid laryngoscope with a flexible fiberoptic unit that can be inserted through the nose instead of the mouth.

“If we use a rigid scope through the mouth,” he points out, “all we can do is look at a single vowel, but we cannot produce connected speech. If the subject has an endoscope stuck in the mouth, obviously they cannot talk. If we want to see the function of the vocal folds in a natural environment, we need to allow the mouth to function properly. If we go through the nose, we can position the endoscope inside the vocal tract just above the vocal folds.”

In the past, HSV technology using a nasal fiberscope was not considered practical. The nasal fiberscope gives an image that is 6–7 times darker than the rigid laryngoscope. Deliyski did the first experiments with a nasal fiberscope in early 2008 with a monochrome version of the camera, which is approximately four times more sensitive than the color version. The results were encouraging enough to motivate further research to develop the technique.

The second area of development is an attempt to relate acoustic and other signals to glottal vibration patterns seen via HSV and then relate those patterns to disease conditions. In this way, they hope to develop a less invasive protocol that can be used more widely. HSV studies, after all, are invasive, equipment intensive, and not that easy to interpret. “Let’s say we want to do a screening of a large number of people for voice disorders,” Deliyski says. “Maybe we can get a lot of information by less invasive methods.”

In the hoped-for protocol, clinicians would record parameters that Deliyski and his collaborators have studied. A personal computer or workstation running pattern-matching software would then compare the clinical results with patterns Deliyski and his collaborators have correlated, through their HSV studies, to specific disease states. It would then be possible to indicate medical conditions affecting real patients.

To view sample clips of human vocal folds captured in slow motion, visit www.visionresearch.com/go/vocal.

Company Info

Charlotte Eye Ear Nose and Throat Associates

Charlotte, NC, USA

www.ceenta.com

Massachusetts General Hospital, Center for Laryngeal Surgery and Voice Rehabilitation

Boston, MA, USA

www.massgeneral.org/voicecenter

Medical University of South Carolina

Charleston, SC, USA

www.musc.edu

University of South Carolina, Department of Communication Sciences and Disorders, Arnold School of Public Health

Columbia, SC, USA

www.sph.sc.edu

Vision Research

Wayne, NJ, USA

www.visionresearch.com