Embedded deep learning creates new possibilities across disparate industries

Martin Cassel

Embedded vision systems capable of performing on-device deep learning inference offer a compelling solution for applications in many different industries, including assembly, collaborative robotics, medical technology, drones, driver assistance, and autonomous driving. Compared with cloud-based solutions, deploying deep learning on embedded devices reduces network load and latency, which opens up new applications. Doing so, however, requires the right set of tools and hardware.

Developers must factor in available hardware, processing resources, memory size and type, and speed requirements such as frame rate, processing speed, and bandwidth. Additional considerations include the required image resolution or quality, power consumption, and system size. A range of embedded image processing systems is available for such applications, from smart cameras and vision sensors to single-board computers (SBC).

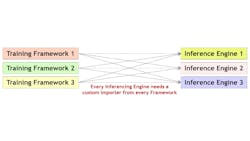

In deep learning, neural networks are generally not trained on embedded devices. Instead, only the execution (inference) of the trained network takes place directly on the device. Fully trained networks generally focus on one specific task, such as surface inspection, but can run on various types of embedded systems. To make this possible, trained networks are converted into exchange formats such as the Open Neural Network Exchange (ONNX) format or the Neural Network Exchange Format (NNEF; bit.ly/VSD-NNEF; Figure 1), or into a network description file with shared weights. Among the many types of neural networks, convolutional neural networks (CNN) stand out for deep learning because they combine high performance with low power consumption: each convolution filter reuses the same small set of weights across the whole image, so CNNs need fewer weights than other networks such as fully connected ones. These factors also make CNNs viable for most embedded vision applications.
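To make the weight-count argument concrete, the following Python sketch counts the parameters of a single 3x3 convolution layer and of a fully connected layer applied to the same input. The 224 x 224 RGB input size, the layer widths, and the helper function names are hypothetical choices made only for this illustration.

```python
def conv2d_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights plus biases of a 2D convolution layer with a square kernel."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def dense_params(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected (dense) layer."""
    return out_features * (in_features + 1)


# Hypothetical input: a 224 x 224 RGB image.
height, width, channels = 224, 224, 3

# A 3x3 convolution with 64 filters reuses (shares) the same 3*3*3 weights
# per filter at every pixel position of the image.
conv = conv2d_params(channels, 64, 3)

# A dense layer mapping the flattened image to only 64 outputs needs a
# separate weight for every input value.
dense = dense_params(height * width * channels, 64)

print(f"conv 3x3, 64 filters: {conv:,} parameters")
print(f"dense, 64 outputs:    {dense:,} parameters")
print(f"ratio: {dense / conv:,.0f}x")
```

The convolution needs 1,792 parameters against roughly 9.6 million for the dense layer, even though the convolution produces a full 64-channel feature map rather than 64 single values, because its weights are shared across all pixel positions.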

To save resources, CNNs can be streamlined through compression (of deep learning algorithms and data) and pruning (removing parts of the network, such as certain features, neurons, and weights, that have only a slight effect on the result). CNNs can also be simplified by reducing their numerical precision to 8-bit or even 4-bit fixed-point values (quantization). Binarized neural networks (BNN) go further: they operate with binary weights and reduce the fixed-point multiplications in the network layers to 1-bit operations. BNNs require less processing power, lower power consumption, and lower clock rates, but these savings come at the cost of accuracy, which may be too low for some embedded applications.
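The reduction techniques named above can be illustrated on a handful of weights. The following Python sketch assumes a simple magnitude threshold for pruning and a single symmetric scale factor per tensor for 8-bit quantization (common choices, but not the only ones), and also shows the XNOR/popcount trick that lets a BNN replace multiplications with bit operations. The weight values, the threshold, and all function names are hypothetical.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], threshold: float) -> List[float]:
    """Pruning: zero out weights whose magnitude is below the threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric linear quantization to signed 8-bit integers.

    Returns the integer codes and the scale that maps them back to
    approximate float values (w is approximately q * scale).
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float weights."""
    return [q * scale for q in codes]


def binary_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two {-1, +1} vectors packed as n-bit integers.

    Bit 1 encodes +1 and bit 0 encodes -1. Matching bits contribute +1 and
    differing bits -1, so the result is n - 2 * popcount(a XOR b).
    """
    differing = bin((a_bits ^ b_bits) & ((1 << n) - 1)).count("1")
    return n - 2 * differing


weights = [0.82, -0.03, 0.41, -0.77, 0.005, -0.26]
pruned = magnitude_prune(weights, threshold=0.05)
codes, scale = quantize_int8(pruned)
print("pruned:  ", pruned)
print("int8:    ", codes)
print("restored:", [round(w, 3) for w in dequantize(codes, scale)])

# Binarized example: a = [+1, -1, +1, +1], b = [+1, +1, -1, +1],
# packed with the first element in the least significant bit.
print("binary dot:", binary_dot(0b1101, 0b1011, 4))
```

Each restored weight differs from its pruned original by at most half a quantization step, and the binarized dot product (0 here) matches the result of multiplying the ±1 vectors element by element.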

How much accuracy loss is acceptable differs by application, but lower-precision weights do reduce the storage space required. Trained ternary quantization (TTQ) offers one such approach: it transforms weights into ternary values stored in 2 bits each. The weights of each layer are quantized to three layer-specific values: zero (to cut useless connections), a positive value, and a negative value. Ultimately, every embedded system must strike the right balance between performance and reducing the size of the weights.
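A minimal TTQ-style sketch follows. It zeroes weights whose magnitude lies within a threshold proportional to the layer's largest weight (t x max|w|) and packs four 2-bit codes into each byte. One simplification is important: in TTQ the positive and negative scale values are learned during training, whereas here they are simply the mean magnitudes of each group. The threshold factor t, the 2-bit code assignments, and the sample weights are assumptions for illustration.

```python
from typing import List, Tuple

# 2-bit codes for the three ternary states (an arbitrary illustrative choice).
ZERO, POS, NEG = 0b00, 0b01, 0b10


def ternarize(weights: List[float], t: float = 0.05) -> Tuple[List[int], float, float]:
    """Map one layer's weights to {-w_n, 0, +w_p}.

    Weights within +/- delta (delta = t * max|w|) become zero. In TTQ the
    scales w_p and w_n are learned; here they are the mean magnitudes of the
    positive and negative groups as a stand-in.
    """
    delta = t * max(abs(w) for w in weights)
    pos = [w for w in weights if w > delta]
    neg = [w for w in weights if w < -delta]
    w_p = sum(pos) / len(pos) if pos else 0.0
    w_n = -sum(neg) / len(neg) if neg else 0.0
    codes = [POS if w > delta else NEG if w < -delta else ZERO for w in weights]
    return codes, w_p, w_n


def pack_2bit(codes: List[int]) -> bytes:
    """Pack four 2-bit codes into each byte, first code in the lowest bits."""
    out = bytearray((len(codes) + 3) // 4)
    for i, c in enumerate(codes):
        out[i // 4] |= c << (2 * (i % 4))
    return bytes(out)


def unpack_2bit(data: bytes, count: int) -> List[int]:
    """Recover the first `count` 2-bit codes from packed bytes."""
    return [(data[i // 4] >> (2 * (i % 4))) & 0b11 for i in range(count)]


def decode(codes: List[int], w_p: float, w_n: float) -> List[float]:
    """Turn ternary codes back into the three layer-specific weight values."""
    table = {ZERO: 0.0, POS: w_p, NEG: -w_n}
    return [table[c] for c in codes]


layer = [0.9, -0.02, 0.5, -0.6, 0.01, -0.4, 0.7, 0.03]
codes, w_p, w_n = ternarize(layer, t=0.1)
packed = pack_2bit(codes)
print(f"{len(layer)} weights -> {len(packed)} bytes")
print("codes:", codes, "w_p =", round(w_p, 3), "w_n =", round(w_n, 3))
restored = decode(unpack_2bit(packed, len(codes)), w_p, w_n)
print("decoded:", [round(x, 3) for x in restored])
```

Eight weights shrink to 2 bytes of codes plus the two per-layer scale values; stored as 32-bit floats, they would occupy 32 bytes.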

Choosing the appropriate method

In deep learning, CNNs process images using techniques such as object detection or pattern and anomaly identification, and output a result such as a classification. Embedded systems lag behind PCs in handling large volumes of data. For that reason, embedded systems running CNNs require high computing power (5 to 50 TOPS, or tera operations per second) and correspondingly large bandwidth at the lowest possible power consumption, approximately 5 to 50 watts. The achievable system performance is bounded by the power budget as well as by the price a customer is willing to pay for the system.
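TOPS requirements follow from simple arithmetic: operations per inference times inferences per second. The helper below is a back-of-the-envelope sketch; the workload (10 giga-operations per frame, four cameras at 60 frames/s), the 40 % utilization factor, and the 20 TOPS at 10 W accelerator are hypothetical numbers, not measurements of any product. Vendors often count one multiply-accumulate as two operations, so datasheet figures should be compared on the same basis.

```python
def required_tops(gops_per_frame: float, frames_per_second: float,
                  utilization: float = 1.0) -> float:
    """Peak TOPS an accelerator needs to sustain a given frame rate.

    gops_per_frame: operations per inference, in billions (giga-ops).
    utilization: fraction of the accelerator's peak rate achieved in practice.
    """
    # giga-ops per second / 1000 = tera-ops per second
    return gops_per_frame * frames_per_second / 1000.0 / utilization


def tops_per_watt(tops: float, watts: float) -> float:
    """Energy efficiency of an accelerator."""
    return tops / watts


# Hypothetical workload: 10 GOPs per frame, four cameras at 60 frames/s,
# and an accelerator that reaches 40 % of its peak rate.
need = required_tops(10.0, 60.0 * 4, utilization=0.4)
print(f"required peak compute: {need:.1f} TOPS")

# Hypothetical accelerator: 20 TOPS peak at 10 W.
print(f"efficiency: {tops_per_watt(20.0, 10.0):.1f} TOPS/W")
```

Under these assumptions, the workload needs about 6 TOPS of peak compute, which the hypothetical 20 TOPS, 10 W accelerator covers comfortably while staying within the power range mentioned above.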

Depending on the application and its data volume, various chipsets that function as accelerators in combination with central processing units (CPU) are available. These include the Myriad 2 and Myriad X vision processing units (VPU) from Intel Movidius (Santa Clara, CA, USA; www.movidius.com) and the Hailo-8 dedicated deep learning processor (Figure 2) from Hailo (Tel Aviv, Israel; www.hailo.ai). Such chipsets are specifically designed to enable developers and device makers to deploy deep neural network and artificial intelligence applications in embedded devices.

In embedded systems, CPUs are typically based on the ARM architecture. On their own, CPUs offer little parallelism and not enough computational capacity, so they rely on additional processors for inference. Graphics processing units (GPU), however, offer massive parallelism and high memory bandwidth, which relieves the main memory. GPUs also have a high thermal output, and embedded versions are optimized to increase computational speed. Examples include the NVIDIA (Santa Clara, CA, USA; www.nvidia.com) Jetson and AMD (Santa Clara, CA, USA; www.amd.com) Ryzen Embedded.

In one experimental facial recognition application, CPUs recognized one to four faces per second, while newer embedded GPUs identified up to 400. To narrow this performance gap and compete more strongly with GPUs, CPU manufacturers are already working to increase computational speed.

Field-programmable gate arrays (FPGA) combine high computing speed with low thermal output and low latency, making them an alternative deep learning processor with great potential. With some programming effort, developers can reconfigure FPGAs, much like software, to execute various neural networks. If an application requires several neural networks over time, FPGAs represent a good option.

Application-specific integrated circuits (ASIC) are another option. They are designed from the ground up for deep learning acceleration, with an engine for fast matrix multiplication and direct convolution, for example. ASICs offer high computing power with low thermal output. These devices attempt to minimize memory access and keep as much data as possible on the chip to accelerate processing and throughput. However, ASICs are only marginally programmable and therefore less flexible in implementation. Additionally, manufacturing individual ASICs is expensive. Still, ASICs represent a suitable option for embedded vision systems and can also be combined with each other.
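Why keeping data on the chip matters can be shown with a software analogy; it is not a model of any specific ASIC. The sketch below multiplies two matrices twice and counts reads from "off-chip" memory: once fetching both operands of every multiplication from external memory, and once copying tile x tile blocks into a local buffer that stands in for on-chip SRAM. The tile size and the simplified load-counting model (output writes and buffer capacity limits are ignored) are assumptions.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul_counting_loads(a: Matrix, b: Matrix, tile: int = 0) -> Tuple[Matrix, int]:
    """Multiply square matrices and count reads from 'off-chip' memory.

    tile == 0: every multiplication fetches both operands externally.
    tile > 0:  tile x tile blocks of A and B are copied into a local buffer
               once and reused for all products within those blocks.
    """
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    loads = 0
    if tile == 0:
        for i in range(n):
            for j in range(n):
                for k in range(n):
                    loads += 2  # one element of A, one element of B
                    c[i][j] += a[i][k] * b[k][j]
        return c, loads
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # Copy one block of A and one block of B "on chip".
                a_blk = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_blk = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                loads += sum(len(r) for r in a_blk) + sum(len(r) for r in b_blk)
                # All arithmetic below uses only the local copies.
                for i, a_row in enumerate(a_blk):
                    for j in range(len(b_blk[0])):
                        c[i0 + i][j0 + j] += sum(
                            a_row[k] * b_blk[k][j] for k in range(len(a_row)))
    return c, loads


n = 8
a = [[float(i + j) for j in range(n)] for i in range(n)]
b = [[float(i == j) for j in range(n)] for i in range(n)]  # identity matrix
c_naive, naive_loads = matmul_counting_loads(a, b)
c_tiled, tiled_loads = matmul_counting_loads(a, b, tile=4)
assert c_naive == c_tiled  # tiling changes memory traffic, not the result
print(naive_loads, tiled_loads)  # prints "1024 256"
```

With n = 8 and 4 x 4 tiles, the tiled version issues 256 external reads instead of 1,024; in general, this kind of reuse cuts external traffic by roughly the tile size, which is the effect dedicated on-chip buffers aim for.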

In summary, embedded vision systems for deep learning typically consist of small processing boards and miniature camera modules, such as Basler’s (Ahrensburg, Germany; www.baslerweb.com) dart BCON for MIPI development kit (Figure 3). Within this architecture, a system on a chip (SoC) acts as the main processing unit and contains a CPU as the application processor; additional processors such as GPUs, FPGAs, or a deep learning chipset can be added. Systems on modules (SoM) supplement an SoC with important components such as memory (RAM) and power management, making the SoC practical for embedded deep learning use. Such modules still typically require a carrier board with physical connectors for peripheral devices such as cameras, and customers can develop these carrier boards to meet their specific requirements. In contrast, the SoC in a single-board computer is mounted on a carrier board from the outset, with fixed ports for peripheral devices. Additionally, an embedded operating system is required to control the individual components.

Robotic requirements

Anchored industrial robots and mobile or collaborative robots often use 3D imaging technology to perceive their surroundings. Such robots classify objects, detect anomalies, collaborate accident-free with humans and other machines, position tools, and assemble devices. For many of these tasks, deep learning adds a new dimension of flexibility.

With transfer learning, for example, pre-trained neural networks can be reused right away for several robotics applications, saving both time and cost. Such a network can adapt to new environments through deep reinforcement learning, which shapes a robot’s behavior with incentives: based on rewards or punishments (negative rewards), the network parameters are adjusted so that the robot repeats good actions. This enables the robot to learn new work stages in a relatively short time, even in difficult and variable environments such as device assembly.
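The reward-driven update described above can be reduced to a few lines. The sketch below is a deliberately simplified, stateless (bandit-style) version of the value update used in reinforcement learning; deep reinforcement learning would replace the list of values with a neural network that also takes the current sensor input into account. The grasp strategies, reward values, and hyperparameters are hypothetical.

```python
import random
from typing import List


def train_grasp_policy(rewards: List[float], episodes: int = 500,
                       alpha: float = 0.1, epsilon: float = 0.1,
                       seed: int = 0) -> List[float]:
    """Learn action values from rewards with an epsilon-greedy policy.

    rewards[a] is the (hypothetical, deterministic) reward the environment
    returns when the robot chooses grasp strategy a. Positive values reward
    the action, negative values punish it.
    """
    rng = random.Random(seed)
    q = [0.0] * len(rewards)
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.randrange(len(q))                   # explore
        else:
            action = max(range(len(q)), key=q.__getitem__)   # exploit
        reward = rewards[action]
        # Move the estimate toward the observed reward: rewarded actions
        # gain value and get repeated, punished actions lose value.
        q[action] += alpha * (reward - q[action])
    return q


# Hypothetical grasp strategies: 0 = top grasp, 1 = side grasp, 2 = suction.
q_values = train_grasp_policy(rewards=[-1.0, 1.0, 0.2])
best = max(range(len(q_values)), key=q_values.__getitem__)
print("learned values:", [round(v, 2) for v in q_values], "best action:", best)
```

Because the side grasp (action 1) is consistently rewarded while the top grasp is punished, its estimated value approaches 1 and the policy settles on it; occasional random exploration (epsilon) keeps testing the alternatives.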

The modified robot behavior in question could be programmed using conventional algorithms, but only with great effort. With deep learning, in contrast, bin-picking robots can identify and grasp pieces regardless of their position or orientation, even if they are tilted or partly covered by other pieces. The robot orients itself independently and finds known parts based on input data such as conventional 2D, stereo, or 3D images.

Appropriate target applications include packing and palletizing, machine loading and unloading, pick-and-place applications, bin picking, quality testing in automotive manufacturing, electronics assembly, precision agriculture and automation of work stages, and medical technology, including smart devices, early detection of illnesses, and surgical assistance systems.

Conclusion

Deploying deep learning in embedded devices reduces the security risks associated with processing protected images in the cloud. In embedded applications, data is generated and used in the same place, increasing the effectiveness of the entire plant. Higher computational demands can be offset by more powerful small networks, new processors for embedded applications, and improved techniques such as compression, pruning, and quantization.

Suitable chipsets and processing boards for deep learning vary greatly by application and must be carefully matched to the software in each case. Using technologies such as transfer and reinforcement learning, the networks, and with them the entire application, can be modified quickly, improving manufacturing flexibility. Deep learning on embedded devices will also continue to play a key role beyond robotics. Which components and networks suit which application? That question will continue to challenge system integrators.

Martin Cassel is an Editor at Silicon Software (Mannheim, Germany; https://silicon.software)

Share your vision-related news by contacting Dennis Scimeca, Associate Editor, Vision Systems Design