IoT Embedded Vision—What is it and where can it be used?


If you checked out the Buyers Guide in the March/April issue of Vision Systems Design, you hopefully noticed a new category: IoT Embedded Vision. The addition made sense, because this area of embedded vision is poised to grow as more applications make use of it. That said, IoT embedded vision can mean different things to different people, and the range of applications it serves is wide.

What is IoT Embedded Vision?

The Internet of Things (IoT) refers to objects with embedded sensors and software (such as edge computing) that are designed to exchange information with other devices over the Internet. IoT devices are found in industrial automation, home automation, and commercial applications.

Sebastien Dignard, president, iENSO (Richmond Hill, Ontario, Canada; www.ienso.com), says that IoT embedded vision goes a step beyond embedded vision systems, which are systems integrated into another product that can capture and process visual information, either still images or video. “It refers to products where the intended purpose of the vision is not only to capture images/video for their own sake but also to take the data produced to make decisions, inform, and deliver more value,” he says. “This typically means that the product in question uses vision data to achieve its primary purpose, such as a door lock that can ‘see’ who is at the front door and recognize members of your household to keep your home secure.”

iENSO creates complete solutions for companies that want to embed vision data into their products to increase value and profit. These application-specific solutions include integrated hardware, image processing, Edge AI, secure cloud connection, and manufacturing. One example is the turnkey iVS-AWV3-AR0521 IP (Figure 1) integrated embedded vision system, which is configured with the Allwinner (Zhuhai City, Guangdong Province, China; www.allwinnertech.com) V3 system on module and the ON Semiconductor (Phoenix, Arizona, USA; www.onsemi.com) AR0521 image sensor for autonomous vehicle and aftermarket home automation camera systems.

Jan-Erik Schmitt, CEO, Vision Components (Ettlingen, Germany; www.vision-components.com), states, “Ultra-compact electronics with integrated image sensors, reduced to the maximum functionality with no unnecessary overhead and tailored to a specific application: that’s how we define embedded vision systems. Vision Components embedded vision systems have been deployed in network solutions in most cases. IoT is just the logical next step for connected embedded devices.”

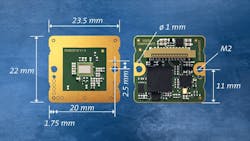

Vision Components offers VC picoSmart (Figure 2), an embedded vision system the size of a conventional image sensor module designed to facilitate and speed up the development of vision sensors. Image capture and processing are integrated on a 22 × 23.5 mm board. Target applications include object detection, position detection, code reading, edge detection, and fill level detection. VC picoSmart features a monochrome 1 MPixel global shutter image sensor with high light sensitivity and high frame rates, an FPGA, a high-end FPU processor, memory, and an FPC port to connect an interface board or a display for live image output and for interaction and control. The FPGA handles most of the image processing tasks.

Not everyone sees IoT embedded vision the same way. For example, John DeWaal, President, CoastIPC (Hingham, MA, USA; www.coastipc.com), thinks about the concept of IoT in the context of the buzzword “edge AI vision.”

“IoT refers to going out to distant, disparate locations for data collection that used to be too expensive because it required a PLC or an expensive computer for gathering and retrieving data points,” says DeWaal. “Today, small but fairly robust computers with built-in GPUs can gather an extensive amount of data and perform AI vision at the edge. Object detection tasks are possible for very little money, for instance. This is our version of an IoT solution.”

CoastIPC offers two new compact embedded computers from Neousys Technology (New Taipei City, Taiwan; www.neousys-tech.com): the POC-400 (Figure 3) and POC-40. These fanless embedded computers feature Intel (Santa Clara, California, USA; www.intel.com) Elkhart Lake Atom processors and measure just 56 × 108 × 153 mm and 49 × 89 × 112 mm, respectively. Designed for industrial applications such as factory automation, the POC-400 features Intel’s x6425E quad-core processor. Targeting factory data collection, rugged edge computing, and mobile gateway applications, the POC-40 features Intel’s x6211E dual-core processor. I/O interface options on both models include two USB 3.1 Gen 1 ports, two USB 2.0 ports, DisplayPort connectors (one on the POC-40; two on the POC-400), a software-programmable RS-232/422/485 port, and a three-wire RS-232 port (or an RS-422/485 port on the POC-400).

The term “IoT embedded vision” is actually two very broad terms that can have different meanings, according to Jonathan Hou, president, Pleora Technologies (Kanata, Ottawa, Canada; www.pleora.com). “IoT, or Internet of Things, is generally connecting devices so they share data and make autonomous or semi-autonomous decisions,” he says. “A simple example is a smart thermostat that uses networked sensors to track when a home is occupied and an Internet connection to monitor the weather. Using the data, the thermostat decides when to turn on the furnace or AC. In the industrial automation market, IoT very broadly means machines are sharing data and either taking an automated action or providing decision-support for humans. This can be around specific applications, such as quality inspection, to broader ‘smart factory’ initiatives looking to network all systems generating and processing data.”

He adds, “Embedded vision is key to evolving toward an IoT ‘smart factory’. In a traditional inspection system, all data is generally fed back to centralized decision making. In an IoT system, embedded technologies need to start making decisions at different edge points in the network and passing data on to the next step in the process. Embedded technologies are taking that centralized processing power and placing it at points in the system where local decisions need to be made.”

For brand owners and manufacturers who need to automate or improve quality inspection, Pleora’s AI Gateway (Figure 4) is a turnkey algorithm development and edge processing platform designed to simplify machine learning deployment. Pleora describes it as a “hybrid AI” solution that lets designers and integrators train and deploy advanced machine learning skills without disrupting existing infrastructure and processes.

Gerrit Fischer, Director of Solutions Business Management, Basler AG (Ahrensburg, Germany; www.baslerweb.com), states, “From our point of view, it is networking of machines (IoT) enriched by embedded vision: a setup where physical devices acquire information through vision sensors and process the information where they are being acquired—at the edge. Only results or metadata are retrieved and further processed. We see increasing convergence with neighboring technology fields such as AI/machine learning and cloud solutions.”

According to Fischer, IoT embedded vision system design is complex. The three major obstacles are usually achieving a seamlessly integrated hardware and software architecture, optimizing vision machine learning models for the target platform, and establishing cloud connectivity. Basler’s answer is the AI Vision Solution Kit (Figure 5), which enables developers to use, train, and deploy cloud-hosted machine learning models on an edge device. For this purpose, pre-trained neural networks are available in the cloud as software containers that are designed for direct use. Users also have the option of expanding these networks as required. The software containers with the selected machine learning models can be loaded onto the edge device or the embedded system for prototyping of application examples. The inference and actual image processing are performed on the edge device. In this way, cloud-specific application examples can be tested easily and with little programming effort, and metadata can be generated. In the next step, users can send the metadata to the cloud via a defined interface and store it in a database, for example, or visualize it on a dashboard using the appropriate tools.

In cooperation with Amazon Web Services (AWS) (Seattle, Washington, USA; aws.amazon.com), Basler has developed an optimized software environment. With Basler Container Management, the company offers developers a new software module on the edge device that includes a direct connection to the cloud. This makes it easier for users of embedded IoT vision systems to use selected machine learning models and cloud-specific tools. The two companies are currently working on an “AI machine vision platform” and plan to release more details in Q3 2021.

The Basler Cloud Server is used to provide the various software modules in the cloud. Machine learning models and cloud-specific tools are converted and made available here for download onto the edge device. Using Basler Container Management and the Basler Cloud Server, software containers can be easily loaded from the cloud to the embedded vision system and used for image processing applications.
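The edge-side pattern Fischer describes (inference runs on the device, and only results or metadata travel to the cloud) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Basler's software: `run_inference` is a hypothetical stub standing in for a containerized model, and the JSON payload fields (`device_id`, `frame_id`, `detections`) are an assumed schema, not Basler's or AWS's "defined interface".

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple

@dataclass
class Detection:
    label: str
    confidence: float
    bbox: Tuple[int, int, int, int]  # (x, y, width, height) in pixels

def run_inference(frame: bytes) -> List[Detection]:
    """Hypothetical stand-in for a model loaded from a software container.

    A real edge device would pass the frame to its inference runtime here;
    this stub ignores the pixels and returns a fixed detection.
    """
    return [Detection(label="part", confidence=0.97, bbox=(120, 80, 64, 48))]

def build_metadata(device_id: str, frame_id: int, detections: List[Detection]) -> str:
    """Serialize only the inference results; the raw frame stays on the device."""
    payload = {
        "device_id": device_id,  # assumed field names, for illustration only
        "frame_id": frame_id,
        "detections": [asdict(d) for d in detections],
    }
    return json.dumps(payload)

# Placeholder for one 1280 x 1024 frame of 8-bit monochrome pixels.
frame = bytes(1280 * 1024)
message = build_metadata("edge-cam-01", 42, run_inference(frame))
print(f"frame: {len(frame)} bytes, metadata: {len(message.encode())} bytes")
```

The comparison printed at the end shows why processing at the edge matters for connectivity: a single raw frame is over a megabyte, while the metadata message describing it is a few dozen bytes.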

For Toby McClean, Vice President, AIoT Technology and Innovation, ADLINK (New Taipei City, Taiwan; www.adlinktech.com), IoT embedded vision is “the integration of camera technology and processing technology to enable visual perception to create a stream of structured data that can be processed by other applications.”

ADLINK's NEON-2000 Series (Figure 6) of NVIDIA (Santa Clara, California, USA; www.nvidia.com) Jetson-based industrial AI cameras integrates the Jetson™ Xavier NX or Jetson TX2, an image sensor, an optimized OS, and rich I/O for vision applications in a compact chassis with verified thermal performance, minimizing the footprint and cabling required for installation.

Supporting four types of image sensors, integrated DI/O, one communication port, and one LAN port in a compact chassis, the NEON-2000 Series is suitable for AI vision applications at the edge. For harsh environments requiring ingress protection, the NEON-2000-JT2-X Series features IP67 protection. Features include integration of the Jetson TX2, image sensor, and vision software suites; an all-in-one design that minimizes cabling, footprint, and maintenance; FPGA-based DI/O for accurate real-time triggering; a USB Type-C port that combines video, power, and USB for simplified connectivity; one microSD slot for external storage (not supported by the NEON-2000-JT2-X); a choice of four image sensors; DI/O, one LAN port, and one COM port; and support for a C-mount lens.

For Sylvia Huang, Product Manager, Cincoze (New Taipei City, Taiwan; www.cincoze.com), IoT embedded vision solutions are made possible by artificial intelligence (AI) and IoT. “Applications based on AI and machine vision require significant computing power to provide accurate and actionable insights,” she says. “Therefore, a rugged and powerful computer supports connection and processing in harsh edge environments.”

Cincoze’s GP-3000 (Figure 7) is an expandable industrial computer. An Intel® workstation-grade processor and up to two 250 W full-length graphics cards provide performance within a total 720 W power budget. The GP-3000 features the GPU Expansion Box (GEB) design, whereby a single or dual GPU GEB is attached to the main embedded system to add GPU processing power. The additional GPU performance accelerates complex industrial AI and machine vision tasks.

JC Ramirez, Vice President of Engineering/Product Manager, ADL Embedded Solutions, Inc. (San Diego, California, USA; www.adl-usa.com), says that for ADL's customers, IoT embedded vision equates to “small, rugged, wide-temperature, Internet-connected industrial IoT (IIoT) systems for remote monitoring and security of mission-critical assets like border/toll crossings, plants, and refineries (access control, leak detection, valve positioning, and key instrumentation).”

For IIoT applications, ADL Embedded Solutions offers the ADLEPC-1700 (Figure 8), at the heart of which is a wide-temperature, compact Intel® Atom™ E3900-series SBC with a host of onboard and mPCIe expansion features. The compact chassis design has a very small footprint at 3.3 × 4.6 in., the approximate size of an index card, making it suitable for embedded use in IoT applications or retrofitting into high-value assets and infrastructure such as edge devices for power grid protection, field surveillance, oil and gas, and unmanned applications. The ADLEPC-1700 is highly customizable and can easily be adapted for particular customer needs including Wi-Fi, CAN, RS232, military-grade power, MIL-STD-1553, ARINC, and much more.

According to Charlie Wu, Product Supervisor, Advantech North America (Milpitas, California, USA; www.advantech.com), embedded vision is an independent system: a stand-alone AI box that performs real-time vision data analytics and offers compact dimensions and low power consumption. Advantech offers the AIR-200 (Figure 9), an edge AI inference system featuring a 6th Gen Intel® Core i5-6442EQ quad-core processor and two Intel MA2485 VPUs. It comes with the Edge AI Suite software toolkit, which integrates the Intel® OpenVINO™ toolkit R3.1 to enable accelerated deep learning inference on edge devices and to monitor real-time device status on a GUI dashboard. The AIR-200 detects, records, and processes stream/video data captured from IP cameras, performs AI inference, and reports the analytics data, all in one system.

Applications

McClean states that while IoT embedded vision is widely applicable, it has proven to be very well suited for quality inspection applications. “A second application that it has shown promise with is monitoring an environment to ensure compliance with safety regulations whether that be wearing the proper protective equipment or detecting when someone enters an unsafe zone,” he says. “Finally, it is probably most widely used in autonomous driving, autonomous robots, and autonomous driver assistance systems.”

One application example McClean cites is a manufacturer of brake disc castings that wanted to detect scratches and dents in the castings during the manufacturing process. “To solve the problem,” he says, “the manufacturer has built a solution that combines a camera and an NVIDIA Jetson TX2 processing board into a single form factor. Running on the processing board is a deep learning algorithm that is able to classify an image of the casting taken with the camera as either defect-free, scratched, or dented. If the casting is scratched or dented, it is sorted automatically into a rework bin, and the classification is also sent to AWS via IoT Hub. The operations manager is able to monitor, in real time, any quality issues they may have in the manufacturing process through the use of this IoT embedded vision solution.”
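The control flow McClean describes (classify each casting, divert defective parts to rework, report every result upstream) can be sketched as below. This is a hedged illustration only: the class scores are supplied by the caller instead of coming from a real model, and `divert_to_rework` and `publish` are hypothetical callbacks standing in for the sorting actuator and the cloud connection. They are not an NVIDIA or AWS API.

```python
from enum import Enum
from typing import Callable, Dict, List

class Grade(Enum):
    DEFECT_FREE = "defect-free"
    SCRATCHED = "scratched"
    DENTED = "dented"

def classify(scores: Dict[Grade, float]) -> Grade:
    """Return the class with the highest score.

    Stand-in for the deep learning model's output layer; the scores
    here are supplied by the caller rather than computed from an image.
    """
    return max(scores, key=lambda g: scores[g])

def handle_casting(
    casting_id: str,
    scores: Dict[Grade, float],
    divert_to_rework: Callable[[str], None],  # hypothetical sorter hook
    publish: Callable[[dict], None],          # hypothetical cloud hook
) -> Grade:
    grade = classify(scores)
    if grade is not Grade.DEFECT_FREE:
        divert_to_rework(casting_id)  # e.g. actuate a sorting gate
    publish({"casting_id": casting_id, "grade": grade.value})  # report every result
    return grade

# Usage with in-memory lists standing in for the rework bin and the cloud link.
rework_bin: List[str] = []
sent: List[dict] = []
handle_casting("BD-0001", {Grade.DEFECT_FREE: 0.10, Grade.SCRATCHED: 0.85, Grade.DENTED: 0.05},
               rework_bin.append, sent.append)
handle_casting("BD-0002", {Grade.DEFECT_FREE: 0.92, Grade.SCRATCHED: 0.05, Grade.DENTED: 0.03},
               rework_bin.append, sent.append)
print(rework_bin)  # ['BD-0001']
```

Passing the actuator and the uplink in as callbacks keeps the decision logic testable on a workstation before it is wired to real I/O on the edge device.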

Dignard says that any application where a vision-based decision is needed is suitable for IoT embedded vision. “We usually list home automation, precision farming, security, etc. but really, our customers surprise us every time. There is so much innovation happening in this space as we speak that it’s difficult to list all of the possibilities and how product companies are helping their clients use that data.”

He cites the earlier example of the door lock, which can process the image data and “decide whether to unlock the door (machine-made decision), send images of all visitors to the homeowner’s smartphone asking whether to unlock the door (human-made decision), or process the images locally and only ask the homeowner in specific scenarios (hybrid).”
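Dignard's three decision modes can be expressed as a small policy function. The sketch below is illustrative and rests on assumptions: the face recognizer is reduced to a `recognized_member` flag plus a `confidence` score, the 0.9 threshold is an arbitrary example value, and `ask_homeowner` is a hypothetical callback standing in for the smartphone prompt.

```python
from enum import Enum
from typing import Callable

class Mode(Enum):
    MACHINE = "machine"  # the lock decides on its own
    HUMAN = "human"      # always ask the homeowner
    HYBRID = "hybrid"    # decide locally, ask only when the lock is not sure

def decide_unlock(
    mode: Mode,
    recognized_member: bool,
    confidence: float,
    ask_homeowner: Callable[[], bool],  # hypothetical smartphone prompt
    threshold: float = 0.9,             # example value, not from the source
) -> bool:
    # A visitor is "trusted" only if recognized as household AND the match is confident.
    trusted = recognized_member and confidence >= threshold
    if mode is Mode.MACHINE:
        return trusted
    if mode is Mode.HUMAN:
        return ask_homeowner()
    # HYBRID: short-circuit `or` means the homeowner is asked only when not trusted.
    return trusted or ask_homeowner()
```

The hybrid branch captures the trade-off Dignard describes: routine cases are resolved locally without sending images off the device, and only unrecognized or uncertain visitors reach the homeowner.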

According to Huang, Cincoze's embedded computers can be used in a variety of IoT embedded vision applications, from factory automation, mechanical automation, machine vision, AIoT, robotics, in-vehicle computing, and smart transportation to smart warehousing and logistics, where they play a key role in processing, connectivity, and control.

One example of a recent Cincoze IoT embedded vision application is a customer in China that set up thermal imaging cameras for fast individual screenings in airports, hospitals, factories, office buildings, restaurants, and stores to prevent COVID-19 transmission. The customer wanted to develop automated thermal imaging systems to provide real-time body temperature indicators through contactless thermal monitoring and biometric data analysis, allowing on-site personnel to quickly identify individuals with potential illnesses.

Combined with AI and deep learning algorithms, the system can also perform mask detection, face recognition, image search, footprint tracking, and other functions, providing real-time information for intelligent big data analysis in the central control center. An industrial computer is essential here because it provides the computing performance needed for inference, enabling the large-scale screening that helps contain outbreaks of COVID-19 and other infectious diseases.

Hou describes an application for consumer goods packaging inspection. “One of the key challenges for a brand owner when considering AI and embedded vision is algorithm training,” he says. “The perception is it’s expensive and complicated. There are now software tools that simplify machine learning algorithm development into an easy ‘drag-and-drop’ process. ‘No code’ machine learning models are also available for common inspection requirements. In this example, the brand owner is already using computer vision but wants to enhance inspection and improve results. They port the AI algorithm onto edge processing hardware that sits between the camera and the processor. The embedded device intercepts the video, applies the required machine learning skill, and then passes the data on to the processor.

“The brand owner may use AI and embedded vision for a range of requirements. They may want to inspect packaging to make sure the correct ingredients are listed, brand colors used, and barcodes are machine readable. AI can help reduce errors in many of these processes that still rely on human visual inspection.”
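Hou's description of an embedded device that "intercepts the video, applies the required machine learning skill, and then passes the data on to the processor" maps naturally onto a chain of generator stages. This is a minimal sketch, not Pleora's implementation: `camera`, `inline_ai_stage`, and `existing_processor` are hypothetical names, and a toy brightness check stands in for a trained machine learning skill.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

@dataclass
class Frame:
    index: int
    pixels: bytes
    annotations: Dict[str, object] = field(default_factory=dict)

def camera(n: int) -> Iterator[Frame]:
    """Hypothetical camera source: frame i has a uniform intensity of 20 * i."""
    for i in range(n):
        yield Frame(index=i, pixels=bytes([min(20 * i, 255)] * 16))

def inline_ai_stage(frames: Iterable[Frame], skill: Callable[[bytes], object]) -> Iterator[Frame]:
    """Intercept each frame, attach the skill's result, and pass the frame on unchanged."""
    for frame in frames:
        frame.annotations["ai_result"] = skill(frame.pixels)
        yield frame

def existing_processor(frames: Iterable[Frame]) -> List[Tuple[int, object]]:
    """Stand-in for the downstream inspection application, which reads the annotations."""
    return [(f.index, f.annotations.get("ai_result")) for f in frames]

def underexposed_skill(pixels: bytes) -> bool:
    """Toy 'skill': flag frames whose mean intensity is below 10."""
    return sum(pixels) / len(pixels) < 10

results = existing_processor(inline_ai_stage(camera(3), underexposed_skill))
print(results)  # [(0, True), (1, False), (2, False)]
```

Because the inserted stage leaves the pixels untouched and only adds an annotation, the camera and the downstream processor keep working as before, which mirrors the "hybrid AI" idea of adding machine learning without replacing existing equipment.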

Another type of application, according to Schmitt, could be a city with connected smart camera solutions deployed to manage traffic flow in real time; to reduce congestion, accidents, and traffic violations; and to reliably identify vehicles. “For such ALPR/ANPR applications, Vision Components has developed VC DragonCam, a ready-to-use board-level camera with a Snapdragon quad-core processor and up to 32 GB of eMMC Flash memory,” he says. “It’s a complete embedded vision system with a Linux Debian-based operating system on a size of only 65 × 40 mm. VC DragonCam’s integrated IMX273 image sensor from the Sony Pregius series allows for a high image resolution of 1456 × 1088 pixels and a frame rate of more than 200 fps. It can be connected easily with any network.”

DeWaal states that IoT embedded vision and edge AI would be suitable for any company deploying high-speed inspection of parts or products, for example. “IoT and edge AI technologies help companies operate more efficiently and increase throughput,” says DeWaal. “For example, one person can manage a long machine with multiple operator stations, so the ability for that system to collect data, make automated decisions, and display results on a screen for the operator to visualize makes his or her job that much easier.”

Fischer cites automated retail check-out terminals equipped with AI software as still another example of where IoT embedded vision finds application. “Today’s self check-out systems usually use 2D barcode scanners to detect and record products on conveyor belts,” he says. “More recent systems use traditional object classification methods (using features like color or type) to ensure precise identification of product features. These methods are not robust when deployed in uncontrolled environments where different light and geometry conditions occur. Those problems can be avoided when adding AI to the system. With the latest AI technology, it is possible to detect products without barcodes in a seamless environment and scale up the product portfolio easily on the fly.”

Wu adds the following applications to the list: factory automation such as robotic defect inspection, warehouse AGVs and drones, smart city applications such as automatic street light control systems and traffic monitoring, and security applications such as face recognition for access control in buildings.

The Future

There is broad agreement among those interviewed that the IoT embedded vision market will continue to grow. It is an easy technology to deploy, and it complements existing vision technologies like deep learning and AI. With myriad applications available for IoT embedded vision, what does the future look like? McClean states, “IoT embedded vision has the potential to be the most widely deployed sensing technology as it is applicable to many industries and verticals but also is often much easier to deploy than other sensing technology.”

Dignard states, “Historically, imaging innovation and growth has come from either consumer or industrial applications and suppliers. Due to the growth of embedded vision and new applications, we see a new breed of supplier and customer driving the next phase of growth for the imaging industry. We believe these new companies will become evident in the next two to three years, and the leaders will be decided in the next five to seven years.” He continues, “Arguably, the biggest opportunity of IoT embedded vision comes not from the buyers of the product but from other participants in the value chain. Aggregate vision data can help make valuable business decisions related to supply/demand, logistics, and more.”

Schmitt advises, “We would like to encourage everybody who is still using PC-based systems to consider and evaluate the possibilities of more robust and energy-saving embedded systems. Thanks to the latest processor developments in the embedded field there are hardly any restrictions, but many benefits.”

Hou looks at recent developments, particularly hybrid AI, as steppingstones to more complex IoT systems. “The past 18 months have seen increasing interest in IoT and embedded technologies, especially in the vision market,” he says. “Evaluating these technologies, brand owners and system integrators generally share the same concerns around integration with existing infrastructure, algorithm training costs and complexity, as well as maintaining end-user processes. One successful approach for brand owners has been a ‘hybrid AI’ deployment, where they can maintain existing processes and equipment but use new embedded technologies to gradually add more advanced machine learning-based inspection. It’s a more evolutionary approach to AI and embedded vision and a steppingstone toward more complex IoT and smart factory systems.”

DeWaal is excited about where things stand with edge AI and machine vision. “The whole area of edge AI and machine vision AI is pretty exciting right now. A lot of companies are trying to understand how best to apply it in their facilities, and it’s nice that we have a variety of products of different sizes and capabilities that we can bring to the table for them. So, whatever their application is, we’re pretty confident that we’re going to have a solution for them.”

Wu adds, “With the rapidly growing number of IoT devices and amount of vision data in recent years, and ever-evolving new technologies like deep learning, new IoT applications that make our lives more digital and convenient are feasible. As IoT devices are normally small sized, power-limited, and not too expensive, it is suitable and reasonable to use IoT embedded vision solutions to build an IoT device with vision AI processing capability and to provide add-on values to customers.”

Ramirez concludes, “IIoT embedded vision is a growing market that will likely explode for ADL Embedded Solutions with the advent of lower power CPUs with increased vision and I/O capabilities driven by a multitude of needs for next-generation military and industrial applications.”

Companies Mentioned

ADL Embedded Solutions, Inc.

San Diego, California, USA

www.adl-usa.com

ADLINK

New Taipei City, Taiwan

www.adlinktech.com

Advantech North America

Milpitas, California, USA

www.advantech.com

Allwinner

Zhuhai City, Guangdong Province, China

www.allwinnertech.com

Amazon Web Services (AWS)

Seattle, Washington, USA

aws.amazon.com

Basler AG

Ahrensburg, Germany

www.baslerweb.com

Cincoze

New Taipei City, Taiwan

www.cincoze.com

CoastIPC

Hingham, MA, USA

www.coastipc.com

iENSO

Richmond Hill, Ontario, Canada

www.ienso.com

Intel

Santa Clara, California, USA

www.intel.com

Neousys Technology

New Taipei City, Taiwan

www.neousys-tech.com

NVIDIA

Santa Clara, California, USA

www.nvidia.com

ON Semiconductor

Phoenix, Arizona, USA

www.onsemi.com

Pleora Technologies

Kanata, Ottawa, Canada

www.pleora.com

Vision Components

Ettlingen, Germany

www.vision-components.com

About the Author

Chris Mc Loone

Editor in Chief

Former Editor in Chief Chris Mc Loone joined the Vision Systems Design team as editor in chief in 2021. Chris has been in B2B media for over 25 years. During his tenure at VSD, he covered machine vision and imaging from numerous angles, including application stories, technology trends, industry news, market updates, and new products.