Computing at the Edge and More for Embedded Vision

Although embedded vision is not new to the vision/imaging industry, it means different things to different people. In a recent Vision Systems Design Webinar, “Embedded Vision—Is It Right for Your Application?” Perry West said that embedded vision is an exciting, growing area of computer vision but that, “there is no standards committee that says: ‘this is exactly what it means.’”

Jan-Erik Schmitt, CEO of Vision Components (Ettlingen, Germany; www.vision-components.com), says vision solutions for image capture and processing are getting smaller and more powerful, with cameras being “embedded as sensors in a number of applications. Accordingly, the label ‘embedded vision’ is often used,” he says. “For us, however, the claim goes even further: embedded vision means that the components are perfectly tailored to the respective applications and that they dispense with unneeded overhead in terms of components and functionality. These solutions are as small as possible and optimally suited for edge devices and mobile applications.”

Vision at the Edge

Edge computing capabilities have been a factor allowing embedded vision to grow quickly.

Harishankkar Subramanyam, vice president of business development at e-con Systems (San Jose, CA, USA; www.e-consystems.com), says that with advancements in camera technology and embedded platforms, the breadth of applications embedded vision can serve has expanded exponentially. He goes on to say that many traditional machine vision systems are being migrated to embedded vision systems owing to their edge processing capabilities. “With cameras and processing platforms getting smaller and better in terms of quality and performance, innovators are coming up with newer applications where smart camera systems are used,” he states.

Michael Huang, product manager with Cincoze (New Taipei City, Taiwan; www.cincoze.com), says that mainstream PC technology for embedded vision is mature. Embedded PCs, however, continue to shrink in size, with price and performance as obvious advantages. “When incorporated with sensors and Internet of Things (IoT) devices, edge computing performs more efficient computations at the local edge network,” he says.

Ulli Lansche, technical editor, MATRIX VISION (Oppenweiler, Germany; www.matrix-vision.com), says that the goal of embedded vision systems is usually to bring image processing, including hardware and software, into the customer's housing. The camera is located relatively close to the computer platform, and the application-specific hardware is embedded in or connected to the system. Additional components such as lighting, sensors, or digital I/O are integrated or provided. “This vision-in-a-box approach is naturally suitable for many applications and is naturally predestined for edge computing,” he says. “Edge computing is known to be the approach of decentralizing data processing, i.e., carrying out the initial preprocessing of the compressed data at the edge, so to speak, to the network and then performing the further compression in the (cloud) server. In IoT, embedded vision represents the image processing solution for edge computing. It is thus clear that even with Industry 4.0, the requirement profile of embedded vision has not changed much.”
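The edge-first division of labor Lansche describes can be illustrated with a minimal Python sketch. It is not taken from any vendor's software: the function name `summarize_frame`, the brightness threshold, and the tiny 4 × 4 test frame are all hypothetical. The point is only that an edge device can reduce a raw frame to a compact result and send that, rather than the pixels, upstream.

```python
import json


def summarize_frame(frame, threshold=200):
    """Reduce a grayscale frame (rows of 0-255 ints) to a small summary.

    Hypothetical edge-side preprocessing step: only this summary,
    not the raw pixels, would be forwarded to a server.
    """
    pixels = [p for row in frame for p in row]
    bright = sum(1 for p in pixels if p >= threshold)
    return {
        "width": len(frame[0]),
        "height": len(frame),
        "mean": sum(pixels) / len(pixels),
        "bright_pixels": bright,
    }


# Hypothetical 4 x 4 frame with a bright 2 x 2 spot in the middle.
frame = [
    [10, 10, 10, 10],
    [10, 250, 250, 10],
    [10, 250, 250, 10],
    [10, 10, 10, 10],
]

summary = summarize_frame(frame)
payload = json.dumps(summary)  # what the edge device would transmit
print(payload)
```

In a real system the summary would more likely be a detection result or a measurement, but the design choice is the same: the heavier the processing done next to the camera, the smaller the payload that has to cross the network.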

Deep Learning/AI

Chris Ni, product director, vice president, Neousys Technology (Northbrook, IL, USA; www.neousys-tech.com), discusses how deep learning has expanded embedded vision into new applications, saying that traditional embedded vision systems usually comprise a camera, proper lighting, adequate computing power, and a computer vision algorithm and rely on a quality image for image processing. “The boost of deep learning technology helps us reduce the impact of low-quality images acquired from poorly lit conditions,” he says. “Deep-learning vision can automatically generate feature maps and identify or classify objects like how we see the world with our eyes and brain. This new territory of deep learning vision, along with a new breed of cameras, helps us deal with more complicated vision applications in the dynamic environments.”

Subramanyam adds that the increased adoption of artificial intelligence (AI) in various applications such as robotics, intelligent video analytics, remote patient monitoring, diagnostics, autonomous shopping systems, etc. has also propelled the growth of embedded vision in recent years.

Thomas Charisis, marketing manager, Irida Labs (Rio, Patras, Greece; www.iridalabs.com), observes that, in the embedded vision market, camera manufacturers are beginning to assert themselves in a field that used to be dominated by semiconductor and IoT companies. “One after another, big camera manufacturing companies are introducing smart camera solutions that are equipped with embedded AI processing capabilities,” he says.

This transition, according to Charisis, is indicative of the new capabilities that are brought when vision AI processing is executed at the edge in conjunction with resource-demanding and privacy-concerning cloud computing that used to be the predominant solution. Edge computing also comes with compact sensor dimensions that allow easy integration with existing infrastructure as well as interconnectivity with existing software stacks via industry-standard IoT and sensor protocols.

Huang adds, “[Another] important development is the rise of AI and machine learning. GPUs dramatically speed up computational processes for deep learning. Embedded vision evolves from simple environmental perception to the intelligent vision-guided automation system. It helps to process more complex scenarios and make real-time decisions at the edge.”

Emerging Applications

Just as it is difficult to come up with an all-encompassing definition for embedded vision, so is it difficult to compile a comprehensive list of applications. Although embedded vision has roots on the factory floor, its use today goes well beyond the confines of industrial automation. “The industrial field should be regarded as the starting point of embedded vision,” Huang says. “Embedded vision was widely used on production lines in factories and was fixed on equipment to complete the tasks of positioning, measurement, identification, and inspection. Today, embedded vision walks out of the ‘box’ as an industrial robot or an autonomous mobile robot (AMR). It is widely used in a variety of sectors ranging from agriculture, transportation, automotive, mining, medical to military.”

Mentioning AMRs turns the discussion toward more dynamic environments, and Ni cites warehouses as one example. Unlike embedded vision systems deployed on production lines, which feature well-configured lighting, warehouse applications feature automated guided vehicles (AGVs)/AMRs that use vision for localization and obstacle detection. Another example is intelligent agricultural machinery that uses cameras for autonomous guidance. “These applications bring new challenges since it’s almost impossible to have a stable, well-set-up illumination condition,” he says. “Thus, we may need new cameras, new computing hardware, and new software to get the job done.”

Schmitt says Vision Components sees growing demand for embedded vision in all fields of business—both consumer and industrial. One recent application used AMRs for intralogistics and manufacturing processes. MIPI camera modules provide reliable and precise navigation, especially when collaborating with other robot systems and when positioning and aligning in narrow passages.

Taking industrial automation as an example for AMRs, Kenny Chang, vice president of system product BU at ASRock Industrial (Taipei City, Taiwan; www.asrockind.com), explains that AMRs employ embedded vision to sense their surroundings in the factory. “In addition, AI-implemented Automated Optical Inspection is another big trend for smart manufacturing, delivering huge benefits to manufacturers.”

Speaking of AI, Jeremy Pan, product manager, Aetina (New Taipei City, Taiwan; www.aetina.com), says that other AI applications for embedded vision include AI inference. One example is a virtual fence solution to detect if factory staff is entering a working robotic arm’s movement/motion radius to force the robotic arm to stop. Additionally, AI visual inspection can be used for defect detection in factories.
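The decision at the end of such a virtual fence can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Aetina's implementation: it assumes an upstream AI model has already converted each detected person into floor-plane coordinates in meters, and the names `inside_fence` and `should_stop`, along with the 0.5 m safety margin, are hypothetical.

```python
import math


def inside_fence(person_xy, arm_base_xy, reach_m, margin_m=0.5):
    """Return True if a person is within the arm's reach plus a margin.

    person_xy and arm_base_xy are (x, y) floor coordinates in meters
    (an assumption; the article does not describe the coordinate system).
    """
    dx = person_xy[0] - arm_base_xy[0]
    dy = person_xy[1] - arm_base_xy[1]
    return math.hypot(dx, dy) <= reach_m + margin_m


def should_stop(detections, arm_base_xy, reach_m, margin_m=0.5):
    """Stop the arm if any detected person is inside the virtual fence."""
    return any(
        inside_fence(p, arm_base_xy, reach_m, margin_m) for p in detections
    )


# Hypothetical arm at the origin with a 1.5 m reach.
print(should_stop([(3.0, 4.0)], (0.0, 0.0), 1.5))  # person 5 m away
print(should_stop([(1.0, 1.0)], (0.0, 0.0), 1.5))  # person about 1.4 m away
```

Running the check on the edge device, next to the camera, keeps the stop decision independent of network latency.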

Ed Goffin, Marketing Manager, Pleora Technologies Inc. (Kanata, ON, Canada; www.pleora.com), adds, “Probably like many others, we’re seeing more emerging applications integrating AI and embedded processing for vision applications. For offline or manual inspection tasks, there are desktop systems that integrate a camera, edge processing, display panel, and AI apps to help guide operator decision-making. The next step in the development of these systems is to integrate the processing directly into the cameras, so they are even more compact for a manufacturing setting. For manual inspection tasks, these desktop embedded vision applications help operators quickly make quality decisions on products. Behind-the-scenes, the AI model is consistently and transparently trained based on user actions. These systems really take advantage of ‘the best of’ embedded processing, in terms of compact size, local decision making, and powerful processing—plus cost—to help manufacturers leverage the benefits of AI.”

Charisis cites smart cities as an emerging application for embedded vision. “What we recognize as an emerging trend is the increasing adoption of embedded vision at scale in civilian and commercial applications on smart cities and smart spaces,” he says. Applications here include smart lighting poles that sense the roads and adapt luminance to vehicle traffic and pedestrian usage, smart traffic lights that optimize traffic management and minimize commute or dwell times by adjusting to real-time traffic conditions, and smart bus stops that sense people and improve queues through better planning and routing. There are even smart trash bins that optimize waste management and maintenance scheduling.

Basler’s (Ahrensburg, Germany; www.baslerweb.com) Florian Schmelzer, product marketing manager, Embedded Vision Solutions, explains that high dynamic range (HDR) is opening up applications for its dart camera series, for example intelligent light systems. These systems must operate reliably in highly variable conditions—there could be glistening daylight or there could be twilight situations. “This is just one scenario where Basler’s embedded camera dart with HDR feature is able to deliver the required image quality so that the embedded vision system as a whole functions,” he says.

Lansche cites a recent example from MATRIX VISION where two remote sensor heads with different image sensors—each for day or night use—were part of a license plate recognition system for a British traffic monitoring company. “Also, multicamera applications in agriculture, in the food sector, medical technology, or in production are predestined for embedded vision,” he says.

“The most exciting innovation in embedded vision is currently happening with the combination and optimization of the edge and the cloud,” says Sebastien Dignard, president, iENSO (Richmond Hill, ON, Canada; www.ienso.com). “That means, embedding a camera is no longer about taking a great picture. It’s about how you analyze the picture, what vision data you can extract, and what you do with that data. The System on Chip (SoC), which is the ‘brain’ of the camera, is driving this new reality. SoCs are becoming smaller, more powerful, and more affordable. Optimization from the edge to the cloud, aggregating and analyzing vision data from multiple devices, and making business decisions based on this analysis—these are all elements of embedded vision that are taking center stage. We see this being deployed in applications from home appliances to robotics and precision farming.”

Subramanyam states that embedded vision has been revolutionizing retail, medical, agricultural, and industrial markets. He also says that some of the cutting-edge applications across these markets where embedded vision is creating a wave of change are automated sports broadcasting systems, smart signages and kiosks, autonomous shopping systems, agricultural vehicles and robots, point of care diagnostics, life sciences and lab equipment.

Implementation

Once you decide embedded vision is appropriate for a system you are building for a customer, what’s next? Following is a sampling of products available as you design the system.

The 28- × 24-mm VC Power SoM (Figure 1) from Vision Components is designed around an FPGA with approximately 120,000 logic cells and with integrated MIPI controllers. It is suitable for embedded vision applications such as color conversions, 1D barcode identification, or epipolar corrections. It can also be deployed for AI acceleration directly in any MIPI datastream.

Basler’s embedded vision processing board includes various interfaces for image processing and a flexible SoM and carrier board (Figure 2) approach based on NXP's i.MX 8M Plus SoC. The board includes Basler’s pylon Camera Software Suite, which provides certified drivers for all types of camera interfaces, simple programming interfaces and a comprehensive set of tools for camera setup. For vision applications, BCON for MIPI, GigE Vision, and USB3 Vision are available as interfaces. It can be used in applications ranging from factory automation, logistics and retail to robotics, smart city, and smart agriculture.

e-con Systems’s qSmartAI80 (Figure 3) is a ready-to-deploy Qualcomm® QCS610-based AI vision kit with a Sony 4K ultra low light camera for AI-based camera applications. This edge AI vision kit comprises e-con's Sony STARVIS IMX415-based 4K MIPI CSI-2 camera module, a VVDN Qualcomm® QCS610-based SoM, and a carrier board. The 4K camera module delivers clear images even in low light conditions. Also, this kit is engineered to enable computing for on-device image processing with power and thermal efficiency.

The Aetina SuperEdge AIS-D422-A1 (Figure 4), with high-level GPUs, can be used as an AI training platform in different fields including smart cities, smart factories, and smart retail. Besides running AI training and inference tasks, AIS-D422-A1 enables its users to monitor multiple edge AI devices with Aetina's EdgeEye, a type of remote monitoring software. Users can view system status of the edge devices through EdgeEye dashboard. The platform can also be paired with and powered by NVIDIA A2 Tensor Core GPU to run AI-related development tasks with better performance.

Neousys’s latest Jetson Xavier NX platforms (Figure 5), the NRU-51V and NRU-52S, feature compact, fanless form factors and offer reliable wide-temperature operation. The NRU-51V offers GMSL2 interfaces to acquire images from automotive CMOS or time of flight (ToF) cameras with a SerDes interface, plus a 10GBASE-T interface to support a high-resolution camera. The NRU-52S provides multiple GigE ports to connect GigE or IP cameras.

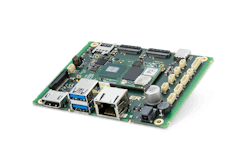

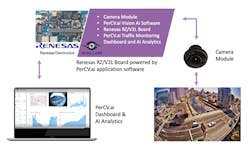

Irida Labs recently collaborated with Renesas Electronics to develop the Vision AI Sensor (Figure 6) that allows municipal infrastructure operators, public utility companies, or OEMs to deploy scalable, AI-powered solutions for traffic monitoring, pedestrian flow monitoring, or smart parking. This sensor is built on Renesas’s RZ/V2L hardware module and powered by Irida Labs’s PerCV.ai proprietary Vision AI software. To accommodate an easy/fast prototype system deployment and speed up the overall time to market, the sensor is shipped on a preconfigured plug-and-play prototype package that comes with the necessary hardware (camera module, board) and Vision AI software as well as a user interface that allows sensor and project management but also insights and analytics.

Cincoze’s DS-1300 (Figure 7) is a rugged, high-performance, highly expandable embedded computer that is powered by an Intel® Xeon® or Core™ CPU and supports up to 2x PCI/PCIe slots. It allows flexible PCI/PCIe expansion with the addition of GPU, image capture, data acquisition, and motion control cards and is suitable for industrial embedded vision applications.

iENSO’s new platform, Embedded Vision Platform as a Service (EVPaaS) (Figure 8), offers product companies and OEMs a secure, end-to-end embedded vision solution platform that includes everything needed to capture, analyze, and integrate vision data into products and recurring revenue plans. Companies can deploy the entire platform or look at deploying pieces of it.

The mvBlueNAOS (Figure 9), from MATRIX VISION, uses PCI Express (PCIe) for image transmission. GenICam-compatible software support ensures compatibility with existing image processing programs. The first models with Sony Pregius and Pregius S sensors offer resolutions ranging from 1.6 to 24.6 MPixels. The PCIe x4 interface allows transmission rates up to 1.6 GB/s and has enough space for higher bit depths, simultaneous image preprocessing, and future sensors with higher frame rates.
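To get a feel for the headroom such a link provides, the quoted figures can be turned into a rough frame-rate ceiling. The Python sketch below is back-of-the-envelope arithmetic only. It assumes 1.6 GB/s means 1.6 × 10^9 bytes per second, that pixels are tightly packed, and that there is no protocol overhead. It says nothing about what the sensors themselves can deliver, and the function name `max_fps` is hypothetical.

```python
def max_fps(bandwidth_bytes_per_s, megapixels, bits_per_pixel):
    """Upper bound on frame rate imposed by link bandwidth alone."""
    bytes_per_frame = megapixels * 1e6 * bits_per_pixel / 8
    return bandwidth_bytes_per_s / bytes_per_frame


LINK = 1.6e9  # 1.6 GB/s from the article, decimal gigabytes assumed

# Smallest and largest resolutions quoted, at two assumed bit depths.
for mp in (1.6, 24.6):
    for bits in (8, 12):
        print(f"{mp} MPixels at {bits} bit: {max_fps(LINK, mp, bits):.0f} fps max")
```

Under these assumptions, a 24.6-MPixel image at 8 bits per pixel could be moved roughly 65 times per second, and still about 43 times per second at 12 bits.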

Pleora Technologies expanded its machine vision portfolio with a new software solution that converts embedded platforms, sensors, and cameras into GigE Vision devices. With eBUS Edge™ (Figure 10), manufacturers can design or upgrade imaging solutions for more advanced applications, including 3D inspection and multisensor Industrial Internet of Things (IIoT) systems, without investing in new hardware. Pleora’s software solution converts 3D images and data into GigE Vision and GenICam-compliant time-stamped data that is transmitted with associated metadata over low-latency Ethernet cabling. Data from multiple sensors, including 1D and 2D images, can be synchronized and transported in parallel using multiple streams.

ASRock Industrial’s iEPF-9010S-EY4 robust AIoT platform (Figure 11) features Intel® 12th Gen (Alder Lake) Core™ Processors with R680E Chipset; 4x 260-pin DDR4 SO-DIMM up to 128 GB (32 GB per DIMM); 1x PCIe x16 (PCIe Gen 4) or 2x PCIe x8 (PCIe Gen 4), 2x PCIe x4 (PCIe Gen 4); 1x M.2 Key M, 1x M.2 Key B, 1x M.2 Key E, 2x Mini PCIe; 6x USB 3.2 Gen2x1, 6x COM, 4x SATA3, 8x DI, 8x DO; 5x Intel 2.5G LAN (2 support PoE, 1 supports vPro); and 1x DisplayPort, 1x HDMI 2.0b, 1x VGA.

Looking Ahead

Subramanyam believes that the future of embedded vision is going to be about building self-reliant smart camera systems that can run complex AI algorithms to enable machines to make more intelligent decisions with much less, or very little, human intervention. “Though the smart camera revolution has started, the future will see embedded cameras making these camera systems smarter and more accurate,” he says.

Dignard says, “For companies looking into adding embedded vision to their products or innovating on what they currently have, it’s important to look at this as a big opportunity to differentiate. With a holistic, organization-wide approach, you’re able to go far beyond a ‘me too’ product and consider where embedded vision can take your product roadmap. By creating a vision that is beyond just a product with an embedded camera, you can completely redefine how your product is used, what customer needs it addresses, and the implications to your business model and go-to-market strategy. Addressing all the parts of a complete embedded vision system, which include image capture, edge processing, cloud, and security, you can capture the imaginations of customers in an emerging new space.”

“Embedded vision is continuing to revolutionize the machine vision market,” says Goffin. “Depending on the application, it can make it easier for a machine, or even a human, to make a local decision. In the manufacturing market, and especially for processes that require human visual inspection, embedded vision promises to bring real-time decision-support insight directly to the operator level. There’s also a real demand to combine the benefits of GigE Vision, including multicasting, networking, and processing flexibility, with embedded vision for emerging IIoT types of applications.”

Ultimately, whether embedded vision is definable or not, end users continue deploying it in more and more applications.

About the Author

Chris Mc Loone

Editor in Chief

Former Editor in Chief Chris Mc Loone joined the Vision Systems Design team as editor in chief in 2021. Chris has been in B2B media for over 25 years. During his tenure at VSD, he covered machine vision and imaging from numerous angles, including application stories, technology trends, industry news, market updates, and new products.