Industrial Computing Advances Power Evolution in Robotics, AI, Machine Vision

What you will learn:

- Hardware innovations, such as multi-core CPUs, GPUs, and TPUs, enable real-time AI inferencing directly at the edge.

- Faster data paths built on PCIe 6.0, NVMe storage, and ruggedized RAM reduce bottlenecks, feeding data to AI modules with less latency, even in thermally demanding environments.

- Small, fanless industrial PCs with USB 3.0, Thunderbolt, and PCIe interfaces enable on-robot intelligence.

For many years, industrial automation has been a consistent beneficiary of advances in robotic technology and advanced software solutions for data processing, analysis, and decision-making. The evolution of software has led to more revolutionary artificial intelligence (AI) solutions across a spectrum of market segments and applications. The journey hasn’t been easy or straightforward, however.

In the case of AI, many early leaps in capability could not be deployed until recently. They were held back by computing hardware that lagged behind the software and came in form factors and packages poorly suited to remote, edge, and mobile deployment. Today, advances in hardware technologies are converging to close those gaps in system performance, paving the way for sophisticated computer vision and AI software solutions to finally deploy at scale and with meaningful results.

CPUs, GPUs, and TPUs Open New Doors

When we think about the progress made in computing capability, CPU and GPU advances often are top of mind, and for good reason. CPUs are the core of a computing platform, responsible for executing program instructions, running the operating system, and managing system inputs and outputs (I/O). The number of cores in a typical CPU has risen steadily along with speed and memory bandwidth, contributing to a continuous rise in performance.

In addition, CPUs with two distinct types of cores on the same device are becoming more readily available. The advantage of these performance cores (P-cores) and efficiency cores (E-cores) is that the workload is distributed based on resource needs. For example, background and lighter processing tasks are assigned to E-cores, which are smaller, more efficient, and well suited to basic tasks. P-cores, on the other hand, are like traditional cores and are performance-oriented. They are used as needed to handle intense processing demands such as computer vision, gaming, and machine learning tasks.

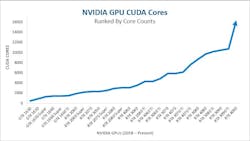

While the CPU is the main processor, the GPU—originally developed to offload graphical processing and display tasks from the CPU—has evolved into the go-to technology for graphically and computationally intensive processing. Modern GPU devices contain thousands of cores capable of running multiple processes in parallel and are ideally suited for executing many smaller tasks at one time. They have been instrumental in supporting the proliferation of AI capabilities due to their ability to accelerate processing tasks. As with CPUs, GPU technology is advancing in two directions at once—with larger, more capable devices at the core of data centers, video editing systems, and AI model training, and with smaller, more efficient devices that enable deployment of powerful processing at the edge and in mobile and robotic systems.

In addition to the two main processor types, tensor processing units (TPUs) are rapidly gaining traction in applications that look to leverage machine learning. As the name implies, these processors are designed specifically to perform tensor operations in support of neural network computations. For the workloads they target, TPUs can be more power-efficient than GPUs, and their purpose-built design can allow them to execute training and inference tasks more quickly than their GPU counterparts. In addition, TPUs are supported by the TensorFlow machine learning framework, lowering the barriers for developers who wish to leverage their capabilities. TPUs represent a promising processor technology that further enhances the performance of industrial computing systems used in AI applications, and they are expected to grow and mature rapidly as the demands of AI software increase.

PCIe Pushes Data Transfer Forward

Computing performance cannot advance on processor technology alone. Data must flow from input devices into the system, and from device to device within it, at rates sufficient to keep the available processors busy. Without that, gains in processing power go unused and their benefits never materialize. Fortunately, other core technologies central to computing systems have also been evolving to support the greater ecosystem. Data bus, memory, and storage technologies are leading the charge toward faster, more efficient systems with higher overall performance. Combined with cutting-edge processing, these elements create the environment necessary to foster accelerated growth of AI, robotics, machine vision, mobile computing, and edge computing.
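One way to picture this dependency is to treat the system as a chain of stages whose sustained throughput is capped by the slowest stage. The Python sketch below is only an illustration under that simplifying assumption. The function names, stage names, and rates are hypothetical placeholders, not measurements of any real system.

```python
def find_bottleneck(stage_rates_gb_s):
    """Return (stage name, rate) for the slowest stage in the chain."""
    name = min(stage_rates_gb_s, key=stage_rates_gb_s.get)
    return name, stage_rates_gb_s[name]


def stage_utilization(stage_rates_gb_s):
    """Fraction of each stage's capacity used when the whole chain
    runs at the rate of its slowest stage."""
    _, limit = find_bottleneck(stage_rates_gb_s)
    return {stage: limit / rate for stage, rate in stage_rates_gb_s.items()}


# Hypothetical sustained rates in GB/s, chosen only for illustration.
EXAMPLE_STAGES = {
    "sensor input": 1.0,
    "data bus": 16.0,
    "system memory": 25.6,
    "storage": 0.5,
    "processor": 4.0,
}

if __name__ == "__main__":
    slowest, rate = find_bottleneck(EXAMPLE_STAGES)
    print(f"Bottleneck: {slowest} at {rate} GB/s")
    for stage, used in stage_utilization(EXAMPLE_STAGES).items():
        print(f"{stage:>14}: {used:.0%} of capacity in use")
```

With these placeholder numbers, the processor would sit mostly idle because the slowest stage cannot feed it fast enough, which is the situation faster bus, memory, and storage technologies aim to prevent.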

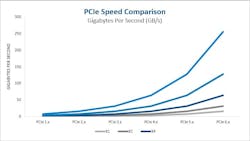

The Peripheral Component Interconnect Express (PCIe) bus is the infrastructure roadway for almost all data in a PC. From its groundbreaking introduction nearly 20 years ago to today, it continues to be the bedrock of many computing platforms and facilitates the rapid transfer of data within a system. PCIe 6.0, whose specification was finalized in January 2022, provides a total bidirectional bandwidth of up to 256 GB/s over a 16-lane (x16) link. This helps data move at rates sufficient to keep modern memory and processors utilized for maximum overall productivity. PCIe 6.0 is a major boost for cloud computing, AI, and machine learning applications, which routinely place intense data transfer and processing demands on a system and need reliable, robust interconnectivity. By enabling faster access and transfer and by minimizing idle times, PCIe 6.0 can also help reduce inference and training times.
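The 256 GB/s figure can be reproduced with simple arithmetic, assuming a 16-lane link and PCIe 6.0's per-lane rate of 64 GT/s. The Python sketch below computes raw link bandwidth only. It ignores encoding and protocol overhead, so real-world throughput is lower, and the function name is illustrative rather than part of any library.

```python
# Per-lane transfer rate in GT/s for several PCIe generations.
PCIE_GT_PER_S = {3: 8, 4: 16, 5: 32, 6: 64}


def pcie_raw_bandwidth_gb_s(generation, lanes=16):
    """Return (per-direction, bidirectional) raw bandwidth in GB/s.

    Each transfer carries one bit per lane, so GT/s times lanes gives
    Gbit/s, and dividing by 8 converts to GB/s. Encoding and protocol
    overhead are deliberately ignored.
    """
    per_direction = PCIE_GT_PER_S[generation] * lanes / 8
    return per_direction, 2 * per_direction


if __name__ == "__main__":
    for gen in sorted(PCIE_GT_PER_S):
        one_way, both = pcie_raw_bandwidth_gb_s(gen)
        print(f"PCIe {gen}.0 x16: {one_way:.0f} GB/s per direction, "
              f"{both:.0f} GB/s bidirectional")
```

For PCIe 6.0 with 16 lanes, this works out to 128 GB/s in each direction and 256 GB/s in total. Narrower links scale down proportionally, which matters for compact systems that expose only x4 or x8 slots.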

Storage and Memory Developments

Most of the data input into a computer moves through system memory, or RAM, which makes memory a potential bottleneck for both data throughput and reliability. As with the PCIe bus and other peripheral interfaces, memory technology continues to advance to help prevent this. DDR4 (double data rate 4) memory, for example, supports data rates of up to 3200 MT/s (megatransfers per second), which translates into a peak transfer rate of 25.6 GB/s per 64-bit channel. That is fast enough for most modern computing tasks.
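The peak DDR4 figure follows from the data rate and the bus width. As a rough sketch, assuming a standard 64-bit memory channel and DDR4-3200's 3200 million transfers per second, the calculation looks like this in Python. The function name is illustrative, and sustained bandwidth in practice falls below this theoretical peak.

```python
def ddr_peak_bandwidth_gb_s(mega_transfers_per_s, bus_width_bits=64, channels=1):
    """Theoretical peak memory bandwidth in GB/s.

    Each transfer moves bus_width_bits / 8 bytes per channel, so
    MT/s times bytes per transfer gives MB/s. Dividing by 1000
    converts to GB/s.
    """
    bytes_per_transfer = bus_width_bits // 8
    return mega_transfers_per_s * bytes_per_transfer * channels / 1000


if __name__ == "__main__":
    single = ddr_peak_bandwidth_gb_s(3200)
    dual = ddr_peak_bandwidth_gb_s(3200, channels=2)
    print(f"DDR4-3200, one channel:  {single:.1f} GB/s")
    print(f"DDR4-3200, two channels: {dual:.1f} GB/s")
```

A single channel yields 25.6 GB/s, and a dual-channel configuration doubles that theoretical peak to 51.2 GB/s.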

In terms of reliability, computing platforms intended for edge and robotic applications tend to have small form factors. To withstand environments with excessive dirt, dust, or contaminants, those systems increasingly use fanless architectures, which are sealed and conduction cooled. Memory has historically been a weak point in such conditions, but the recent availability of extreme-temperature DDR4 memory addresses this challenge. A safe operating range of –40°C to 125°C permits high-performance computing in harsh operating environments, where robotics and mobile systems are increasingly being used to improve safety for human workers.

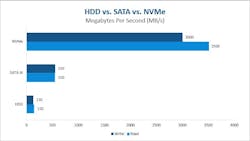

One of the last remaining bottlenecks in PC performance revolves around storage. Whether retrieving data from storage media for processing or writing processed data to media for archival or future use, hard disk drive (HDD) and solid-state drive (SSD) technology has routinely lagged behind advances in processor, memory, and data bus technology. The arrival of Non-Volatile Memory Express (NVMe) technology coupled with SSD architecture represents a major jump in storage performance, with access times and transfer times improving by nearly an order of magnitude. Together with PCIe 6.0 and DDR4 memory, NVMe rounds out a platform of innovation. When complemented with modern processing devices, that platform gives developers, researchers, and users industrial computing performance adequate to meet the demands of today’s advanced computer vision, machine learning, and AI software.

Small Form Factors, High Speeds

With hardware potential catching up with software capability, which applications stand to benefit most?

Robotic systems are being tapped for more sophisticated uses every day, and we routinely see robots with adaptive navigation systems that can assess their environments and make decisions thanks to the integration of AI technologies into their platforms. Because of their mobile nature, these systems are almost always battery-powered, which puts a premium on energy conservation while still demanding strong computing performance.

Small form factor embedded industrial computers answer the call, facilitating high-speed data acquisition through modern input interfaces such as USB 3.0, Thunderbolt, and PCIe, combined with real-time processing and decision-making. Mission-critical and time-sensitive applications now find themselves with the additional support of robotic capability where previously only human involvement would suffice.
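When sizing such a system, a quick check is whether a camera's uncompressed data rate fits the chosen interface. The Python sketch below assumes USB 3.0's 5 Gbit/s signaling rate with 8b/10b line encoding, which gives a 500 MB/s ceiling before protocol overhead, so achievable throughput is lower. The two camera configurations are hypothetical examples, not specific products.

```python
USB3_SIGNALING_MBIT_S = 5000   # USB 3.0 (SuperSpeed) signaling rate
ENCODED_BITS_PER_BYTE = 10     # 8b/10b encoding: 10 line bits carry 1 data byte


def camera_data_rate_mb_s(width, height, bytes_per_pixel, fps):
    """Uncompressed video data rate in MB/s (1 MB = 1e6 bytes)."""
    return width * height * bytes_per_pixel * fps / 1e6


def usb3_payload_ceiling_mb_s():
    """Upper bound on USB 3.0 payload rate, before protocol overhead."""
    return USB3_SIGNALING_MBIT_S / ENCODED_BITS_PER_BYTE


def fits_usb3(width, height, bytes_per_pixel, fps):
    """True if the raw stream is below the USB 3.0 ceiling."""
    rate = camera_data_rate_mb_s(width, height, bytes_per_pixel, fps)
    return rate < usb3_payload_ceiling_mb_s()


if __name__ == "__main__":
    # Hypothetical cameras: (width, height, bytes per pixel, frames per second)
    cameras = {
        "2.3 MP mono at 160 fps": (1920, 1200, 1, 160),
        "12 MP mono at 60 fps": (4096, 3000, 1, 60),
    }
    for name, spec in cameras.items():
        rate = camera_data_rate_mb_s(*spec)
        verdict = "fits" if fits_usb3(*spec) else "exceeds"
        print(f"{name}: {rate:.2f} MB/s, {verdict} the USB 3.0 ceiling")
```

A stream that exceeds the ceiling would need a lower frame rate, compression, or a faster interface such as Thunderbolt or a PCIe frame grabber.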

In simulation and digital twin applications, it is possible to replicate manufacturing and factory automation environments using real data obtained from an active manufacturing line. That data can be used to further train AI models and to accurately assess the impact of software changes or the addition of new equipment and devices, all without compromising the integrity of the live operation. The rapid data movement made possible by today’s computing technology enables this, and the eventual result is higher productivity, safety, and product quality. Everyone benefits.

Expanding the Automation Space

In the machine vision world, throughput and accuracy are paramount in manufacturing, inspection, and assembly tasks. The ability to use more sophisticated algorithms and software will continue to open doors for automation and expand the overall marketplace in which it is used. That demand will likely see software continue to push the limits of hardware, propelling its evolution in future generations to meet the processing needs of tomorrow and beyond.

About the Author

Nathan Hepp

Nathan Hepp is the creative director at CoastIPC (Hingham, MA, USA), which specializes in industrial embedded computing solutions, designed in partnership with its customers. Hepp has 16 years of experience in creative storytelling, strategic thinking and graphic design for print and digital marketing campaigns.