April 2019 snapshots: motion-sensing hardware redesigned for the scientific community, sub-terahertz sensors for autonomous vehicles, fully-automated test processes for aircraft cockpits, and an autonomous delivery truck fleet project

Researchers at MIT develop system to assist autonomous vehicles

LiDAR technology, a central feature in many autonomous vehicles’ vision systems, has difficulty dealing with dust, fog, and atmospheric disturbance. Autonomous vehicles that can’t account for poor driving conditions require the intervention of human drivers. Researchers at MIT (Cambridge, MA, USA; www.mit.edu) have made a leap toward solving this challenge.

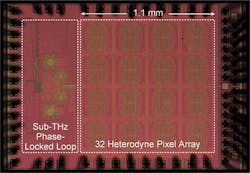

Sub-terahertz radiation occupies wavelengths between microwaves and infrared on the electromagnetic spectrum. Radiation at these wavelengths can be easily detected through fog and dust clouds and used to image objects via transmission, reflection, and measurement of absorption. On February 8, the IEEE Journal of Solid-State Circuits published a paper (http://bit.ly/VSD-GHZ) in which MIT researchers describe a sub-terahertz receiving array that can be built on a single chip and performs to standards traditionally only possible with large and expensive sub-terahertz sensors built using multiple components.

The array is designed around new heterodyne detectors (schemes of independent signal-mixing pixels) that are much smaller than traditional heterodyne detectors, which are difficult to integrate into chips.

Each pixel, called a heterodyne pixel, generates a frequency difference between a pair of incoming sub-terahertz signals. Each individual pixel also generates a local oscillation, an electrical signal that alters a received frequency. This produces a signal in the megahertz range that can easily be interpreted by the prototype sub-terahertz receiving array’s processor.
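The mixing step can be illustrated numerically. The following is a minimal sketch, assuming an ideal multiplying mixer and purely illustrative frequencies (a 240 GHz input and a 239.99 GHz local oscillator, neither taken from the article). The function names are hypothetical, and this is not a model of the MIT circuit.

```python
import math

def mixer_output(f_signal_hz, f_lo_hz, t):
    """Ideal mixer: multiply the received tone by the local oscillator tone."""
    return math.cos(2 * math.pi * f_signal_hz * t) * math.cos(2 * math.pi * f_lo_hz * t)

def sum_and_difference(f_signal_hz, f_lo_hz, t):
    """The same waveform written as difference- and sum-frequency components."""
    f_diff = abs(f_signal_hz - f_lo_hz)
    f_sum = f_signal_hz + f_lo_hz
    return 0.5 * math.cos(2 * math.pi * f_diff * t) + 0.5 * math.cos(2 * math.pi * f_sum * t)

def intermediate_frequency_hz(f_signal_hz, f_lo_hz):
    """Difference frequency that remains after the sum term is filtered out."""
    return abs(f_signal_hz - f_lo_hz)

# Assumed, illustrative values only.
F_SIGNAL_HZ = 240.00e9
F_LO_HZ = 239.99e9
print(intermediate_frequency_hz(F_SIGNAL_HZ, F_LO_HZ) / 1e6, "MHz")
```

With these assumed values the difference frequency is 10 MHz, which is the kind of megahertz-range signal that ordinary electronics can process.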

Synchronization of these local oscillation signals is required for the successful operation of a heterodyne pixel array. Previous sub-terahertz receiving arrays featured centralized designs that distributed local oscillation signals to all pixels in the array from a single hub. The larger the array, the less power is available to each pixel, which results in weak signals and low sensitivity that make imaging difficult.

In the new sub-terahertz receiving array developed at MIT, each pixel independently generates this local oscillation signal. Each pixel’s oscillation signal is synchronized with neighboring pixels. This results in each pixel having more output power than would be provided by a single hub.
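The scaling problem with a single hub can be put in rough numbers. This is a back-of-the-envelope sketch, assuming an ideal, lossless, equal power split and a hypothetical 1 mW hub output. Neither figure comes from the paper, and real distribution networks add further losses.

```python
import math

def centralized_power_per_pixel_mw(hub_power_mw, n_pixels):
    """Power reaching each pixel when one hub feeds all pixels equally."""
    return hub_power_mw / n_pixels

def splitting_loss_db(n_pixels):
    """Per-pixel power reduction, in dB, caused by an ideal N-way split."""
    return 10 * math.log10(n_pixels)

HUB_POWER_MW = 1.0  # assumed value for illustration only

for n in (4, 8, 32):
    per_pixel = centralized_power_per_pixel_mw(HUB_POWER_MW, n)
    print(n, "pixels:", per_pixel, "mW each,", round(splitting_loss_db(n), 1), "dB split loss")
```

Under these assumptions, a 32-way split costs each pixel about 15 dB, whereas a pixel that generates its own oscillation signal avoids the split entirely.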

A stable frequency of the local oscillation signal is required for the system to properly measure distance. The researchers used a component called a phase-locked loop that takes the sub-terahertz frequency of all 32 individually generated oscillation signals and locks them to a low-frequency reference that remains stable. This results in identical, high-stability frequency and phase for all signals.
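The locking behavior of a phase-locked loop can be sketched with a generic textbook model. The code below assumes a linear loop with a proportional-integral filter, normalized frequency units, and no frequency division. All parameter values and names are hypothetical, and it does not represent the circuit described in the paper.

```python
def simulate_pll(f_ref, f_free, kp, ki, dt, steps):
    """Pull an oscillator with free-running frequency f_free onto f_ref.

    Phases are tracked in cycles; kp and ki are the proportional and
    integral gains of the loop filter.
    """
    phase_ref = 0.0
    phase_osc = 0.0
    integral = 0.0
    f_osc = f_free
    error = 0.0
    for _ in range(steps):
        error = phase_ref - phase_osc        # phase detector
        integral += error * dt               # integral path of the loop filter
        f_osc = f_free + kp * error + ki * integral  # oscillator tuning
        phase_ref += f_ref * dt
        phase_osc += f_osc * dt
    return f_osc, error

# Assumed values: the oscillator starts 5% above the reference frequency.
f_locked, residual_phase = simulate_pll(
    f_ref=10.0, f_free=10.5, kp=20.0, ki=100.0, dt=0.001, steps=5000
)
print(f_locked, residual_phase)
```

In this simplified model the oscillator frequency settles onto the reference and the phase error decays toward zero, which is the sense in which every locked signal ends up sharing the same frequency and phase.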

Because the new sensor uses an array of pixels, the device can produce high-resolution images that can be used to recognize objects as well as detect them.

The prototype has a 32-pixel array integrated on a 1.2 mm square device. A chip this small becomes a potentially viable option for deployment on autonomous vehicles and robots.

“Our low-cost, on-chip sub-terahertz sensors will play a complementary role to LiDAR for when the environment is rough,” says Ruonan Han, director of the Terahertz Integrated Electronics Group in the MIT Microsystems Technology Laboratories and co-author of the paper.

Microsoft announces Azure Kinect vision system

Microsoft (Redmond, WA, USA; www.microsoft.com) has leaned in to the rejection of the Kinect by the entertainment industry and its adoption instead by the medical and scientific communities, announcing a new version of the Kinect device with advanced artificial intelligence meant to power computer vision and speech applications.

The original Microsoft Kinect device, released in 2010, was intended for video gaming and never took off in that direction. However, the medical community in particular adopted and adapted the Kinect for a variety of uses. Now, Microsoft has announced the Azure Kinect DK, a new device that is squarely aimed at the health and life sciences, retail, and manufacturing industries and will begin shipping in late June 2019.

The Azure Kinect DK is equipped with a 1 MPixel Time-of-Flight depth sensor that can provide 640 x 576 or 512 x 512 at 30 fps, or 1024 x 1024 at 15 fps; an RGB camera based on an OmniVision (Santa Clara, CA, USA; www.ovt.com) OV12A10 12 MPixel CMOS rolling shutter sensor that can provide 3840 x 2160 at 30 fps; a 7-microphone circular array; a 3-axis accelerometer; and a 3-axis gyroscope. The device is designed to be synchronized with multiple Kinect cameras simultaneously and to be integrated with Microsoft’s Azure cloud services, including Azure Cognitive Services, to enable the use of Azure Machine Learning.
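The resolution and frame rate figures above translate into pixel throughput as follows. This is a rough sketch: the mode list comes from the figures quoted here, while the 2 bytes per depth pixel is an assumption made for illustration, and the names are hypothetical rather than part of any Microsoft SDK.

```python
# (width, height, frames per second), as quoted in the article
DEPTH_MODES = [(640, 576, 30), (512, 512, 30), (1024, 1024, 15)]
RGB_MODE = (3840, 2160, 30)

ASSUMED_DEPTH_BYTES_PER_PIXEL = 2  # assumption, not stated in the article

def pixels_per_second(width, height, fps):
    """Number of pixels the sensor delivers each second in a given mode."""
    return width * height * fps

def raw_depth_megabytes_per_second(width, height, fps,
                                   bytes_per_pixel=ASSUMED_DEPTH_BYTES_PER_PIXEL):
    """Uncompressed depth data rate in MB/s under the assumed pixel size."""
    return pixels_per_second(width, height, fps) * bytes_per_pixel / 1e6

for mode in DEPTH_MODES:
    print(mode, pixels_per_second(*mode), "pixels/s,",
          round(raw_depth_megabytes_per_second(*mode), 1), "MB/s (assumed 2 B/pixel)")
print(RGB_MODE, pixels_per_second(*RGB_MODE), "pixels/s")
```

The 1024 x 1024 mode delivers the most depth pixels per second despite its lower frame rate, which is one reason the choice of mode matters when several cameras share a host.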

According to Microsoft, the new Kinect device is appropriate for a wide range of applications, including physical therapy and patient rehabilitation, inventory management, smart palletizing and depalletizing, part identification, anomaly detection, and incorporation into robotics platforms.

Microsoft is also promoting a set of software development kits (SDK) and application programming interfaces (API) for the Azure Kinect DK, including a Body Tracking SDK that can observe and estimate 3D joints and landmarks in order to measure human movement, and a Vision API that can enable image processing algorithms, optical character recognition, or image categorization.

The camera measures 103 x 39 x 126 mm and features a USB 3.1 Gen 1 interface with a Type-C connector. It can operate in an ambient temperature range of 10° C to 25° C.

Russian autonomous vehicle project to develop fleet of self-driving trucks

The Russian mining industry management system developer VIST Group (Moscow, Russia; http://vistgroup.ru/en/), a subsidiary of industrial digital technology corporation Zyfra (Helsinki, Finland; https://zyfra.com/en/), has teamed up with Russian truck and engine manufacturer KAMAZ (Naberezhnye Chelny, Russia; https://kamaz.ru/en/) and Nazarbayev University (Astana, Kazakhstan; https://nu.edu.kz/) to launch an autonomous truck initiative. The hope is to develop an autonomous KAMAZ truck fleet for long-distance transportation.

This new project builds on VIST’s success in a collaboration with Belarusian earthmoving equipment manufacturer BelAZ (Zhodzina, Belarus; http://belaz.ca/). In late 2018, at a testing ground in Belarus, VIST and BelAZ demonstrated a remote-operated 130-ton BelAZ-751R dump truck and a fully automated BelAZ-78250 front loader as they worked together to move mounds of dirt.

The demonstration was part of VIST’s “Intelligent Mine” project, which saw the development of autonomous mining equipment designed to decrease the risk of human injury while allowing for 24-hour production.

For VIST’s new collaboration, a KAMAZ 5490 Neo chassis, designed as a flagship for the KAMAZ fleet, will be fitted with six Orlaco (Barneveld, Netherlands; www.orlaco.com) EMOS low-latency Ethernet cameras, with aperture angles of 60° or 120°. The EMOS camera is ruggedized for use with onboard computer systems in trucks and heavy machinery, has a latency below 100 ms, and can stream MJPEG or H.264 via RTP and AVB protocols. The KAMAZ 5490 will also be equipped with short- and long-range radars manufactured by Continental Automotive (Hanover, Germany; www.continental-corporation.com), and a VLP-16 “Puck” LiDAR manufactured by Velodyne (San Jose, CA, USA; www.velodynelidar.com). An NVIDIA (Santa Clara, CA, USA; www.nvidia.com) Jetson TX2 module will process and synchronize the data received by the various sensors.
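To put the camera latency figure in context, the short sketch below estimates how far a truck travels before a frame reaches the computer. It treats 100 ms as an upper bound, as quoted above. The 90 km/h highway speed is an assumed value for illustration, and the function name is hypothetical.

```python
def distance_during_latency_m(speed_kmh, latency_s):
    """Distance covered, in meters, while one camera frame is in transit."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_s

CAMERA_LATENCY_S = 0.100   # upper bound quoted for the EMOS camera
ASSUMED_SPEED_KMH = 90.0   # assumption, not a project specification

print(distance_during_latency_m(ASSUMED_SPEED_KMH, CAMERA_LATENCY_S), "m")
```

At the assumed speed the truck covers about 2.5 m during 100 ms, so the perception software has to account for that offset when it combines camera frames with radar and LiDAR data.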

“Computer vision can be used to solve tasks such as lane following, traffic light and sign recognition, and object detection and classification, which are not possible with conventional sensors, including radars and lidars,” says a researcher on the project. “The current state of the technology does not allow precisely and robustly identifying the distance, spatial measures or velocity of the surrounding objects, however. In this project we plan to blend all possible sensor inputs like cameras, radar, and LiDAR to build a robust autonomous truck where each and every sensor can complement or substitute for other sensors.”
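One common way to blend range estimates from several sensors is inverse-variance weighting, sketched below. This is a generic textbook technique chosen for illustration, not the project's stated method. The function name and all numeric values are hypothetical.

```python
def fuse_estimates(measurements):
    """Combine (value, variance) pairs; None marks an unavailable sensor.

    Each sensor is weighted by the inverse of its variance, so a precise
    sensor dominates and a missing sensor is simply skipped.
    """
    available = [m for m in measurements if m is not None]
    if not available:
        raise ValueError("no sensor data available")
    weights = [1.0 / variance for _, variance in available]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, available)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance

# Assumed distance readings in meters: (value, variance)
camera = (52.0, 25.0)   # cameras are treated here as the least precise for range
radar = (50.0, 1.0)
lidar = (50.5, 0.25)

print(fuse_estimates([camera, radar, lidar]))  # all sensors available
print(fuse_estimates([camera, radar, None]))   # LiDAR dropped out
```

The fused variance is never larger than that of the best available sensor, and the estimate degrades gracefully when one input is missing, which mirrors the "complement or substitute" idea in the quote.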

“We are managing the engineering operations for the adaptation and improvement of computer vision modules for the unmanned control of the Kamaz Neo vehicle,” says project manager Zhandos Yesenbayev, senior researcher at Nazarbayev University. “Together with the VIST Group specialists, who provide us with the necessary additional equipment and software, we have started the project, specifically the operations to introduce vehicle trajectory planning functions into software and generate vehicle control commands to detect and recognize obstacles.”

The project is expected to be finalized by September 2019.