April 2017 snapshots: Super Bowl drones, robot built by high-schooler, Facebook's computer vision algorithms, imaging at the ISS

Drones light up the sky for Super Bowl halftime performance

Collaborating with Pepsi and the NFL, Intel (Santa Clara, CA, USA; www.intel.com) deployed 300 of its Shooting Star drones (http://bit.ly/VSD-SB51-1) to form an image of the American flag during Lady Gaga's halftime performance at Houston's NRG Stadium.

The drones had recently been tested with Disney (http://bit.ly/VSD-SB51-2). This past holiday season, visitors to Disney Springs (a waterfront shopping, dining, and entertainment district) watched 300 of the drones fly "Starbright Holidays, An Intel Collaboration," a synchronized light show in the night sky choreographed to holiday music.

Intel's Shooting Star drones measure 15.11 x 15.11 x 3.66 in. (384 x 384 x 93 mm), have a rotor diameter of 6 in., and can fly for up to 20 minutes. The drones have a maximum takeoff weight of 0.62 lb. and can fly almost one mile away. Built-in LED lights can reportedly create more than 4 billion color combinations. All 300 drones are controlled by one computer and one drone pilot, with a second pilot on hand as a backup. To perform at the halftime show, Intel received a special waiver from the FAA to fly the fleet up to 700 feet, and it also had to obtain a special waiver to fly the drones in the more restrictive Class B airspace.
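Intel has not published how its control software turns a picture into a formation. The sketch below is purely hypothetical: it lays 300 drones out on an assumed 20 x 15 grid (the 3 m spacing and 60 m base altitude are also invented) and colors each drone like a stars-and-stripes flag, leaving the canton solid blue with no stars. A real show system would also have to plan collision-free flight paths between formations, which is not attempted here.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical LED colors as RGB triples; the drones' real palette is not described.
RED: Tuple[int, int, int] = (255, 0, 0)
WHITE: Tuple[int, int, int] = (255, 255, 255)
BLUE: Tuple[int, int, int] = (0, 0, 255)


@dataclass
class Waypoint:
    drone_id: int
    x_m: float                 # horizontal position across the formation, metres
    z_m: float                 # altitude, metres
    rgb: Tuple[int, int, int]  # LED color to show at this position


def flag_formation(cols: int = 20, rows: int = 15,
                   spacing_m: float = 3.0, base_alt_m: float = 60.0) -> List[Waypoint]:
    """Place one drone per grid cell and color it like a stars-and-stripes flag.

    All dimensions are assumptions; the canton is solid blue (no stars).
    """
    waypoints = []
    for r in range(rows):                  # r = 0 is the top row
        for c in range(cols):              # c = 0 is the left (canton) side
            stripe = r * 13 // rows        # map each grid row onto one of 13 stripes
            in_canton = stripe < 7 and c * 5 < 2 * cols  # top 7 stripes, left 40%
            if in_canton:
                rgb = BLUE
            else:
                rgb = RED if stripe % 2 == 0 else WHITE  # stripes alternate, starting red
            waypoints.append(Waypoint(
                drone_id=r * cols + c,
                x_m=c * spacing_m,
                z_m=base_alt_m + (rows - 1 - r) * spacing_m,  # top row flies highest
                rgb=rgb,
            ))
    return waypoints
```

Calling `flag_formation()` returns 300 waypoints, one per drone; changing `cols` and `rows` rescales the pattern for a different fleet size.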

Steve Fund, CMO at Intel, commented on the drones in Forbes (http://bit.ly/VSD-SB51-3).

"We have been using our drone technology to create amazing experiences. We put the Intel logo in the sky in Germany. We recently partnered with Disney in their fireworks show: they used drones instead of fireworks. We can program them to create any pattern that you want. We think we're doing something that's unique," he said. "We knew our technology would be utilized in the game. We just think it takes things to the next level."

While the technology and the end result are undeniably impressive, Wired (http://bit.ly/VSD-SB51-4) notes that the drone performance was recorded on an earlier night and was not flown live for the crowd at Super Bowl LI.

NVIDIA high-school intern builds humanoid robots

Sixteen-year-old NVIDIA (Santa Clara, CA, USA; www.nvidia.com) intern Prat Prem Sankar's interest in robotics began more than five years ago, when his father bought him a Lego (Billund, Denmark; www.lego.com) Mindstorms NXT set, a programmable version of the toy. At that point, he said, he knew he wanted to be a robotics engineer. Flash forward to the 2016 GPU Technology Conference, the world's largest event for GPU developers, where he sat in on a tutorial on deep learning. This, Sankar said, is where he first saw what deep learning could be used for.

"It was the kind of technology I wanted to see in robotics. I knew right away I had to be part of the company."

Two months later, he joined NVIDIA as a summer intern and was given the chance to apply deep learning by building three humanoid robots with Jetson TX1 embedded system developer kits, which are capable of running complex deep neural networks.

The Jetson TX1 features a 1-teraflop, 256-core NVIDIA Maxwell GPU, a 64-bit ARM Cortex-A57 CPU, 4K video encode (30 Hz) and decode (60 Hz), and a camera interface that can handle up to six cameras, or 1400 MPixels/s. Sankar's robots, which he named Cyclops, were trained by showing them thousands of images from the internet. In a video of the project, a Cyclops robot "looks" at an apple through a Logitech webcam, recognizes it, and keeps it within its field of view. According to Sankar, the robot identifies the object as an apple and is "confirming it constantly, checking back with the Jetson."

"The second that apple drops out of frame, and you put an orange [in frame] or replace it with some other kind of fruit, the robot continues searching; it's still looking for the apple. Deep learning is flashing thousands of images of apples, oranges, bananas, and other types of fruit (or whatever objects you want), and it creates a network, saying if it is red, go here, if it's a little round, go here."

In addition to his internship at NVIDIA, Sankar continues to build robots with his FIRST robotics team, the Arrowbotics. As the vice president of engineering, he has helped his team excel in competitions. Earlier this year, they built an obstacle-tackling robot that made it to the quarterfinals in the Silicon Valley Regional FIRST Robotics Competition. NVIDIA was recently named a gold-level supplier of the FIRST Robotics Competition, which is part of the company's effort to inspire more young students like Sankar to become science and technology innovators.
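The apple-tracking behavior Sankar describes amounts to a simple rule: follow the target class while the network reports it, and keep searching otherwise, even if another fruit is confidently detected. The following is a hypothetical Python sketch of that control logic only. The `Detection` type, the confidence threshold, the pan gain, and the search step are all assumptions, and this is not the code that runs on the Cyclops robots or the Jetson.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    label: str         # class predicted by a (hypothetical) neural network
    confidence: float  # score for that class, 0..1
    center_x: float    # horizontal center of the object, normalized 0..1 across the frame


def pan_command(detections: List[Detection], target: str = "apple",
                min_conf: float = 0.6, gain: float = 40.0,
                search_step: float = 10.0) -> float:
    """Return a pan adjustment in degrees for one camera frame.

    If the target class is visible, steer proportionally toward it; otherwise keep
    sweeping, ignoring other classes (e.g. an orange) however confident they are.
    """
    best: Optional[Detection] = None
    for d in detections:
        if d.label == target and d.confidence >= min_conf:
            if best is None or d.confidence > best.confidence:
                best = d
    if best is None:
        return search_step            # target lost: continue the search sweep
    error = best.center_x - 0.5       # positive when the target is right of center
    return gain * error               # proportional correction back toward center
```

A proportional correction keeps the target near the center of the frame, while the fixed search step reproduces the "it's still looking for the apple" behavior when only other fruit is in view.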

Editor's note: NVIDIA recently introduced the Jetson TX2. More information is available here: http://bit.ly/VSD-TX2

Facebook computer vision algorithms caption your photos

Most Facebook (Menlo Park, CA, USA; www.facebook.com) users know by now that when you upload an image of yourself or one of your friends, the social networking website uses facial recognition algorithms to suggest whom you might "tag" in the image. What some users may not know is that Facebook also tags photos with data such as how many people are in a photo, the setting of the photo, and even whether or not someone is smiling.

In April 2016, Facebook rolled out (http://bit.ly/VSD-FB-1) automated alternative (alt) text in Facebook for iOS, which uses computer vision algorithms that perform object recognition to give blind and visually impaired people a text description of a photo. Users with a screen reader can access Facebook on an iOS device and hear a list of items that may be shown in a photo.

A new Chrome extension now shows what Facebook has automatically detected in your photos using deep learning. "Show Facebook Computer Vision for Chrome" displays the alt tags Facebook adds to the images you upload, which are populated with keywords representing the content of those images. According to the extension's developer, Adam Geitgey (http://bit.ly/VSD-FB-2), Facebook labels your images using a deep convolutional network built by Facebook's AI Research (FAIR) team.

"On one hand, this is really great," said the developer. "It improves accessibility for blind users who depend on screen readers which are only capable of processing text. But I think a lot of internet users don't realize the amount of information that is now routinely extracted from photographs."

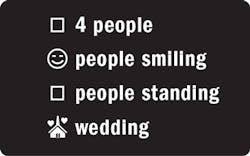
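The Chrome extension works by surfacing text that Facebook already places in each image's alt attribute. As an illustration only, here is a Python sketch of the same idea; the real extension runs in the browser and is not reproduced here. It assumes the automatic alt text begins with "Image may contain:" and lists keywords separated by commas and "and". That format is an assumption, and a keyword that itself contains the word "and" would be split incorrectly.

```python
import re
from html.parser import HTMLParser
from typing import List

PREFIX = "Image may contain:"  # assumed prefix of Facebook's automatic alt text


class AltTextCollector(HTMLParser):
    """Collect the alt attribute of every <img> tag in an HTML fragment."""

    def __init__(self) -> None:
        super().__init__()
        self.alts: List[str] = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags also reach this method via handle_startendtag.
        if tag == "img":
            alt = dict(attrs).get("alt")
            if alt:
                self.alts.append(alt)


def parse_tags(alt: str) -> List[str]:
    """Split an automatic alt string into keywords; other alt text yields []."""
    if not alt.startswith(PREFIX):
        return []
    body = alt[len(PREFIX):].strip()
    parts = re.split(r",\s*|\s+and\s+", body)
    return [p.strip() for p in parts if p.strip()]


def tags_in_page(html: str) -> List[List[str]]:
    """Return the keyword list for each <img> on the page, in document order."""
    collector = AltTextCollector()
    collector.feed(html)
    collector.close()
    return [parse_tags(a) for a in collector.alts]
```

For example, `tags_in_page('<img alt="Image may contain: 2 people, people smiling and outdoor">')` would return `[['2 people', 'people smiling', 'outdoor']]`; the alt string there is made up for the example.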

I put the extension to the test myself and searched for an image that might produce interesting results. The image was from a wedding and showed me with three friends, two of whom were the bride and groom. The word "wedding" was not in the caption, nor did it appear anywhere in the image. The results are shown to the right.

While the computer vision algorithms may leave out some details and do not always produce results that are 100% accurate, in this case they were correct, as they were for the image above, which I took with my phone on a vacation in Maine this past summer. Other companies are doing this as well, including Google, which developed machine learning software that can automatically produce captions describing images as they are presented to the user. According to a May Vision Systems Design article (http://bit.ly/VSD-FB-3), the software may eventually help visually impaired people understand pictures, provide cues to operators examining faulty parts, and automatically tag such parts in machine vision systems.

Vision system to enable autonomous spacecraft rendezvous

NASA's (Washington, D.C.; www.nasa.gov) Raven technology module launched aboard the 10th SpaceX (Hawthorne, CA, USA; www.spacex.com) commercial resupply mission on February 19. It features a vision system composed of visible, infrared, and lidar sensors and will be affixed outside the International Space Station (ISS) to test technologies that will enable autonomous rendezvous.

Through Raven, NASA says it will be one step closer to having a relative navigation capability that it can take "off the shelf" and use with minimum modifications for many missions, and for decades to come. Raven's technology demonstration objectives are three-fold:

• Provide an orbital testbed for satellite-servicing relative navigation algorithms and software.

• Demonstrate that multiple rendezvous paradigms can be accomplished with a similar hardware suite.

• Demonstrate an independent visiting vehicle monitoring capability.

Raven's visible camera, the VisCam, was originally manufactured for Hubble Space Telescope Servicing Mission 4 on STS-125. The 28 V camera features an IBIS5 1300 B CMOS image sensor from Cypress Semiconductor (San Jose, CA, USA; www.cypress.com), a 1280 x 1024 focal plane array with a 6.7 μm pixel size that outputs a 1000 x 1000-pixel monochrome image over a dual data-strobed Low Voltage Differential Signaling (LVDS) physical interface.

The camera is paired with a commercially available, radiation-tolerant 8 - 24 mm zoom lens that has been ruggedized for spaceflight by NASA's Goddard Space Flight Center. The motorized lens provides zoom and focus adjustment via two half-inch stepper motors. The adjustable iris on the commercial version of the lens has been replaced with a fixed f/4.0 aperture. The VisCam provides a 45° x 45° FOV at the 8 mm setting and a 16° x 16° FOV at the 24 mm setting. According to NASA, the combination of the fixed aperture with the variable focal length and focus adjustments yields a depth of field extending from approximately four inches in front of the lens out to infinity. The VisCam assembly also includes a stray light baffle coated with N-Science's ultra-black Deep Space Black coating, which protects the VisCam from unwanted optical artifacts caused by the dynamic lighting conditions in low Earth orbit.
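The quoted fields of view can be sanity-checked with the pinhole-camera relation FOV = 2·atan(w / 2f), where w is the width of the imaged area on the sensor and f is the focal length. The sketch below assumes the field is set by the 1000-pixel output window at the 6.7 μm pitch (w = 6.7 mm) and ignores lens distortion; under those assumptions it gives roughly 45.4° at 8 mm and 15.9° at 24 mm, close to NASA's 45° and 16° figures.

```python
import math

PIXEL_PITCH_MM = 0.0067  # 6.7 um pixel pitch of the IBIS5 1300 B sensor (from the text)
OUTPUT_PIXELS = 1000     # VisCam outputs a 1000 x 1000-pixel image (from the text)


def fov_deg(focal_length_mm: float,
            pixels: int = OUTPUT_PIXELS,
            pitch_mm: float = PIXEL_PITCH_MM) -> float:
    """Full field of view in degrees for an ideal, distortion-free pinhole model."""
    width_mm = pixels * pitch_mm  # size of the imaged area on the sensor
    return math.degrees(2.0 * math.atan(width_mm / (2.0 * focal_length_mm)))


for focal_length in (8.0, 24.0):  # the two ends of the zoom range
    print(f"{focal_length:.0f} mm: {fov_deg(focal_length):.1f} deg")
```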

Raven's infrared camera, the IRCam, is a longwave infrared (LWIR) camera sensitive in the 8 - 14 μm wavelength range. The camera features a 640 x 480-pixel U6010 vanadium oxide microbolometer array from DRS Technologies (Arlington, VA, USA; www.drs.com) and has an internal shutter for on-orbit camera calibration and flat-field correction. The camera connects over a USB 2.0 interface and includes an athermalized 50 mm f/1.0 lens that yields an 18° x 14° FOV.
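An internal shutter enables a common calibration trick: with the shutter closed, every pixel views the same uniform surface, so any pixel-to-pixel difference in that frame is fixed-pattern noise that can be stored and subtracted from later frames. The sketch below shows this one-point (offset-only) correction in plain Python. NASA has not described the IRCam's actual calibration procedure here, and a full flat-field correction would typically also estimate a per-pixel gain from a second reference level.

```python
from typing import List

Frame = List[List[float]]  # a 2-D image stored as rows of pixel values


def offset_map(shutter_frame: Frame) -> Frame:
    """Per-pixel offsets from a frame captured with the shutter closed.

    The closed shutter presents a (nearly) uniform scene, so each pixel's
    deviation from the frame mean is treated as a fixed-pattern offset.
    """
    pixels = [v for row in shutter_frame for v in row]
    mean = sum(pixels) / len(pixels)
    return [[v - mean for v in row] for row in shutter_frame]


def correct(raw: Frame, offsets: Frame) -> Frame:
    """Subtract the stored offsets from a scene frame (one-point correction)."""
    return [[v - o for v, o in zip(raw_row, off_row)]
            for raw_row, off_row in zip(raw, offsets)]
```

In use, `offset_map` would be refreshed whenever the shutter closes, and `correct` applied to every scene frame in between.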

Also included in Raven's sensor payload is a flash lidar, which collects data by first illuminating the scene with a wide-beam 1572 nm laser pulse and then collecting the reflected light on a 256 x 256 focal plane array. By timing the interval between transmission and reception, the focal plane array can accurately measure the distance to the reflecting surface, as well as the return intensity, at up to 30 Hz.
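The range measurement rests on time of flight: the laser pulse travels to the surface and back, so the distance is d = c·t / 2, where t is the measured round-trip time. A minimal sketch follows; applying it to every element of the 256 x 256 array yields a range image. The functions are illustrative and assume propagation at the vacuum speed of light.

```python
from typing import List

C_M_PER_S = 299_792_458.0  # speed of light in vacuum, m/s


def range_m(t_s: float) -> float:
    """Distance to the reflecting surface; the pulse covers it twice (out and back)."""
    return C_M_PER_S * t_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Inverse: round-trip time expected for a surface at distance_m."""
    return 2.0 * distance_m / C_M_PER_S


def range_image(times_s: List[List[float]]) -> List[List[float]]:
    """Convert a 2-D array of per-pixel round-trip times into a range image."""
    return [[range_m(t) for t in row] for row in times_s]
```

A 100 ns round trip corresponds to about 15 m, and each nanosecond of timing error shifts the range by roughly 15 cm, which is why per-pixel timing precision matters for a flash lidar.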

Five days after launch, the Dextre robotic arm removed Raven from the unpressurized "trunk" of the SpaceX Dragon spacecraft and attached it to a payload platform outside the ISS. There, Raven provides information for the development of a mature, real-time relative navigation system.

"Two spacecraft autonomously rendezvousing is crucial for many future NASA missions, and Raven is maturing this never-before-attempted technology," said Ben Reed, deputy division director of the Satellite Servicing Projects Division (SSPD), the office at NASA's Goddard Space Flight Center in Greenbelt, Maryland, that is developing and managing this demonstration mission.

While on the ISS, Raven's components will gather images of and track spacecraft arriving at and departing from the space station. Raven's sensors will feed images to a processor, which will use pose algorithms to estimate the relative position and orientation of the spacecraft Raven is tracking. Based on these calculations, the processor will send commands that swivel Raven on its gimbal to keep the sensors trained on the vehicle. Meanwhile, NASA operators on the ground will evaluate Raven's capabilities and make adjustments to improve performance.
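Keeping the sensors trained on a visiting vehicle amounts to converting the estimated relative position into gimbal angles and slewing toward them. The Python sketch below shows that step under stated assumptions: an invented sensor frame (+x along the boresight at zero angles, +y left, +z up), a simple azimuth/elevation gimbal, and a per-update step limit. It uses only the position part of the pose and is not Raven's flight software.

```python
import math
from typing import Tuple


def gimbal_angles(x: float, y: float, z: float) -> Tuple[float, float]:
    """Azimuth and elevation (degrees) that point the boresight at (x, y, z).

    Assumed frame: +x along the boresight at zero angles, +y to the left, +z up.
    """
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation


def step_toward(current: Tuple[float, float], desired: Tuple[float, float],
                max_step_deg: float) -> Tuple[float, float]:
    """Move at most max_step_deg per axis toward the desired (azimuth, elevation)."""
    def clamp(delta: float) -> float:
        return max(-max_step_deg, min(max_step_deg, delta))

    # Wrap the azimuth error into [-180, 180) so the gimbal takes the short way around.
    az_error = (desired[0] - current[0] + 180.0) % 360.0 - 180.0
    el_error = desired[1] - current[1]
    new_az = (current[0] + clamp(az_error) + 180.0) % 360.0 - 180.0  # keep in [-180, 180)
    return new_az, current[1] + clamp(el_error)
```

Limiting each update's step keeps commands small and smooth, and wrapping the azimuth error avoids a near-full rotation when the target crosses the ±180° boundary.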

Over two years, Raven will test these technologies, which are expected to support future NASA missions for decades to come.

View a technical paper on Raven at http://bit.ly/VSD-RAVEN.