Novel thermal imagers fill gap in autonomous vehicle sensor suite

To drive at night, autonomous vehicles need thermal imaging sensors

Yakov Shaharabani

For automakers working toward full autonomy, one of the key obstacles in developing autonomous vehicles (AVs) is driving at night.

According to the Insurance Institute for Highway Safety, pedestrian fatalities are rising fastest during the hours after dark, and more than three-quarters of pedestrian deaths happen at night. Among them was the victim of the now-infamous Uber crash in Arizona in March of this year. Until the challenges of night driving are resolved, the development, and ultimately the deployment, of autonomous vehicles cannot move forward.

Current sensors cannot detect everything

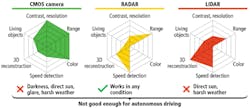

One main reason fully autonomous vehicles have yet to take over the roads is that they lack sensing technologies capable of giving them sight and perception in every scenario. Many OEMs currently use LiDAR, radar, and cameras, but each of these has perception problems that limit the vehicle’s autonomy and require a human driver to be ready to take control at any moment. These sensors are particularly compromised at night.

For example, radar can detect objects at long range but cannot clearly identify them. Cameras are better at accurately identifying objects but can do so reliably only at close range. Many automakers therefore deploy radar and cameras together: radar detects an object far down the road, and the camera classifies the object as it approaches.
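The radar-then-camera workflow described above can be sketched as a simple handoff rule. This is a minimal illustration under stated assumptions, not any automaker’s actual logic: the `Detection` type, the `update_label` function, and the 50 m camera range are hypothetical, chosen only to show where the detected-but-unclassified gap comes from.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical range (metres) within which the camera is assumed to classify reliably.
CAMERA_CLASSIFY_RANGE_M = 50.0


@dataclass
class Detection:
    range_m: float          # distance to the object, as measured by radar
    label: str = "unknown"  # radar alone cannot say what the object is


def update_label(det: Detection, camera_label: Optional[str]) -> Detection:
    """Attach the camera's class label once the object is close enough.

    Beyond the assumed camera range, the radar track keeps the label "unknown";
    inside it, a camera label (if one is available) replaces it.
    """
    if det.range_m <= CAMERA_CLASSIFY_RANGE_M and camera_label is not None:
        det.label = camera_label
    return det


far = update_label(Detection(range_m=120.0), "pedestrian")   # stays "unknown"
near = update_label(Detection(range_m=30.0), "pedestrian")   # becomes "pedestrian"
print(far.label, near.label)
```

Between the radar’s detection range and the camera’s classification range, the object is detected but not yet identified, which is exactly the window in which a misclassification can matter.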

Many automakers also equip their autonomous vehicles with LiDAR (light detection and ranging) sensors. Like radar, LiDAR works by sending out signals and using the reflection of those signals to measure the distance to an object. (Radar uses radio signals, and LiDAR uses lasers or light waves.) While LiDAR delivers a wider field of view than radar and appears to provide adequate detection and coverage for autonomous vehicles, it is currently too expensive for mass-market deployment. Several companies are working to bring the cost down, but so far the cheaper designs are lower-resolution sensors that cannot provide the coverage needed for Level-5 autonomy.
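Both radar and LiDAR infer distance from the round-trip time of the pulse they emit: the signal travels to the object and back, so the range is half the round-trip path. A minimal sketch, assuming propagation at the vacuum speed of light (close enough in air for illustration):

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the metre


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a reflecting object from the echo's round-trip time.

    The pulse covers the distance twice (out and back), hence the factor of 2.
    This applies to radar (radio waves) and LiDAR (laser light) alike, since
    both travel at roughly the speed of light in air.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# An echo arriving 1 microsecond after the pulse left corresponds to about 150 m.
print(f"{range_from_round_trip(1e-6):.1f} m")  # → 149.9 m
```

A passive thermal camera has no equivalent of this calculation, because it emits nothing and therefore has no echo to time.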

Figure 1: Current sensing technologies suffer from perception problems. CMOS camera, radar, and LiDAR cannot function in dynamic lighting or harsh weather conditions.

The added challenge of night driving

To compensate for the weaknesses of current sensors, many automakers combine multiple sensor types into a redundant sensing network, so that when one sensor fails to detect an object, another can back it up.

But even with several sensors working together, today’s AVs still cannot achieve Level-5 autonomy. The problem is primarily one of classification: together, radar, LiDAR, and cameras may detect every object in the vehicle’s surroundings, yet still fail to classify those objects correctly. At night, this problem becomes even more serious, and more dangerous.

Consider the Uber crash. According to a report from the National Transportation Safety Board (http://bit.ly/VSD-NTSB), the vehicle detected the pedestrian about six seconds before the crash, but the autonomous driving system classified her first as an unknown object, then as a vehicle, and then as a bicycle. In other words, the vehicle’s sensors detected the victim, but its software wrongly determined that she wasn’t in danger and that no evasive action was required.

This is a classification failure: the AV successfully detects an object but wrongly classifies it. AV software is deliberately programmed to ignore certain objects, like an errant plastic bag or newspaper flicking across the street, so that it does not brake for false positives. These accommodations are necessary for autonomous vehicles to drive smoothly, especially on high-speed roads. However, Uber’s fatal incident shows that autonomous vehicle software still struggles to tell real hazards from harmless objects, with perilous results. With pedestrians sharing the road with AVs, there is no room for error in classifying objects; any mistake is extremely dangerous and possibly fatal.

Until the detection and classification process of current sensing solutions improves, autonomous vehicles will not be able to operate safely and reliably amongst pedestrians at night, and we may never see the deployment of Level-5 AVs. To safely detect and classify every pedestrian, autonomous vehicles require a new perception solution: thermal sensors.

Figure 2: A side-by-side comparison of a low-light-sensitive camera and Viper shows that objects missed by current sensing technology are visible with an FIR solution.

The only solution to safe night driving is thermal sensors

A new type of sensor using far-infrared (FIR) technology can provide the complete and reliable coverage needed to make AVs safe and functional in any environment, day or night.

Unlike radar and LiDAR sensors, which must transmit signals and receive their reflections, an FIR camera simply senses the heat that objects radiate, making it a “passive” technology. Because it operates in the long-wave infrared band (roughly 8 to 14 µm) rather than the visible and near-infrared wavelengths used by cameras and most LiDAR, a far-infrared camera generates a new layer of information, detecting objects that may not otherwise be perceptible to camera, radar, or LiDAR sensors.
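The band a thermal camera targets follows from Wien’s displacement law, λmax = b / T, which gives the wavelength at which a body at temperature T radiates most strongly. The sketch below uses round-number temperatures assumed for illustration (body heat, a cool road at night, and the Sun’s surface at roughly 5778 K). It shows that warm objects on the road peak near 9 to 10 µm, far from the visible range of about 0.4 to 0.7 µm where sunlight peaks:

```python
WIEN_B_M_K = 2.897771955e-3  # Wien's displacement constant, metre-kelvin


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (micrometres) of peak blackbody emission at the given temperature."""
    return WIEN_B_M_K / temperature_k * 1e6


# Illustrative temperatures in kelvin (assumed round numbers, not measurements).
scenes = [("human body", 310.0), ("road at night", 283.0), ("Sun", 5778.0)]
for name, t_k in scenes:
    print(f"{name:>14}: {peak_wavelength_um(t_k):5.2f} um")
```

Real objects are not perfect blackbodies, so this only locates the peak of their emission; it does not describe any particular camera’s spectral response.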

Rather than reflected light, an FIR camera measures the thermal radiation an object emits, which depends on both the object’s temperature and its emissivity (how effectively its surface emits heat). Because objects differ in temperature and emissivity, they stand out from their surroundings in a thermal image even in complete darkness. Most importantly, the strong thermal signature of the human body helps thermal sensors quickly detect an object and classify it as a human or an inanimate object.
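The combined role of temperature and emissivity can be made concrete with the Stefan–Boltzmann law, M = εσT⁴, which gives the total power per unit area a surface radiates. The emissivities and temperatures below are assumed, textbook-style values chosen for illustration (human skin is commonly quoted near 0.98), not measurements from any sensor:

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def radiant_exitance(emissivity: float, temperature_k: float) -> float:
    """Total thermal power emitted per unit area (W/m^2) by a grey-body surface."""
    return emissivity * SIGMA * temperature_k ** 4


# Assumed, illustrative values: skin at ~34 C, asphalt cooled to ~10 C at night.
skin = radiant_exitance(0.98, 307.0)
asphalt = radiant_exitance(0.93, 283.0)

print(f"skin:     {skin:6.1f} W/m^2")
print(f"asphalt:  {asphalt:6.1f} W/m^2")
print(f"contrast: {skin - asphalt:6.1f} W/m^2")
```

A difference of this size is why a pedestrian can stand out against the road in a thermal image with no ambient light at all. Real scenes are messier (clothing, reflections, sun-warmed surfaces), so these numbers are only indicative.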

With this information, an FIR camera can build a detailed picture of the roadway at both near and far range. Thermal FIR also detects lane markings and the positions of pedestrians, and can, in most cases, determine whether a pedestrian is stepping off the sidewalk to cross the road. The vehicle can then predict whether it is at risk of hitting the pedestrian. This helps avoid dangerous misclassifications and enables AVs to operate independently and safely in any kind of environment, urban or rural, day or night.
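One simple way a vehicle could turn a tracked pedestrian position into a risk estimate is a constant-velocity closest-approach check. The sketch below is hypothetical and is not AdaSky’s or any OEM’s method: the `Track` type and `at_risk` function are illustrative names, positions and velocities are expressed in the vehicle’s frame (x ahead, y to the left), and the 2 m safety radius and 6 s horizon are arbitrary choices.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    x: float   # metres ahead of the vehicle
    y: float   # metres to the left of the vehicle centreline
    vx: float  # m/s, relative to the vehicle
    vy: float  # m/s, relative to the vehicle


def closest_approach(ped: Track, horizon_s: float = 6.0) -> tuple[float, float]:
    """Time (s) and distance (m) of closest approach to the vehicle origin,
    assuming the relative velocity stays constant over the horizon."""
    speed_sq = ped.vx ** 2 + ped.vy ** 2
    if speed_sq == 0.0:
        t = 0.0
    else:
        # Minimise |p0 + v t| over t, then clamp t to [0, horizon].
        t = -(ped.x * ped.vx + ped.y * ped.vy) / speed_sq
        t = min(max(t, 0.0), horizon_s)
    dx = ped.x + ped.vx * t
    dy = ped.y + ped.vy * t
    return t, math.hypot(dx, dy)


def at_risk(ped: Track, safety_radius_m: float = 2.0, horizon_s: float = 6.0) -> bool:
    """Flag the pedestrian if the predicted miss distance is inside the safety radius."""
    _, distance = closest_approach(ped, horizon_s)
    return distance < safety_radius_m


# Vehicle driving at 17 m/s; pedestrian 60 m ahead and 5 m to the left.
crossing = Track(x=60.0, y=5.0, vx=-17.0, vy=-1.4)  # walking toward the lane
waiting = Track(x=60.0, y=5.0, vx=-17.0, vy=0.0)    # standing on the sidewalk
print(at_risk(crossing), at_risk(waiting))
```

Because the vehicle is moving forward, a stationary pedestrian appears to approach at the vehicle’s own speed, which is why both tracks have `vx=-17.0`; only the lateral motion of the crossing pedestrian brings the predicted miss distance inside the safety radius.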

One company developing FIR technology for AVs is AdaSky, an Israeli startup that recently developed Viper, a high-resolution thermal camera that passively collects FIR signals, converts them into VGA video, and applies deep learning algorithms to sense and analyze its surroundings.

The future of autonomous vehicle technology must include thermal sensors

At present, OEMs are still weighing the cost of adding FIR thermal sensors to their AVs, and few have taken the plunge. Newer sensor companies, such as AdaSky, hope to change this with technology designed to scale to the mass market.

Ultimately, the mass-market deployment of fully autonomous vehicles depends on FIR technology, as it is the only sensing solution capable of providing dependable detection and classification of a vehicle’s surroundings in any environment, day or night.

Yakov Shaharabani, CEO, AdaSky (Yokneam Illit; Israel; www.adasky.com)