May 2018 snapshots: Amazon’s robots, autonomous driving software, collaborative robots, and artificial intelligence development

Autonomous driving software company AImotive receives $38 million in funding round

AImotive (Budapest, Hungary; https://aimotive.com/), a full-stack autonomous vehicle technology company, has received $38 million USD in a Series C funding round.

The funding round was led by B Capital Group and Prime Ventures (Amsterdam, The Netherlands; www.primeventures.com), with participation from Cisco Investments (San Jose, CA, USA; www.ciscoinvestments.com), Samsung Catalyst Fund (Menlo Park, CA, USA; https://samsungcatalyst.com), and Series A and B investors Robert Bosch Venture Capital (Stuttgart, Germany; www.rbvc.com), Inventure (Moscow, Russia; http://inventurepartners.com), Draper Associates (Menlo Park, CA, USA; www.draper.vc), and Day One Capital (Budapest, Hungary; www.dayonecapital.com). The funding will be used to continue the development of AImotive’s proprietary autonomous driving technology, which relies primarily on cameras and artificial intelligence-based vision processing.

AImotive’s technology, according to the company, is inherently scalable due to its “low-cost modularity and flexibility, while also open to the fusion of non-vision based sensors for additional safety in poor visibility conditions.” With minimal additional costs, AImotive’s software can reportedly be ported into various car models, driving in diverse locations around the world. The solution is designed for automotive OEMs, mobility service providers, and other mobility players, according to the company, which is working extensively with established automotive players including Groupe PSA (Paris, France; https://www.groupe-psa.com/en), SAIC Motor (Shanghai, China; http://www.saicusa.com), and Volvo (Gothenburg, Sweden; www.volvo.com).

“As a fund with many years of investment experience in deep technologies, we are excited to be part of shaping the next chapter in automotive,” said Monish Suri, partner at Prime Ventures. “What the AImotive team has been able to achieve to date, leveraging simulation technology, is very impressive, and we are looking forward to helping the company grow further and bring their technology into production.”

AImotive describes its product, aiDrive, as a full-stack software suite, with algorithms relying on cameras as primary sensors for perception, localization, decision making, trajectory planning, and vehicle control. aiDrive is built from four hierarchical software engines, each responsible for a key component of the self-driving technology, combined into one hardware-agnostic suite. The four engines are described by the company as follows:

• Recognition engine: Identifies objects and interprets the environment around the vehicle in real time, utilizing a “camera-first approach,” fusing low- and high-level information from radar and ultrasonic sensors. Recognition tasks are performed using machine learning techniques and deep neural networks.

• Location engine: Integrates AImotive’s in-house navigation engine to enhance positioning precision, utilizing vision-based localization and mapping algorithms optimized for parallel computing hardware. The component relies on sensor fusion data to study its environment and uses conventional and AI-based methods for feature extraction, ego-motion calculation and localization, assuring compatibility with all major HD landmark databases.

• Motion engine: Handles the complete self-driving decision chain, relying on the abstract 3D environment created by the Recognition and Location engines. This engine explores the surroundings of the vehicle with object tracking and state prediction techniques, integrating probability-based behavior elements. It also uses reinforcement learning to choose the next maneuver, and translates high-level routing, detection, and fused sensor information to the local trajectory, taking the physical constraints of the vehicle into account.

• Control engine: Enables vehicle control through low-level actuator commands, integrating AImotive’s in-house developed drive-by-wire solution with the corresponding APIs. This engine translates and communicates the calculated dynamic trajectory to the vehicle, and provides a universal, safety-compliant platform, which satisfies local public road testing requirements.
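As a rough illustration of how four such engines might be chained, the Python sketch below passes a shared state through recognition, location, motion, and control stages in order. It is not AImotive's code: every function name, data field, and threshold here is a hypothetical stand-in, and a simple distance rule takes the place of the learned decision making the company describes.

```python
from typing import Callable, Dict, List

# Each stage takes the shared state and returns an enriched copy.
Stage = Callable[[Dict], Dict]


def recognition(state: Dict) -> Dict:
    # Stand-in for camera-first object detection: wrap raw
    # (label, distance) detections into object records.
    objects = [{"label": label, "distance_m": dist}
               for label, dist in state["camera"]]
    return {**state, "objects": objects}


def location(state: Dict) -> Dict:
    # Stand-in for localization: reuse a coarse position fix as the pose.
    return {**state, "pose": state.get("gps", (0.0, 0.0))}


def motion(state: Dict) -> Dict:
    # Toy rule in place of learned maneuver selection (assumption):
    # brake if any recognized object is closer than 10 m.
    nearest = min((o["distance_m"] for o in state["objects"]),
                  default=float("inf"))
    return {**state, "maneuver": "brake" if nearest < 10.0 else "cruise"}


def control(state: Dict) -> Dict:
    # Translate the chosen maneuver into low-level actuator commands.
    commands = {
        "brake": {"throttle": 0.0, "brake": 1.0},
        "cruise": {"throttle": 0.3, "brake": 0.0},
    }
    return {**state, "command": commands[state["maneuver"]]}


PIPELINE: List[Stage] = [recognition, location, motion, control]


def run_pipeline(sensors: Dict) -> Dict:
    # Run the four stages in their hierarchical order.
    state = dict(sensors)
    for stage in PIPELINE:
        state = stage(state)
    return state
```

Calling `run_pipeline({"camera": [("pedestrian", 6.0)], "gps": (47.5, 19.0)})` walks one sensor snapshot through all four stages. Because each stage only reads the fields produced upstream, any one of them could be swapped out without touching the others.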

AImotive has received licenses for autonomous testing on public roads in multiple locations, and started testing its car fleet in Hungary, France, and California in the summer of 2017. The company plans to further extend testing to Japan, China, and other US states this year.

“The auto industry is moving rapidly toward autonomy, and AImotive’s vision-first strategy for solving perception and control is far more scalable than LiDAR-based approaches as an industry standard,” said Gavin Teo, partner at B Capital Group.

Collaborative robot provides support in automated warehouse environment

Developed by the SecondHands (Hatfield, UK; https://secondhands.eu) team of global researchers, the ARMAR-6 collaborative robot is designed to aid automation maintenance technicians in automated warehouse environments.

Funded by the European Union’s Horizon 2020 Research and Innovation program, SecondHands “aims to develop a robot assistant that is trained to understand maintenance tasks so that it can either proactively, or as a result of prompting, offer assistance to automation maintenance technicians performing routine and preventative maintenance.” The robot would theoretically provide a second pair of hands to the maintenance technician, such that once the robot has been trained, it can predict when it can usefully provide help and knows which actions to take to provide it, according to SecondHands.

On January 11, a prototype of the collaborative robot was presented. This robot will act as the platform for testing and developing new technologies related to the maintenance and repair of automation equipment in Ocado’s highly automated warehouses using a robot assistant. The prototype was developed at the Karlsruhe Institute of Technology (KIT; Karlsruhe, Germany; www.kit.edu/english) by Tamim Asfour and his team at the High-Performance Humanoid Technologies Lab (H²T) of the Institute for Anthropomatics and Robotics.

Ocado (Hatfield, UK; www.ocado), a UK-based online supermarket, along with its research partners KIT, École Polytechnique Fédérale de Lausanne (EPFL; Lausanne, Switzerland; https://www.epfl.ch/index.en.html), Sapienza Università di Roma (Rome, Italy; http://en.uniroma1.it), and University College London (UCL; London, UK; www.ucl.ac.uk), is “working to advance the technology readiness of areas such as computer vision and cognition, human-robot interaction, mechatronics, and perception and ultimately demonstrate how versatile and productive human-robot collaboration can be in practice.”

Below is a summary of how each project partner is contributing to the project:

• EPFL: Human-robot physical interaction with bi-manipulation, including action skills learning

• KIT (H²T): Development of the ARMAR-6 robot including its entire mechatronics, software operating system and control as well as robot grasping and manipulation skills

• KIT (Interactive Systems Lab, ISL): The spoken dialog management system

• Sapienza University of Rome: Visual scene perception with human action recognition, cognitive decision making, task planning and execution with continuous monitoring

• UCL: Computer vision techniques for 3D human pose estimation and semantic 3D reconstruction of dynamic scenes

• Ocado Technology: Integration of researched functionality on the robot platform and evaluation in real-world demonstrations

Collaborative robots have become an increasingly important part of industrial automation and robotics over the past few years. One indication is that, last November, the AIA and RIA introduced a new conference, “Collaborative Robots and Advanced Vision,” which explored current advancements in collaborative robots and advanced vision, focusing on technology, applications, safety implications, and human impacts. The show was met with positive feedback and will be held again in Santa Clara on October 24-25 this year.

Collaborative robots today are driving one of the most transformative periods in the robotics industry. Advancements with sensors, software and end-of-arm tooling are expanding collaborative robot capabilities and applications, according to the AIA.

Robotics has continued to grow on a global scale in recent years. The North American robotics market, for example, had a record 2017. Orders valued at $1.473 billion were placed in North America in the first nine months of the year, the highest level ever recorded for that period.

Additionally, the German robotics and automation industry hit new highs in 2017. In October, the VDMA raised its growth forecast from 7% to 11%, and in German robotics, the initial projection of 8% growth was nearly doubled to 15%. These results, according to the VDMA, confirm recent statistics from the International Federation of Robotics (IFR), which indicate a global boom in robotics.

Artificial intelligence developer program launched by Intel to bring AI devices to market

Intel (Santa Clara, CA, USA; www.intel.com) has announced the launch of “AI: In Production,” a program that enables developers to bring their artificial intelligence (AI) prototypes to market.

Intel has selected embedded and industrial computing company AAEON Technologies (New Taipei City, Taiwan; www.aaeon.com) as the first Intel AI: In Production partner. As part of this, AAEON provides two streamlined production paths for developers integrating the low-power Intel Movidius Myriad 2 Vision Processing Unit (VPU) into their product designs, according to Intel.

The first option is the new AI Core from AAEON’s UP Bridge the Gap, which is a mini-PCIe module that features an Intel Movidius Myriad 2 VPU designed to work with a wide range of x86 host platforms. The AI Core is compatible with the Intel Movidius Neural Compute Stick (which has gained a developer base in the tens of thousands, according to Intel) and delivers the low-power capabilities of the Movidius Myriad 2 VPU deep neural network accelerator.

The Myriad 2 VPU, which the company calls the industry’s first “always-on vision processor,” features an architecture comprised of a complete set of interfaces, a set of enhanced imaging/vision accelerators, a group of 12 specialized vector VLIW processors called SHAVEs, and an intelligent memory fabric that pulls together the processing resources to enable power-efficient processing. The Myriad 2 also includes an SDK that enables developers to incorporate proprietary functions.

Intel’s Neural Compute Stick, meanwhile, is a USB-based deep learning inference kit and self-contained AI accelerator that delivers dedicated deep neural network processing capabilities. Designed for product developers, researchers and makers, the Movidius Neural Compute Stick features the Myriad 2 VPU, supports the Caffe framework and was designed to reduce barriers to developing, tuning and deploying AI applications by delivering dedicated high-performance deep-neural network processing in a small form factor.

For companies requiring further customization, AAEON is offering development and board manufacturing services.

“Intel AI: In Production means we can expect many more innovative AI-centric products coming to market from the diverse and growing segment of technologies utilizing Intel technology for low-power inference at the edge,” said Remi El-Ouazzane, Intel vice president and general manager of Intel Movidius.

Fabrizio Del Maffeo, AAEON vice president, managing director of AAEON Technology Europe and founder of UP Bridge the Gap, also commented: “Intel Movidius Myriad 2 technology makes AI Core one of the most powerful and versatile AI hardware accelerators for edge computing,” he said. “AI Core bridges the gap between the lab and volume production, allowing the innovators who adopted the Intel Movidius Neural Compute Stick to roll out a field deployment.”

Intel customers, according to the company, are already building products using the Intel AI: In Production program. The first is from CONEX, a Diam International company that specializes in point-of-sale display systems for the cosmetics industry.

“Our innovation team started prototyping advanced retail deep learning algorithms and tested the Intel Movidius Neural Compute Stick,” said Nicolas Lorin, president of CONEX. “Now through the Intel AI: In Production program, CONEX will be able to rapidly go from our validated prototypes to actual end products. Thanks to this new path to production, we will be deploying an AI-enhanced, point-of-sale retail device to some of the largest cosmetic goods retailers as soon as this spring.”

Amazon files patent for autonomous robots at delivery locations

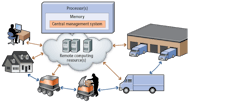

Amazon (Seattle, WA, USA; www.amazon.com) has applied for a patent for autonomous ground vehicles (AGVs) that would retrieve items from delivery trucks and bring them right to your door.

In the patent application, which was published on January 25, Amazon states that, in various implementations, the AGVs may be owned by individual users and/or may service a group of users in an area such as an apartment building or neighborhood. The AGVs may travel out from the user’s residence to meet a delivery truck to receive items, and may be joined by other AGVs that have traveled out to meet the delivery truck. In other words, the robots could be shared by neighbors, apartment building residents, and so on.

AGVs would line up at the delivery truck and receive their packages in turn, and subsequently deliver them to an address or delivery-locker type of location. After items are received, wrote Amazon, the AGVs may travel back (e.g., to the user residences) to deliver the items, and may be equipped to open and close access barriers (e.g., front doors, garage doors, etc.).
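The hand-off described in the application amounts to a first-come, first-served queue at the truck. The Python sketch below illustrates that idea only; the function name, the AGV identifiers, and the parcel mapping are hypothetical and do not come from the patent.

```python
from collections import deque
from typing import Dict, List, Tuple


def hand_off(arrivals: List[str],
             parcels: Dict[str, List[str]]) -> List[Tuple[str, List[str]]]:
    """Serve AGVs in arrival order, giving each one its parcels.

    arrivals: AGV identifiers in the order they reached the truck.
    parcels:  items on the truck, keyed by the AGV that should carry them.
    """
    queue = deque(arrivals)  # FIFO line at the delivery truck
    served = []
    while queue:
        agv = queue.popleft()  # the AGV at the front is served next
        served.append((agv, parcels.get(agv, [])))
    return served
```

An AGV with nothing on the truck simply receives an empty list and leaves the line.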

The design of the robot that is sketched out in the patent application, GeekWire (Seattle, WA, USA; www.geekwire.com) points out, is quite similar to that of the food delivery robot from Starship Technologies (Tallinn, Estonia; www.starship.xyz). In order for it to operate autonomously, this particular robot uses a minimum of nine cameras, including three stereo pairs for a total of six visible-spectrum cameras, along with three 3D Time of Flight cameras, and an NVIDIA (Santa Clara, CA, USA; www.nvidia.com) Tegra K1 mobile processor to perform machine vision and autonomous driving functions.

Amazon’s AGV would likely use a broadly similar setup. Regarding a vision system, the patent application states the following:

“The AGV may also include a user interface. The user interface is configured to receive and provide information to a user of the AGV and may include, but is not limited to, a display, such as a touch-screen display, a scanner, a keypad, a biometric scanner, an audio transducer, one or more speakers, one or more image capture sensors, such as a video camera, and any other types of input or output devices that may support interaction between the AGV and a user.”

Additionally, the patent indicates that a camera and vision system would also be used for the storage compartment:

“The storage compartment may also include an image capture sensor, such as a camera, and optionally an illumination component (not shown), such as a light emitting diode (LED), that may be used to illuminate the inside of the storage compartment.”

The sensor, according to Amazon, may also be used to capture images and/or detect the presence or absence of items within the compartment. This includes tasks such as identifying the type of object in the compartment and recording video/images of access within the storage compartment. Additionally, a barcode scanner or similar technology may also be used to determine the identification of an item that is being placed or has been placed in the compartment. Images captured by the robot’s vision system may be recorded and may be provided to a user to identify what items have been placed within the compartment for delivery.

Concerning Amazon and robots, some may first think of the much-discussed Amazon Prime drone, or of logistics robots that operate within a warehouse. With this patent application, it is evident that Amazon is considering robots in all facets of its operation. View the patent application: http://bit.ly/VSD-AMZ.

In addition to robotics, Amazon also entered into the computer vision space in a significant way, in the form of its new Amazon Go convenience store, which uses computer vision technology to enable a shopping experience with no lines or cashiers.

The experience, according to Amazon, is made possible by using computer vision, sensor fusion, and deep learning technologies. With “Just Walk Out” technology, users enter the store with the Amazon Go app, shop for products, and walk out without lines or checkout. The technology automatically detects when products are taken or returned to shelves and keeps track of them in a virtual cart. When the shopping is finished, users leave the store and their Amazon account is charged. Learn more about Amazon Go here: http://bit.ly/VSD-AMZ2.
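The virtual cart idea can be illustrated with a few lines of Python. This is only a sketch of the bookkeeping, assuming that some upstream vision system already emits "taken" and "returned" events per product; the class, its method names, and the prices are hypothetical and say nothing about how Amazon implements it.

```python
from collections import Counter
from typing import Dict


class VirtualCart:
    """Tracks items a shopper is carrying, driven by shelf events."""

    def __init__(self, prices_cents: Dict[str, int]) -> None:
        self.prices_cents = prices_cents  # price list, in integer cents
        self.items: Counter = Counter()

    def take(self, sku: str) -> None:
        # A product was detected leaving the shelf.
        self.items[sku] += 1

    def put_back(self, sku: str) -> None:
        # A product was detected returning to the shelf; ignore the
        # event if the cart does not hold that product.
        if self.items[sku] > 0:
            self.items[sku] -= 1

    def total_cents(self) -> int:
        # Amount to charge the account when the shopper walks out.
        return sum(self.prices_cents[sku] * count
                   for sku, count in self.items.items())
```

For a shopper who takes a sandwich and a soda and then puts the soda back, `total_cents()` returns only the price of the sandwich.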