March 2018 snapshots: Non-visible imaging, deep learning, artificial intelligence

Memory saving approach for deep learning training developed

Mapillary Research (Malmö, Sweden; https://research.mapillary.com) has developed a novel approach to training deep learning models. According to the company, the new approach handles up to 50% more training data than was previously possible in each learning iteration.

Mapillary provides a service for sharing geotagged photos and sets out to represent the whole world—not only streets—with photos using crowdsourcing. Its research group, Mapillary Research, has a mission of “pushing the boundaries of visual computing through machine intelligence.” Research done by the group targets fundamental challenges in computer vision and machine learning. One such technology is deep learning, and with this newly-developed technique, the group can improve over the winning semantic segmentation method of this year’s Large-Scale Scene Understanding Workshop (http://bit.ly/VSD-LSUN) on the challenging Mapillary Vistas Dataset, setting a new state of the art, according to the company.

Mapillary uses computer vision to extract map data from street-level images. Its platform is device-agnostic, but the extracted map data, driven by both the number of images and the image resolution, can become very large. Semantic segmentation helps to understand images at the pixel level, forming the basis of true scene understanding, but it presents two major challenges. First, recognition models must be trained that can absorb all the relevant information from the training data. Second, once trained, these models must be applied to new and previously unseen images so that they can recognize all objects of interest to the user.

To address the first challenge, Mapillary developed a novel, memory-saving approach to training recognition models. In a technical paper, the group presents its technique, In-Place Activated Batch Normalization (INPLACE-ABN), which substitutes the conventionally used succession of BatchNorm + Activation layers with a single plugin layer, avoiding invasive framework surgery while providing straightforward applicability for existing deep learning frameworks.

To provide context, Mapillary stated that its previous models were trained using Caffe, a popular deep learning framework. With that setup, the group could use only a single crop of 480 x 480 pixels per GPU when training on Mapillary Vistas.

Now, however, the group has migrated to PyTorch, a Python package, which together with the memory-saving technique drastically increases data throughput: each GPU now handles three crops, each 776 x 776 pixels. This, according to the company, means it can pack about eight times more data onto GPUs during training than it could before.
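The "about eight times" figure can be checked from the crop sizes quoted above. The short Python sketch below assumes that "data" is measured in pixels per GPU per training step; the helper name is illustrative and does not come from Mapillary.

```python
def pixels_per_gpu(crops: int, side: int) -> int:
    """Pixels held on one GPU per step for square crops (illustrative helper)."""
    return crops * side * side

# Figures quoted in the article: one 480 x 480 crop before, three 776 x 776 crops after.
before = pixels_per_gpu(crops=1, side=480)
after = pixels_per_gpu(crops=3, side=776)
ratio = after / before

print(f"{before} -> {after} pixels per GPU, ratio {ratio:.2f}")
```

Under that assumption the ratio comes out just under eight, consistent with the company's rounded claim.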

Mapillary’s proposed solution, according to the company, allows the group to recover necessary quantities by recomputing them from saved intermediate results in a computationally efficient way.

“In essence, we can save ~50% of GPU memory in exchange for minor computational overhead of only 0.8–2.0%,” wrote Peter Kontschieder for Mapillary.
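The idea of recomputing quantities from saved results, rather than storing them, can be illustrated with a minimal Python sketch. It is not Mapillary's implementation: it assumes an invertible activation (a leaky ReLU with an assumed slope of 0.01) and a nonzero scale `gamma`, and all function names are hypothetical. Only the layer output `z` is kept; the normalized input is recovered from it on demand.

```python
import math

LEAKY_SLOPE = 0.01  # assumed slope; the activation must be invertible for this to work


def leaky_relu(y: float) -> float:
    return y if y >= 0 else LEAKY_SLOPE * y


def leaky_relu_inverse(z: float) -> float:
    # A positive slope preserves the sign, so the branch can be chosen from z alone.
    return z if z >= 0 else z / LEAKY_SLOPE


def forward(xs, gamma, beta, eps=1e-5):
    """Batch-normalize xs, then apply the activation. Returns (z, x_hat).

    x_hat is returned only so the recovery can be checked; a memory-saving
    layer would keep z and discard x_hat.
    """
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    x_hat = [(x - mean) / math.sqrt(var + eps) for x in xs]
    z = [leaky_relu(gamma * xh + beta) for xh in x_hat]
    return z, x_hat


def recover_normalized(z, gamma, beta):
    """Recompute x_hat from the stored output z (requires gamma != 0)."""
    return [(leaky_relu_inverse(v) - beta) / gamma for v in z]


xs = [-2.0, -0.5, 0.0, 1.5, 3.0]
gamma, beta = 1.5, -0.2
z, x_hat = forward(xs, gamma, beta)
recovered = recover_normalized(z, gamma, beta)
max_error = max(abs(a - b) for a, b in zip(x_hat, recovered))
print(f"max recovery error: {max_error:.2e}")
```

The trade-off mirrors the one described in the quote: one stored buffer instead of two, at the cost of a small amount of extra arithmetic when the values are needed again.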

View the technical paper entitled, “In-Place Activated BatchNorm for Memory-Optimized Training of Deep Neural Networks” by Samuel Rota Bulò, Lorenzo Porzi, and Peter Kontschieder, Mapillary Research: http://bit.ly/VSD-MAP2

Researchers use non-visible imaging approach to reveal secrets of ancient Egyptian mummies

An international team led by University College London (UCL; London, UK; www.ucl.ac.uk) researchers has developed a non-destructive multimodal imaging technique that utilizes multispectral imaging and a range of other methods to reveal text from ancient Egyptian mummy cases for research and analysis.

UCL suggests that by using advanced imaging techniques, the potential exists to dramatically improve the study of papyri encapsulated in ancient artifacts and potentially solve the problem of invasive, destructive approaches to these remains.

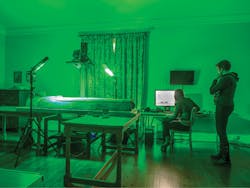

This image shows the Chiddingstone Castle Egyptian coffin lid under the UCL Centre for Digital Humanities multispectral imaging system, with Dr. Kathryn Piquette (standing) and Cerys Jones (seated). Credit: Eric Beadel l.m.p.a. Astracolour Photographic Services.

Working with a range of international partners and collections between November 2015 and December 2017, the pilot project tested the feasibility of non-destructive imaging of multi-layered papyrus found in Egyptian mummy cartonnages.

First, the team describes its approach to multispectral imaging. Multispectral imaging, notes UCL, can be used to analyze the colors of objects and has been shown to highlight otherwise illegible details in items such as the Archimedes Palimpsest, the Syriac Galen Palimpsest, and Livingstone’s diaries. Provided by RB Toth Associates (Oakton, VA, USA; www.rbtoth.com), the system utilizes an IQ260 achromatic camera developed by Phase One (Frederiksberg, Denmark; www.phaseone.com), which has a 60 MPixel CCD image sensor with a 6 μm pixel size and produces true black and white imagery. The camera was paired with a filter wheel with five long-pass filters and computer-controlled lighting with 12 different wavelengths from UV to NIR, supplied by Dr. Bill Christens-Barry of Equipoise Imaging LLC (Ellicott City, MD, USA; www.eqpi.net).

In addition to the multispectral camera, the team of researchers utilized optical coherence tomography (OCT), which uses similar wavelengths to multispectral imaging but works at a much smaller scale, according to UCL. The system is arranged so that light travels a similar distance along two paths, one a reference arm and the other interrogating the sample. As light penetrates the sample and reflects back off different layers, the distance it travels changes. This change in distance is measured precisely using interferometry. OCT can image to depths of around a millimeter with micrometer resolution, making it suitable for imaging layered structures, according to the team, which says that OCT, while still experimental for imaging historical manuscripts, could be a valuable addition to its imaging options.

Terahertz imaging uses electromagnetic waves with wavelengths longer than those of visible light. These waves can penetrate further through dry, insulating samples than visible light but are attenuated by water and metals, noted UCL. In dry samples such as mummy cartonnages, the team expects to be able to penetrate by 1 cm or more, potentially allowing them to see through plaster, cloth, or other coverings. Like OCT, its application to imaging manuscripts is experimental, but some early results in cultural heritage, including manuscripts, have been reported.

Other imaging techniques utilized by the team include:

X-ray fluorescence: An established technique that analyzes the secondary x-rays emitted from a sample that is being irradiated. Secondary x-rays are characteristic of a particular element, so analysis of the x-ray fluorescence spectrum can determine the composition of a sample. The team did this using a handheld Olympus Delta Premium device, which helped to identify the presence of ink beneath the surface of the object and to give information about its elemental composition.

Phase contrast x-ray: X-rays that pass through a sample develop a phase shift that depends on the material through which they passed. This phase shift can be measured to provide contrast for changes that would otherwise not be visible with standard medical x-rays. UCL researchers have developed an approach for laboratory-based phase contrast x-ray.

X-ray microCT: Developed for medical applications and having been previously used for imaging intact mummies, x-ray microCT is used to image objects such as small animals, medical samples, and other materials that require a resolution down to a few micrometers. The team used this technique to determine the internal structure of the mummy cartonnage fragments to assist the interpretation of other imaging techniques.

Research found that no current single imaging technique was able to identify both iron and carbon-based inks at depths within cartonnage. As a result, the team developed a multimodal approach. While the system has limitations that include cost, access to imaging systems, and the portability of both the system and the cartonnage, the results have led to an improved understanding of which imaging methods are worth pursuing in future research projects.

One of the first successes of the new technique, according to BBC News, was on a mummy case kept at a museum at Chiddingstone Castle in Kent, UK. Using the imaging techniques, the researchers identified text written on a footplate that was not visible to the naked eye, which revealed the name “Irethorru,” a common name in Egypt that meant “the eye of Horus is against my enemies.”

Dr. Kathryn Piquette, of University College London (Bloomsbury, London, UK; www.ucl.ac.uk), commented in the BBC article: “I’m really horrified when we see these precious objects being destroyed to get to the text. It’s a crime. They are finite resources and we now have a technology to both preserve those beautiful objects and also look inside them to understand the way Egyptians lived through their documentary evidence - and the things they wrote down and the things that were important to them.”

View more information on the research: http://bit.ly/VSD-UCL

BrainChip ships first AI accelerator board to major European car maker

BrainChip Holdings Ltd. (Sydney, Australia; www.brainchipinc.com)—a developer of software and hardware accelerated solutions for artificial intelligence and machine learning applications—has shipped its first BrainChip Accelerator board to a major European automobile maker for evaluation in advanced driver assistance systems (ADAS) and autonomous vehicle applications.

Based on an 8-lane, PCI-Express add-in card, the board increases the speed and accuracy of the object recognition function of BrainChip Studio artificial intelligence software by up to six times, while increasing the simultaneous video channels of a system to 16 per card at more than 600 fps. Processing, according to the company, is done by six cores in a Xilinx (San Jose, CA, USA; www.xilinx.com) Kintex Ultrascale FPGA, each of which performs user-defined image scaling, spike generation, and spiking neural network comparison to recognize objects.

Scaling images up and down, according to BrainChip, increases the probability of finding objects, and due to the low-power characteristics of spiking neural networks, each core consumes approximately one watt while processing up to 100 fps. The BrainChip Accelerator add-in card learns from a single low-resolution image, which can be as small as 20 x 20 pixels, and excels in recognition in low-light, low-resolution, noisy environments.
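The per-core figures quoted above can be combined into rough board-level numbers. The Python sketch below assumes the six cores run in parallel at the quoted per-core rate and that throughput is shared evenly across all 16 video channels. BrainChip states neither assumption, and the variable names are illustrative.

```python
# Figures quoted in the article.
CORES = 6
FPS_PER_CORE = 100      # "up to 100 fps" per core
WATTS_PER_CORE = 1.0    # "approximately one watt" per core
CHANNELS = 16           # simultaneous video channels per card

# Assumption: cores work in parallel and channels share throughput evenly.
total_fps = CORES * FPS_PER_CORE
fps_per_channel = total_fps / CHANNELS
total_core_watts = CORES * WATTS_PER_CORE

print(f"aggregate: {total_fps} fps, per channel: {fps_per_channel} fps, "
      f"core power: {total_core_watts} W")
```

The aggregate of 600 fps lines up with the board-level rate the company quotes. The per-channel rate and the power total are back-of-the-envelope estimates that cover the cores only, not the whole card.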

As the first commercial implementation of a hardware-accelerated spiking neural network (SNN) system, the shipment of the Accelerator board is a significant milestone in neuromorphic computing, a branch of artificial intelligence that simulates neuron functions, according to the company.

“This is an exciting first evaluation of BrainChip Accelerator that was released just last month,” said Bob Beachler, BrainChip’s Senior Vice President for Marketing and Business Development. “Our spiking neural network provides instantaneous ‘one-shot’ learning, is fast at detecting, extracting and tracking objects, and is very low-power. These are critical attributes for automobile manufacturers processing the large amounts of video required for ADAS and AV applications.”

He added, “We look forward to working with this world-class automobile manufacturer in applying our technology to meet its requirements.”

Computer vision sensor uses artificial intelligence for personalized home automation

Artificial intelligence startup Crea.vision has developed a prototype computer vision sensor that utilizes artificial intelligence technology for home automation.

Crea.vision’s sensor is a proprietary technology that employs long-range computer vision, artificial intelligence, and biometrics to automate tasks within the home. The device was designed so that users don’t have to manage their smart devices through screen or voice commands, as Crea.vision’s sensor combines “real-time user identification and location data, creating a robust deep learning system that enables personalization without smartphones and wearables.”

“Vision capability will help artificial intelligence to understand what’s going on and adapt environments for each user,” Crea.vision explains in a YouTube video describing the product, which can be found here: http://bit.ly/VSD-CREA2.

The sensor will effectively learn each user’s preferences, eliminating the need to program smart tasks and scenes, and will automatically adjust connected devices’ settings. Tasks the sensor can complete include alerting users of an incoming call if a phone is in the other room; managing lights, climate, and entertainment; keeping an eye on family members or pets; keeping an eye on those who may need assistance; and notifying a homeowner if an unauthorized person is detected in the house. The sensor has also been designed to run all processing on the device itself without connection to the cloud, and user data is encrypted and accessible to authorized devices only.

“Being enthusiastic early adopters of new technologies, my co-founder and I have experienced the first-hand frustration of dealing with limitless apps controlling too many gizmos. We believe the real intelligence can only be achieved with the shift from transactional user interfaces (screen or voice controlled systems) to fully autonomous ones. Only then, technology will become truly helpful and unobtrusive,” said CEO Victor Mudretsov.

The sensor made its debut at the Consumer Electronics Show from January 9-12, 2018 in Las Vegas.