January 2018 snapshots: Self-driving cars, convolutional neural networks, small parts inspection, new MIPI specification

Fully self-driving cars are here, says Waymo

Waymo (Mountain View, CA, USA; www.waymo.com) is now testing its fully self-driving vehicles in fully autonomous mode on public roads without a driver, after working on the technology for more than eight years.

A subset of vehicles from Waymo, formerly the Google self-driving car project, will operate in fully autonomous mode in the Phoenix metro region. Eventually, the fleet will cover a region larger than Greater London, with more vehicles being added over time.

Waymo explains in a press release that it has prepared for this next phase by putting its vehicles through the world's longest and toughest ongoing driving test.

"Since we began as a Google project in 2009, we've driven more than 3.5 million autonomous miles on public roads across 20 U.S. cities. At our private test track, we've run more than 20,000 individual scenario tests, practicing rare and unusual cases," wrote Waymo via Medium. "We've multiplied all this real world experience in simulation, where our software drives more than 10 million milesevery day. In short: we're building our vehicles to be the most experienced driver on the road."

Waymo's vehicles, according to the company, are equipped with the necessary features, including sensors and software, to provide full autonomy, including backup steering, braking, computer, and power that can bring the vehicle to a safe stop, if needed. The vehicles' sensor suite includes the following:

LiDAR (light detection and ranging): This sensor beams out millions of laser pulses per second in 360° and measures how long each pulse takes to reflect off a surface and return to the vehicle.

Waymo's system includes three types of LiDAR developed in-house: a short-range LiDAR that provides an uninterrupted view directly around the vehicle, a high-resolution mid-range LiDAR, and a next-generation long-range LiDAR that can see almost three football fields away.

Vision systems: The vision system includes cameras with a 360° field of view that detect color in order to spot things like traffic lights, construction zones, school buses, and the flashing lights of emergency vehicles. The system comprises several sets of high-resolution cameras that operate at long range in daylight and low-light conditions.

In addition to LiDAR and a vision system, the vehicles feature a radar system with a continuous 360° view and supplemental sensors including audio detection and GPS.
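The time-of-flight principle behind the LiDAR measurement described above can be illustrated with a short calculation. This is a minimal sketch of the general physics only, not Waymo's implementation; the function names and the 2-microsecond example value are assumptions chosen for illustration.

```python
# Time-of-flight ranging: a pulse travels to the target and back,
# so the one-way distance is half the round-trip path.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s):
    """Distance to the reflecting surface in meters."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m):
    """Round-trip time in seconds for a surface at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Hypothetical example: a pulse that returns after 2 microseconds
# was reflected by a surface roughly 300 m away.
example_range_m = range_from_round_trip(2e-6)
print(f"{example_range_m:.1f} m")
```

The very short round-trip times involved show why the timing electronics in such a sensor must resolve nanoseconds: each nanosecond of timing error corresponds to about 15 cm of range.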

"With Waymo in the driver's seat," according to a statement, "we can reimagine many different types of transportation, from ride-hailing and logistics, to public transport and personal vehicles, too."

"We've been exploring each of these areas, with a focus on shared mobility. By giving people access to a fleet of vehicles, rather than starting with a personal ownership model, more people will be able to experience this technology, sooner. A fully self-driving fleet can offer new and improved forms of sharing: it'll be safer, more accessible, more flexible, and you can use your time and space in the vehicle doing what you want."

The first application of Waymo's fully self-driving technology will be a Waymo driverless service. Over the next few months, Waymo will be inviting members of the public to take trips in its fully self-driving vehicles.

View a video of the driverless car in action here: http://bit.ly/VSD-WAYMO

Standalone vision system targets small parts inspection

Machine vision solution provider and systems integrator Artemis Vision (Denver, CO, USA; www.artemisvision.com) has developed the visionStation standalone vision system, which is designed to inspect small parts.

When it comes to small parts inspection, a number of challenges must be overcome, starting with space constraints. Some small parts manufacturers have limited space for inspection equipment, so it is important to understand the manufacturer's needs and space requirements. Accuracy and repeatability must also be considered. Inspecting small parts by eyesight alone can be a daunting task, and the method or process of inspection can vary from person to person. Small parts inspection must be consistent to be accurate and repeatable.

Lastly, for small parts inspection to be successful, the manufacturer needs to know what defects they are looking for, as some defects can be so small that they go unnoticed. Additionally, manufacturers need to catalog known defects, which is easier with machine vision than with human inspection.

Artemis Vision's visionStation is precision machined from aluminum and fitted with a digital machine vision camera and lighting that is tailored to suit the type of inspection performed. The system is an operator-loaded unit that can be used as a standalone inspection station, or integrated into an existing operation.

"The visionStation is ideal for customers who need to run approximately 20,000 parts per day or less," stated Tom Brennan, President at Artemis Vision. "At 3 seconds per cycle, an operator can load about 10,000 parts per shift. Customers who need flexible automation and repeatability between operators and production batches without necessarily wanting or needing full inline automation where parts are conveyed on a belt.

Within the visionStation is a Prosilica GT4905 machine vision camera from Allied Vision (Stadtroda, Germany; www.alliedvision.com). This camera features the KAI-16050 CCD image sensor from ON Semiconductor (Phoenix, AZ, USA; www.onsemi.com), which is a 16 MPixel sensor that achieves a frame rate of 7.5 fps through a GigE interface. This camera was selected, according to Brennan, as a result of its "high resolution and excellent dynamic range."

"The dynamic range plays an important part when imaging the shiny areas of a part, created by the lighting source. Furthermore, the Prosilica GT4905 can handle the rigorous conditions the visionStation may be used in," he said.

Software for the system includes Omron Microscan's (Renton, WA, USA; www.microscan.com) Visionscape and Artemis Vision's proprietary visionWrangler software.

"Our software is built from our extensive library of components - reading a data matrix, doing measurements, absence-presence and counts," explained Brennan.

The visionStation is offered in two sizes, with the small and large systems measuring 1 and 1.5 cubic ft, respectively. The small system can accommodate parts up to 2 in. (5.08 cm), while the large system can hold parts up to 6 in. (15.24 cm). The minimum detectable defect size is 0.003 in. (76.2 μm) for the small station and 0.01 in. (254 μm) for the large station.

Artemis Vision builds visionStation systems based on the parts a manufacturer supplies and intends to have inspected. In the next-generation visionStation, Artemis Vision reportedly plans to upgrade its sensor.

"We will likely upgrade to a newer CMOS camera and use C-mount optics," Brennan commented, "which will provide more lensing options and reduce the overall size of the unit."

New specification from MIPI Alliance streamlines integration of image sensors in mobile devices

The MIPI Alliance (www.mipi.org), a global organization that develops interface specifications for mobile and mobile-influenced industries, has released a new specification that provides a standardized way to integrate image sensors in mobile-connected devices.

Named MIPI Camera Command Set v1.0 (MIPI CCS v1.0), the specification defines a standard set of functionalities for implementing and controlling image sensors. This specification is offered for use with MIPI Camera Serial Interface 2 v2.0 (MIPI CSI-2 v2.0) and is now available for download. Additionally, in an effort to help standardize use of MIPI CSI-2, MIPI Alliance membership is not required to access the specification.

MIPI CSI-2, according to the alliance, is the industry's most widely used hardware interface for deploying camera and imaging components in mobile devices, including drones. Adding MIPI CCS to MIPI CSI-2 provides further interoperability and reduces integration time and costs for complex imaging and vision systems, according to the alliance.

"MIPI Alliance is building on its success in the mobile camera and imaging ecosystem with MIPI CCS, a new specification that will enhance the market-enabling conveniences MIPI CSI-2 already provides," said Joel Huloux, chairman of MIPI Alliance. "The availability of MIPI CCS will help image sensor vendors promote greater adoption of their technologies and it will help developers accelerate time-to-market with innovative designs targeting the mobile industry, connected cars, the Internet of Things, AR/VR and other areas."

With imaging applications becoming more sophisticated and manufacturers deploying multiple image sensors in their products, implementation becomes more complex and time-consuming. MIPI CCS aims to address these issues by making it possible to craft a common software driver to configure the basic functionalities of any off-the-shelf image sensor that is compliant with MIPI CCS and MIPI CSI-2 v2.0, according to the MIPI Alliance. The specification provides a complete command set that can be used to integrate basic image sensor features, including resolution, frame rate and exposure time, as well as advanced features like phase detection autofocus, single-frame HDR or fast bracketing.

"The overall advantage of MIPI CCS is that it will enable rapid integration of basic camera functionalities in plug-and-play fashion without requiring any device-specific drivers, which has been a significant pain point for developers," said Mikko Muukki, technical lead for MIPI CCS. "MIPI CCS will also give developers flexibility to customize their implementations for more advanced camera and imaging systems."

The new specification was developed for use with MIPI CSI-2 v2.0 and is backward compatible with earlier versions of the MIPI CSI-2 interface. It is implemented on either of two physical layers from MIPI Alliance: MIPI C-PHY or MIPI D-PHY.

View more information on the specification: http://bit.ly/VSD-MIPI

Deep learning and convolutional neural networks

Deep learning with neural networks provides significant image processing benefits regarding object classification, image analysis and image quality. Because small neural networks suffice for many typical machine vision applications, processors such as FPGAs (field programmable gate arrays) can be implemented effectively for convolutional neural networks (CNNs), resulting in application expansion beyond current classification tasks and efficient use within embedded vision systems.

Deformed test objects, irregular shapes, object variations, irregular lighting and lens distortions push the limits of classic image processing when framework conditions for image acquisition cannot be controlled. Often, even dedicated algorithms for describing individual features are barely feasible.

CNNs, on the other hand, define characteristics through their training method, without using mathematical models. This makes it possible to capture and analyze images in difficult situations such as reflecting surfaces, moving objects, face detection and robotics, especially when easier classification of image data directly from the preprocessing to the classification result is required.

Nevertheless, CNNs cannot cover all areas of classic image processing, such as precise location determination of objects. Here, new and advanced CNNs must be developed. Practical experience with CNNs from prior years led to mathematical assumptions and simplifications (pooling, ReLU and overfitting avoidance, to name a few) that reduced computational expense, which in turn enabled implementation of deeper networks.

By reducing image depth while maintaining the same detection rate and by optimizing the algorithm, CNNs can be significantly accelerated and are now ideally suited for image processing. Because CNNs are shift and partially scale invariant, they allow use of the same network structures for different image resolutions, and smaller neural networks are often sufficient for many image processing tasks.
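Two of the simplifications named above, ReLU and pooling, are simple enough to show directly. The following is a minimal pure-Python sketch of the general operations, not code from VisualApplets or any particular framework; the function names and the example feature map are made up for illustration.

```python
def relu(feature_map):
    """ReLU activation: negative values become zero, positive values pass."""
    return [[max(0.0, value) for value in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2: keep the largest value in each block.

    Any odd trailing row or column is dropped.
    """
    rows = len(feature_map) // 2 * 2
    cols = len(feature_map[0]) // 2 * 2
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


# Hypothetical 4x4 feature map (output of a convolution layer).
example_map = [
    [1.0, -2.0, 3.0, 0.0],
    [-1.0, 5.0, -3.0, 2.0],
    [0.0, -4.0, 2.0, 2.0],
    [7.0, -1.0, -6.0, 1.0],
]

activated = relu(example_map)
pooled = max_pool_2x2(activated)
print(pooled)  # [[5.0, 3.0], [7.0, 2.0]]
```

ReLU needs only a comparison per value, and pooling shrinks the 4 x 4 map to 2 x 2, so every later layer processes a quarter of the data. Both properties reduce computational expense in the way the paragraph describes.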

Due to the high degree of parallel processing, neural networks are particularly well suited to FPGAs, on which CNNs can also analyze and classify high-resolution image data in real time. In machine vision, FPGAs function as massive accelerators of image processing tasks and guarantee real-time processes with deterministic latencies.

Until now, the high programming effort and the relatively low resources available in an FPGA hindered efficient use. Algorithmic simplifications now make it possible to construct efficient networks with high throughput rates in an FPGA.

To implement CNNs on FPGA hardware platforms, the VisualApplets graphical environment from Silicon Software (Mannheim, Germany; www.silicon.software) can be used. The CNN operators in VisualApplets allow users to create and synthesize diverse FPGA application designs in a short time without hardware programming experience.

By transferring the weight and gradient parameters determined in the training process to the CNN operators, the FPGA design is configured for the application-specific task. Operators can be combined into a VisualApplets flow diagram design with digital camera sources as image input, and additional operators to optimize image preprocessing.

For larger neural networks and more complex CNN applications, a new programmable frame grabber in the microEnable marathon series is being released that has 2.5 times more FPGA resources than the current marathon series. The new frame grabber is expected to be suitable for neural networks with more than 1 GB/sec CNN bandwidth.

The CNNs are not only capable of running on the frame grabbers' FPGAs but also on VisualApplets-compatible cameras and vision sensors. Since FPGAs are up to ten times more energy-efficient than GPUs, CNN-based applications can be implemented particularly well on embedded machine vision systems or mobile robots with the required low heat output.

Application diversity and complexity with neural networks based on newly developed processors is expected to increase. Cooperation and an exchange of research results with Professor Michael Heizmann from the Institute for Industrial Information Technology (IIIT) at the Karlsruhe Institute of Technology (KIT; Karlsruhe, Germany; https://www.kit.edu/english), part of the FPGA-based "Machine Learning for Industrial Applications" project, are expected to generate future hardware and software advances.

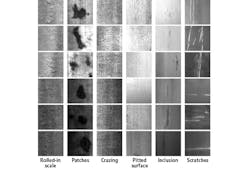

To determine the percentage of correctly detected defects in difficult environmental conditions, a neural network was trained with 1,800 images of reflective metallic surfaces, on which six different defect classes were defined. Large differences in scratches coupled with small differences in crazing, paired with different surface grey tones from lighting and material alterations, made the analysis of the surface almost impossible for conventional image processing systems.

The test results demonstrated that the neural network classified the different defects correctly in an average of 97.4% of cases, a higher rate than classic methods achieve. The data throughput in this application configuration was 400 MB/sec. By comparison, a CPU-based software solution achieved an average of 20 MB/sec.
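The two throughput figures can be put in proportion with a short calculation. The 1,000 MB batch size below is a hypothetical value chosen only to make the difference tangible; it does not come from the test described.

```python
FPGA_MB_PER_S = 400.0   # throughput reported for the FPGA configuration
CPU_MB_PER_S = 20.0     # throughput reported for the CPU-based solution

speedup = FPGA_MB_PER_S / CPU_MB_PER_S


def processing_time_s(data_mb, throughput_mb_per_s):
    """Seconds needed to process a given amount of image data."""
    return data_mb / throughput_mb_per_s


# Hypothetical batch of 1,000 MB of image data.
fpga_time_s = processing_time_s(1000.0, FPGA_MB_PER_S)
cpu_time_s = processing_time_s(1000.0, CPU_MB_PER_S)
print(speedup, fpga_time_s, cpu_time_s)
```

On these figures the FPGA implementation is 20 times faster: the hypothetical batch takes 2.5 seconds on the FPGA compared with 50 seconds on the CPU.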

The implementation of deep learning on FPGA processor technology, which is ideally suited for image processing, is an important step. Requirements that the method must be deterministic and algorithmically verifiable, however, will make entry into all areas of image processing more difficult. Likewise, options to document which areas were identified as errors, as well as segmentation and storage, have not yet been implemented.

Thus far, training and operational use of CNNs are two separate processes. In the future, new generations of FPGAs with higher resources or using powerful ARM / CPU and GPU cores will enable on-the-fly training on the newly acquired image material, which will further increase the detection rate and will simplify the learning process.

This article was written by Martin Cassel, Silicon Software (Mannheim, Germany; www.silicon.software).