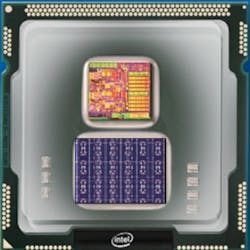

Self-learning chip from Intel aims to speed artificial intelligence by working like the human brain

Codenamed Loihi, Intel’s new self-learning neuromorphic chip is designed to mimic how the human brain functions by learning from various modes of feedback from its environment, with the ultimate goal of speeding up artificial intelligence technologies, according to the company.

Billed by Intel as a "first-of-its-kind" chip, Loihi uses an asynchronous spiking computing method that enables it to use data to learn and make inferences, and to get smarter over time, much like the brain, according to the company.

"We believe AI is in its infancy and more architectures and methods – like Loihi – will continue emerging that raise the bar for AI," said Dr. Michael Mayberry, corporate vice president and managing director of Intel Labs. "Neuromorphic computing draws inspiration from our current understanding of the brain’s architecture and its associated computations."

He added, "The brain’s neural networks relay information with pulses or spikes, modulate the synaptic strengths or weight of the interconnections based on timing of these spikes, and store these changes locally at the interconnections. Intelligent behaviors emerge from the cooperative and competitive interactions between multiple regions within the brain’s neural networks and its environment."
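To make the timing-dependent mechanism Mayberry describes more concrete, here is a minimal sketch of pair-based spike-timing-dependent plasticity (STDP), a widely used textbook learning rule in which a synapse strengthens when the presynaptic spike arrives shortly before the postsynaptic spike and weakens when the order is reversed. This is not Intel’s Loihi learning engine, and the article does not say which rule Loihi uses; the function name `stdp_update` and the parameters `a_plus`, `a_minus`, `tau_plus`, `tau_minus`, `w_min` and `w_max` are hypothetical, illustrative choices.

```python
import math


def stdp_update(weight, t_pre, t_post,
                a_plus=0.01, a_minus=0.012,
                tau_plus=20.0, tau_minus=20.0,
                w_min=0.0, w_max=1.0):
    """Return the new weight of one synapse after a single pre/post spike pair.

    Spike times are in milliseconds. All parameter values are hypothetical
    textbook-style defaults, not figures published for Loihi.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Presynaptic spike came first (causal pairing): potentiate.
        weight += a_plus * math.exp(-dt / tau_plus)
    elif dt < 0:
        # Postsynaptic spike came first (anti-causal pairing): depress.
        weight -= a_minus * math.exp(dt / tau_minus)
    # The update uses only this synapse's own spike times (a local rule);
    # the result is clipped to the allowed weight range.
    return min(w_max, max(w_min, weight))


w = 0.5
w_up = stdp_update(w, t_pre=10.0, t_post=15.0)    # pre leads post by 5 ms
w_down = stdp_update(w, t_pre=15.0, t_post=10.0)  # post leads pre by 5 ms
print(f"potentiated: {w_up:.4f}, depressed: {w_down:.4f}")
```

The exponential terms make closely timed spike pairs matter most, which is one simple way to capture the idea of modulating synaptic strength "based on timing of these spikes" and storing the change at the interconnection itself.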

Loihi’s test chip, which will be shared in 2018 with leading universities and research institutions focused on advancing artificial intelligence, includes digital circuits that mimic the brain’s basic mechanics, making machine learning faster and more efficient while requiring lower compute power. According to Intel, neuromorphic chip models draw inspiration from how neurons communicate and learn, using spikes and plastic synapses that can be modulated based on timing, an approach that could help computers self-organize and make decisions based on patterns and associations.
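Spiking models of this kind typically treat each neuron as a unit that accumulates input over time and emits a discrete spike once a threshold is crossed. The sketch below uses the common discrete-time leaky integrate-and-fire (LIF) model to illustrate that behavior; it is a generic textbook model, not Loihi’s actual neuron circuit, and the class name `LIFNeuron` and its `leak` and `threshold` parameters are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (illustrative only)."""
    leak: float = 0.9        # fraction of membrane potential kept each step
    threshold: float = 1.0   # potential at which the neuron fires
    potential: float = 0.0   # current membrane potential

    def step(self, input_current: float) -> bool:
        # Let the stored potential decay, then integrate the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            # Fire and reset; only this binary spike event is passed onward.
            self.potential = 0.0
            return True
        return False


neuron = LIFNeuron()
inputs = [0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.6, 0.6]
spikes = [neuron.step(x) for x in inputs]
print(spikes)
```

Because such a neuron communicates only when it crosses its threshold, activity tends to be sparse and event-driven, which is the general property that asynchronous spiking hardware aims to exploit for efficiency.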

The test chip offers on-chip learning, combining training and inference on a single chip, which allows machines to operate autonomously and adapt in real time.

"The self-learning capabilities prototyped by this test chip have enormous potential to improve automotive and industrial applications as well as personal robotics – any application that would benefit from autonomous operation and continuous learning in an unstructured environment. For example, recognizing the movement of a car or bike," suggested Mayberry.

Features of the test chip, as described in an Intel press release, will include:

- Fully asynchronous neuromorphic many-core mesh that supports a wide range of sparse, hierarchical and recurrent neural network topologies with each neuron capable of communicating with thousands of other neurons.

- Each neuromorphic core includes a learning engine that can be programmed to adapt network parameters during operation, supporting supervised, unsupervised, reinforcement and other learning paradigms.

- Fabrication on Intel’s 14 nm process technology.

- A total of 130,000 neurons and 130 million synapses.

- Development and testing of several algorithms with high algorithmic efficiency for problems including path planning, constraint satisfaction, sparse coding, dictionary learning, and dynamic pattern learning and adaptation.
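As a rough consistency check on the figures listed above (a simple average computed from the stated totals, not a published Intel specification), the synapse and neuron counts imply about one thousand synapses per neuron:

```latex
\frac{130 \times 10^{6}\ \text{synapses}}{130 \times 10^{3}\ \text{neurons}} = 10^{3}\ \text{synapses per neuron (on average)}
```

That average is broadly in line with the statement that each neuron can communicate with thousands of other neurons, although actual connectivity would vary from neuron to neuron.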

"As AI workloads grow more diverse and complex, they will test the limits of today’s dominant compute architectures and precipitate new disruptive approaches," said Mayberry. "Looking to the future, Intel believes that neuromorphic computing offers a way to provide exascale performance in a construct inspired by how the brain works."

He added, "I hope you will follow the exciting milestones coming from Intel Labs in the next few months as we bring concepts like neuromorphic computing to the mainstream in order to support the world’s economy for the next 50 years. In a future with neuromorphic computing, all of what you can imagine – and more – moves from possibility to reality, as the flow of intelligence and decision-making becomes more fluid and accelerated."

The Loihi announcement is just the latest artificial intelligence and cutting-edge vision technology headline to come from Intel. Other recent, relevant news includes the launch of the Movidius Myriad X vision processing unit (VPU), a system-on-chip that features a neural compute engine for accelerating deep learning inference at the edge.

According to Intel, the neural compute engine is an on-chip hardware block specifically designed to run deep neural networks at high speed and low power without compromising accuracy, enabling devices to see, understand and respond to their environments in real time. The company says the Myriad X architecture is capable of 1 TOPS (trillion operations per second) of compute performance on deep neural network inferences.

Other items of note include:

- Intel acquires Mobileye, plans to deploy 100 autonomous vehicles later this year

- Deep learning device from Intel enables artificial intelligence programming at the edge

- 360-degree sports replay vision system from Intel now installed in 11 NFL stadiums

View the Intel press release.

Share your vision-related news by contacting James Carroll, Senior Web Editor, Vision Systems Design



About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.