Autonomous vehicle mobility service being tested by Nissan and DeNA

In this week’s roundup from the Association for Unmanned Vehicle Systems International (AUVSI), which highlights some of the latest news and headlines in unmanned vehicles and robotics, learn about a robo-vehicle mobility service being developed by Nissan and DeNA, a plug-and-play solution for using multiple infrared Time of Flight distance sensors in robotics, and a successful test of computer vision technology for UAS-based railway inspection.

Nissan and DeNA to begin a field test of "robo-vehicle mobility service" in Japan in March

On March 5, Nissan Motor Co., Ltd. and DeNA Co., Ltd. will begin a field test of their "robo-vehicle mobility service," Easy Ride, which is envisioned as a mobility service "for anyone who wants to travel freely to their destination of choice in a robo-vehicle."

The field test will take place in the Minatomirai district of Yokohama, in Japan's Kanagawa Prefecture. During testing, participants will travel along a set route in vehicles equipped with autonomous driving technology. The route covers about 4.5 kilometers between Nissan's global headquarters and the Yokohama World Porters shopping center.

To ensure efficient fleet operation, and to give customers peace of mind during their rides, Nissan and DeNA have set up a remote monitoring center that draws on each company's advanced technologies.

During this trial, Nissan and DeNA will also test Easy Ride's unique service functions. One of those functions involves passengers using a dedicated mobile app to input what they want to do via text or voice and choose from a list of recommended destinations. Nearly 500 recommended places of interest and events in the vicinity will be displayed on an in-car tablet screen, and there will also be approximately 40 discount coupons for retailers and restaurants in the area that will be available for download on the participants' own smartphones.

Following their rides, participants will be asked to complete a survey about their overall user experience and their use of content and coupons from local retailers and restaurants. They will also be asked about preferred pricing for the Easy Ride service.

The results of these surveys will be used by Nissan and DeNA as they continue to develop the offering, and for future field tests.

Nissan and DeNA say that the field test will allow them to "learn from the experience of operating the Easy Ride service trial with public participation, as both companies look toward future commercial endeavors." The companies say that they will also work on developing service designs for driverless environments, expanded service routes, vehicle distribution logic, pick-up/drop-off processes and multilingual support.

Initially, Nissan and DeNA are looking to launch Easy Ride in a limited environment, but they hope to introduce a full service in the early 2020s.

Terabee's new TeraRanger Hub Evo provides 'lean sensing' for robotics

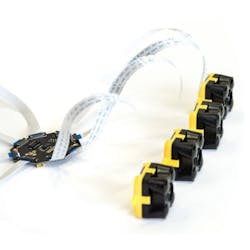

Terabee, which created the TeraRanger Time of Flight distance sensors, has announced the release of the TeraRanger Hub Evo, which the company describes as a new generation "plug and play solution for using multiple distance sensors."

A "small, octagonal PCB," the TeraRanger Hub Evo allows users to connect up to eight TeraRanger Evo distance sensors (infrared Time of Flight sensors) and place them in whatever configuration they need. Users can then monitor and gather data from only the areas and axes that matter to them, creating custom point clouds optimized for their application.

The Hub provides power to each sensor, prevents crosstalk between sensors, synchronizes the data, and outputs an array of calibrated distance values in millimeters. It also has a built-in IMU.

"We’ve been advocating the use of ‘selective point clouds’ for a while," says Max Ruffo, CEO of Terabee.

"We understand that people often feel more secure by gathering millions of data points, but let’s not forget that every point gathered needs to be processed. This typically requires complex algorithms and lots of computing power, relying heavily on machine learning and AI as the system grows in complexity. And as things become more complex, the computational demands of the system rise and the potential for hidden failure modes also increases."

Max goes on to explain that "taking the mechanical engineering approach - trying to solve the problem in the easiest way possible - ‘lean-sensing’ could well be a less complex, lower cost and more reliable solution in many applications."

To showcase lean-sensing and selective point cloud creation, Terabee points to a large mobile robot used for wrapping pallets in thin film. The company says that in the real world of logistics, "pallets are often loaded with random shapes and sometimes these can be overhanging the edges of the pallet or the items they are loaded on top of."

"Our customer needed a contactless method for their robot to rotate around a pallet of goods at high speed (up to 1.3 meters per second) to apply thin film pallet wrap," Max explains. "As well as being fast, the system needed to be computationally lean (to ensure robustness) and also cost-effective."

Terabee's solution uses just 16 single-point distance sensors, operating as small arrays, to generate selective point clouds.

To maintain a safe and consistent distance to the pallet, three sensors look to the side. Five sensors look forward to provide collision avoidance and safety in the event that something or someone enters the path of the robot.

Terabee says that "another array, this time eight sensors, looks up into the area where pallet contents are," and in real-time, this array builds a "lean point cloud of the pallet contents, including any irregularities such as overhangs." This three-dimensional data is then fed to the control system so that the two-dimensional trajectory of the robot can be adapted to make sure the robot avoids any overhanging items, and optimizes its path for pallet wrapping.

Just 16 numbers, along with some minor data from wheel odometry and the IMU, are fed to the control system. This allows three-dimensional data points to change the robot's two-dimensional trajectory in real time and at high speed.
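To make the lean-sensing idea more concrete, below is a minimal Python sketch of how 16 distance readings could drive a simple controller. It is not Terabee's or its customer's implementation. The order of the readings in the array, the outward offsets of the up-looking beams (UP_OFFSETS_MM), the function name plan, and every threshold are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence

# Hypothetical layout of the 16 readings (in millimeters):
# 3 side-looking, 5 forward-looking, 8 up-looking sensors.
SIDE, FORWARD, UP = slice(0, 3), slice(3, 8), slice(8, 16)

# Hypothetical outward offset (mm) of each up-looking beam from the pallet edge.
UP_OFFSETS_MM = (0, 50, 100, 150, 200, 250, 300, 350)


@dataclass
class Command:
    lateral_error_mm: float  # > 0: move away from the pallet, < 0: move closer
    stop: bool               # True if something is in the forward path


def plan(readings_mm: Sequence[float],
         base_standoff_mm: float = 400.0,
         stop_distance_mm: float = 800.0,
         clear_up_mm: float = 1500.0,
         margin_mm: float = 50.0) -> Command:
    """Turn one synchronized 16-value frame into a steering/stop command."""
    if len(readings_mm) != 16:
        raise ValueError("expected 16 distance readings")

    # An up-looking beam that hits something closer than clear_up_mm is
    # treated as an overhang extending at least that beam's offset outward.
    hits = [off for off, d in zip(UP_OFFSETS_MM, readings_mm[UP]) if d < clear_up_mm]
    overhang_mm = max(hits) + margin_mm if hits else 0.0

    # Widen the target standoff to clear the widest detected overhang,
    # then compare it with the average side-sensor distance to the pallet.
    target_mm = base_standoff_mm + overhang_mm
    measured_mm = mean(readings_mm[SIDE])

    # Stop if any forward-looking sensor sees an obstacle too close.
    stop = min(readings_mm[FORWARD]) < stop_distance_mm
    return Command(lateral_error_mm=target_mm - measured_mm, stop=stop)
```

For instance, if only the fourth up-looking beam (hypothetical offset 150 mm) detects an object while the side sensors read 400 mm, the sketch asks the robot to move 200 mm farther out (150 mm overhang plus a 50 mm margin). The point is the one Terabee makes: the controller reasons over a handful of numbers rather than a dense point cloud.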

Max says that "the solution could probably run just as well with fewer sensors," adding that the company is now testing "to see how few sensors can be used without compromising performance, reliability or safety."

"We’ll continue to develop our Hub and multi-sensor concept, making it easy for people to use our sensors in configurations to meet their specific needs," Max says. "It’s already proven to be a very popular way for people to prototype and use multiple sensors for drones, robotics, industrial applications and more recently some smart city use cases too."

Bihrle and BNSF's 'RailVision' proves beneficial during long range UAS railway inspections

Bihrle Applied Research (Bihrle) and BNSF Railway (BNSF) have announced that they successfully demonstrated the processing of several thousand images at a time, covering hundreds of miles of track for the automatic detection, classification and reporting of rail conditions.

This feat was made possible thanks to RailVision, which is a "computer vision technology solution" developed by the companies in support of BNSF’s UAS research initiatives.

RailVision allows BNSF to automatically process images collected by UAS during supplemental railway inspection flights and to generate actionable reports in significantly less time than traditional methods require.

According to Bihrle and BNSF, the success of RailVision has enabled BNSF to apply its use to expanded operations starting this year.

"Bihrle’s computer vision capabilities have been used in conjunction with our railway safety enhancement research and the FAA’s Pathfinder Program," says Todd Graetz, Director, Technology Services at BNSF. "The breadth of railway anomaly detection capabilities provided by Bihrle allows us to further research into the use of long range UAS."

Jack Ralston, President of Bihrle Applied Research, says, "UAS are typically flown with one or more imaging capabilities that result in terabytes of images and their associated metadata. Bihrle has been working with BNSF for over 4 years to create an automated computer vision solution that processes the images, allowing human Subject Matter Experts to review the actual findings rather than being burdened with the task of looking at raw image files, thereby fully exploiting the value of UAS based inspection."

View more information on the AUVSI.

Share your vision-related news by contacting James Carroll, Senior Web Editor, Vision Systems Design

About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.