SwRI Develops Off-Road Autonomous System with Passive Sensors

The Southwest Research Institute (SwRI) has developed algorithms for off-road autonomous driving that eliminate the need for LiDAR and active sensors, relying instead on visual information from stereo cameras.

SwRI (San Antonio, TX, USA) developed the machine vision solution for military, space, and agricultural applications.

Called Vision for Off-Road Autonomy (VORA), the passive system can perceive objects, model environments, and simultaneously localize and map while navigating off-road environments. It is designed to be plugged into existing software/AI solutions for real-time autonomous navigation.

Vision System Offsets Shortcomings of LiDAR, Radar, GPS

The research team developed the tools to offset the shortcomings of the sensing and navigation methods in common use today: LiDAR, radar, and GPS.

For example, LiDAR, or light detection and ranging, uses pulsed lasers to probe objects and calculate depth and distance. It is often used in autonomous systems because it creates high-resolution point clouds in a wide range of lighting conditions. But it isn’t ideal for military operations because LiDAR sensors emit light that can be detected by hostile forces.

Meanwhile, radar, which emits radio waves, is also detectable. GPS navigation can be jammed, and its signals are often blocked in canyons and mountains, which can limit agricultural automation.

In space, cameras make more sense than power-hungry LiDAR systems.

For the new toolset, the team uses stereo vision and IMU (inertial measurement unit) information combined with new algorithms to provide data for localization, perception, mapping, and world modeling.

Unlike autonomous travel on roads, off-road navigation requires a world model, or map, to determine whether an area is traversable. The map must be updated continuously because the terrain changes as, for example, soil erodes or vegetation grows.
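As a rough illustration of a continuously updated world model, the Python sketch below keeps a grid of traversal costs and blends each new observation into the stored value. The class name `TraversabilityGrid`, the blending weight, and the cost threshold are all hypothetical choices made for this example; the article does not describe how VORA represents or updates its map.

```python
import numpy as np

class TraversabilityGrid:
    """Toy world model: one traversal cost in [0, 1] per grid cell (assumed layout)."""

    def __init__(self, shape, blend=0.5, max_cost=0.6):
        self.cost = np.full(shape, np.nan)  # NaN marks cells never observed
        self.blend = blend                  # weight given to the newest observation
        self.max_cost = max_cost            # cells above this cost are not traversable

    def update(self, observed_cost):
        """Blend a new observation into the map; NaN means 'not seen this frame'."""
        observed = np.asarray(observed_cost, dtype=float)
        seen_now = ~np.isnan(observed)
        known = ~np.isnan(self.cost)
        first_time = seen_now & ~known
        repeat = seen_now & known
        self.cost[first_time] = observed[first_time]
        self.cost[repeat] = ((1 - self.blend) * self.cost[repeat]
                             + self.blend * observed[repeat])

    def traversable(self):
        """Unknown cells are conservatively treated as not traversable."""
        return np.nan_to_num(self.cost, nan=np.inf) <= self.max_cost

grid = TraversabilityGrid(shape=(1, 3))
grid.update([[0.2, 0.9, np.nan]])  # middle cell first looks blocked
grid.update([[0.2, 0.1, np.nan]])  # later it looks clear, so its cost drops
mask = grid.traversable()
```

Blending rather than overwriting lets the map track gradual terrain change while damping single-frame noise; a real system would likely also model uncertainty and vehicle capability.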

“For our defense clients, we wanted to develop better passive sensing capabilities but discovered that these new computer vision tools could benefit agriculture and space research,” says Meera Towler, an assistant program manager at SwRI who led the project.

Flexible Camera Hardware Options

While the team built a hardware platform to facilitate the research, the algorithms could work with other options, too. “We are capable of using commercial off-the-shelf or custom stereo sensors; we’ve done so in the past,” explains Abe Garza, senior research engineer in SwRI’s Intelligent Systems Division.

The hardware for the prototype comprises a Carnegie Robotics (Pittsburgh, PA, USA) IP67-rated MultiSense S27 camera, which is designed for off-road vehicles. The unit includes left and right cameras to produce depth information and an RGB rolling-shutter camera in the middle.

The camera's field of view (FOV) is angled 24° below horizontal, and the unit has an on-board IMU.

Stereo Matcher Tool and Factor Graph Enable SLAM

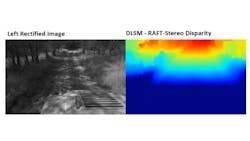

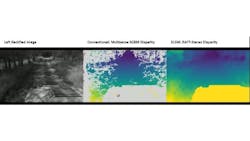

SwRI researchers built a deep learning-enabled stereo matcher tool to match images from the left and right cameras using a recurrent neural network. This creates a disparity map that includes dense depth information and is produced in real time.

“The reason the recurrent approach was taken is it effectively builds up your disparity map over multiple iterations. It scans across your left and your right image and is trying to find a match,” Garza adds.
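To show how a disparity map carries depth information, the Python sketch below applies the standard rectified-stereo relation, depth = focal length × baseline / disparity. It does not reproduce SwRI's neural stereo matcher; it only converts an already-computed disparity map to metric depth. The function name `disparity_to_depth` and the focal length and baseline values are assumptions for illustration, not specifications of the camera used in the prototype.

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_px, baseline_m, min_disparity=1e-6):
    """Convert a disparity map (pixels) to depth (meters) for a rectified stereo pair.

    Pixels with no valid match (disparity at or below min_disparity) map to infinity.
    """
    disparity = np.asarray(disparity_px, dtype=float)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > min_disparity
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Hypothetical 2x2 disparity map; focal length and baseline are assumed values.
disparity = np.array([[64.0, 32.0],
                      [16.0, 0.0]])
depth = disparity_to_depth(disparity, focal_px=1600.0, baseline_m=0.25)
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated range, which is why distant terrain is measured less precisely than nearby terrain.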

The information from the stereo matching algorithm feeds into a second algorithm, a factor graph, which combines visual and IMU data to measure time and distance, essentially replacing LiDAR.

“Our offering is our own in-house visual SLAM (simultaneous localization and mapping) utilizing factor graphs,” Garza says.
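The idea behind a factor graph can be sketched with a deliberately small example: each measurement becomes a "factor" that constrains one or more poses, and the solver finds the poses that best satisfy all factors at once. The Python sketch below is a one-dimensional, linear toy and not SwRI's implementation. The function name `solve_linear_factor_graph`, the measurement values, and the noise levels are all hypothetical.

```python
import numpy as np

def solve_linear_factor_graph(num_poses, factors):
    """Solve a linear factor graph by weighted least squares.

    Each factor is (coeffs, measurement, sigma), where coeffs maps a pose
    index to its coefficient, e.g. {1: 1.0, 0: -1.0} encodes x1 - x0.
    """
    A = np.zeros((len(factors), num_poses))
    b = np.zeros(len(factors))
    for row, (coeffs, z, sigma) in enumerate(factors):
        for idx, c in coeffs.items():
            A[row, idx] = c / sigma   # scale by 1/sigma to weight the residual
        b[row] = z / sigma
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x

factors = [
    ({0: 1.0}, 0.0, 0.01),            # prior: the vehicle starts at x0 = 0
    ({1: 1.0, 0: -1.0}, 1.0, 0.2),    # IMU-derived motion x1 - x0 (noisier)
    ({2: 1.0, 1: -1.0}, 1.0, 0.2),    # IMU-derived motion x2 - x1 (noisier)
    ({1: 1.0, 0: -1.0}, 1.1, 0.1),    # vision-derived motion x1 - x0
    ({2: 1.0, 1: -1.0}, 0.9, 0.1),    # vision-derived motion x2 - x1
]
poses = solve_linear_factor_graph(3, factors)
```

In this toy, the estimate for each step lands between the IMU and vision measurements, closer to whichever has the smaller assumed noise. A production visual SLAM system works with nonlinear 3D poses and landmarks and typically relies on an iterative solver.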

Despite the success in developing the algorithms, Garza concedes that the system does not overcome the challenges cameras face in capturing images in adverse weather, in low visibility, or when objects are occluded.

Garza and the team plan to test the solution on an autonomous vehicle, an older-model military HMMWV (high mobility multipurpose wheeled vehicle), on an off-road course at SwRI’s San Antonio campus.

About the Author

Linda Wilson

Editor in Chief

Linda Wilson joined the team at Vision Systems Design in 2022. She has more than 25 years of experience in B2B publishing and has written for numerous publications, including Modern Healthcare, InformationWeek, Computerworld, Health Data Management, and many others. Before joining VSD, she was the senior editor at Medical Laboratory Observer, a sister publication to VSD.