Smart cameras challenge PC-based systems for embedded applications

With many of the functions of PC-based systems, smart cameras are lowering the cost of machine vision design

Andrew Wilson, Editor

Smart cameras were once relegated to performing point processing tasks such as presence/absence detection and barcode detection. However, with the reduction in size and cost of sensors and semiconductor memory, and the increased capability of embedded processors, it is now possible to purchase smart cameras that can perform many of the image processing functions that, in the past, could only be handled by PC-based systems.

Indeed, the popularity of such smart camera systems has led Future Market Insights (FMI; London, England; www.futuremarketinsights.com) to predict that the current market for such cameras will grow at a compound annual growth rate of approximately 23.84% from now until 2020 (see "Smart Camera: Global Industry Analysis and Opportunity Assessment, 2015-2020," http://bit.ly/1cBG2FT).

Using these cameras, developers can simplify the deployment of machine vision systems by leveraging embedded lighting, lenses, sensors, processors, software and I/O capabilities while simultaneously lowering the cost of their designs. By implementing systems with smart cameras, developers need not worry about choosing the individual components often associated with machine vision design, and can instead concentrate on the software required to perform a particular function.

That said, smart cameras have their limitations. Because all of the capabilities of a machine vision system are bundled into one camera, the developer is limited to the choice of image sensor, camera speed, processing power and software capabilities offered by any particular vendor.

On-board processing

Smart camera systems are now being deployed in numerous applications ranging from barcode inspection and object recognition to process monitoring and quality control. To perform these applications, smart camera designers must employ camera architectures that speed the image processing functions required. FPGAs are often deployed for timing control of the CCD or CMOS imager used to capture the image and to perform point processing functions or neighborhood operators such as flat-field correction, filtering and Bayer interpolation. By performing such pre-processing functions with an FPGA, the camera's on-board processor is freed to perform global image processing tasks better performed by CPUs or DSPs within the camera.
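To make the idea of a point processing function concrete, the following is a minimal Python sketch of flat-field correction, one of the pre-processing steps named above. It assumes the common formulation in which each pixel is dark-subtracted and divided by a per-pixel gain reference. The function name, the list-of-lists image representation and the sample values are illustrative assumptions, not any vendor's implementation; in a smart camera this arithmetic would typically run in the FPGA rather than in software.

```python
def flat_field_correct(raw, dark, flat):
    """Correct each pixel as (raw - dark) * mean(flat - dark) / (flat - dark).

    raw, dark and flat are equally sized 2D lists of pixel values:
    raw  - the captured image
    dark - a frame captured with no light (fixed offset per pixel)
    flat - a frame of a uniformly lit target (relative gain per pixel)
    """
    # Per-pixel gain reference: response to uniform light minus the offset.
    gain_ref = [[f - d for f, d in zip(frow, drow)]
                for frow, drow in zip(flat, dark)]
    pixel_count = sum(len(row) for row in gain_ref)
    mean_gain = sum(sum(row) for row in gain_ref) / pixel_count

    corrected = []
    for rrow, drow, grow in zip(raw, dark, gain_ref):
        corrected.append([
            # Guard against dead pixels whose gain reference is not positive.
            (r - d) * mean_gain / g if g > 0 else 0.0
            for r, d, g in zip(rrow, drow, grow)
        ])
    return corrected


# Illustrative data: a uniform scene seen through uneven pixel response.
dark = [[10, 10], [10, 10]]
flat = [[100, 200], [200, 100]]
raw = [[55, 105], [105, 55]]
corrected = flat_field_correct(raw, dark, flat)
```

Because each output pixel depends only on the same pixel in the three input frames, the operation needs no knowledge of neighboring pixels, which is what makes it well suited to streaming hardware.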

While many smart cameras use CPUs and DSPs to perform image processing functions, Toshiba Teli (Tokyo, Japan; www.toshiba-teli.co.jp) has taken a more radical approach in the design of its latest offering, the SPS02 smart sensor. Featuring GPIO, USB and RS-232 interfaces, the camera employs a 144 x 176 pixel Q-Eye image sensor from AnaFocus (Seville, Spain; www.anafocus.com), with 33.6 x 33.6μm pixel sizes. This proprietary pixel architecture allows such functions as temporal filtering, morphological operators and blob analysis to be performed without the use of an FPGA or host CPU. To support the SPS02, Toshiba Teli offers a graphical programming environment known as Visual Architect that is similar to LabVIEW in appearance and designed for graphically creating, compiling, debugging and executing vision applications.

Application specific

By performing image processing tasks in-camera, there is no need to transmit captured images to a host computer for analysis. Rather, the results obtained can be transmitted, often across a low-cost Ethernet interface built into the camera. In this way, the limited cable lengths of other high-speed computer-to-camera interfaces such as Camera Link are overcome, allowing smart cameras to transmit data over far greater distances while eliminating the cost of high-speed cables and connectors. Similarly, many smart camera implementations offer I/O capabilities that allow these cameras to trigger external devices such as PLCs or to power external devices such as lighting components.
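The difference between sending results and sending images can be illustrated with a short Python sketch. It compares the size of a small inspection result, serialized as JSON, with the size of one uncompressed frame. The field names, the part identifier and the 2048 x 2048 pixel, 8-bit sensor are all assumptions chosen for illustration; actual smart cameras use their own result formats and protocols.

```python
import json


def encode_result(part_id, passed, blob_count, max_blob_area_px):
    """Serialize a hypothetical inspection result to UTF-8 encoded JSON."""
    result = {
        "part_id": part_id,
        "passed": passed,
        "blob_count": blob_count,
        "max_blob_area_px": max_blob_area_px,
    }
    return json.dumps(result).encode("utf-8")


def raw_image_bytes(width, height, bytes_per_pixel=1):
    """Size in bytes of one uncompressed frame."""
    return width * height * bytes_per_pixel


# Assumed values: one result record and one 2048 x 2048, 8-bit frame.
payload = encode_result("A-1027", True, 3, 412)
image_size = raw_image_bytes(2048, 2048)
print(len(payload), "bytes of results vs", image_size, "bytes of raw image")
```

Under these assumptions the result record is several orders of magnitude smaller than the frame it describes, which is why a modest network link can be sufficient once the analysis is done in the camera.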

Specifying a smart camera for any given application depends on a number of different factors including the type of application, the development time that will be required, the number of cameras to be deployed and the cost per camera.

In applications that require a large number of cameras to be deployed to perform a single task, for example, it may be more cost-effective to purchase a smart camera with minimal high-level software support. Using PC-based development tools, image processing software can then be written in high-level languages such as C++, compiled and run on the camera's processor. Although the development time required to accomplish this will be longer, the systems developer will avoid any run-time license fees that would otherwise have been incurred by purchasing an off-the-shelf image processing library.

Where fewer camera systems are required, or where cameras must perform multiple functions on a production line, purchasing a smart camera with high-level software development tools may be more effective. These allow multiple image processing functions to be called from high-level languages using standard PC development tools and then integrated into the smart camera.

While companies such as Cognex (Natick, MA, USA; www.cognex.com), Datalogic (Bologna, Italy; www.datalogic.com), Matrox (Dorval, QC, Canada; www.matrox.com), National Instruments (NI; Austin, TX, USA; www.ni.com) and Vision Components (Ettlingen, Germany; www.visioncomponents.com) offer smart cameras that incorporate each company's own machine vision software, systems developers can also choose from a range of cameras based on Intel processors that allow a number of software development kits (SDKs) from multiple software vendors to be used. This allows the systems developer to choose from numerous smart cameras from multiple vendors and to build applications with familiar software development tools.

Third-party support

Smart camera manufacturers incorporate third-party software packages in their products in a number of ways. In some cases, these software packages have been tailored by camera vendors to meet the needs of specific machine vision applications while other vendors may choose to offer support for multiple software packages.

Taking the former approach, Matrix Vision (Oppenweiler, Germany; www.matrix-vision.com) has leveraged the power of MVTec's HALCON in its mvBlueGEMINI "Tool box technology" dual-core Cortex-A9-based camera (Figure 1). As a result, the developer can choose from a number of menu-selectable tools tailored specifically for machine vision such as "acquire image" and "find objects" that are implemented using the HALCON image processing library.

Taking the latter approach, in the design of its latest x86 NEON-1040 smart camera, Adlink Technology (San Jose, CA, USA; www.adlinktech.com) has used a 4MP 60fps global shutter sensor and an Intel Atom quad-core 1.9 GHz processor (Figure 2). With a built-in PWM lighting control module, the camera supports third-party machine vision software such as HALCON from MVTec (Munich, Germany; www.mvtec.com), Common Vision Blox from Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.com) and Adaptive Vision Studio from Adaptive Vision (Gliwice, Poland; www.adaptive-vision.com).

While such SDKs simplify the development of machine vision tasks, traditional vendors of PC-based machine vision software, recognizing the market opportunity presented by smart cameras, have leveraged their software to further simplify systems development. Using menu-based or graphical flow charts, these smart camera offerings allow many often-used image processing functions to be deployed while offering support for discrete I/O, RS-232 and networking protocols. Because such tools are based on the underlying functions of the companies' software libraries, systems integrators can add image processing capability using user-callable functions or custom image processing routines.

For its NI 177x Series of smart cameras, for example, NI offers the company's NI Vision Builder for Automated Inspection (AI), an interactive menu-driven development environment with which developers can configure, benchmark and deploy smart cameras. Tasks such as pattern matching, code reading and presence detection can be configured without the need for programming.

Using a flowchart-based approach, Matrox's Design Assistant vision software presents the user with an integrated development environment (IDE) where applications can be created by constructing a flowchart rather than by writing code to call image processing functions. The same software lets users create a web-based operator interface that can be accessed locally or remotely. Once configured, the software can then be deployed on Matrox's Iris GT smart camera, the company's 4Sight GPm vision system or any PC with GigE Vision or USB3 Vision cameras.

Remote monitoring

Once such software has been developed, it can be downloaded and tested on the smart camera. Once functional, it may be necessary to remotely monitor the application running on the camera. To address this, many companies now offer IP-enabled cameras that allow remote PCs or wireless tablets running a web browser to access the smart camera.

Those choosing to develop smart camera systems based on BOA smart cameras from Teledyne DALSA (Waterloo, ON, Canada; www.teledynedalsa.com), for example, can speed application development using the company's icon-based iNspect Express software. Since the software is embedded in the camera, any iNspect Express application can be both configured and accessed on the smart camera using a web browser interface on a remote PC.

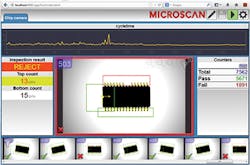

For its line of Vision HAWK smart cameras, Microscan (Renton, WA, USA; www.microscan.com) allows developers a similar option. After developing applications using the company's AutoVISION software, systems integrators can use the company's web-based CloudLink software in a web browser to remotely monitor inspection tasks as they are performed by the camera (Figure 3).

While such cameras are deemed smart because of their on-board processing, lighting and I/O capabilities, they are perhaps not as smart as they could be. At present, developers deploying such systems can choose products such as Microscan's Vision HAWK and Vision MINI smart cameras that allow focus, gain and exposure to be controlled automatically to achieve optimum contrast.

Integrating such functionality with high-level image processing software, and cycling through different settings automatically, however, would allow such smart cameras to make intelligent decisions about which settings are the most useful for any specific image processing task and reduce camera set-up times. Whether such software will be available from smart camera vendors remains to be seen.
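As a rough illustration of what cycling through settings automatically might involve, the Python sketch below tries a list of candidate exposure values and keeps the one that yields the highest image contrast. Everything here is an assumption made for the example: the use of the standard deviation of pixel values as the contrast measure, the `capture` callable standing in for a camera interface, and the simulated four-pixel scene. It does not describe any vendor's software.

```python
from statistics import pstdev


def rms_contrast(pixels):
    """Contrast measure: population standard deviation of pixel values."""
    return pstdev(pixels)


def best_setting(capture, settings):
    """Capture one image per setting and return (setting, score) with the
    highest contrast. `capture` is a hypothetical callable that takes a
    setting and returns a flat list of pixel values."""
    best, best_score = None, -1.0
    for setting in settings:
        score = rms_contrast(capture(setting))
        if score > best_score:
            best, best_score = setting, score
    return best, best_score


def simulated_capture(exposure_ms):
    """Stand-in for a real camera: scene reflectances in percent, scaled by
    exposure and clipped at the 8-bit maximum to mimic saturation."""
    scene = [10, 30, 60, 90]
    return [min(255, r * exposure_ms // 2) for r in scene]


# Candidate exposures in milliseconds (illustrative values).
chosen, score = best_setting(simulated_capture, [1, 5, 10, 20])
print("chosen exposure:", chosen, "ms, contrast score:", round(score, 1))
```

A practical version would sweep several parameters at once and score each image with a measure tied to the inspection task, such as a pattern-matching score, rather than with a generic contrast figure.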

Companies mentioned

AnaFocus

Seville, Spain

www.anafocus.com

Adaptive Vision

Gliwice, Poland

www.adaptive-vision.com

Adlink Technology

San Jose, CA, USA

www.adlinktech.com

Cognex

Natick, MA, USA

www.cognex.com

Datalogic

Bologna, Italy

www.datalogic.com

Future Market Insights

London, England

www.futuremarketinsights.com

Matrox

Dorval, QC, Canada

www.matrox.com

Matrix Vision

Oppenweiler, Germany

www.matrix-vision.com

Microscan

Renton, WA, USA

www.microscan.com

MVTec

Munich, Germany

www.mvtec.com

National Instruments

Austin, TX, USA

www.ni.com

Stemmer Imaging

Puchheim, Germany

www.stemmer-imaging.com

Teledyne DALSA

Waterloo, ON, Canada

www.teledynedalsa.com

Toshiba Teli

Tokyo, Japan

www.toshiba-teli.co.jp

Vision Components

Ettlingen, Germany

www.visioncomponents.com