Cloud computing: the next frontier for vision software

Image processing and vision applications may benefit from cloud computing since many are both data and compute intensive.

Image processing and machine vision applications designed for cell analysis, dimensional measurement of automotive parts or analysis of multispectral satellite images present disparate challenges for systems designers. While all are compute and data intensive, the rate at which such images must be captured and analyzed varies considerably from application to application.

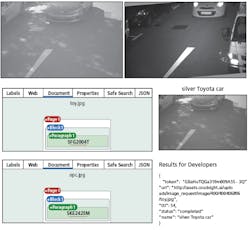

Figure 1: Images of automobiles taken (a) in bright sunshine and (b) in darkness were uploaded to both Google’s Cloud Vision and Cloud Sight’s Image Recognition software. The results of Google’s Cloud Vision analysis returned for both (a) and (b) clearly identified the license plate of each of the automobiles (c and d). When such an image was uploaded to Cloud Sight’s Image Recognition software, the automobile was recognized as a Toyota (e).

While high-speed image capture may not be necessary in digital pathology systems, for example, it is critical in machine vision systems designed to inspect automotive parts at rates of thousands of parts per minute or more. In such systems, the speed of image capture and processing is critical and, most importantly, so is the latency of the vision system and of any pass/fail rejection mechanism that may be required.

Client-server models

With a promise to decentralize much of the processing required in both image processing and machine vision systems, cloud computing (or, more correctly, using a network of remote servers to store, manage, and process data) will impact some of the applications that currently employ local processing power and storage. By remotely locating processing and storage capabilities, image processing applications can be employed remotely and may be paid for by the user on as-needed or pay-per-use business models.

Such models are offered in a number of different forms, notably as Software as a Service (SaaS), Platform as a Service (PaaS) and Infrastructure as a Service (IaaS). While SaaS uses the provider’s application running on cloud-based systems, PaaS allows third-party software companies to develop, run, and manage their applications remotely. Finally, IaaS provides managed and scalable resources as services to those who wish to run remote application programs (see “Survey and comparison for open and closed sources in cloud computing,” by Nadir K. Salih and Tianyi Zang; http://bit.ly/VSD-CLOU).

Such cloud computing infrastructures present challenges for developers of image processing and machine vision systems. While cloud-based systems will ideally attempt to automatically distribute and balance processing loads, it is still the developer’s role to ensure that data is transferred and processed, and results returned, at speeds that meet the needs of the application.

In his article “Cloud computing: challenges in cloud configuration”, Professor Dan Marinescu of the University of Central Florida (Orlando, FL, USA; www.cs.ucf.edu) points out that due to shared networks and unknown topologies, cloud-based infrastructures exhibit inter-node latency and bandwidth fluctuations that often affect application performance.

Cloud services

Today, a number of companies allow systems integrators to upload and process data using such networks. By doing so, developers need not invest in local processing power, instead using remote processing power and storage capabilities. Amazon Web Services (AWS; Seattle, WA, USA; https://aws.amazon.com/rekognition), Microsoft (Redmond, WA, USA; www.microsoft.com), Google (Mountain View, CA, USA; www.google.com) and IBM (Armonk, NY, USA; www.ibm.com) all offer IaaS products. These companies, as well as Clarifai (New York, NY, USA; www.clarifai.com) and Cloud Sight (Los Angeles, CA, USA; https://cloudsight.ai), also provide image recognition application programming interfaces (APIs) to develop applications.

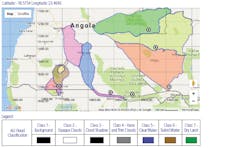

Figure 2: Using Google Maps and overlaid satellite imagery, the Namibia flood dashboard presents scientists with constantly updated information pertaining to flooding in the region.

On his website at http://bit.ly/VSD-5API, Gaurav Oberoi has compared the image recognition capabilities of five of these and provides an open-source tool called Cloudy Vision, so that developers can test their own images and choose the best solution for their application. Of the number of companies that currently offer such services, both Google and Cloud Sight allow developers to test their capabilities by simply dragging and dropping images into a web-based interface.

Picturing plates

To better understand how valid such web-based pattern recognition systems might prove, staff at Vision Systems Design decided to tackle the problem of Automatic Number Plate Recognition (ANPR) using the same images supplied by Optasia Systems (Geylang, Singapore; www.optasia.com.sg) shown in the article “Intelligent transportation system uses neural networks to identify license plates” (Vision Systems Design, February 2018, pp. 12-14; http://bit.ly/VSD-NEU).

As avid readers may recall, Optasia Systems developed its IMPS AOI-M3 system using off-the-shelf cameras from Basler (Ahrensburg, Germany; www.baslerweb.com) coupled to a local software-based feed-forward artificial neural network running on a host PC.

Images of automobiles taken in bright sunshine and in darkness (Figure 1), of approximately 5MBytes and 0.5MBytes respectively, were first uploaded to both Google’s Cloud Vision and Cloud Sight’s Image Recognition software. Using an upload speed of approximately 17Mbps, the results of Google’s Cloud Vision analysis were returned within approximately 8s for Figure 1a and 4s for Figure 1b. Taking into account the roughly 2.5s minimum time taken to transfer the image and return the results, processing the data for the large high-brightness image of the Toyota shown in Figure 1a took approximately 6s. The results returned for both images clearly identified the license plate of each of the automobiles. When such an image was uploaded to Cloud Sight’s Image Recognition software, the automobile was recognized as a Toyota after an uploading and processing time of approximately 3s.
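The transfer-time figure quoted above can be sanity-checked with simple arithmetic. The following minimal sketch assumes 1MByte equals 8Mbits and ignores protocol overhead and round-trip delay, so it gives only a lower bound on upload time; the function and variable names are illustrative.

```python
def transfer_time_s(size_mbyte: float, link_mbps: float) -> float:
    """Ideal time in seconds to send size_mbyte over a link_mbps link.

    Assumes 1 MByte = 8 Mbit and no protocol overhead (a lower bound only).
    """
    return size_mbyte * 8.0 / link_mbps


upload_mbps = 17.0         # approximate upload speed quoted in the article
large_image_mbyte = 5.0    # Figure 1a, approximate size
small_image_mbyte = 0.5    # Figure 1b, approximate size

t_large = transfer_time_s(large_image_mbyte, upload_mbps)
t_small = transfer_time_s(small_image_mbyte, upload_mbps)
print(f"{t_large:.2f} s, {t_small:.2f} s")  # prints "2.35 s, 0.24 s"
```

The larger image needs roughly 2.35s for the upload alone, which is consistent with the 2.5s minimum cited once the return of the results is included.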

Clearly, the latency and bandwidth fluctuations outlined by Professor Dan Marinescu will often affect application performance. However, even if the image transfer time is reduced to a minimum by using the fastest optical network, the system will still be non-deterministic and exhibit a degree of latency. If timestamped images from a simple camera and lighting system were transferred to such cloud-based software, local processing power may not be required, thus reducing the footprint and cost of such systems.

Scientific solutions

While results from processing captured images may not (yet) be as fast as using a local, more deterministic system, such configurations will prove beneficial in license plate recognition and medical and remote sensing systems where determinism and latency effects are less critical.

At the Centre for Dynamic Imaging at the Walter and Eliza Hall Institute (Parkville, Victoria, Australia; www.wehi.edu.au), for example, researchers teamed with DiUS (Melbourne, Victoria, Australia; https://dius.com.au) to jointly develop an AWS cloud-based system to enable image analysis to be performed on computer clusters.

“Since the Walter and Eliza Hall Institute routinely generates 2-3TBytes of image data per week, image analysis presented a bottleneck due to the computational requirements,” says Pavi De Alwis, a Software Engineer with DiUS. “Scientists were also constrained by the need to schedule and share the compute and storage infrastructure.” To overcome this, the Fiji/ImageJ (https://fiji.sc/) open-source imaging software, maintained by Curtis Rueden of the University of Wisconsin-Madison (Madison, WI, USA; www.wisc.edu), was ported to AWS’s Elastic Compute Cloud (EC2), a computing configuration that allows users to rent “virtual” computers to run their applications. Benchmarks of the image processing workloads of the computer clusters used can be found at: http://bit.ly/VSD-DIUS.

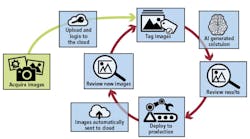

Figure 3: In the implementation of its cloud-based Neural Vision software, Cyth Systems offers a means to train a machine vision system to automatically recognize features such as defects. After images are uploaded to a cloud-based server system, key features of the images are manually tagged, processed and classified. The results are then analyzed to determine how accurately features have been recognized and, if the results are satisfactory, the system is deployed on the production line.

Similarly, open-source projects are underway in remote sensing applications. One of these, Project Matsu (https://matsu.opensciencedatacloud.org), a collaboration between NASA (Washington, DC, USA; www.nasa.gov) and the Open Commons Consortium (Chicago, IL, USA; www.occ-data.org), aims to develop cloud-based systems for processing satellite images.

Using Apache Hadoop, an open-source software framework from the Apache Software Foundation (Forest Hill, MD, USA; www.apache.org) for distributed storage, developers at Project Matsu have used Hadoop’s MapReduce programming model to develop the Project Matsu “Wheel.” This software framework performs statistical analysis of multispectral data from the Advanced Land Imager (ALI) and Hyperion hyperspectral imagers on-board NASA’s Earth Observing-1 (EO-1) satellite (http://bit.ly/VSD-MATS). This is then displayed as a dashboard – a user interface that presents constantly updated information pertaining to flooding (Figure 2).
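To illustrate the MapReduce programming model mentioned above, the following minimal sketch computes per-band statistics across image tiles in plain Python. It is not the Project Matsu “Wheel” and does not use Hadoop; the tile layout, band names and function names are all assumptions made for illustration.

```python
from functools import reduce
from typing import Dict, Iterable, List, Tuple

Pixel = Tuple[str, float]          # (band name, value); hypothetical layout
Stats = Tuple[int, float, float]   # (count, sum, sum of squares)


def map_tile(tile: Iterable[Pixel]) -> Dict[str, Stats]:
    """Map step: summarize one tile as per-band count, sum and sum of squares."""
    out: Dict[str, Stats] = {}
    for band, value in tile:
        n, s, ss = out.get(band, (0, 0.0, 0.0))
        out[band] = (n + 1, s + value, ss + value * value)
    return out


def reduce_stats(a: Dict[str, Stats], b: Dict[str, Stats]) -> Dict[str, Stats]:
    """Reduce step: merge two partial summaries band by band."""
    merged = dict(a)
    for band, (n, s, ss) in b.items():
        n0, s0, ss0 = merged.get(band, (0, 0.0, 0.0))
        merged[band] = (n0 + n, s0 + s, ss0 + ss)
    return merged


def finalize(stats: Dict[str, Stats]) -> Dict[str, Tuple[float, float]]:
    """Turn merged sums into per-band (mean, population variance)."""
    result = {}
    for band, (n, s, ss) in stats.items():
        mean = s / n
        result[band] = (mean, ss / n - mean * mean)
    return result


# Two tiny tiles standing in for multispectral image data.
tiles: List[List[Pixel]] = [
    [("red", 1.0), ("nir", 4.0)],
    [("red", 3.0), ("nir", 6.0)],
]
band_stats = finalize(reduce(reduce_stats, map(map_tile, tiles)))
print(band_stats)
```

Because each tile is summarized independently and the partial summaries merge associatively, the map step can be spread across many machines, which is the property a framework such as Hadoop exploits.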

Towards machine vision

While scientific and medical applications are leveraging the processing and storage capability of cloud computing, some machine vision and image processing vendors are offering cloud-based solutions either as a partial or full solution to the applications of their customers.

National Instruments (NI; Austin, TX, USA; www.ni.com), for example, sees a hybrid approach that includes both cloud computing and what the company calls “edge” computing as a solution that combines the benefits of real-time and cloud-based computing. “In this scenario, the cloud is used for large compute and data aggregation responsibilities while edge nodes provide deterministic second-by-second analysis and decision making,” says Kevin Kleine, Product Manager, Vision. “This enables cloud benefits such as traceability, process optimization, raw image and result aggregation, deep neural network model training and system management to be implemented, without sacrificing the latency and determinism required for on-line pass-fail analysis.”

Such cloud systems aggregate data from edge compute nodes and can deploy algorithms or process updates back to edge systems with tight feedback loops. “In an ideal system, it would be possible to train a neural network at a set cadence (e.g. daily) in the cloud based on the most-up-to-date data collected from the edge nodes. A new model could then be deployed to all appropriate edge nodes to update them with the latest algorithm for their second-by-second compute and decision-making responsibilities,” Kleine says.
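The hybrid arrangement Kleine describes can be sketched in a few lines. The example below is a toy illustration only, not NI software: the `EdgeNode` class, the `retrain_in_cloud` function and the threshold "model" are hypothetical stand-ins for a real inspection algorithm and training process.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass
class EdgeNode:
    """Makes pass/fail decisions locally and buffers measurements for upload."""
    threshold: float
    buffer: List[float] = field(default_factory=list)

    def inspect(self, measurement: float) -> bool:
        self.buffer.append(measurement)        # kept for later aggregation
        return measurement <= self.threshold   # local decision, no network hop

    def flush(self) -> List[float]:
        """Hand the buffered measurements to the cloud and clear the buffer."""
        data, self.buffer = self.buffer, []
        return data


def retrain_in_cloud(batches: List[List[float]], margin: float) -> float:
    """Stand-in for model training: new threshold = mean of all data + margin."""
    all_values = [v for batch in batches for v in batch]
    return mean(all_values) + margin


nodes = [EdgeNode(threshold=1.0), EdgeNode(threshold=1.0)]
results = [nodes[0].inspect(0.8), nodes[0].inspect(1.4), nodes[1].inspect(0.9)]

# Periodic (for example, daily) aggregation, retraining and redeployment.
new_threshold = retrain_in_cloud([n.flush() for n in nodes], margin=0.5)
for n in nodes:
    n.threshold = new_threshold
```

The pass/fail decision never waits on the network; only the slower aggregation and retraining loop depends on the cloud.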

NI has developed several components that fit this architecture. While NI integrates cloud services, specifically Amazon Web Services (AWS) via the LabVIEW Cloud Toolkit, its SystemLink system management software provides centralized management capabilities for distributed test and measurement applications. Additionally, the company’s Industrial Controller line provides edge compute nodes optimized for machine vision.

“I don’t believe machine vision inspection processes will run in the cloud anytime soon,” says Mark Williamson, Managing Director of Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.com). “In an edge process such as a machine vision system, the results and/or failed images may be sent to the cloud. This is implemented using an OPC Unified Architecture (OPC-UA) to interface to a secure data platform that can interface to all cloud providers and also SAP-based factory control systems. Here, the goal of the cloud is to run analytics to identify trends and common failures and provide non-real-time trends. Factories will choose the platform they want and then expect machine vision systems to interface to them. Stemmer’s OPC-UA interface acts as a bridge to these options.”

Dr. Maximilian Lückenhaus, Director Marketing and Business Development with MVTec Software (Munich, Germany; www.mvtec.com), agrees with Kleine that latency is a major challenge for those implementing cloud computing in machine vision environments.

“Where tasks have to be completed in milliseconds, latency is extremely important. Since this can be overcome somewhat by greatly reducing the size of image data, the challenge is to compress images so that image processing algorithms still can extract relevant information.” In the future, automatic adaptive compression tasks may be used to address this issue. “Also,” he says, “many customers do not want sensitive data to be transmitted outside company networks and hence some use an on-site ‘private cloud’.”

MVTec’s software HALCON already allows customers to build their own cloud-based solution and the company is currently in contact with its customers to understand their future requirements. “While there is a trend towards PaaS,” Lückenhaus says, “MVTec will not focus on just one provider, therefore support for a variety of cloud providers such as Google and Microsoft is planned.”

Large data sets

Other vendors dealing with large amounts of image data that must be processed relatively quickly have been more forthcoming in embracing cloud-only concepts. “We take advantage of the cloud by leveraging its nearly endless data storage and extensive computing power,” says Ryan Bieber, Technical Marketing Engineer at Cyth Systems (San Diego, CA, USA; www.cyth.com). In the implementation of its Neural Vision software, the company offers a means to automatically recognize features such as defects. After images are uploaded to an AWS- or Google-based cloud server system, key features of the images are manually tagged (Figure 3).

Leveraging image-processing algorithms developed by NI to perform edge detection and color analysis on image features, results are then used by an image classifier to provide a weighted value that categorizes the object. “Once this process is complete,” says Bieber, “Cyth Systems and its client analyze the results to determine how accurately features have been recognized. If the results are satisfactory, the system will be deployed on the production line.”

Like Cyth’s software, the Matrox Imaging Library (MIL) from Matrox (Dorval, QC, Canada; www.matrox.com) is also being used to process large data sets in cloud-based systems. To do so, Matrox customers can license MIL to run on Azure, Microsoft’s cloud platform. One of these customers is providing a service that automatically inspects blueprint drawings that its clients upload to the cloud. Symbols on these drawings are then identified using MIL to generate a detailed content report, which is then provided to the customer’s clients.

The future of cloud-based machine vision and image processing systems depends on several variables: the installation of networks that can transport image data without excessive delay, jitter and packet loss; whether image data can be processed at acceptable speeds; and whether the results of such analysis can be used in a timely manner to control network-based systems such as robots, actuators, and lighting controllers.

“The stringent requirements in terms of latency, jitter and packet loss make general-purpose clouds inappropriate for real-time interactive multimedia services,” says Professor Luis López of the Universidad Rey Juan Carlos (Madrid, Spain; www.urjc.es). For this reason, the European Commission funded NUBOMEDIA (www.nubomedia.eu), an open-source PaaS that allows simple APIs to be used to configure computer vision applications.

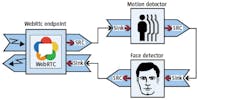

Figure 4: Combining a motion detector element and a face recognition element can be accomplished as a pipeline of elements. In this case, when motion is detected, the software attempts to detect faces in the image.

Built on Kurento and on Web Real-Time Communication (WebRTC) protocols and APIs, NUBOMEDIA allows computer vision algorithms to be configured using an API that abstracts the complexity of the underlying code. Combining a motion detector element and a face recognition element, for example, can be accomplished as a pipeline of elements (Figure 4). In this case, when motion is detected, the software attempts to detect faces in the image.
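The pipeline-of-elements idea can be sketched generically as follows. This is not the NUBOMEDIA or Kurento API; the class names, the frame representation (a flat list of grayscale values) and the stub face detector are all assumptions chosen to keep the example self-contained.

```python
from typing import Callable, List, Optional, Sequence

Frame = Sequence[int]  # flattened grayscale pixels (illustrative only)


class MotionDetector:
    """Passes a frame downstream only if it differs enough from the previous one."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.previous: Optional[Frame] = None

    def process(self, frame: Frame) -> Optional[Frame]:
        prev, self.previous = self.previous, frame
        if prev is None:
            return None  # nothing to compare against yet
        diff = sum(abs(a - b) for a, b in zip(frame, prev)) / len(frame)
        return frame if diff > self.threshold else None


class FaceDetector:
    """Wraps a user-supplied detection function (a stub in this sketch)."""

    def __init__(self, detect: Callable[[Frame], List[str]]) -> None:
        self.detect = detect

    def process(self, frame: Frame) -> List[str]:
        return self.detect(frame)


def run_pipeline(elements, frame):
    """Feed the frame through each element; stop as soon as one yields None."""
    data = frame
    for element in elements:
        data = element.process(data)
        if data is None:
            return None
    return data


# Stub detector: reports a "face" whenever a very bright pixel is present.
stub_detect = lambda f: ["face"] if max(f) > 200 else []
pipeline = [MotionDetector(threshold=5.0), FaceDetector(stub_detect)]

frames = [[10, 10, 10, 10], [10, 10, 10, 10], [10, 250, 250, 10]]
outputs = [run_pipeline(pipeline, f) for f in frames]
print(outputs)  # prints [None, None, ['face']]
```

Only the third frame differs from its predecessor, so it is the only one that reaches the face detector.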

Despite these advancements, much needs to be accomplished to make machine vision “in-the-cloud” a reality. Emerging smart FPGA-based cameras can perform on-board bad pixel correction and Bayer interpolation, standards such as GenICam describe such cameras’ properties in an XML Descriptor File, and high-speed fiber-optic interfaces are available for a number of such cameras.

To ensure that large images can be transferred effectively to host computers, high-speed, low-latency networks will be required to transmit such data to cloud-based systems. There, the systems integrator may require multiple general-purpose, distributed computers and configurable and partitionable FPGAs and GPU-based processors to perform image processing tasks. To determine whether real-time machine vision applications can be performed in such a manner, software tools need to be developed to determine the latency, jitter and packet loss of such systems. More importantly, even when such tools are developed, convincing those on the factory floor that handing such tasks to remote server-based systems is a good idea will not be an easy task.
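As a rough illustration of what such a tool might report, the sketch below derives latency, jitter and packet loss from a list of round-trip times. It makes several assumptions: lost probes are recorded as `None`, jitter is taken as the mean absolute difference between consecutive received round-trip times (one simple definition among several), and the sample values are invented.

```python
from statistics import mean
from typing import Dict, List, Optional


def link_stats(rtts_ms: List[Optional[float]]) -> Dict[str, float]:
    """Summarize probe results; None marks a probe that was never answered."""
    received = [r for r in rtts_ms if r is not None]
    if not received:
        raise ValueError("no probes were answered")
    # Jitter here: mean absolute change between consecutive received RTTs.
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    return {
        "latency_ms": mean(received),
        "jitter_ms": mean(diffs) if diffs else 0.0,
        "loss": (len(rtts_ms) - len(received)) / len(rtts_ms),
    }


samples = [20.0, 22.0, None, 21.0, 25.0]  # invented round-trip times in ms
stats = link_stats(samples)
print(stats)
```

For a pass/fail inspection task, the worst-case figures matter more than the averages, so a production tool would also need to track maxima or high percentiles.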

Companies and organizations mentioned

Amazon Web Services (AWS)

Seattle, WA, USA

https://aws.amazon.com/rekognition

Apache Software Foundation

Forest Hill, MD, USA

www.apache.org

Basler

Ahrensburg, Germany

www.baslerweb.com

Clarifai

New York, NY, USA

www.clarifai.com

Cloud Sight

Los Angeles, CA, USA

https://cloudsight.ai

Cyth Systems

San Diego, CA, USA

www.cyth.com

DiUS

Melbourne, Victoria, Australia

https://dius.com.au

Fiji/ImageJ

https://fiji.sc

Google

Mountain View, CA, USA

www.google.com

IBM

Armonk, NY, USA

www.ibm.com

Kurento

www.kurento.org

Matrox

Dorval, QC, Canada

www.matrox.com

Microsoft

Redmond, WA, USA

www.microsoft.com

MVTec Software

Munich, Germany

www.mvtec.com

NASA

Washington, DC, USA

www.nasa.gov

National Instruments

Austin, TX, USA

www.ni.com

NUBOMEDIA

www.nubomedia.eu

Open Commons Consortium

Chicago, IL, USA

www.occ-data.org

Optasia Systems

Geylang, Singapore

www.optasia.com.sg

Stemmer Imaging

Puchheim, Germany

www.stemmer-imaging.com

University of Central Florida

Orlando, FL, USA

www.cs.ucf.edu

University of Wisconsin-Madison

Madison, WI, USA

www.wisc.edu

Universidad Rey Juan Carlos

Madrid, Spain

www.urjc.es

The Walter and Eliza Hall Institute

Parkville, Victoria, Australia

www.wehi.edu.au

For more information about machine vision software companies and products, visit Vision Systems Design’s Buyer’s Guide at buyersguide.vision-systems.com.

About the Author

Andy Wilson

Founding Editor

Founding editor of Vision Systems Design. Industry authority and author of thousands of technical articles on image processing, machine vision, and computer science.

B.Sc., Warwick University

Tel: 603-891-9115

Fax: 603-891-9297