Technologies and tutorials highlight NIWeek

Latest developments from National Instruments include more support for machine vision applications and technology

Andrew Wilson, Editor

Although graphical system design was the theme of last year’s NIWeek, this year’s event appeared to be a melting pot of disparate ideas ranging from green engineering to multicore processing and graphical system design. That is not to say the three-day event was unimpressive. More than 3000 people gathered at the Austin, Texas, convention center in August to see demonstrations by more than 100 systems integrators and OEM suppliers, attend tutorials on machine vision, hear about the latest products from National Instruments (NI), and see some very interesting applications and future technology.

As usual, the first day of the event was kicked off by James Truchard, president of NI, who emphasized the importance of green engineering and described how the company’s LabVIEW products were being used to solve problems ranging from monitoring the efficiency of fuel injectors to monitoring climate change in the rain forest. Solving such problems requires analyzing petabytes of data using multicore processors and easy-to-program software.

Continuing the green theme, Tim Dehne, senior vice president of R&D, rode a bicycle onstage for his presentation and later used it to demonstrate the capabilities of the company’s latest wireless sensors (see “New products target machine vision, data acquisition, and RF,” p. 70, this issue). After introducing several of these products, Dehne showed how Kohji Ohbayashi and his colleagues at Kitasato University had developed an optical coherence tomography (OCT) system to detect cancer. OCT illuminates tissue with a low-power light source and captures the reflected light to create images in a fashion similar to ultrasound. According to Ohbayashi, this is the fastest OCT system in the world, achieving an axial scan rate of 60 MHz (http://sine.ni.com/cs/app/doc/p/id/cs-11321).

Multicore capability

Computations such as the FFT analysis used in the OCT system require high-speed multicore processors. Developers of machine-vision systems can also take advantage of these processors, and in the latest version of LabVIEW many image-processing functions have been optimized to run across multiple cores automatically.
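The FFT step in OCT processing can be sketched as follows. This is a minimal sketch under simplifying assumptions that do not come from the article: a Fourier-domain OCT system that samples each spectral interferogram evenly in wavenumber, a single reflector per scan, and the hypothetical helper names `depth_profiles` and `depth_profiles_parallel`. Each row of the input is one spectrum, and the magnitude of its FFT is a depth profile (A-scan). Because the rows are independent, they can be divided among cores and the results reassembled in order.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def depth_profiles(spectra: np.ndarray) -> np.ndarray:
    """Turn spectral interferograms (one per row) into depth profiles (A-scans).

    Simplified model: samples are assumed evenly spaced in wavenumber, so the
    FFT bin index is proportional to reflector depth.
    """
    # Remove the DC background so the reflector peak dominates
    ac = spectra - spectra.mean(axis=1, keepdims=True)
    # Magnitude of the real-input FFT along each row
    return np.abs(np.fft.rfft(ac, axis=1))


def depth_profiles_parallel(spectra: np.ndarray, workers: int = 4) -> np.ndarray:
    """Split the rows into blocks and process each block in its own thread."""
    blocks = np.array_split(spectra, workers, axis=0)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(depth_profiles, blocks))
    # Reassemble the blocks in their original order
    return np.vstack(results)


if __name__ == "__main__":
    n_samples, n_scans, reflector_bin = 1024, 200, 37
    n = np.arange(n_samples)
    # Synthetic interferogram: a single reflector produces a cosine fringe
    one_scan = 1.0 + 0.5 * np.cos(2 * np.pi * reflector_bin * n / n_samples)
    spectra = np.tile(one_scan, (n_scans, 1))
    profiles = depth_profiles_parallel(spectra)
    print(profiles.shape, profiles.argmax(axis=1)[0])
```

The peak of each profile lands at the bin chosen for the synthetic reflector. Threads only speed this up if the FFT routine releases Python’s global interpreter lock; a process pool is the usual alternative, and the split-process-reassemble pattern is the same either way.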

“Because vision algorithms such as convolution can be separated,” says Johann Scholtz, senior vision software engineer with NI, “these functions can be split across multiple cores.” In LabVIEW 8.6, partitioning functions such as convolution is transparent to the software developer: the software breaks the image data into sections, processes each section in a separate thread dispatched to a different core, and reassembles the processed image data afterward.
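The strip-partitioning idea can be sketched in Python. This is not NI’s implementation: the helper names are hypothetical, and the sketch assumes an odd-sized kernel and zero padding at the image border. The key detail is that each strip must borrow a few rows (the kernel radius) from its neighbors, so that pixels at strip boundaries are filtered exactly as they would be in a single-threaded pass.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def convolve2d_same(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Direct 2-D convolution (odd-sized kernel, zero padding, same-size output)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img.astype(float), ((ph, ph), (pw, pw)))
    out = np.zeros(img.shape, dtype=float)
    flipped = kernel[::-1, ::-1]  # convolution flips the kernel
    h, w = img.shape
    for dy in range(kh):
        for dx in range(kw):
            out += flipped[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def convolve2d_parallel(img: np.ndarray, kernel: np.ndarray, workers: int = 4) -> np.ndarray:
    """Split the image into horizontal strips, convolve each strip in its own
    thread, and reassemble. Each strip carries kernel-radius "halo" rows from
    its neighbors so boundary pixels see the same neighborhood as in one pass."""
    ph = kernel.shape[0] // 2
    h = img.shape[0]
    bounds = np.linspace(0, h, workers + 1).astype(int)

    def work(i: int) -> np.ndarray:
        top, bottom = bounds[i], bounds[i + 1]
        lo, hi = max(top - ph, 0), min(bottom + ph, h)  # strip plus halo rows
        result = convolve2d_same(img[lo:hi], kernel)
        return result[top - lo: top - lo + (bottom - top)]  # drop the halo rows

    with ThreadPoolExecutor(max_workers=workers) as pool:
        strips = list(pool.map(work, range(workers)))
    return np.vstack(strips)
```

For any number of workers, `convolve2d_parallel` should return the same image as `convolve2d_same`. A 2-D FIR image filter is this same operation with a particular kernel.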

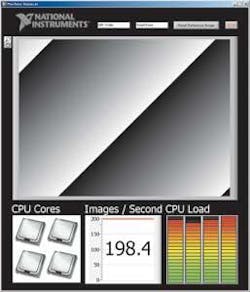

At NIWeek, Scholtz showed two applications that demonstrated this capability. In the first, an FIR filter was applied to images captured from a Gigabit Ethernet camera running at 50 frames/s. Deploying the same code on a four-core machine allowed the processing rate to almost triple (see Fig. 1). In the second, Scholtz showed how developers could build simple “green-screen” systems using a Gigabit Ethernet camera, LabVIEW 8.6, and multicore processors.

To accomplish this, a background reference image, or mask, is first stored. Subtracting this reference from each live image separates the foreground from the background, and an alternative image can then be placed in the background. Although this process requires a large amount of processing on every frame, Scholtz’s demonstration showed that it could be accomplished in real time using a quad-core processor.
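The subtract-and-replace step can be sketched with NumPy. The function name and the threshold value are hypothetical choices for illustration, not details from Scholtz’s demonstration, and a practical system would also have to cope with sensor noise, shadows, and lighting changes. Because each pixel is handled independently, a frame could also be split across cores with no overlap between the sections.

```python
import numpy as np


def replace_background(live: np.ndarray, reference: np.ndarray,
                       new_background: np.ndarray, threshold: int = 30) -> np.ndarray:
    """Swap in new_background wherever the live frame matches the stored reference.

    Works on grayscale (H, W) or color (H, W, C) uint8 frames of equal shape.
    """
    # Signed arithmetic so the subtraction cannot wrap around in uint8
    diff = np.abs(live.astype(np.int16) - reference.astype(np.int16))
    if diff.ndim == 3:
        diff = diff.max(axis=2)  # foreground if any channel changed enough
    foreground = diff > threshold  # boolean (H, W) mask
    if live.ndim == 3:
        foreground = foreground[..., np.newaxis]  # broadcast over channels
    # Keep live pixels in the foreground, substitute the new background elsewhere
    return np.where(foreground, live, new_background)
```

The stored reference plays the role of the mask described above; everything that differs from it by more than the threshold is treated as foreground and kept.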

Real-time systems

While parallel processing played a major part in the demonstrations at NIWeek, the company also announced products that allow developers to use LabVIEW to customize hardware for real-time applications. Matt DeVoe, a reconfigurable I/O (RIO) hardware engineer, described NI’s PXI-based FlexRIO FPGA module and the adapter boards used with it to develop embedded systems. To allow developers to take advantage of this board, NI and others are developing a number of I/O modules. At the conference, NI showed a prototype digital module that allows parallel data to be transferred to and from the board.

To show how this can be used to develop a simple imaging system, the company demonstrated the FlexRIO and an LVDS adapter module attached to a low-cost CMOS camera and a small LCD. “Because LabVIEW FPGA allows the developer to configure the FPGA,” says DeVoe, “the timing and data signals from the camera can be configured to allow captured images to be stored on the 128 Mbytes of memory on the FlexRIO board and then displayed on the LCD.” To highlight the image-processing capability of the FPGA, DeVoe showed how LabVIEW could be used to program the FPGA to perform a 3-D rotation of the captured image data, a task requiring 40 million matrix multiplies per second.
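A 3-D rotation of an image amounts to one small matrix-vector product per pixel, which is why the workload is quoted in matrix multiplies per second. The sketch below is a hypothetical illustration, not DeVoe’s FPGA design: it rotates each pixel’s (x, y, 0) coordinate about the image center in floating point, whereas an FPGA version would more likely use fixed-point arithmetic and would also need projection and resampling steps to display the result, both omitted here. The article does not give the image size or frame rate behind the 40-million figure, so `products_per_second` simply shows how such a rate scales.

```python
import numpy as np


def rotation_matrix(ax: float, ay: float, az: float) -> np.ndarray:
    """3x3 rotation matrix: rotate about x, then y, then z (angles in radians)."""
    cx, sx = np.cos(ax), np.sin(ax)
    cy, sy = np.cos(ay), np.sin(ay)
    cz, sz = np.cos(az), np.sin(az)
    rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return rz @ ry @ rx


def rotate_pixels(width: int, height: int, r: np.ndarray) -> np.ndarray:
    """Rotate the (x, y, 0) position of every pixel about the image center.

    Returns a (height * width, 3) array; each row is one 3x3 matrix-vector product.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    points = np.stack([xs.ravel() - (width - 1) / 2,
                       ys.ravel() - (height - 1) / 2,
                       np.zeros(width * height)], axis=1)
    return points @ r.T  # row-wise equivalent of r @ p for every point p


def products_per_second(width: int, height: int, frames_per_s: float) -> float:
    """One matrix-vector product per pixel per frame."""
    return width * height * frames_per_s
```

Because every pixel’s product is independent of the others, the work parallelizes naturally, which is what makes it a good showcase for the many multipliers available in an FPGA.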

In addition to demonstrating image capture, NI showed a prototype data acquisition I/O board that uses two 10-MSPS, 16-bit ADCs from Analog Devices. Designed in about one month, the 250-Mbit/s board was developed to allow NI engineers to evaluate the capabilities of the ADC. After acquiring data at these rates, DeVoe used the Virtex-5 FPGA on the FlexRIO board to compute 10,000 unique fixed-point 8k-point FFTs/s on the incoming data (see Fig. 2).

Other vendors are already offering third-party I/O boards for the FlexRIO. Averna, for example, now offers an interface board that allows 1394b FireWire cameras to stream image data to the FlexRIO’s FPGA. Such developments are likely to lead to greater adoption of FPGAs in embedded systems, and designs developed on the FlexRIO may then be ported to the company’s NI Single-Board RIO-based systems.

Bigger is better

Attendees who thought small was beautiful, however, were in for a shock on the final day of the conference, when Mike Cerna, senior software engineer, described one of the biggest imaging projects on Earth. “The European Southern Observatory’s (ESO) Very Large Telescope (VLT),” says Cerna, “is designed to image planets that are approximately 170 million light years from Earth and 40 million times fainter than what the human eye can see.” Impressive as the VLT is, the ESO is now building an even larger telescope, the Extremely Large Telescope (ELT). Its primary (M1) mirror, approximately the size of half a football field, will be composed of 984 segments, each 1.5 m in diameter (see Fig. 3). To image objects light years away, each of these segments must be aligned to within 10 nm.

To accomplish this, each of the approximately 1000 segments is mounted with three motion actuators and six sensors that measure its position. The sensor data must be read and used to reposition the segments within 2 ms. “This rather large motion control problem,” says Cerna, “can only be solved by parallel processing techniques.”

To solve this 3k × 6k matrix-vector multiplication problem, Cerna and his colleagues first developed the control system in LabVIEW and simulated it on an octal-core processor, attaining a 2-ms execution time. “However,” says Cerna, “the ELT also uses an adaptive mirror, known as M4, that compensates for wavefront aberrations caused by the Earth’s atmosphere. This mirror uses 8000 actuators that must be controlled in a similar manner, making the task 15X more computationally intensive.” At present, Cerna is working with a rack of 16 octal-core board-level processors from Dell to reach the 2-ms positioning requirement of the ELT’s M4 mirror.
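The arithmetic behind this control problem, and the way it splits across cores, can be sketched as follows. The sketch assumes, as an illustration rather than a description of ESO’s or NI’s software, that each update is a single dense matrix-vector product mapping roughly 6000 sensor readings to roughly 3000 actuator commands; the function names are hypothetical. With 3000 × 6000 = 18 million multiply-accumulates per update and one update every 2 ms, the loop needs about 9 × 10^9 multiply-accumulates per second. Because each actuator command depends only on its own row of the matrix, the rows can be divided among cores and the partial results concatenated.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def macs_per_second(n_outputs: int, n_inputs: int, period_s: float) -> float:
    """Multiply-accumulates per second for one dense matrix-vector product per period."""
    return n_outputs * n_inputs / period_s


def control_update(m: np.ndarray, sensors: np.ndarray, workers: int = 8) -> np.ndarray:
    """Actuator commands = m @ sensors, with m's rows split across worker threads."""
    row_blocks = np.array_split(m, workers, axis=0)  # views, no copies
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial = list(pool.map(lambda block: block @ sensors, row_blocks))
    # Each block yields the commands for its own rows; join them in order
    return np.concatenate(partial)


if __name__ == "__main__":
    # ~3000 actuators x ~6000 sensors, updated every 2 ms (figures from the article)
    rate = macs_per_second(3000, 6000, 2e-3)
    print(f"{rate / 1e9:.1f} billion multiply-accumulates per second")
    rng = np.random.default_rng(1)
    m, s = rng.standard_normal((300, 600)), rng.standard_normal(600)  # small demo size
    assert np.allclose(control_update(m, s), m @ s)
```

Splitting by rows requires no communication between workers until the final concatenation, which is one reason this kind of problem maps well onto multicore machines and racks of them.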

While the ELT control system was simulated using a CAD model of the telescope, integrating CAD packages such as SolidWorks from Dassault Systèmes within LabVIEW was the subject of a futuristic demonstration by Mike Johansen, a senior software engineer with NI. Johansen showed a three-axis positioning system designed to test the functions of a mobile telephone. After the system was first modeled in SolidWorks, LabVIEW was used to simulate control of the machine and calculate parameters such as system load, forces, and torque.
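As a rough illustration of the kind of calculation involved, the load force and motor torque for one axis can be estimated from a motion profile. This is a textbook-style sketch, not the LabVIEW/SolidWorks workflow Johansen showed: it assumes a horizontal axis driven by a lead screw, ignores motor and screw inertia, and every number in the example (mass, acceleration, lead, efficiency, friction, and profile timing) is hypothetical.

```python
import math


def axis_force(mass_kg: float, accel_m_s2: float, friction_coeff: float = 0.0,
               g: float = 9.81) -> float:
    """Force (N) to accelerate a horizontal axis load against sliding friction."""
    return mass_kg * accel_m_s2 + friction_coeff * mass_kg * g


def screw_torque(force_n: float, lead_m: float, efficiency: float = 1.0) -> float:
    """Motor torque (N*m) needed to produce force_n through a lead screw."""
    return force_n * lead_m / (2 * math.pi * efficiency)


def rms_torque(segments: list[tuple[float, float]]) -> float:
    """RMS torque over a move cycle given (torque_nm, duration_s) segments."""
    total_time = sum(duration for _, duration in segments)
    return math.sqrt(sum(t * t * d for t, d in segments) / total_time)


if __name__ == "__main__":
    # Hypothetical axis: 2-kg carriage, 10-mm lead, 90% efficient screw
    mass, lead, eff, mu = 2.0, 0.010, 0.9, 0.05
    accel = 5.0  # m/s^2 during the ramps of a trapezoidal move
    t_acc = screw_torque(axis_force(mass, accel, mu), lead, eff)
    t_run = screw_torque(axis_force(mass, 0.0, mu), lead, eff)
    t_dec = screw_torque(axis_force(mass, -accel, mu), lead, eff)
    profile = [(t_acc, 0.1), (t_run, 0.3), (t_dec, 0.1), (0.0, 0.2)]
    print(f"peak {t_acc:.4f} N*m, rms {rms_torque(profile):.4f} N*m")
```

Peak torque (from the acceleration segment) and RMS torque over the cycle are the two figures typically compared against a motor’s peak and continuous ratings when choosing components.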

“These,” suggests Johansen, “can then be used to evaluate components such as motors that will be used in the final design.” While this concept offered attendees insight into where NI will position its future products, one could only speculate when imaging functions such as virtual cameras, lenses, and lighting would also be integrated into such models. This topic was addressed by Robert Tait of General Electric’s Global Research Center in his keynote presentation at the Vision Summit held concurrently with NIWeek (see “Design for inspection keynotes NIWeek,” p. 28, this issue).

Self-timed FPGAs

Those wondering about the future direction of such technology should have listened to the speech given by Jeff Kodosky, NI co-founder, on the last day of the show. Speaking of Intel’s forthcoming Larrabee chip, a hybrid CPU/GPU (http://en.wikipedia.org/wiki/Larrabee_(GPU)), Kodosky implied that some of the device’s features, such as rasterized graphics, may be leveraged by future generations of LabVIEW. Perhaps as important, Kodosky said, would be the development of more powerful FPGAs that allow designers to build integrated ALUs, multipliers, and CPUs in a sea of gates. At present, FPGAs provide no support for self-timed design. Without a global clock constraint, however, placement, routing, and partitioning would be simplified, so self-timed FPGAs could improve the performance of embedded algorithms or reduce mapping times.
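Self-timed logic replaces the global clock with local request/acknowledge handshakes between stages. A common building block for such handshakes is the Muller C-element, modeled behaviorally below. This is a generic textbook illustration of self-timed design, not something Kodosky presented or a feature of any current FPGA tool flow; the class name is hypothetical.

```python
class CElement:
    """Muller C-element: the output copies the inputs when they agree and
    otherwise holds its previous value. Self-timed circuits use it to join
    handshake signals from several stages without a global clock."""

    def __init__(self, initial: int = 0) -> None:
        self.out = initial

    def update(self, a: int, b: int) -> int:
        # Change state only when both inputs have reached the same level
        if a == b:
            self.out = a
        return self.out


if __name__ == "__main__":
    c = CElement()
    # Two upstream stages signal completion at different times
    for a, b in [(1, 0), (1, 1), (0, 1), (0, 0)]:
        print(a, b, "->", c.update(a, b))
```

The output rises only after both inputs have risen and falls only after both have fallen, so a downstream stage proceeds exactly when its inputs are ready rather than on a clock edge timed for the slowest path.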

As with every NIWeek, there was much more than machine vision and image processing both at the conference and on the show floor. Exhibits ranged from data acquisition and motion control to RF test and robotics systems, all of which used LabVIEW as an integrated software environment for test, development, and deployment. Those who could not attend NIWeek can find new product data and applications on the company’s Web site. Video highlights of some of the Vision Summit presentations are available on the Vision Systems Design video library (www.vision-systems.com/resource/video.html).

New products target machine vision, data acquisition, and RF

Software extends multicore performance

LabVIEW 8.6 includes more than 1200 analysis functions optimized for math and signal processing on multicore systems. Vision applications can use image-processing functions included in the Vision Development Module to distribute data sets automatically across multiple cores. Test engineers can also develop applications to test wireless devices with the Modulation Toolkit, and control system engineers can execute simulation models in parallel using the company’s Control Design and Simulation Module.

Smart cameras feature on-board DSP

After last year’s release of its first two smart cameras, NI has developed three more (the 1744, 1762, and 1764) that increase the speed and resolution of the company’s initial offerings. Powered by a 533-MHz PowerPC, the 1744 features a 1280 × 1024-pixel sensor, while the 1762 and 1764 couple the PowerPC processor with a 720-MHz TI DSP to speed pattern matching and OCR. Data Matrix algorithms are also optimized for the DSP, which improves the performance of these specific algorithms by 300% to 400%. The 1762 features a 640 × 480-pixel image sensor, while the 1764 features the larger 1280 × 1024 imager.

Signal generator and analyzer run at 6.6 GHz

Automated RF and wireless test environments often require signals to be both generated and analyzed at frequencies above 6 GHz. To meet such demands, the PXIe-5663 6.6-GHz RF vector signal analyzer performs signal analysis from 10 MHz to 6.6 GHz with up to 50 MHz of instantaneous bandwidth, while the PXIe-5673 generates signals from 85 MHz to 6.6 GHz with up to 100 MHz of instantaneous bandwidth. These instruments are complemented by the PXIe-1075, an 18-slot PXI Express chassis with PCI Express lanes routed to every slot, providing up to 1 Gbyte/s of per-slot bandwidth and up to 4 Gbytes/s of total system bandwidth.

RIO migrates to a single board

NI has announced eight reconfigurable I/O (RIO) boards, programmable with LabVIEW graphical tools, that will allow designers to embed custom designs in small-form-factor systems. Featuring a 266-MHz or 400-MHz Freescale MPC5200 processor, Wind River’s VxWorks RTOS, and a Xilinx Spartan-3 FPGA, the eight boards differ in processor speed, onboard memory configuration, FPGA options, and the number of onboard digital and analog I/O channels. Onboard analog and digital I/O connect directly to the FPGA, allowing customization of timing and I/O signal processing. Developers can expand I/O capabilities using the boards’ three expansion slots, which accept any NI C Series I/O module or custom modules. More than 60 C Series modules provide inputs for acceleration, temperature, power quality, controller-area network (CAN), motion detection, and more.

Data acquisition devices go wireless

Remote or hazardous environments require sensors that can transmit their data over wireless networks. Recognizing this, NI has developed no fewer than 10 Wi-Fi and Ethernet data acquisition devices that connect to electrical, physical, mechanical, and acoustic sensors and offer IEEE 802.11 wireless or Ethernet communication. The Wi-Fi DAQ devices can stream data at more than 50 kS/s per channel with 24-bit resolution, using built-in NIST-approved 128-bit AES encryption. The Wi-Fi and Ethernet DAQ devices are offered with DAQmx driver software and LabVIEW SignalExpress LE data-logging software for acquiring, analyzing, and presenting data.

Company Info

Analog Devices, Norwood, MA, USA

www.analog.com

Averna, Montreal, QC, Canada

www.averna.com

Dassault Systèmes, Concord, MA, USA

www.solidworks.com

Dell, Round Rock, TX, USA

www.dell.com

Freescale, Austin, TX, USA

www.freescale.com

GE Global Research Center, Niskayuna, NY, USA

www.ge.com

Intel, Santa Clara, CA, USA

www.intel.com

Kitasato University, Kanagawa, Japan

www.kitasato-u.ac.jp

National Instruments, Austin, TX, USA

www.ni.com

Texas Instruments, Dallas, TX, USA

www.ti.com

Wind River, Alameda, CA, USA

www.windriver.com

Xilinx, San Jose, CA, USA

www.xilinx.com