“Dual-color” IR camera debuts

At the 2005 SPIE Defense and Security Symposium (Orlando, FL, USA), QmagiQ (Nashua, NH, USA; www.qmagiq.com) showed a prototype of its “dual-color” infrared (IR) camera (see Fig. 1). Based on a 320 × 256 dual-color or dual-wavelength detector array, the device can detect IR images in both the 4-5-μm medium-wavelength IR (MWIR) and 8-9-μm long-wavelength IR (LWIR) spectral bands simultaneously and on a pixel-registered basis. According to Mani Sundaram, president of QmagiQ, this makes the device especially useful in military applications that previously required two different IR detectors.

QmagiQ engineers first developed and demonstrated this technology in 1997 while at Lockheed Martin (Bethesda, MD, USA; www.lockheedmartin.com). Since that time, other companies, including AIM-Infrarot Module (AIM; Heilbronn, Germany; www.aim-ir.com), DRS Infrared Technologies (Parsippany, NJ, USA; www.drs.com), Thales-Optem (Fairport, NY, USA; www.thales-optem.com), Raytheon (Waltham, MA, USA; www.raytheon.com), Rockwell Automation (Milwaukee, WI, USA; www.rockwellautomation.com), BAE Systems (Rockville, MD, USA; www.baesystems.com), and NASA Jet Propulsion Laboratory (Pasadena, CA, USA; www.jpl.nasa.gov), have pursued the technology. “To the best of our knowledge, we are the first to actually show up with a working prototype in public,” says Sundaram.

QmagiQ has fabricated a monolithic device that consists of two stacked InGaAs/GaAs/AlGaAs quantum-well layers. “Controlling the thicknesses and compositions of the layers in the two stacks allows IR detection at dual wavelengths,” says Axel Reisinger, chief technical officer and cofounder of QmagiQ. In the voltage-readout circuitry, a different bias is applied to each detector layer, and the integration time of each layer can be controlled separately and accurately. “Each 40 × 40-μm dual-layer pixel is fabricated in series, resulting in perfect spatial and temporal registration of the two images,” says Reisinger.

Developing a dual-wavelength IR imager has other benefits. “Taking the ratio of the signals in the two bands yields the absolute temperature of every pixel in the image, provided the emissivity of the target is the same in both spectral bands,” says Sundaram. Because this condition generally holds, absolute temperature can be measured without any need for external calibration, which makes self-calibrating IR cameras possible. A readout integrated circuit (ROIC) reads out the signal from each detector element on the focal-plane array (FPA) individually. The FPA is flip-chip bonded to the ROIC through indium bumps fabricated on contact holes, one per pixel, on both the detector chip and the ROIC.
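The ratio principle can be illustrated with Planck's law. The following is a minimal two-color (ratio) thermometry sketch, not QmagiQ's actual processing: it assumes an ideal detector with a flat spectral response across each band (the 4-5-μm and 8-9-μm bands named above), no atmospheric absorption, and a signal proportional to in-band radiance. Because a graybody's emissivity scales both band signals by the same factor, it cancels in the ratio, and the ratio-versus-temperature curve (which rises monotonically) can be inverted by bisection. The function names and the 150-1500 K search range are illustrative choices.

```python
import math

# Physical constants (SI, exact 2019 values)
H = 6.62607015e-34    # Planck constant, J*s
C = 2.99792458e8      # speed of light, m/s
K_B = 1.380649e-23    # Boltzmann constant, J/K

MWIR_BAND = (4.0, 5.0)  # um, band edges from the article
LWIR_BAND = (8.0, 9.0)  # um, band edges from the article


def planck_radiance(wavelength_m: float, temp_k: float) -> float:
    """Blackbody spectral radiance B(lambda, T) in W / (m^2 * sr * m)."""
    prefactor = 2.0 * H * C**2 / wavelength_m**5
    x = H * C / (wavelength_m * K_B * temp_k)
    return prefactor / math.expm1(x)


def band_radiance(lo_um: float, hi_um: float, temp_k: float, steps: int = 200) -> float:
    """Integrate Planck radiance over [lo_um, hi_um] with the trapezoidal rule.

    Assumes a flat (ideal) detector response inside the band.
    """
    lo, hi = lo_um * 1e-6, hi_um * 1e-6
    dx = (hi - lo) / steps
    total = 0.5 * (planck_radiance(lo, temp_k) + planck_radiance(hi, temp_k))
    for i in range(1, steps):
        total += planck_radiance(lo + i * dx, temp_k)
    return total * dx


def band_ratio(temp_k: float) -> float:
    """MWIR/LWIR in-band radiance ratio; a graybody emissivity cancels out."""
    return band_radiance(*MWIR_BAND, temp_k) / band_radiance(*LWIR_BAND, temp_k)


def temperature_from_ratio(ratio: float, t_lo: float = 150.0, t_hi: float = 1500.0) -> float:
    """Invert the monotonically increasing ratio curve by bisection."""
    for _ in range(60):
        mid = 0.5 * (t_lo + t_hi)
        if band_ratio(mid) < ratio:
            t_lo = mid   # ratio too small: target is hotter than mid
        else:
            t_hi = mid   # ratio too large: target is cooler than mid
    return 0.5 * (t_lo + t_hi)
```

For example, a graybody with emissivity 0.8 at 400 K produces band signals 20% lower than a blackbody, but the same ratio, so `temperature_from_ratio` still recovers 400 K. A real camera would replace the flat-band integrals with the measured spectral response of each detector layer.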

QmagiQ used an off-the-shelf Dewar and electronics from SE-IR (Goleta, CA, USA; www.seir.com) to demonstrate a prototype dual-color camera. SE-IR, a manufacturer of IR imaging systems for evaluation, test, and operation of staring IR FPAs, sells a cryogenic liquid-nitrogen Dewar assembly for cooling the FPA, clock driver electronics, bias voltage supplies, analog signal-conditioning electronics, A/D converters, and a linear analog power supply. The analog signal-conditioning electronics adjust signal offset and gain before A/D conversion, so the ROIC output can be digitized over the full dynamic range of the converters.
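Why offset and gain matter can be shown with a little arithmetic. This is a minimal sketch, not SE-IR's circuitry: it assumes a hypothetical ROIC output swing of 1.2-1.8 V and a hypothetical 0-2 V A/D input range, then computes the linear gain and offset that stretch the swing across the full input range of a 14-bit converter.

```python
def conditioning_coefficients(v_min: float, v_max: float,
                              adc_lo: float, adc_hi: float) -> tuple[float, float]:
    """Return (gain, offset) so that gain * v + offset maps [v_min, v_max]
    onto the A/D input range [adc_lo, adc_hi]."""
    gain = (adc_hi - adc_lo) / (v_max - v_min)
    offset = adc_lo - gain * v_min
    return gain, offset


def digitize(v: float, gain: float, offset: float,
             adc_lo: float, adc_hi: float, bits: int = 14) -> int:
    """Condition a voltage, then quantize it to an unsigned code, clipping at the rails."""
    full_scale = (1 << bits) - 1          # 16383 for a 14-bit converter
    y = gain * v + offset                 # analog conditioning stage
    code = round((y - adc_lo) / (adc_hi - adc_lo) * full_scale)
    return min(max(code, 0), full_scale)


# Hypothetical operating point: ROIC swings 1.2-1.8 V, A/D accepts 0-2 V.
gain, offset = conditioning_coefficients(1.2, 1.8, 0.0, 2.0)
unconditioned_span = digitize(1.8, 1.0, 0.0, 0.0, 2.0) - digitize(1.2, 1.0, 0.0, 0.0, 2.0)
conditioned_span = digitize(1.8, gain, offset, 0.0, 2.0) - digitize(1.2, gain, offset, 0.0, 2.0)
```

Fed straight into the converter, the 0.6-V swing would use only about 4,900 of the 16,384 available codes; after conditioning it spans all of them, roughly a 3.3-fold gain in usable resolution.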

After signal conditioning, two 14-bit A/D cards digitize the signals from the two channels (MWIR and LWIR) of each detector site (see Fig. 2). The digital output is then multiplexed into a single 16-bit video data stream and transferred to a DSP board that performs real-time, pixel-by-pixel correction. To normalize response and offset across the array, each pixel receives a two-point linear correction with its own coefficients. The corrected pixel data are then pipelined into pixel-reordering hardware that can remap any pixel to any other location in the image.
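A two-point linear correction of this kind is commonly derived from two calibration frames. The sketch below is the textbook two-point non-uniformity correction, not necessarily how QmagiQ derives its coefficients: it assumes linear pixel response and two hypothetical frames recorded while the FPA views a uniform source at a low and a high radiance level. Each pixel gets its own gain and offset so that both calibration levels map to the array mean; pixels with no usable response are flagged as bad.

```python
from statistics import fmean


def two_point_coefficients(low_frame: list[float], high_frame: list[float]):
    """Per-pixel (gains, offsets, bad) from two uniform-scene calibration frames."""
    responsive = [i for i, (lo, hi) in enumerate(zip(low_frame, high_frame)) if hi > lo]
    if not responsive:
        raise ValueError("no responsive pixels in calibration frames")
    # Targets: array-mean response at each level, over responsive pixels only.
    target_lo = fmean(low_frame[i] for i in responsive)
    target_hi = fmean(high_frame[i] for i in responsive)

    gains, offsets, bad = [], [], []
    for i, (lo, hi) in enumerate(zip(low_frame, high_frame)):
        if hi > lo:
            g = (target_hi - target_lo) / (hi - lo)   # normalizes responsivity
            gains.append(g)
            offsets.append(target_lo - g * lo)        # normalizes dark offset
        else:
            # Dead or saturated pixel: hold a constant, leave it to bad-pixel replacement.
            gains.append(0.0)
            offsets.append(target_lo)
            bad.append(i)
    return gains, offsets, bad


def correct(raw: list[float], gains: list[float], offsets: list[float]) -> list[float]:
    """Apply corrected = gain * raw + offset to every pixel."""
    return [g * x + o for x, g, o in zip(raw, gains, offsets)]
```

For instance, three pixels with true gains 1.0, 1.2, and 0.9 and offsets 100, 80, and 130 counts read 2100, 2480, and 1930 at an intermediate flux; after correction all three read 2170, the midpoint of the two calibration targets.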

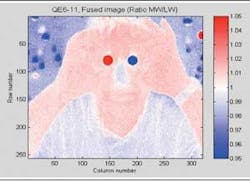

“This performs bad-pixel replacement by substituting a nearest good neighbor pixel,” says Sundaram. After pixel reordering, image data are transferred to a PCI-based frame grabber from Coreco Imaging (St. Laurent, QC, Canada; www.imaging.com) for image display. “Using some simple software,” says Sundaram, “it is possible to set up, control, analyze, and display image data from the FPA. These functions include pixel-uniformity correction, image subtraction, frame rate and integration time, and image display” (see Fig. 3).
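The pixel-reordering hardware described above behaves like a lookup table: output pixel i takes its value from input pixel table[i]. The sketch below is a software analogue under that assumption, not the actual hardware design. Good pixels map to themselves and each bad pixel maps to its nearest good neighbor (squared Euclidean distance, ties broken by raster-scan order). The brute-force search is acceptable here because the table is built once, offline, and only the cheap `apply_remap` step runs per frame.

```python
def build_remap_table(width: int, height: int, bad_pixels: set[int]) -> list[int]:
    """Lookup table mapping each output pixel index to a source pixel index."""
    table = list(range(width * height))           # identity for good pixels
    for idx in bad_pixels:
        row, col = divmod(idx, width)
        best, best_dist = None, None
        for j in range(width * height):
            if j in bad_pixels:
                continue
            r, c = divmod(j, width)
            dist = (r - row) ** 2 + (c - col) ** 2
            if best_dist is None or dist < best_dist:  # first minimum in scan order wins
                best, best_dist = j, dist
        if best is None:
            raise ValueError("no good pixels available for replacement")
        table[idx] = best
    return table


def apply_remap(frame: list[int], table: list[int]) -> list[int]:
    """Reorder a raster-scanned frame through the lookup table."""
    return [frame[src] for src in table]


# 3 x 3 frame whose center pixel (index 4) is bad.
frame = [10, 20, 30, 40, 999, 60, 70, 80, 90]
fixed = apply_remap(frame, build_remap_table(3, 3, {4}))
# fixed == [10, 20, 30, 40, 20, 60, 70, 80, 90]
```

Because the same table mechanism accepts any mapping, arbitrary remaps such as a horizontal mirror (`table = [r * 3 + (2 - c) for r in range(3) for c in range(3)]`) run through the identical `apply_remap` step.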

“Although the prototype camera currently uses numerous boards to capture, process, and display image data, these could easily be integrated into a single system to produce a modular hand-held dual-wavelength camera,” Sundaram says.