Robotic System Loads Bobbins in Textile Manufacturing Process

A manufacturer of textiles used in protective workwear and security coverings for doors and windows is implementing an autonomous robotic system to load spools, or bobbins, onto coil creels, which are large frames designed to hold yarn so it can be unwound easily.

In the current manual process, an employee drives a forklift to a storage area to pick up a pallet of bobbins and then drives it to the creel area. Once there, the employee picks up the bobbins and loads them onto the pins, or mandrels, of the creels, which are lined up in rows.

This is a continuous process because the creels are reloaded after the yarn they hold is used in textile manufacturing. It’s also physically demanding work: a creel is very large, holding up to 1,200 bobbins, and each bobbin weighs 10–20 kg. As Philipp Herpich, software engineer at ONTEC Automation GmbH (Naila, Germany; www.ontec-automation.de/en/), creator of the robotic system, explains, “They have to carry heavy loads the whole day.”

To relieve its employees of the monotony and physical stress of loading creels, the manufacturer wanted to automate the process. That is why the 50-employee company has been working with ONTEC on a project using an autonomously driving robotic assistance system, which ONTEC calls the Smart Robot Assistant.

Robotic Assistance

The Smart Robot Assistant is customized for each use case and comprises an autonomous vehicle, flexible robot arm, 3D stereo camera system, PLC, and software.

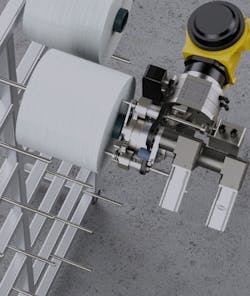

The robot drives autonomously and can pick up pallets directly from the ground without external help. The robotic arm can lift loads as heavy as 45 kg, while the pallet lifter on the vehicle platform can lift 1,200 kg. ONTEC custom built the vehicle, and the robotic arm is from FANUC (Oshino-mura, Yamanashi Prefecture, Japan; www.fanuc.eu/pl/en).

“It can be used for normal intralogistics purposes, like transporting a pallet from A to B,” explains Herpich. In addition, the robotic arm can accomplish many other tasks such as picking up items from pallets, loading items on machinery, automating optical inspection, and scanning product codes.

In the case of the textile manufacturer, the robot drives to a storage area, picks up a pallet of bobbins, and drives the pallet to the creel area. Once there, the gripper on the robotic arm is positioned vertically above the pallet, so it can pick up a bobbin on the pallet and load it on a mandrel of a creel.

How it Works

To launch the process, an employee gives the robot an order via a graphical user interface (GUI) on a tablet computer. Master control software on a server translates commands into specific tasks for the Smart Robot Assistant. (This software would also divide the work among multiple robots if more than one were deployed.)
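The translation from an order into robot tasks can be pictured with a minimal sketch. Everything named here (`Task`, `plan_order`, `dispatch`, the action strings, and the round-robin policy) is a hypothetical illustration, not ONTEC's master control software, whose internals the article does not describe.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Task:
    robot: str
    action: str
    target: str


def plan_order(pallet_location: str, creel: str, robot: str) -> list[Task]:
    """Expand one 'load creel' order into the four steps the article describes."""
    return [
        Task(robot, "drive_to", pallet_location),
        Task(robot, "pick_up_pallet", pallet_location),
        Task(robot, "drive_to", creel),
        Task(robot, "load_bobbins", creel),
    ]


def dispatch(orders: list[tuple[str, str]], robots: list[str]) -> list[Task]:
    """Assign orders to robots in turn (round-robin is an assumed policy)."""
    tasks: list[Task] = []
    for (pallet_location, creel), robot in zip(orders, cycle(robots)):
        tasks.extend(plan_order(pallet_location, creel, robot))
    return tasks


# Hypothetical orders: (pallet storage location, creel to load).
orders = [("storage-A", "creel-1"), ("storage-B", "creel-2"), ("storage-A", "creel-3")]
tasks = dispatch(orders, ["robot-1", "robot-2"])
for task in tasks:
    print(task)
```

With a single robot in the list, as in the current deployment, every order simply goes to that robot.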

SPSComm, ONTEC’s industrial communication software, transmits information among the tablet; the server; the industrial controller and its software, which are located on the vehicle; the robotic arm; and the camera system, which is mounted on the robotic arm.

The controller is an S7 1500 from Siemens AG (Munich, Germany; www.siemens.com/global/en.html). ONTEC’s software engineers developed the software programs.

Once the system receives an order, the vehicle picks up and loads a pallet onto its bed and then drives to the creel area. So that the vehicle can maneuver around the factory, its laser scanners are first used to map the environment manually, and the vehicle then uses the same scanners to navigate by recognizing objects in that environment.

The vehicle then switches to a second navigation system in the creel area of the factory. A scanner located under the vehicle reads Data Matrix codes (similar to QR codes) embedded in tape on the ground to find a precise location in front of a creel. “Every code on this tape is an exact position,” Herpich says.
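The idea that every code is an exact position can be sketched as a lookup table. The code payloads, the 50-mm spacing, and the function names below are assumptions made for illustration; only the 1-mm tolerance comes from the article, which cites it as the required positioning accuracy.

```python
# Hypothetical table: decoded Data Matrix payload -> position along the tape in mm.
CODE_POSITIONS_MM = {"C0001": 0.0, "C0002": 50.0, "C0003": 100.0, "C0004": 150.0}


def offset_to_target(decoded: str, read_offset_mm: float, target_mm: float) -> float:
    """Return the distance still to travel in mm (positive means forward).

    read_offset_mm is the assumed distance between the code's center and the
    scanner's center at the moment the code was read.
    """
    current_mm = CODE_POSITIONS_MM[decoded] + read_offset_mm
    return target_mm - current_mm


def in_position(remaining_mm: float, tolerance_mm: float = 1.0) -> bool:
    """True when the vehicle is within the tolerance of the target spot."""
    return abs(remaining_mm) <= tolerance_mm


far = offset_to_target("C0003", 2.5, target_mm=150.0)   # still well short of the target
near = offset_to_target("C0004", 0.4, target_mm=150.0)  # slightly past the target
print(far, in_position(far))
print(near, in_position(near))
```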

Loading the Creels

After the vehicle is in the correct spot, a 3D camera produces a point cloud depicting the top layer of the pallet, including all the bobbins on that layer and their precise locations.

The camera—an Ensenso 3D camera (N45 series) from IDS Imaging Development Systems GmbH (Obersulm, Germany; www.ids-imaging.com)—has a working distance of 330 to 3,000 mm and a maximum field of view (FOV) of 3,970 mm. The camera’s global shutter CMOS sensor has a resolution of 1280 × 1024 pixels (1.3 MPixels).

The Ensenso model from IDS includes an integrated projector, which projects a high-contrast texture onto an object, supplementing a weak or non-existent surface structure on the object. On its website, IDS says this process results in “a more detailed disparity map and a more complete and homogeneous depth information of the scene” than would be possible with stereo vision alone.

ONTEC uses the 3D point cloud to calculate the coordinates of the center of each bobbin. These coordinates become the basis for the instructions that tell the robot to pick up a bobbin and put it on a mandrel of the creel.
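As a rough illustration of turning a point cloud into bobbin centers, the sketch below keeps only the points near the top of the pallet, groups them by proximity, and averages each group. This is not the HALCON-based method ONTEC developed; the greedy clustering, the parameter names, and the assumption that z is height in mm are all stand-ins chosen for brevity.

```python
from math import hypot

Point = tuple[float, float, float]  # (x, y, z) in mm; z assumed to be height


def bobbin_centers(
    points: list[Point], layer_band_mm: float, radius_mm: float
) -> list[tuple[float, float]]:
    """Estimate one (x, y) center per bobbin on the top layer."""
    # Keep only points within layer_band_mm of the highest point.
    top_z = max(z for _, _, z in points)
    top = [(x, y) for x, y, z in points if top_z - z <= layer_band_mm]

    # Greedy clustering: join the first cluster whose centroid is close enough.
    clusters: list[list[tuple[float, float]]] = []
    for x, y in top:
        for cluster in clusters:
            cx = sum(p[0] for p in cluster) / len(cluster)
            cy = sum(p[1] for p in cluster) / len(cluster)
            if hypot(x - cx, y - cy) <= radius_mm:
                cluster.append((x, y))
                break
        else:
            clusters.append([(x, y)])

    return [
        (sum(p[0] for p in c) / len(c), sum(p[1] for p in c) / len(c))
        for c in clusters
    ]


# Synthetic data: four rim points for each of two bobbins, plus one lower point.
cloud = [
    (90, 100, 300), (110, 100, 300), (100, 90, 300), (100, 110, 300),
    (390, 100, 300), (410, 100, 300), (400, 90, 300), (400, 110, 300),
    (250, 100, 50),
]
centers = bobbin_centers(cloud, layer_band_mm=20, radius_mm=60)
print(centers)  # [(100.0, 100.0), (400.0, 100.0)]
```

A production system would likely fit the known bobbin geometry rather than average raw points, since a partial view of a bobbin shifts a simple centroid away from the true center.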

Once the robot finishes unloading a layer of bobbins, the camera takes another picture, leading to another set of coordinates and a signal to remove the cardboard that separates layers of bobbins. After that task is complete, the camera takes a picture of the next bobbin layer. This process is repeated until an entire pallet is empty.
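The layer-by-layer cycle can be sketched as a simple loop. The function name, the log messages, and the assumption that a cardboard separator sits only between layers (not under the bottom one) are illustrative choices, not details confirmed by the article.

```python
def unload_pallet(layers: list[list[str]]) -> list[str]:
    """Return the ordered action log for emptying a pallet, top layer first."""
    log: list[str] = []
    remaining = [list(layer) for layer in layers]  # copy so the input is untouched
    while remaining:
        layer = remaining.pop(0)
        # Capture of the current layer yields one pick target per bobbin.
        log.append(f"capture: {len(layer)} bobbins detected")
        for bobbin in layer:
            log.append(f"load {bobbin}")
        if remaining:
            # The next capture shows an empty layer, which signals separator removal.
            log.append("capture: layer empty, remove cardboard separator")
    log.append("pallet empty")
    return log


log = unload_pallet([["b1", "b2"], ["b3"]])
for entry in log:
    print(entry)
```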

If the robot accidentally drops a bobbin, this will be visible when the camera takes a picture of the pallet and creates the next point cloud.

To compute the coordinates and write instructions for loading bobbins, ONTEC’s software engineers used HALCON from MVTec (Munich, Germany; www.mvtec.com), an integrated software-development environment used to create vision applications.

Challenges

As in any project, the engineers overcame numerous challenges during implementation.

Creating complete point clouds in low-light situations is one such challenge. Tim Böckel, who also is a software engineer at ONTEC, explains, “The problem is sometimes when you have very bad lighting, it can be that there are many non-valid points in the point cloud that cannot be computed correctly. So basically, the space in the point cloud is empty, and this can lead to problems when computing the coordinates.”

Böckel says minor tweaks to the settings of the camera’s interface allow the team to compensate for different lighting conditions occurring in different rooms and throughout a day. “With that, we were able to get relatively stable results,” he says.
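One way to guard against the empty regions Böckel describes is to measure how much of a capture is valid before computing coordinates. The sketch below assumes invalid points arrive as NaN coordinates, a common convention that the article does not confirm for this camera; the 0.8 threshold and the function names are likewise hypothetical.

```python
from math import isnan, nan

Point = tuple[float, float, float]


def valid_fraction(points: list[Point]) -> float:
    """Share of points whose three coordinates are all real numbers."""
    if not points:
        return 0.0
    valid = sum(1 for p in points if not any(isnan(c) for c in p))
    return valid / len(points)


def usable(points: list[Point], min_valid: float = 0.8) -> bool:
    """True when enough of the capture is valid to compute coordinates from it."""
    return valid_fraction(points) >= min_valid


# Synthetic capture: one of four points could not be computed.
capture = [(0.0, 0.0, 300.0), (1.0, 0.0, 300.0), (nan, nan, nan), (2.0, 0.0, 300.0)]
print(valid_fraction(capture), usable(capture))  # 0.75 False
```

A capture that fails the check could be retaken with adjusted camera settings rather than passed on to the coordinate calculation.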

A second challenge was maneuvering the vehicle within the rows of creels, which is what necessitated the handoff between navigation approaches. The problem: the autonomous system could not find the correct spot in front of a mandrel because everything within those rows looks the same, Herpich says, adding that, in essence, the vehicle would get lost.

By using the tape with embedded codes, “the vehicle does always know where it is in relation to the creels,” Herpich says. This is important because the robot needs to be positioned within 1 mm of an exact spot to pick up a bobbin and load it on a mandrel, he further explains.

The Project’s Goal

The textile manufacturer wants to create a completely autonomous system to load creels, including on Sunday—the one day per week that the plant is closed. In this way, production could begin as soon as employees arrive for work on Monday morning. One vehicle and robot arm—which can load up to 1,000 bobbins per shift—provides enough capacity to handle the current workload.
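The figures quoted in the article allow a back-of-the-envelope check of what that capacity means. The calculation below uses only numbers stated in the text (1,200 bobbins per creel at most, 10–20 kg per bobbin, up to 1,000 bobbins per shift) and treats all of them as upper bounds; the variable names are illustrative.

```python
BOBBINS_PER_CREEL = 1_200   # maximum creel capacity, from the article
BOBBINS_PER_SHIFT = 1_000   # stated upper bound for one vehicle and arm
BOBBIN_MASS_KG = (10, 20)   # stated weight range per bobbin

# Shifts needed to load one completely empty, maximum-size creel.
shifts_per_creel = BOBBINS_PER_CREEL / BOBBINS_PER_SHIFT

# Total mass handled in a shift at the lightest and heaviest bobbin weights.
mass_per_shift_kg = tuple(m * BOBBINS_PER_SHIFT for m in BOBBIN_MASS_KG)

print(f"Shifts per full creel: {shifts_per_creel}")
print(f"Mass handled per shift: {mass_per_shift_kg[0]}-{mass_per_shift_kg[1]} kg")
```

At the stated maximum rate, one full creel takes a little more than a shift, and the robot handles between 10 and 20 metric tons per shift, the same load employees currently carry by hand.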

Currently, the project is in an introductory stage. “Every time they use it, there is still someone watching that everything works out,” Herpich says.

Employees are adjusting to the idea of working around a large autonomous machine that is 3.5 m long and weighs 4,000 kg. “You really need some time to get comfortable with having it around yourself,” Herpich explains. “It’s almost a bit scary when it drives around.”

About the Author

Linda Wilson

Editor in Chief

Linda Wilson joined the team at Vision Systems Design in 2022. She has more than 25 years of experience in B2B publishing and has written for numerous publications, including Modern Healthcare, InformationWeek, Computerworld, Health Data Management, and many others. Before joining VSD, she was the senior editor at Medical Laboratory Observer, a sister publication to VSD.