Researchers use neuromorphic imaging to build smart sensors


Andrew Wilson

Editor at Large

Many machine-vision systems combine off-the-shelf cameras, frame grabbers, image processors, and software to perform visual inspection, diagnosis, and identification. Unlike the human visual system, these systems often acquire large amounts of data that must be processed before identification or recognition can take place. Fortunately, powerful microprocessors and digital-signal processors can now process large amounts of digital data at high speed. Despite these advances, however, image-processing systems still fall short of the human visual system, which processes analog, not digital, imaging data in a more efficient but still poorly understood fashion.

"Throughout the last decade, researchers have sought to understand the biological circuits and principles underlying the visual systems of flies, frogs, cats, monkeys, and human beings," says Christof Koch, professor of computation and neural systems at the California Institute of Technology (CIT; Pasadena, CA). "At the same time, the complexity of circuits that can be fabricated on a single chip has increased," he adds.

Capitalizing on these gains, researchers have built integrated circuits (ICs) that mimic the neurobiological circuits involved in visual processing. Carver Mead, the Gordon and Betty Moore Professor of Engineering and Applied Science at CIT, calls such circuits neuromorphic ICs. Neuromorphic imaging sensors combine arrays of photoreceptors with analog circuits at each picture element (pixel) to emulate the human retina. In this way, the sensors can adapt locally to vast changes in brightness, detect edges, respond to temporal changes, and detect motion.

"Until recently, such vision chips were laboratory curiosities," says Koch. "But now they are powerful enough to be used in a variety of products. And, in the long term, the principles of neuromorphic design will enable machines to interact with the environment and humans, not through a keyboard or a magnetic-strip card, but with the help of robust, cheap, small, and real-time sensory systems," he says.

Mimicking biology

"Although the study of biological models can reveal algorithms for target acquisition and tracking, image-motion measurements, and three-dimensional (3-D) navigation, direct VLSI implementation is virtually impossible because the silicon real estate required is usually large," says Ralph Etienne-Cummings, assistant professor of electrical and computer engineering at Johns Hopkins University (Baltimore, MD). "Nonetheless, their computational characteristics can be easily mapped onto VLSI hardware. Developing algorithms that transform biological models into VLSI allows many visually guided tasks to be performed at the focal plane and replaces conventional high-speed computers and dumb sensors with compact, inexpensive, real-time systems," he adds.

These systems can perform logarithmic preamplification of image intensity, temporal-gain adaptation, edge detection, and 2-D motion detection at the imaging plane. Such sensors have already been developed at several institutions, including Johns Hopkins, CIT, and the Institute of Neuroinformatics (Zürich, Switzerland).
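Logarithmic preamplification and temporal-gain adaptation can be illustrated with a short behavioral model. The sketch below is not a circuit simulation of any of these chips. It assumes a photoreceptor whose output is the logarithm of intensity minus a slowly adapting level, with a hypothetical adaptation rate `alpha`. Because of the logarithm, a twofold brightness step produces the same transient whether the scene is dim or bright, and the response then decays as the receptor adapts.

```python
import math


def adaptive_log_receptor(intensities, alpha=0.05):
    """Behavioral model of an adaptive logarithmic photoreceptor.

    intensities: positive light intensities sampled over time.
    alpha: hypothetical adaptation rate per sample (0 < alpha <= 1).
    Returns the transient output: log intensity minus the adapted level.
    """
    adapted = math.log(intensities[0])  # start adapted to the first sample
    out = []
    for intensity in intensities:
        level = math.log(intensity)            # logarithmic compression
        out.append(level - adapted)            # respond to contrast, not absolute level
        adapted += alpha * (level - adapted)   # slow first-order adaptation
    return out


# A twofold step in dim light and in light 1,000 times brighter.
dim = adaptive_log_receptor([1.0] * 5 + [2.0] * 50)
bright = adaptive_log_receptor([1000.0] * 5 + [2000.0] * 50)
print(round(dim[5], 4), round(bright[5], 4))  # 0.6931 0.6931 (ln 2 in both cases)
print(round(dim[-1], 4))  # much smaller: the receptor has adapted to the new level
```

The same relative change gives the same output at very different absolute brightness, which is the property that lets such pixels cope locally with large illumination differences.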

In a collaboration between Johns Hopkins and the Neural Net System Laboratory at Boston University (Boston, MA), Etienne-Cummings and his colleagues have developed processors that perform computationally intensive vision tasks for boundary contour segmentation and pattern classification. These ICs implement neuromorphic architectures by emulating the information-processing techniques of the visual cortex and other parts of the human brain.

Based on biologically inspired models, Etienne-Cummings has developed a VLSI implementation of a boundary contour system. At each pixel, the system integrates the functions of simple and complex cells in each of three directions, interconnected on a hexagonal grid. Spatial convolution is implemented with a network of laterally coupled MOS transistors, which provides an exponentially tapered convolution kernel that approximates a Gaussian. The convolution is computed along four orientations, with nearest-neighbor connections along vertical, horizontal, and diagonal lines.
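The exponentially tapered kernel follows from the physics of a diffusive network. The sketch below assumes that each laterally coupled transistor acts as a linear conductance G between neighboring nodes and that each node leaks to ground through a conductance g (both hypothetical values). It is a 1-D resistive-grid model, not the chip's actual current-mode circuit. Solving the node equations for a single-pixel input gives a response that falls off by a constant ratio r per pixel, where r is the smaller root of G r² − (g + 2G) r + G = 0.

```python
import math


def diffuse(inputs, g=1.0, G=4.0):
    """Node voltages of a 1-D resistive (diffusive) network.

    Each node leaks to ground through conductance g and couples to its
    neighbors through lateral conductance G (both hypothetical values).
    Solves g*v[i] + G*(v[i] - v[i-1]) + G*(v[i] - v[i+1]) = inputs[i]
    with the Thomas algorithm for tridiagonal systems.
    """
    n = len(inputs)
    # Diagonal term: leak plus one G per existing neighbor (ends have one).
    diag = [g + G * ((i > 0) + (i < n - 1)) for i in range(n)]
    off = -G        # sub- and super-diagonal coefficient
    c = [0.0] * n   # modified super-diagonal
    d = [0.0] * n   # modified right-hand side
    c[0] = off / diag[0]
    d[0] = inputs[0] / diag[0]
    for i in range(1, n):  # forward sweep
        m = diag[i] - off * c[i - 1]
        c[i] = off / m
        d[i] = (inputs[i] - off * d[i - 1]) / m
    v = [0.0] * n
    v[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        v[i] = d[i] - c[i] * v[i + 1]
    return v


impulse = [0.0] * 41
impulse[20] = 1.0  # current injected at the center pixel
v = diffuse(impulse)
g, G = 1.0, 4.0
r = ((g + 2 * G) - math.sqrt((g + 2 * G) ** 2 - 4 * G * G)) / (2 * G)
print(round(v[21] / v[20], 4), round(v[25] / v[24], 4), round(r, 4))  # 0.6096 0.6096 0.6096
```

Increasing G relative to g pushes r toward 1 and widens the kernel, so the ratio of lateral to leak conductance sets the spatial extent of the smoothing.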

In the IC, analog current-mode CMOS circuits perform edge detection. Each pixel contains 88 transistors and integrates a phototransistor, a sample-and-hold capacitor, and three networks of interconnections in each of the three directions. Figure 1 illustrates the IC's interpolated directional response to a curved edge whose direction varies between two of the principal axes.
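Figure 1's interpolated response can be illustrated with an idealized model. The model assumes that each of the three directional channels responds to the edge gradient projected onto one hexagonal axis (0°, 60°, 120°). Under that assumption, an edge whose orientation falls between two axes excites both channels in proportion. The orientation estimator is an added illustration, not a documented feature of the IC. It combines the squared channel outputs in a doubled-angle vector sum, which recovers the edge angle exactly for three axes spaced 60° apart.

```python
import math

AXES_DEG = (0.0, 60.0, 120.0)  # assumed principal axes of the hexagonal grid


def directional_responses(edge_normal_deg):
    """Idealized per-axis response to a straight, unit-contrast edge.

    Each channel responds to the magnitude of the edge gradient projected
    onto its axis, |cos(theta - axis)|.
    """
    theta = math.radians(edge_normal_deg)
    return [abs(math.cos(theta - math.radians(a))) for a in AXES_DEG]


def estimate_orientation(responses):
    """Recover the edge-normal angle (mod 180 deg) from the three responses.

    Doubled-angle vector sum of squared responses; for three axes 60 deg
    apart this is exact for an ideal straight edge.
    """
    x = sum(r * r * math.cos(math.radians(2 * a))
            for r, a in zip(responses, AXES_DEG))
    y = sum(r * r * math.sin(math.radians(2 * a))
            for r, a in zip(responses, AXES_DEG))
    return math.degrees(math.atan2(y, x)) / 2 % 180


# Sweep the edge between the 0 and 60 deg axes, as along a curved edge.
for angle in (10, 20, 30, 40, 50):
    r = directional_responses(angle)
    print(angle, [round(v, 3) for v in r], round(estimate_orientation(r), 1))
```

At 30°, halfway between two axes, the 0° and 60° channels respond equally (0.866 each) and the 120° channel is silent.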

Boundary contour segmentation and edge detection are just two of the areas being studied by researchers. Tobi Delbrück, a research scientist at the Institute of Neuroinformatics, has developed a neuromorphic IC that measures the sharpness of images. At the recent Intelligent Vision '99 conference in San Jose, CA (see Vision Systems Design, Aug. 1999, p. 17), Delbrück described an IC that could be placed in the image plane of a camera system to provide an absolute measure of image sharpness.

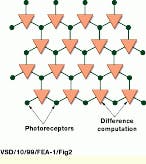

In this device, the pixels are arranged in a hexagonal array (see Fig. 2). Each pixel includes an adaptive photoreceptor and a three-input antibump circuit that computes the absolute differences among three adjacent adaptive photoreceptors. "When the imager views sharp images, the differences between pixels are larger," says Delbrück. "This allows the devices to be used in such applications as autofocusing systems." Using similar architectures, Delbrück has fabricated other smart sensors, including a motion-detecting IC and a door-opener sensor. "Although such ICs represent the low end of vision-system complexity," says Delbrück, "they can compute useful image metrics that would otherwise require a more complex, traditional approach."
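The sharpness measure, and its use for autofocusing, can be illustrated in software. This is a minimal sketch under several assumptions, none of them taken from the chip. Each "triangle" of three pixels (r, c), (r, c+1), (r+1, c) on a square grid stands in for a hexagonal neighborhood. Squared differences stand in for the antibump circuit's nonlinearity: in this simplified model, a plain sum of absolute differences across a monotone blurred edge equals the total edge contrast, so it would not distinguish sharp from blurred. Defocus is simulated with a horizontal box blur, and `best_focus` is a hypothetical helper that picks the sharpest of several frames.

```python
def triangle_sharpness(img):
    """Sum of squared pairwise differences over small pixel triangles.

    img: list of equal-length rows of floats. Each triangle is
    (r, c), (r, c+1), (r+1, c): a square-grid stand-in for a hexagonal
    neighborhood.
    """
    total = 0.0
    for r in range(len(img) - 1):
        for c in range(len(img[0]) - 1):
            a, b, d = img[r][c], img[r][c + 1], img[r + 1][c]
            total += (a - b) ** 2 + (b - d) ** 2 + (a - d) ** 2
    return total


def blur_rows(img, radius):
    """Horizontal box blur with clamped borders, used to simulate defocus."""
    w = len(img[0])
    out = []
    for row in img:
        new_row = []
        for c in range(w):
            window = [row[min(max(k, 0), w - 1)]
                      for k in range(c - radius, c + radius + 1)]
            new_row.append(sum(window) / len(window))
        out.append(new_row)
    return out


def best_focus(frames):
    """Index of the frame with the highest sharpness score."""
    scores = [triangle_sharpness(f) for f in frames]
    return max(range(len(frames)), key=scores.__getitem__)


edge = [[0.0] * 8 + [1.0] * 8 for _ in range(8)]   # ideal vertical edge
frames = [blur_rows(edge, k) for k in (3, 2, 0, 1, 4)]  # a simulated focus sweep
print(best_focus(frames))  # 2: the unblurred frame scores highest
```

An autofocus loop would step the lens, read the sharpness score at each position, and stop at the maximum, which is the role the article suggests for the sensor.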

Open eyes

Modeling the behavior of the superior colliculus, the part of the brain that orients the eyes and head toward sensory stimuli, is the goal of Timothy Horiuchi and Christof Koch, researchers in the Computation and Neural Systems Program at CIT. Horiuchi (now a postdoctoral researcher at the Zanvyl Krieger Mind-Brain Institute at Johns Hopkins) and Koch have already built a hardware model of the primate oculomotor system that uses analog VLSI circuits to map the location of a visual temporal change to a motor command voltage. Triggered by visual stimuli, their system uses an array of adaptive photoreceptor circuits that drives a temporal-derivative circuit, which responds when the intensity of a region of the image changes.

To map retinal positions to motor output commands, the chip contains a one-dimensional array of photoreceptors and floating-gate circuits. The temporal-derivative circuit combines a low-pass filter with a bump circuit to signal the absolute value of the temporal derivative, and a centroid circuit computes a weighted-average voltage that is used for motor control.
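The signal path just described (absolute temporal derivative, then centroid, then motor voltage) can be sketched in a few lines. This is a discrete-time behavioral model, not the chip's circuit. The temporal derivative is approximated as the absolute difference between each pixel and a low-pass-filtered copy of itself (hypothetical coefficient `alpha`). The command voltage is assumed to be proportional to the centroid's offset from the array center (hypothetical gain `volts_per_pixel`).

```python
def temporal_change(frames, alpha=0.3):
    """|x - lowpass(x)| per pixel after the last frame.

    frames: list of 1-D intensity arrays, oldest first.
    alpha: hypothetical low-pass filter coefficient.
    """
    lowpass = list(frames[0])
    change = [0.0] * len(lowpass)
    for frame in frames[1:]:
        change = [abs(x - lp) for x, lp in zip(frame, lowpass)]
        lowpass = [lp + alpha * (x - lp) for x, lp in zip(frame, lowpass)]
    return change


def centroid(activity):
    """Activity-weighted mean pixel index, or None if nothing changed."""
    total = sum(activity)
    if total == 0:
        return None
    return sum(i * a for i, a in enumerate(activity)) / total


def motor_command(activity, volts_per_pixel=0.1):
    """Signed voltage proportional to the target's offset from the array center."""
    c = centroid(activity)
    if c is None:
        return 0.0
    center = (len(activity) - 1) / 2
    return (c - center) * volts_per_pixel


static = [1.0] * 16
moved = static[:]
moved[11] = moved[12] = 2.0  # a target appears right of center
activity = temporal_change([static] * 5 + [moved])
print(centroid(activity), round(motor_command(activity), 3))  # 11.5 0.4
```

Because only changing pixels contribute, a static background produces no command, and the centroid naturally averages over a target that spans several pixels.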

The system consists of the photoreceptor IC, an eye-movement generator IC that drives the motors, and an auditory localization system that triggers movements toward auditory targets. The photoreceptor IC, which detects local changes in image intensity, is moved by two motors (see photo on p. 35). These motors are driven by a controller IC that mimics the neural circuits found in the brain stem of primates and is used to center the photoreceptor on the target. In addition, two microphones allow the system to orient toward auditory targets. According to the researchers, this auditory system models the sound-localization mechanism of the barn owl.
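The article does not describe how the auditory system computes direction. Barn owls localize sound in azimuth largely from interaural time differences, so the sketch below assumes a comparable scheme. It finds the delay between two microphone signals by cross-correlation and converts that delay to an angle. The sample rate, microphone spacing, and speed of sound are illustrative values, and nothing here is taken from the CIT hardware.

```python
import math
import random


def estimate_itd(left, right, max_lag):
    """Lag in samples that maximizes the cross-correlation of two signals.

    A positive result means the right signal lags the left one, i.e. the
    sound reached the left microphone first.
    """
    n = len(left)
    best_lag, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[i] * right[i + lag]
                    for i in range(n) if 0 <= i + lag < n)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def azimuth_deg(lag, sample_rate_hz, mic_spacing_m, speed_of_sound=343.0):
    """Convert a time difference to a source angle (0 deg = straight ahead)."""
    s = lag / sample_rate_hz * speed_of_sound / mic_spacing_m
    return math.degrees(math.asin(max(-1.0, min(1.0, s))))


rng = random.Random(0)
left = [rng.uniform(-1.0, 1.0) for _ in range(400)]  # broadband noise burst
delay = 5
right = [0.0] * delay + left[:-delay]  # same sound, arriving 5 samples later
lag = estimate_itd(left, right, max_lag=20)
print(lag)  # 5
print(round(azimuth_deg(lag, 48_000, 0.2), 1))  # 10.3 (deg toward the left microphone)
```

Broadband sounds such as noise give a single clear correlation peak, and the resulting angle could then serve as the target for the same motor controller that handles visual targets.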

"Beyond our effort to understand neural systems by building large-scale, physically embodied biological models," says Koch, "adaptive, analog, VLSI sensory-motor systems can be applied to many commercial and industrial applications involving self-calibrating actuation systems. For mobile robotics or remote sensing, these circuits will be invaluable."

Robots that see

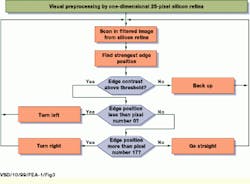

"Interesting systems that interact with the natural environment in real time can be built by interfacing neuromorphic sensors with traditional digital devices," says Giacomo Indiveri, research assistant at the Institute of Neuroinformatics. One of the first systems to use digital technology in conjunction with an analog VLSI neuromorphic retina is the Koala robot from K-Team (Préverenges, Switzerland). The analog neuromorphic sensor developed at the institute provides the robot with a parallel solution for preprocessing sensory information. A controller algorithm, programmed into the robot`s CPU, uses the data processed by the sensor to perform a line-following task (see Fig. 3).

Containing a one-dimensional array of 25 pixels, the analog VLSI chip used in the robot implements a model of the outer layer of the vertebrate retina. In the array, neighboring pixels are coupled through both excitatory and inhibitory connections. "These lateral interactions between pixels produce properties resembling those of the neurons present in the first stages of the vertebrate visual pathway," says Indiveri. "As a result, the overall system performs an operation that corresponds to a convolution with an approximation of the Laplacian of a Gaussian function, a common operation used in machine vision to extract edges," he adds.
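In discrete form, the operation Indiveri describes is a convolution of the 25 pixel values with a center-surround kernel. The sketch below computes that convolution digitally, whereas the chip obtains it from analog excitatory and inhibitory coupling. The kernel width (`sigma`), its radius, and the edge-clamped border handling are hypothetical choices. An edge shows up as a zero crossing between a negative and a positive response.

```python
import math


def mexican_hat(sigma=1.5, radius=4):
    """Sampled 1-D negated Laplacian of Gaussian, shifted to sum to zero.

    Positive center (excitation), negative flanks (inhibition). sigma and
    radius are hypothetical choices, not the chip's parameters.
    """
    k = [(1 - (x / sigma) ** 2) * math.exp(-x * x / (2 * sigma * sigma))
         for x in range(-radius, radius + 1)]
    mean = sum(k) / len(k)
    return [v - mean for v in k]  # zero sum: uniform input gives zero output


def convolve_clamped(signal, kernel):
    """Convolve with a symmetric kernel, repeating edge pixels at the borders."""
    r = len(kernel) // 2
    n = len(signal)
    return [sum(kernel[j] * signal[min(max(i + j - r, 0), n - 1)]
                for j in range(len(kernel)))
            for i in range(n)]


pixels = [0.2] * 12 + [0.8] * 13  # 25 pixels containing a dark-to-bright step
response = convolve_clamped(pixels, mexican_hat())
edge = next(i for i in range(len(response) - 1)
            if response[i] < -1e-6 and response[i + 1] > 1e-6)
print(edge, edge + 1)  # 11 12: the zero crossing marks the step
```

The small tolerance in the zero-crossing search ignores the rounding noise that uniform regions produce, so only a genuine negative-to-positive transition counts as an edge.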

The Koala robot uses a Motorola 68331 processor with 1 Mbyte of RAM and can run autonomously on battery power. For the vision system, Koala's designers mounted an 8-mm lens with a maximum aperture of f/2 (marked 1:2) directly on the sensor's socket. Attached to the front of the robot with its lens tilted toward the ground at approximately 45°, the sensor can image features located 20 cm away. The outputs of the retina's pixels are fed to one of six analog input ports and digitized to 8 bits.

"To track the strongest edge detected by the silicon retina, software was cross-compiled for the 68331 processor and uploaded over a serial link," says Indiveri. "Once the software was resident in the robot's RAM, the serial connection was removed, and the robot operated autonomously."

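The Fig. 3 caption describes the tracking rule: look for edges above a threshold, then turn left or right according to where the edge pixel lies on the sensor. A minimal sketch of that rule follows. The pixel ordering (pixel 0 on the robot's left), the dead zone around the center, the signed responses, and the "search" result when no edge passes the threshold are all assumptions, not details of the K-Team or Institute of Neuroinformatics software.

```python
def steer(responses, threshold, dead_zone=1):
    """Left/right decision from one line of retina edge responses.

    responses: signed edge strengths, one per pixel; pixel 0 is assumed
    to be on the robot's left. threshold and dead_zone are hypothetical.
    """
    n = len(responses)
    strongest = max(range(n), key=lambda i: abs(responses[i]))
    if abs(responses[strongest]) < threshold:
        return "search"  # no edge above threshold (hypothetical fallback)
    offset = strongest - (n - 1) / 2  # pixels from the array center
    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "straight"


line = [0] * 25
line[3] = 90  # strong edge seen near the left end of the array
print(steer(line, threshold=40))  # left
```

The dead zone keeps the robot from oscillating when the line sits near the middle of the 25-pixel array.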
Neuromorphic circuits such as those from CIT, Johns Hopkins, and the Institute of Neuroinformatics are still at an early stage of development. Even so, researchers have demonstrated that, despite a limited understanding of the human visual process, such devices can perform edge detection, autofocusing, and robot guidance. Future advances in neurobiological research are expected to deepen the understanding of both the human visual system and higher-level brain function. Neuromorphic-system developers are then expected to incorporate these advances in silicon and come closer to emulating human vision.

Researchers at the California Institute of Technology have built a hardware model of the primate oculomotor system that can map the location of a visual temporal change to a motor command voltage. The system consists of a photoreceptor IC, an eye-movement generator IC that drives the motors, and an auditory localization system that triggers motion toward auditory targets. The photoreceptor IC, which detects local changes in image intensity, is moved by two motors.

FIGURE 1. By implementing spatial convolution using a network of laterally coupled MOS transistors, sensor devices such as those developed at Johns Hopkins University and the Neural Net System Laboratory at Boston University can find boundaries within images.

FIGURE 2. To measure image sharpness, pixels are arranged in a hexagonal array, and each pixel uses an adaptive photoreceptor and a circuit that delivers the absolute differences between neighboring photoreceptors. As the images presented to the imager become sharper, the pixel differences grow larger, which makes the device useful in autofocusing systems.

FIGURE 3. The Koala robot from K-Team performs tracking by using an analog VLSI sensory processor developed at the Institute of Neuroinformatics and a microcontroller. The tracking algorithm searches for edges above a certain threshold and then makes left/right decisions depending on the position of the edge pixel in the sensor.

Company Information

Boston University

Boston, MA 02215

(617) 353-2000

Web: www.bu.edu/

California Institute of Technology

Pasadena, CA 91125

(626) 395-6811

Web: www.caltech.edu/

Institute of Neuroinformatics

Zürich, Switzerland

(+41) 1 635 3052

Fax: (+41) 1 635 3053

Web: www.ini.unizh.ch/

Johns Hopkins University

Baltimore, MD 21218

(410) 516-8000

Web: www.jhu.edu/

K-Team

Préverenges, Switzerland

(+41) 21 802 54 72

Fax: (+41) 21 802 54 71


Web: www.k-team.com/

Motorola Inc.

Schaumburg, IL 60196

(800) 668-6765

Web: www.motorola.com