CCD cameras embed imaging algorithms

To increase performance, camera vendors are embedding image-processing and machine-vision algorithms within their cameras.

By Andrew Wilson, Editor

Because of their low cost, added functionality, and increasingly easy programming, field-programmable gate arrays (FPGAs) have become a mainstay of today’s CCD cameras. By incorporating functions such as Bayer interpolation, flat-field correction, and bad-pixel correction within these devices, camera designers can relieve the host computer of such compute-intensive tasks, increasing the speed of machine-vision systems. Today, many CCD cameras use FPGAs to allow these functions to be performed in a pipelined fashion in real time as images are captured. Better yet, some camera vendors are teaming with high-level imaging-software companies to allow more sophisticated image-analysis functions to be performed within these cameras.

When evaluating any specific CCD camera for machine-vision applications, system integrators should be mindful of many of the functions that can be performed within the camera itself. While many currently available CCD cameras use FPGAs in their designs, often these are used just to provide camera timing, triggering, and Ethernet, Camera Link, or USB connectivity. In the simplest designs, such cameras provide raw data over these interfaces and any pre- or postprocessing of the imaging data must be provided by a frame grabber (which often also incorporates an FPGA) or by the host processor itself. Because these cameras do not provide added functionality, they can be an inexpensive way of digitizing images, especially in applications where a frame grabber or host-processor combination can be used.

In many applications, however, embedding added functionality within the camera can prove beneficial, especially when implementing low-level point, area, resampling, or color-correction algorithms. Such algorithms readily lend themselves to the pipelined nature of FPGA logic and, as such, give camera developers a means of running these functions an order of magnitude (or more) faster than simply offloading them to a host CPU.

Before choosing a camera that features these algorithms, it is necessary to understand both the algorithms and the benefits of choosing a camera with this on-board functionality. For example, while functionality such as gamma correction, Bayer interpolation, or flat-field correction is important in many machine-vision applications, functions such as JPEG/MPEG-2 compression are more suited to security surveillance. In these applications, image bandwidth reduction may be more important than preserving the quality of the original image.

Class actions

Image-processing algorithms generally fall into three classes: point operations, neighborhood operations, and more complex recursive operations. Because of their pipelined logic and on-board memory, FPGAs are mainly used to compute point and neighborhood operations. Recursive operations are usually handled by an external CPU or, in the case of smart cameras, by an on-board CPU. However, with the introduction of on-board RISC processors, future generations of cameras will likely perform all three types of operation within an FPGA.

Point-processing functions are mostly used to remap the camera’s original pixel values into a new set of pixel values using the FPGA’s on-board look-up tables. They can alter the contrast, brightness, and gamma of the image so that details of specific interest appear more clearly.
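A point operation of this kind can be sketched in a few lines of Python. The sketch below is only illustrative: it assumes 8-bit pixels and a gamma of 2.2, and the function names `build_gamma_lut` and `apply_lut` are hypothetical rather than taken from any camera's firmware. It shows why look-up tables suit an FPGA: the table is computed once, and each pixel then costs a single table read.

```python
def build_gamma_lut(gamma, bit_depth=8):
    """Return a table mapping every input code to its gamma-corrected code."""
    max_code = (1 << bit_depth) - 1
    return [round(max_code * (code / max_code) ** (1.0 / gamma))
            for code in range(max_code + 1)]


def apply_lut(image, lut):
    """Remap each pixel through the table: one table read per pixel."""
    return [[lut[pixel] for pixel in row] for row in image]


# Assumed example values: a gamma of 2.2 and a tiny 2 x 3 gray-scale image.
lut = build_gamma_lut(gamma=2.2)
image = [[0, 64, 128], [192, 255, 32]]
corrected = apply_lut(image, lut)
```

Changing contrast or brightness instead of gamma only changes how the table is filled; the per-pixel remapping step stays the same.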

Neighborhood operators form a more sophisticated class. Indeed, many of the most powerful image-processing algorithms rely on these operators to perform functions such as spatial averaging, sharpening or blurring of image details, edge detection, and image-contrast enhancement.

In spatial convolution, the intensity of each output pixel is determined as a function of the intensity values of its neighboring pixels. This involves multiplying a group of pixels in the input image with an array of pixels in the convolution mask. By performing this function, the output value of each pixel is a weighted average of each input pixel and its neighboring pixels in the convolution kernel.
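As a rough software illustration of this weighted sum, the following Python sketch applies a square, odd-sized mask to a gray-scale image held as a list of rows. Replicating the border pixels and the helper names `convolve` and `box_kernel` are assumptions made for the example; an FPGA would compute the same sum with line buffers and pipelined multiply-accumulate units.

```python
def convolve(image, kernel):
    """Weighted sum of each pixel's neighborhood; border pixels are replicated.

    The mask is applied without flipping, which gives the same result as
    true convolution for the symmetric masks used here.
    """
    height, width = len(image), len(image[0])
    k = len(kernel) // 2  # mask radius; the mask is assumed square and odd-sized
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for j in range(-k, k + 1):
                for i in range(-k, k + 1):
                    yy = min(max(y + j, 0), height - 1)
                    xx = min(max(x + i, 0), width - 1)
                    acc += image[yy][xx] * kernel[j + k][i + k]
            out[y][x] = acc
    return out


def box_kernel(size):
    """Equal weights that sum to 1: a simple averaging (low-pass) mask."""
    weight = 1.0 / (size * size)
    return [[weight] * size for _ in range(size)]


# A single bright pixel is spread over its 3 x 3 neighborhood.
image = [[0] * 5 for _ in range(5)]
image[2][2] = 90
smoothed = convolve(image, box_kernel(3))
```

Other neighborhood functions such as sharpening or edge detection use the same loop with different mask weights.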

“Since mathematical manipulation of pixel data is the strongest point of an FPGA’s abilities,” says Anthony Pieri of Basler Vision Technologies, “FPGAs are very useful for incorporating finite and infinite impulse response filters in digital cameras. A typical example is a low-pass filter that can be used to ‘smooth’ the pixels within an image. A low-pass filter is in essence a pixel averaging process and is suited to an FPGA’s math implementation.”

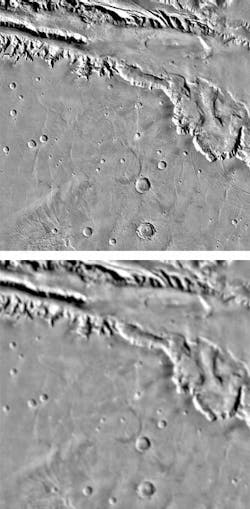

FIGURE 1. FPGAs are useful for incorporating finite impulse response and infinite impulse response filters in digital cameras. Low-pass filters can smooth the pixels within an image with a pixel averaging process. An original image (top) goes through a low-pass filter using a 7 x 7 neighborhood filter (bottom). Edges have softened, and small craters are fading or obliterated.

These types of filters are often used for preprocessing an image before it is passed on to a more powerful processing system (such as a CPU or DSP) for more complex analysis (see Fig. 1 on p. 49). “FPGA-based preprocessing capability has been a staple in Basler cameras used in inspection equipment designed to detect defects at the micron level in products such as CDs, DVDs, or glass substrates,” Pieri says.

Unsharp masking is another example of a neighborhood operator that increases the contrast of images within a scene. To do this, an image is first blurred by replacing each pixel value with the average of the pixel values that surround it. The resultant image is then subtracted from the original image within the FPGA, resulting in an image with small objects and structures enhanced. And, because FPGAs can be rapidly configured to incorporate pipelined multipliers and accumulators, they are ideally suited to performing these neighborhood operations (see Vision Systems Design, November 2005, p. 97).
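The blur-and-subtract steps can be sketched as follows. This is a minimal Python illustration, not any vendor's implementation: it assumes a 3 x 3 averaging blur with replicated borders and 8-bit output, and it adds the subtracted detail back to the original with a hypothetical `amount` weight, which is a common way of turning the difference image into a sharpened one.

```python
def box_blur(image, radius=1):
    """Replace each pixel with the mean of its neighborhood (borders replicated)."""
    height, width = len(image), len(image[0])
    count = (2 * radius + 1) ** 2
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0
            for j in range(-radius, radius + 1):
                for i in range(-radius, radius + 1):
                    yy = min(max(y + j, 0), height - 1)
                    xx = min(max(x + i, 0), width - 1)
                    total += image[yy][xx]
            row.append(total / count)
        out.append(row)
    return out


def unsharp_mask(image, amount=1.0, max_code=255):
    """Subtract the blurred image from the original and add the detail back."""
    blurred = box_blur(image)
    return [
        [min(max(round(p + amount * (p - b)), 0), max_code)
         for p, b in zip(row, blurred_row)]
        for row, blurred_row in zip(image, blurred)
    ]


# A vertical step edge: the dark side dips and the bright side overshoots.
image = [[50, 50, 50, 200, 200, 200] for _ in range(4)]
sharpened = unsharp_mask(image)
print(sharpened[0])  # [50, 50, 0, 250, 200, 200]
```

Flat regions pass through unchanged because the blurred and original values are equal there; only pixels near edges and small structures are altered.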

While FPGA-based point and neighborhood operators are useful in performing many types of image-processing functions, they are generally not suited to more recursive image-processing operations such as statistical image analysis or image understanding. Here, von Neumann processors remain the architecture of choice. Despite this, many camera vendors have incorporated several different architectures within their FPGAs that allow bad-pixel correction, flat-field correction, filtering, color space conversion, centroid and particle detection, and JPEG/MPEG compression to be performed in real time.

Clever cameras

Two of the most important algorithms currently implemented in FPGA-based cameras are bad-pixel interpolation and flat-field correction. Bad pixels can occur when the sensitivity of a pixel is either lower than that of adjacent pixels (in which case a dark pixel will be detected) or higher (resulting in a bright pixel). Alternatively, hot pixels may have sensitivity equal to that of an adjacent pixel but produce a high-intensity output and be completely bright during long integration times (see Vision Systems Design, July 2006, p. 20).

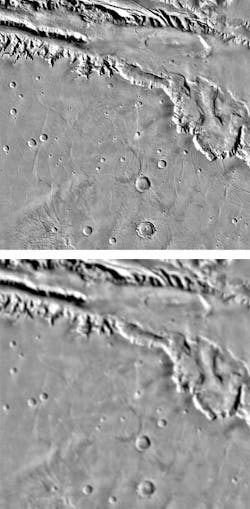

As in many areas of image processing, a number of methods exist to compensate for bad pixels. One approach, for example, would be to replace the output from a dead pixel with a copy of the output from a neighboring pixel that was read previously. More sophisticated approaches involve substituting, for each defective pixel, the average of readouts from surrounding pixels. This approach can be easily accomplished within an FPGA (see Fig. 2 on p. 52).

FIGURE 2. Several methods can compensate for bad pixels in CCD imagers. One is to replace the output from a dead pixel with a copy of the output from a neighboring pixel that was read previously. More sophisticated approaches involve substituting, for each defective pixel, the average of readouts from surrounding pixels. Shown here are software used for detecting defective pixels with an image from the camera containing a cluster of defective pixels (top) and the same image with the defective cluster pixels corrected (bottom).

For its range of Lynx CCD cameras, Imperx uses a camera’s on-board FPGA to compute a gray-scale value based on the values of the two adjacent pixels. To find dead pixels, however, the camera must first be subjected to uniform illumination and a defective-pixel map file created. As images are captured by the CCD array, each pixel is compared with the known value in the table. Should the pixel be known to be dark, bright, or hot, the camera’s FPGA generates a new gray-scale value that is read out with other known good-pixel values as a defective-pixel compensated image.
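A map-driven correction of this general kind might look like the Python sketch below. It is an illustration under stated assumptions, not Imperx's algorithm: the defect map is assumed to be a set of (row, column) coordinates produced by the calibration step, each mapped pixel is replaced by the mean of the nearest good pixels to its left and right in the same row, and the names `correct_row` and `correct_image` are hypothetical.

```python
def correct_row(row, defect_columns):
    """Replace each mapped pixel with the mean of its nearest good row neighbors."""
    bad = set(defect_columns)
    out = list(row)
    for x in sorted(bad):
        # Search outward so that clusters of adjacent defects are skipped over.
        left = next((row[i] for i in range(x - 1, -1, -1) if i not in bad), None)
        right = next((row[i] for i in range(x + 1, len(row)) if i not in bad), None)
        neighbors = [value for value in (left, right) if value is not None]
        if neighbors:  # leave the pixel untouched if the whole row is mapped as bad
            out[x] = round(sum(neighbors) / len(neighbors))
    return out


def correct_image(image, defect_map):
    """Apply the row correction using a set of (row, column) defect coordinates."""
    return [
        correct_row(row, [x for (yy, x) in defect_map if yy == y])
        for y, row in enumerate(image)
    ]


# Assumed example: one bright and one dark defect in a smooth gradient.
image = [[100, 102, 255, 106, 0, 110]]
defect_map = {(0, 2), (0, 4)}
print(correct_image(image, defect_map))  # [[100, 102, 104, 106, 108, 110]]
```

Because the map is fixed after calibration, the per-pixel work reduces to a table check and, for mapped pixels only, one average.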

Pixels within a CCD device also may not return similar values even though the illumination across the field of view is constant. To compensate for this, flat-field correction measures the response of each pixel in the CCD to a known illumination and corrects for any variation in that response. By computing a histogram of the value of the intensity of each pixel across the array, the percentage difference of each pixel compared with its ideal flat-field value can be determined. In Imperx cameras, this flat-field-correction file contains coefficients describing these nonuniformities. By uploading the file to the camera’s nonvolatile memory, the FPGA can compensate for each individual pixel value as images are captured in real time.
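One simple way to derive and apply such coefficients is sketched below in Python. The approach is an assumption chosen for illustration, not the format of any vendor's correction file: each coefficient is the ratio of the mean flat-field level to that pixel's flat-field reading, no dark-frame subtraction is performed, and the flat frame is assumed to contain no zero-valued pixels (dead pixels would be handled by the defect map instead).

```python
def flat_field_gains(flat_frame):
    """Per-pixel gain that would make the uniformly lit frame perfectly flat."""
    pixels = [p for row in flat_frame for p in row]
    mean_level = sum(pixels) / len(pixels)
    return [[mean_level / p for p in row] for row in flat_frame]


def apply_flat_field(image, gains, max_code=255):
    """Multiply each pixel by its stored coefficient and clip to the output range."""
    return [
        [min(round(p * g), max_code) for p, g in zip(row, gain_row)]
        for row, gain_row in zip(image, gains)
    ]


# Assumed calibration frame: one pixel reads 10% low, another 10% high.
flat_frame = [[200, 180], [220, 200]]
gains = flat_field_gains(flat_frame)

# The same nonuniformity at half the light level is removed by the same gains.
raw = [[100, 90], [110, 100]]
print(apply_flat_field(raw, gains))  # [[100, 100], [100, 100]]
```

The calibration is done once; at run time the correction is a single multiply per pixel, which maps naturally onto FPGA logic.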

“Incorporating a 32-bit RISC PowerPC in the FPGA,” says Petko Dinev, president of Imperx, “enables firmware and software upgradeability and features such as programmable frame rates, multiple triggering options, user-programmable LUTs, and defective pixel correction maps (DPMs). The on-board RISC processor allows developers to create LUTs and DPMs and upload them into the camera.”

Rotation and cropping

Neighborhood operations such as image rotation and cropping algorithms can be invaluable, especially in high-speed systems where digitized images may be skewed or when particular regions of interest need to be quickly isolated. If an incoming image such as a printed-circuit board under test is skewed, image-rotation algorithms can be used to align the image correctly. Since an image-rotation algorithm runs inside the FPGA, these functions can run in real time at frame rates. In other applications, the image may need to be analyzed for proper orientation and then displayed. In these cases, image rotation and 2-D scaling can analyze the incoming image and perform an automatic rotation and scaling for the display device in real time.

Kerry Van Iseghem, cofounder of Imaging Solutions Group (ISG), states that his company’s engineers specialize in image algorithm development and implementation. Functions such as rotation, cropping, and centroid detection are very important and can be used in conjunction with other algorithms to speed machine-vision applications. “Centroid detection, for example, is a neighborhood operation used in many sorting algorithms and in conjunction with other machine-vision algorithms to trigger pneumatic systems,” he says.

By properly determining the center of a faulty part, the triggering mechanism will ensure that pneumatic air pumps properly blow defective products from the production line. Centroid detection also finds uses in applications such as laser-based Free Space Optical Communication systems (en.wikipedia.org/wiki/Free-space_optical_communication). If the lasers used in such an application are not continuously aligned, the bandwidth drops to zero and the system fails. Here, CCD cameras can act as the input to servomotors to keep laser beams properly aligned.
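Centroid detection in its simplest form is an intensity-weighted average of pixel coordinates. The Python sketch below assumes a single object brighter than a fixed threshold on a dark background; the function name `centroid` and the threshold parameter are illustrative choices, and a real system would usually segment individual objects first.

```python
def centroid(image, threshold=0):
    """Intensity-weighted center (x, y) of all pixels above the threshold."""
    total = sum_x = sum_y = 0
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if pixel > threshold:
                total += pixel
                sum_x += x * pixel
                sum_y += y * pixel
    if total == 0:
        return None  # nothing above the threshold
    return (sum_x / total, sum_y / total)


# Assumed example: a 2 x 2 bright blob in a 5 x 5 dark frame.
image = [[0] * 5 for _ in range(5)]
for y in (1, 2):
    for x in (2, 3):
        image[y][x] = 100
print(centroid(image))  # (2.5, 1.5)
```

The three running sums can be accumulated as pixels stream out of the sensor, so only one division per frame is needed to obtain the position used for triggering or alignment.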

“Object- and particle-detection algorithms coupled with centroid detection can also track multiple targets within moving images,” says Van Iseghem. “This is important in applications such as detecting and tracking defects on the surface of semiconductor wafers or detection and tracking of military or automotive targets. By implementing the functions with an FPGA, data throughput can be sustained within ISG’s cameras while image-processing functions are being performed.”

Color spaces

Image-interpolation algorithms are especially important in color cameras that use CCDs with a Bayer filter mosaic to produce color images. In these cameras, a color filter array is overlaid on a square grid of photodetectors. Because the human eye is more sensitive to green light, the Bayer pattern uses twice as many green or luminance elements as red or blue chrominance sensors. To obtain an RGB output from such an array, this Bayer pattern must be interpolated to produce a color value for each pixel.

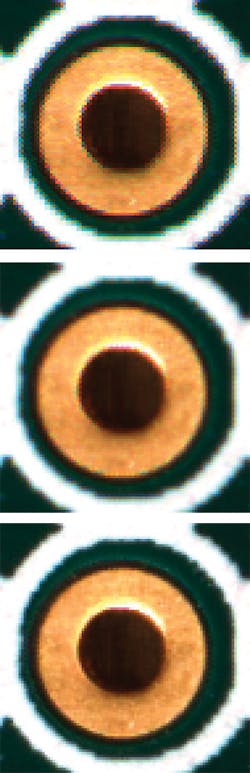

To create a full-color image, interpolation generates color values for each pixel, creating a full three-channel RGB color image. In modern CCD cameras, this interpolation is accomplished within the camera’s FPGA. Here again, a number of different algorithms can be used to accomplish this task (see Fig. 3).

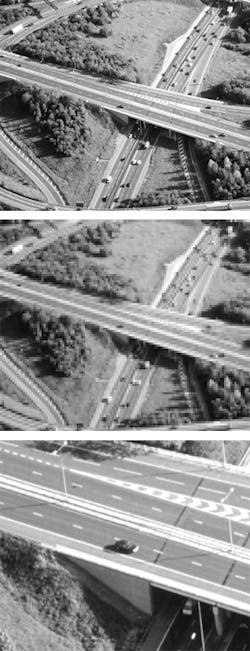

FIGURE 3. To create a full-color image, interpolation generates values for each pixel, creating a full three-channel RGB color image. These include nearest neighbor interpolation (top), bilinear interpolation (middle), and interpolation using a variable number of gradients (bottom).

These are generally classed as either nonadaptive or adaptive. Nonadaptive methods include nearest neighbor, bilinear, and smooth-hue transition. Adaptive methods can use edge sensing or variable gradient algorithms to perform the interpolation function. While nonadaptive methods are generally less complex and faster, adaptive algorithms are more complex, more computationally demanding, and can produce more accurately interpolated images.
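To make the nonadaptive case concrete, here is a minimal Python sketch of bilinear interpolation. It assumes an RGGB filter layout (red at even rows and even columns) and an image of at least 2 x 2 pixels; real sensors may use a different layout, and the function names are hypothetical. Each missing color is taken as the average of the samples of that color in the surrounding 3 x 3 window, which away from the image border is the usual bilinear rule.

```python
def bayer_color(y, x):
    """Filter color over pixel (y, x) for an assumed RGGB layout."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic_bilinear(raw):
    """Return rows of (R, G, B) tuples interpolated from a Bayer mosaic."""
    height, width = len(raw), len(raw[0])
    rgb = []
    for y in range(height):
        out_row = []
        for x in range(width):
            sums = {"R": 0, "G": 0, "B": 0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Gather the samples of each color in the (clipped) 3 x 3 window.
            for yy in range(max(y - 1, 0), min(y + 2, height)):
                for xx in range(max(x - 1, 0), min(x + 2, width)):
                    color = bayer_color(yy, xx)
                    sums[color] += raw[yy][xx]
                    counts[color] += 1
            own = bayer_color(y, x)
            out_row.append(tuple(
                raw[y][x] if color == own else round(sums[color] / counts[color])
                for color in "RGB"
            ))
        rgb.append(out_row)
    return rgb


# Assumed test scene: a uniform color, so every output pixel should match it.
levels = {"R": 200, "G": 100, "B": 50}
raw = [[levels[bayer_color(y, x)] for x in range(4)] for y in range(4)]
rgb = demosaic_bilinear(raw)
```

An adaptive method would replace the plain average with one weighted by local gradients, trading extra logic for sharper edges.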

Many manufacturers provide RGB data output for their cameras by performing Bayer interpolation within the camera. Examples include the PicSight Camera Link camera from Leutron Vision, all ISG LightWise Cameras, and the TMC-1000CL and TMC-6700CL cameras from JAI.

The Leutron Vision PicSight Camera Link camera, for example, has an on-board FPGA for optional preprocessing tasks such as Bayer decoder and color space rotation. “To perform Bayer interpolation, the Bayer decoder module implements a 5 × 5 window algorithm,” says Stephane Francois, executive vice president. “Gradients of color values within the 5 × 5 window are calculated relative to the center pixel, and a threshold is used to locate regions of pixels that are most like the pixel under consideration. The pixels in these regions are then weighted and summed to determine the average difference between the color of the actual measured center pixel value and the missing color. This yields excellent results compared to a simple 3 × 3 bilinear interpolation, resulting in sharper edges and better scene contrast.”

In JAI’s latest GigE-based cameras, this interpolation is taken one step further. “After Bayer conversion, most digital cameras apply some level of edge enhancement to counteract the softening effects from the low-pass optical filter and interpolation of colors during Bayer conversion,” says Steve Kinney, product manager. “This increases aliasing effects in the image and decreases the signal-to-noise ratio.” To overcome this, the embedded NuCORE processor in the camera uses a proprietary algorithm to produce a sharp image without introducing these unwanted effects. Better still, the processor’s on-chip hardware allows pixel-error correction to be performed in hardware.

“While most pixel-error correction algorithms use only nearest-neighbor pixels, the NuCORE processor has a proprietary 2-D error-correction algorithm that uses more than the nearest neighbors to produce a more accurate approximation of the bad pixel,” says Kinney. Perhaps one of the most important features of the processor, however, is its digital zoom feature. Rather than implement a bilinear interpolation, NuCORE engineers have used a bicubic interpolation algorithm that provides more accurate edge detail and less pixelation than bilinear methods (see “GigE cameras leverage consumer ICs for improved performance,” p. 10).

JPEG and MPEG

In applications such as traffic surveillance and security, it may be necessary to transmit digital data from CCD cameras over lower-performance networks such as Ethernet and USB. A number of camera manufacturers have opted to incorporate image compression within their cameras. Most often, these cameras use the Joint Photographic Experts Group (JPEG) standard or a version of it in their designs. While a version of the baseline JPEG standard can be used for lossless compression, most often a lossy compression is used. To perform this compression, a discrete cosine transform (DCT) is used. In a variation of the standard, adopted by the Moving Picture Experts Group (MPEG), only the changes from one frame to another are stored, and these changes are once again encoded using the DCT.

Camera designers have a number of choices. Image compression can be implemented using off-the-shelf ICs designed especially for the task, embedded as cores within FPGAs, or off-loaded to the host computer or to an embedded CPU within the camera. Of course, each of these approaches has its trade-offs. While off-the-shelf codecs may prove effective for low-resolution cameras, multiple devices may be required for large-format imagers. Embedding these functions within FPGAs using off-the-shelf or custom cores provides a flexible but engineering-intensive solution. Finally, off-loading image compression to the camera’s on-board processor results in a slower but more flexible implementation.

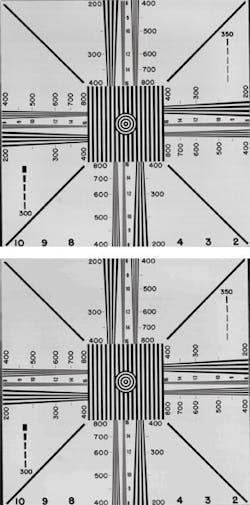

To perform JPEG compression in hardware at the high data rate provided by the Micron Technology sensor in its cameras, FastVision has reduced the size of the DCT core by creating a systolic form of a fast 2-D 8 × 8 DCT, built from two systolic 1-D eight-point DCTs in a Xilinx XC2V2000 (see Vision Systems Design, December 2004, p. 39). To sustain the 660-Mpixel/s data rate, the FPGA runs at 85 MHz (see Fig. 4).
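The reason a 2-D 8 × 8 DCT can be built from two 1-D eight-point DCTs is that the transform is separable: a pass over the rows followed by a pass over the columns gives the full 2-D result. The Python sketch below shows only this decomposition, using the orthonormal DCT-II; it is not a systolic design and says nothing about how the FastVision core is organized. The level shift, quantization, and entropy coding that a JPEG encoder also performs are omitted.

```python
import math

N = 8  # block size used by baseline JPEG


def dct_1d(samples):
    """Orthonormal eight-point DCT-II of one row or column."""
    out = []
    for k in range(N):
        scale = math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)
        out.append(scale * sum(
            s * math.cos((2 * n + 1) * k * math.pi / (2 * N))
            for n, s in enumerate(samples)
        ))
    return out


def dct_2d(block):
    """2-D DCT of an 8 x 8 block as a row pass followed by a column pass."""
    row_pass = [dct_1d(row) for row in block]
    column_pass = [dct_1d(column) for column in zip(*row_pass)]
    # column_pass is indexed [horizontal][vertical]; transpose back to [v][u].
    return [list(row) for row in zip(*column_pass)]


# A flat block has all of its energy in the DC coefficient.
flat_block = [[128] * N for _ in range(N)]
coefficients = dct_2d(flat_block)
```

For the flat block, the DC term is 8 × 128 = 1024 and every other coefficient is zero to within rounding error, which is why smooth image regions compress so well after quantization.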

ISG has also implemented the JPEG algorithm within a gate array in its cameras. The company says it can compress images of greater than 8 Mpixels at greater than 72 frames/s. Furthermore, ISG’s JPEG algorithm has bandwidth monitoring so a particular bandwidth can be set and the algorithm will vary the compression ratio to maintain the highest quality at this given bandwidth. Most JPEG algorithms are limited to a fixed compression ratio that wastes bandwidth. Because each image compresses differently from the next, varying the ratio allows the available bandwidth to be fully used to guarantee the best possible image quality.

Advanced features

“Many advanced features found within digital cameras now offer specialized functionality targeted for specific applications,” says Mike Cyros, president of Allied Vision Technologies. “These include Secure Image Signature, which places a digital time stamp, frame counter, and trigger counter into every image delivered from the camera in real time. Monitoring the trigger-to-frame counter gives an indication of a missed frame that may be very useful in time-critical systems.

“Other functions such as deferred image transport allow multiple cameras to acquire images simultaneously and stage the read-out of images in a controlled manner to the PC. This ensures the camera-to-PC bus bandwidths are not exceeded.” Similarly, a multisequence image feature allows a preprogrammed set of specific image acquisitions, each with individual parameters, to occur using a single trigger. “This is an important way to ease integration with complicated handling systems for the vision integrator,” Cyros says.

Of course, implementing algorithms within an FPGA still remains a challenge for many engineers. After algorithms are written in high-level languages, they must be recoded into hardware description languages such as Verilog before they are simulated and synthesized to FPGA gates. However, this may be about to change. At VISION 2006 (Stuttgart, Germany), Silicon Software showed how its VisualApplets tool was used to program the eneo series of smart cameras from Videor Technical.

“VisualApplets uses a graphical user interface that allows developers to program and modify FPGA processors without knowledge of the hardware or HDL languages,” says Klaus-Henning Noffz, executive manager of Silicon Software, “both easing and accelerating system programming. The freely accessible Xilinx Spartan-3 FPGA within the eneo camera allows application-specific algorithms for image preprocessing to be rapidly implemented to off-load these functions from the host CPU.”

It may not be long before other providers of image-processing software follow suit. When this happens, both camera and frame-grabber vendors will be able to incorporate some of the software functions offered by software companies in hardware-based FPGA implementations. When point, neighborhood, and deterministic algorithms are correctly partitioned between FPGAs and CPUs in this way, a dramatic increase in the speed and throughput of machine-vision applications will result.

RPDZ minimizes processing loads in networked cameras

Resolution Proportional Digital Zoom (RPDZ) is a postprocessing algorithm that maintains a constant data rate between a camera and a host computer while digitally modifying (zooming) the camera’s field of view (FOV). This is done by subsampling pixels in the image in a manner proportional to the digital zoom level.

Some vision applications demand that the FOV for the image being captured be repeatedly adjusted from wide to narrow and back again. In other words, the camera/sensor being used must capture a wide-angle view of a scene, zoom in on a specific object or section of the FOV, then zoom out again to see the big picture.

One way to tackle this challenge is by equipping the camera with a large, variable optical zoom lens. This enables a camera of moderate resolution (and cost) to capture enough detail in both wide-angle and close-up views, but adds the considerable expense and weight of the zoom lens to the vision system. A less expensive and less bulky alternative is to use a high-resolution camera with fixed optics, provided there is sufficient pixel density in the image to identify or analyze small objects by digitally “zooming” into an area of interest.

This challenge, however, becomes more demanding when the bandwidth of the video output must be considered. For example, unmanned aerial vehicles (UAVs) typically must transmit both wide-angle and close-up video information via an RF link of limited bandwidth. Similarly, some machine-vision or traffic applications call for multiple cameras sending both wide-angle and close-up views over a single network.

In these applications, it may be inefficient or even impossible to use a megapixel camera with basic digital zoom capabilities, because the wide-angle view from a 4-Mpixel camera operating at 15 frames/s would generate more than 60 Mbytes of data per second at 8-bit gray scale. This is enough to choke even a high-bandwidth network and well beyond what a typical RF link can bear. The zoom lens option might satisfy the bandwidth requirement, but at an added cost and weight that greatly reduces the attractiveness of the system, especially for a UAV-type application.

Resolution Proportional Digital Zoom eliminates the need for an optical zoom, but avoids the bandwidth issues of megapixel digital zoom. For example, in a 4-Mpixel camera (2048 x 2048) utilizing RPDZ, the full FOV is imaged by capturing every fourth pixel in every fourth row, thus creating a 512 x 512 resolution. A 2X zoom is accomplished by digitally zooming to half of the full FOV and increasing the pixel density to every second pixel in every second line, again yielding a 512 x 512 resolution. At 4X digital zoom, every pixel in the center 512 x 512 section of the image is used.
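The subsampling arithmetic in this example can be checked with a short Python sketch. It is an illustration only, not JAI's implementation: it assumes a square sensor, a zoom window centered on the sensor at every zoom level, and zoom factors that divide the sensor size evenly, and the function names are hypothetical.

```python
def rpdz_window(sensor_size, output_size, zoom):
    """Return (first_pixel, step) for reading a centered window at a zoom level."""
    if output_size * zoom > sensor_size:
        raise ValueError("zoom exceeds the native resolution of the sensor")
    step = sensor_size // (output_size * zoom)  # pixels skipped between samples
    span = sensor_size // zoom                  # width of the zoomed field of view
    first_pixel = (sensor_size - span) // 2     # assumed: window is centered
    return first_pixel, step


def rpdz_read(sensor, output_size, zoom):
    """Subsample a square sensor image to output_size x output_size pixels."""
    first_pixel, step = rpdz_window(len(sensor), output_size, zoom)
    indices = [first_pixel + i * step for i in range(output_size)]
    return [[sensor[y][x] for x in indices] for y in indices]


def bytes_per_second(width, height, frames_per_second, bytes_per_pixel=1):
    """Raw data rate for a given output format."""
    return width * height * frames_per_second * bytes_per_pixel


for zoom in (1, 2, 4):
    print(zoom, rpdz_window(2048, 512, zoom))
# 1 (0, 4)
# 2 (512, 2)
# 4 (768, 1)

print(bytes_per_second(2048, 2048, 15))  # 62914560
print(bytes_per_second(512, 512, 15))    # 3932160
```

At 15 frames/s and 8 bits per pixel, the 512 x 512 output needs about 3.9 Mbytes/s at every zoom level, compared with roughly 63 Mbytes/s for the full 2048 x 2048 frame.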

No matter what the zoom ratio or FOV, RPDZ holds the resolution at a level that fits within the standard TV bandwidth of a real-time RF link and greatly minimizes the processing load in network scenarios. In addition, full-resolution snapshot images can still be captured when needed and transmitted in non-real-time over the lower bandwidth data link, something a low-resolution camera with a zoom lens cannot do.

Steve Kinney, product manager

JAI, San Jose, CA, USA