3-D imaging navigates robots

Interest in 3-D vision systems has dramatically increased recently due to projects such as the DARPA Autonomous Vehicle Research and Development Program known as the Urban Challenge (www.darpa.mil/grandchallenge). Rather than replace manned vehicles with autonomous versions, however, researchers at Vecna Technologies (Cambridge, MA, USA; www.vecna.com) are developing the Battlefield Extraction-Assist Robot (BEAR), which is designed to replace the role of the medic on the battlefield. The humanoid-like robot combines sophisticated mechanical, electromechanical, electronic, and computer-based systems and can operate in a variety of positions, from standing to kneeling. Already the robot can lift and carry a 185-lb human-shaped mannequin across rough terrain.

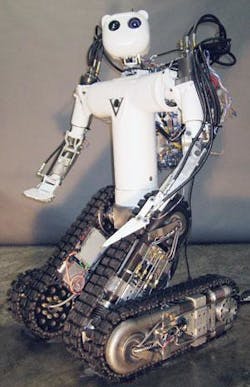

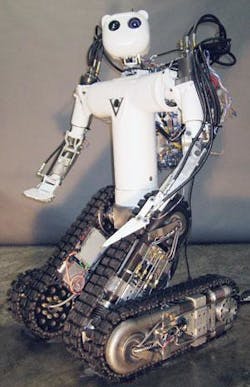

“To provide a variety of modes of locomotion, the lower body employs a hybrid tracked leg design,” says Andreas Hofmann, director of Machine Learning at Vecna (see figure). “Each upper and lower leg link is identical and features a tread driven by an electric motor and a hydraulically driven hinge joint. For the lower leg links, the hinge joint is the knee. For the upper leg links, the hinge joint provides the sagittal plane rotation at the hip, causing the leg to move forward and backward.

Vecna’s Battlefield Extraction-Assist Robot can carry a 185-lb human-shaped mannequin across rough terrain. This year the company is evaluating 3-D imaging systems that will allow the robot to traverse rough terrain autonomously.

“These independently articulated legs improve the robot’s ability to balance in both the side-to-side and the forward-and-backward directions. This allows the robot to perform movements such as bending one knee to balance on inclined planes, or placing one lower link forward of the other to increase the dynamic stability of the robot when climbing and descending inclined surfaces.”

To walk on very difficult terrain, step over obstacles, go up and down stairs, and climb, the robot is placed in an upright position. A novel hybrid locomotion mode is also possible, in which the robot holds an upright, standing posture, as when walking, while the tracks turn to maintain balance. This allows fast traversal of level terrain. The hydraulic joint actuators are controlled through pulse-width modulation of the valves associated with each actuator. A single hydraulic pump connects to the actuator valves through a hydraulic manifold. The system also uses advanced state-estimation techniques to estimate actuator force in support of force- and impedance-control modes.
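As a rough illustration of how an impedance-control mode might feed a pulse-width-modulated valve, the sketch below computes a spring-damper force command and maps it to a valve duty cycle. It is not Vecna's controller: the function names, the gains, the force limit, and the linear force-to-duty mapping are all assumptions made for this example.

```python
def impedance_force(stiffness, damping, x_des, x, v_des, v):
    """Spring-damper (impedance) law: force command from position and velocity error."""
    return stiffness * (x_des - x) + damping * (v_des - v)


def pwm_duty(force_cmd, force_max):
    """Map a force command to a signed duty cycle in [-1, 1].

    Assumes, purely for illustration, that actuator force scales linearly
    with valve duty cycle and saturates at force_max.
    """
    if force_max <= 0:
        raise ValueError("force_max must be positive")
    return max(-1.0, min(1.0, force_cmd / force_max))


def pwm_on_time(duty, period_s):
    """Time per PWM period that the valve is energized; the sign of duty picks direction."""
    return abs(duty) * period_s


if __name__ == "__main__":
    # Hypothetical joint: 0.1 rad short of target and moving away at 0.2 rad/s.
    force = impedance_force(100.0, 10.0, x_des=0.5, x=0.4, v_des=0.0, v=0.2)
    duty = pwm_duty(force, force_max=16.0)
    print(force, duty, pwm_on_time(duty, period_s=0.02))
```

In a real hydraulic system the relationship between duty cycle and force is nonlinear and depends on supply pressure, which is one reason the estimated actuator force would be fed back into the loop.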

The steel torso of the BEAR’s upper body carries two arms, each with six degrees of freedom, and a head that can pan and tilt. Within the head are both a visible-light camera and an infrared camera, allowing the robot to be operated during the day or night. At present, however, the visible and IR system is used strictly to show the operator the scene ahead of the robot.

“Obstacle avoidance is a critical requirement for autonomous robot navigation,” says Neal Checka, AI expert at Vecna. “To navigate unknown environments effectively, robots must create an accurate representation of their operational environment. Stereo-based vision is a popular and effective technique for detecting and characterizing isolated, local obstacles (2–10 m in front of the robot) and for capturing elevation profiles of the scene.”
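For a rectified stereo pair, range follows from disparity through the standard pinhole relation Z = f·B/d, where f is the focal length in pixels, B is the camera baseline, and d is the disparity in pixels. The sketch below applies that relation to a small disparity map. The focal length and baseline are hypothetical values chosen so that an 8-pixel disparity corresponds to the 10-m end of the range Checka describes; they are not parameters of any system under evaluation.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Range in metres from Z = f * B / d; None where disparity is not positive."""
    if disparity_px is None or disparity_px <= 0:
        return None  # no stereo match, or a point at infinity
    return focal_px * baseline_m / disparity_px


def depth_map(disparity_rows, focal_px, baseline_m):
    """Convert a disparity map (list of rows, in pixels) to a depth map in metres."""
    return [
        [disparity_to_depth(d, focal_px, baseline_m) for d in row]
        for row in disparity_rows
    ]


if __name__ == "__main__":
    # Hypothetical camera: 800-pixel focal length, 0.1-m baseline.
    disparities = [[40.0, 16.0, 8.0], [0.0, 20.0, 10.0]]
    for row in depth_map(disparities, focal_px=800.0, baseline_m=0.1):
        print(row)
```

Because depth varies inversely with disparity, a one-pixel matching error matters far more at 10 m than at 2 m, which is why range accuracy is usually quoted at a stated distance.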

This year Checka and his colleagues will evaluate a number of different 3-D systems to provide disparity-map information to the robot’s on-board computer system. “These data will then be processed by a local obstacle-avoidance planner that makes decisions on where the robot should and should not be heading,” says Checka.
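One simple way a local planner could turn range data into a heading decision is to split the field of view into vertical sectors and steer toward the sector whose nearest obstacle is farthest away. The sketch below shows that idea only; it is not the planner Vecna will use. It assumes the disparity map has already been converted to a depth map in metres, and the sector count and 2-m safety margin are hypothetical.

```python
def sector_clearance(depth_rows, n_sectors):
    """Nearest valid range (m) seen in each vertical strip of the depth map.

    Sectors containing no valid range are reported as 0.0, so that unknown
    space is treated as unsafe rather than free.
    """
    width = len(depth_rows[0])
    nearest = [float("inf")] * n_sectors
    for row in depth_rows:
        for col, z in enumerate(row):
            if z is None:
                continue  # no range measurement at this pixel
            sector = min(col * n_sectors // width, n_sectors - 1)
            nearest[sector] = min(nearest[sector], z)
    return [0.0 if c == float("inf") else c for c in nearest]


def choose_heading(depth_rows, n_sectors=3, min_clearance_m=2.0):
    """Index of the sector with the most clearance, or None if none is safe."""
    clearances = sector_clearance(depth_rows, n_sectors)
    best = max(range(n_sectors), key=lambda i: clearances[i])
    if clearances[best] < min_clearance_m:
        return None  # every direction is blocked or unknown: stop
    return best


if __name__ == "__main__":
    depth = [
        [1.0, 1.5, 6.0, 7.0, 3.0, 2.5],
        [1.2, None, 5.0, 8.0, 3.5, 2.2],
    ]
    print(sector_clearance(depth, 3), choose_heading(depth))
```

A practical planner would also account for the robot's width, the ground plane, and elevation profiles, none of which this sketch models.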

Checka has drawn up a number of criteria that such a system will be required to meet. These include both indoor and outdoor use, an operating range of approximately 10 m, and high range accuracy. Checka will be evaluating time-of-flight systems, stereo-based systems, and perhaps structured-light methods.

If you would like to participate in this project by contributing hardware or software for evaluation, contact Neal Checka at Vecna Technologies, (617) 674-8545 or e-mail [email protected]. After his evaluation, Checka will discuss his findings in a future issue of Vision Systems Design.