Designing effective traditional and deep learning-based inspection systems for machine vision applications

By David L. Dechow and Andrew Ng

For decades, machine vision technology has performed automated inspection tasks—including defect detection, flaw analysis, assembly verification, sorting, and counting—in industrial settings. Recent computer vision software advances and processing techniques have further enhanced the capabilities of these imaging systems in new and expanding uses. The imaging system itself remains a critically important vision component, yet its role and execution can be underestimated or misunderstood.

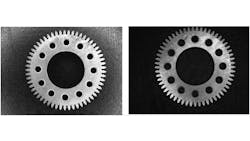

Without a well-designed and properly installed imaging system, software will struggle to reliably detect defects. For example, even though the imaging setup in Figure 1 (left) displays an attractive image of a gear, only the image on the right clearly shows a dent. When best practices are followed, machine vision and deep learning-based imaging systems are capable of effective visual inspection and can improve efficiency, increase throughput, and drive revenue. This article examines best practices for iterative design and provides a roadmap for designing each type of system.

Is Your Imaging System Good Enough?

An imaging system comprises lighting, optics, and cameras, and these components must be carefully specified and implemented to ensure high-quality images of parts. “High quality,” in this context, means images with sufficient contrast to distinguish unacceptable features (such as dents) from those with a normal or expected appearance. The images must also have adequate resolution to show differences between features.

If a human inspector examining an image produced by an inspection system cannot confidently identify a defect, it’s unlikely the software will be able to either. Conversely, in circumstances where a human inspector can identify a defect in an image, there’s no guarantee that an imaging technique will produce reliable and repeatable detection of similar target defects during operation. Situations that indicate an imaging system (and not the software) needs work include:

- An inspector looking at a physical part can reliably judge that something is defective but can’t be sure when looking only at the image captured.

- Two inspectors looking at a physical part generally agree in their assessment, but an inspector looking at the physical part often disagrees with a different inspector looking only at the image.
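The second situation can be checked with numbers rather than impressions. The sketch below computes Cohen's kappa, a standard chance-corrected agreement score, for two pairs of judgments: two inspectors who both handled the physical parts, and one inspector with the part versus another with only the image. The article does not prescribe a particular statistic; the choice of kappa, the function name, and the sample labels are all illustrative assumptions.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two raters judging the same parts."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Fraction of parts on which the two raters gave the same verdict.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)

# Hypothetical verdicts on the same six parts.
part_inspector_1 = ["ok", "ok", "defect", "defect", "ok", "defect"]
part_inspector_2 = ["ok", "ok", "defect", "defect", "ok", "ok"]
image_inspector = ["ok", "ok", "ok", "defect", "ok", "ok"]

print("part vs. part: ", cohens_kappa(part_inspector_1, part_inspector_2))
print("part vs. image:", cohens_kappa(part_inspector_1, image_inspector))
```

If agreement drops noticeably when one inspector sees only the image, the image is hiding information that the physical part reveals, which points to the imaging system rather than the software.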

One common misconception is that if a human inspector can see a feature with the naked eye, an imaging system can be designed to produce an image that successfully captures the same feature. However, a human inspector can view a part from multiple orientations and under different lighting conditions to make a quality judgment, while a static imaging system cannot necessarily capture a similarly large range of orientations and illumination variations. Therefore, it might have trouble highlighting features that a human inspector holding the same object would notice. In cases such as detecting scratches in transparent parts, the imaging system’s challenges might become even more complex.

Over millennia, the human visual system has evolved to be very efficient and accurate at processing image data. Building a software system capable of beating a person at processing images is an incredibly difficult task, as is building a software system that can detect defects that an inspector cannot. Even the most advanced vision system is not magic. Given a sufficiently blurry image, no vision system can reliably make a defect judgment.

Traditional Imaging System Design Checklist

Systems integrators and OEMs must consider several factors when designing an effective imaging system. These factors include:

Contrast: Producing contrast is a central task in machine vision. It depends on the creative use of dedicated illumination and optics selected specifically for the application and for the types of features that must stand out.
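Contrast can be put on a numeric footing when comparing candidate lighting setups. The following sketch uses Michelson contrast between the mean gray level of a defect region and that of the surrounding surface. This metric is one common choice, not one the article specifies, and the pixel values and function names are hypothetical.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence of gray levels."""
    return sum(values) / len(values)

def michelson_contrast(defect_pixels, background_pixels):
    """Contrast in [0, 1] between the mean gray levels of two regions."""
    d, b = mean(defect_pixels), mean(background_pixels)
    if d + b == 0:
        return 0.0  # both regions are completely black
    return abs(d - b) / (d + b)

# Hypothetical 8-bit gray levels sampled from the same dent under two setups.
flat_lighting = michelson_contrast([182, 178, 185], [190, 188, 192])
low_angle_lighting = michelson_contrast([40, 50, 45], [200, 210, 190])

print(f"flat lighting:      {flat_lighting:.3f}")
print(f"low-angle lighting: {low_angle_lighting:.3f}")
```

A higher value means the defect separates more cleanly from the part surface, which gives both rules-based and deep learning software more to work with.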

Spatial resolution: Spatial resolution in an imaging system refers to the number of pixels that span a feature, such as a defect. With too few pixels, it is impossible to reliably detect the feature relative to the part surface. Assuming that an image is well focused, we recommend that the smallest defect the system is expected to detect span at least 5 pixels.
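The 5-pixel guideline reduces to simple arithmetic once the field of view and sensor resolution are known. The sketch below works along one image axis and assumes a well-focused image with no lens blur; the field of view, defect size, and sensor widths are hypothetical example values.

```python
import math

def pixels_across_feature(feature_mm, fov_mm, sensor_pixels):
    """Pixels spanning a feature along one axis of the image."""
    return feature_mm * sensor_pixels / fov_mm

def min_sensor_pixels(feature_mm, fov_mm, min_pixels=5):
    """Smallest sensor dimension that puts min_pixels across the feature."""
    return math.ceil(min_pixels * fov_mm / feature_mm)

# Hypothetical application: 100 mm field of view, 0.25 mm smallest defect.
fov_mm = 100.0
smallest_defect_mm = 0.25

for sensor_width in (1920, 2448):
    px = pixels_across_feature(smallest_defect_mm, fov_mm, sensor_width)
    verdict = "meets" if px >= 5 else "falls short of"
    print(f"{sensor_width} px sensor: {px:.2f} px across defect, {verdict} the guideline")

print("minimum sensor width:", min_sensor_pixels(smallest_defect_mm, fov_mm), "px")
```

When the required sensor dimension is impractical, the usual options are to narrow the field of view or to split the inspection across multiple cameras.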

Image consistency: In an automated process, many factors can cause variation in an image, including part positional variation and variation in the parts themselves. In some scenarios, these variations can cause glare or dropout from the illumination source that obscures features. In other cases, part variation might cause reflections that could be mistaken for flaws or defects. If a machine vision system inspects a transparent automotive headlight for defects, for example, different lighting conditions will produce different amounts of glare. The more the system can capture images from the same angle, with the same lighting and the same background, the easier it is to build software to detect defects.

Exposure: Over- or underexposed images lose fine detail. The appropriate level of exposure should allow the system to capture clear images of defects.
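A quick way to screen for exposure problems is to count how many pixels sit at the extremes of the gray scale, since clipped pixels carry no detail. The sketch below assumes 8-bit grayscale values in a flat list; the thresholds (`low`, `high`, `max_clipped_fraction`) are illustrative assumptions that would need tuning for a real part and background.

```python
def exposure_report(pixels, low=5, high=250, max_clipped_fraction=0.01):
    """Classify an 8-bit grayscale image by its share of clipped pixels.

    Returns (status, under_fraction, over_fraction). Overexposure is
    reported first if both limits are exceeded.
    """
    if not pixels:
        raise ValueError("image has no pixels")
    n = len(pixels)
    under = sum(p <= low for p in pixels) / n   # crushed shadows
    over = sum(p >= high for p in pixels) / n   # blown highlights
    if over > max_clipped_fraction:
        status = "overexposed"
    elif under > max_clipped_fraction:
        status = "underexposed"
    else:
        status = "ok"
    return status, under, over

# Hypothetical image: 10% of pixels saturated by glare, the rest mid-gray.
glare_image = [255] * 10 + [128] * 90
print(exposure_report(glare_image))
```

A check like this works best on the region of interest rather than the whole frame, because a deliberately dark or bright background would otherwise dominate the count.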

The Iterative Process of Designing an Imaging System

Specifying an imaging architecture for a machine vision system is just one critical step in the overall integration process. Successful automation vision system integration requires thorough and competent analysis and planning prior to component design and specification, followed by efficient installation, configuration, and system start-up.

Software must be considered during imaging system design as well. In some cases, an image suited to traditional rules-based machine vision algorithms might differ from one suited to deep learning algorithms. Figure 2 (left) shows a significantly more challenging detection problem than the better-lit image in Figure 2 (middle). The darker backdrop in Figure 2 (right) makes the defect stand out even more. In this case, better image design would make implementation of either inspection system significantly more reliable.

Designing an imaging system is a highly iterative process; the best machine vision solutions evolve and grow more reliable over time. Designing a system around the “perfect” lighting and camera and then building it in advance may not be possible. But with thorough analysis of an application’s needs, along with some knowledge of imaging components and techniques, developers can produce a good initial design.

When developing software systems, an integrator or OEM should collect sample images—even with a smartphone camera in the first few days—to get initial data to validate the feasibility of the software. Whether this proof of concept produces positive or negative results, bear in mind that a separate, production-ready imaging system must be designed. A smartphone camera’s capabilities, such as quickly moving to multiple angles, might not be feasible in a production system. Working with sample parts with representative defects using a static imaging setup may work, but the final imaging system configuration must still be considered.

Testing software with “perfect” images might not truly represent actual capability in the production setting. When designing a production-ready imaging system, a thoughtful design will produce longer-term success. In a typical process, one should:

- Develop a specification for the types of features/objects/defects to be imaged, considering the automation and handling limitations of the part. Considerations might involve fast-moving parts, parts that change in appearance based on viewing orientation, and parts that show glare.

- Collect defective and acceptable part samples.

- Design an initial imaging system that meets the needs of the parts to be detected and the physical constraints and specifications of the production environment.

- Run the sample of parts through the system and check that all defects are imaged clearly in a way that will be suitable for the targeted software solutions.

- Repeat the previous two steps (design and test) until performance is satisfactory.

Similar steps apply when developing a deep learning-based inspection system or adding deep learning capabilities to an existing machine vision system, along with some additional considerations. The next section provides a plan for getting started with deep learning in your imaging system.

Deep Learning Development Checklist

In several scenarios, discrete analysis-based machine vision algorithms may not suffice. These include semiconductor and electronics inspection, steel inspection, welding inspection, and any other inspection task where defects may be hard to find or where the appearance of “good” parts or items varies. Developing a deep learning software solution resembles building a traditional rules-based system in many respects, but it adds several key considerations. These include:

Deploying clean data: “Garbage in, garbage out” is the saying. Data represent the food that nourishes an artificial intelligence (AI) system, so it is imperative that quality data are used to train a deep learning model. Even the most well-conceived model produces subpar results when consuming inaccurate or incomplete information. A quality deep learning software solution should continuously collect data while allowing the data and each software component to be systematically developed, deployed, tracked, maintained, and monitored with tools that help developers access and control AI model evolution. The data should include information on products, defects, labels or tags, data consistency, and associated models.

Defining defects: In many industrial settings, companies that rely on human inspectors often keep a written log of defined part defects. In training a deep learning system, these defects must also be defined up front so that the software can recognize a defective part.

Tagging and labeling: Companies looking to deploy deep learning must accurately label and tag data. When done inconsistently, this step can lead to inaccurate AI models. With clear defect definitions and clear, unambiguous labels on a representative data set, companies can proceed with visual projects with small amounts of data. Internal experts must collaborate to assign, manage, execute, and review tasks to ensure quick and accurate labeling to produce more accurate models.
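Label consistency can be checked before any training begins. The sketch below assumes each image has been labeled independently by several internal experts, keeps the label only where agreement meets a threshold, and queues the rest for review. The function name, image IDs, labels, and the `min_agreement` default are hypothetical.

```python
from collections import Counter

def review_labels(labels_by_image, min_agreement=1.0):
    """Split images into consensus labels and items needing expert review."""
    consensus, needs_review = {}, []
    for image_id, labels in labels_by_image.items():
        if not labels:
            needs_review.append(image_id)  # nobody has labeled this image yet
            continue
        top_label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            consensus[image_id] = top_label
        else:
            needs_review.append(image_id)
    return consensus, needs_review

# Hypothetical labels from three experts per image.
labels_by_image = {
    "img_001": ["scratch", "scratch", "scratch"],
    "img_002": ["scratch", "dent", "scratch"],
    "img_003": ["ok", "ok", "ok"],
}

consensus, needs_review = review_labels(labels_by_image)
print("consensus:", consensus)
print("needs review:", needs_review)
```

Images that land in the review queue often expose an ambiguous defect definition, so resolving them tends to improve the written definitions as well as the data set.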

Iterative improvement: The best AI models should be evaluated against expert human inspectors to prove value before deploying to a production line, especially if the line serves as a test for global deployments. Deep learning software should have tools for evaluating a trained model’s performance, identifying data that can result in losses in model accuracy, and evaluating new data sets to improve and expand existing models to reach success metrics. The software should also include tools to detect and prevent overfitting.
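Comparing a model against expert inspectors usually comes down to a few counts. The sketch below treats the expert verdicts as the reference and reports recall (the share of true defects caught), precision, and the false reject rate on good parts. The label names, sample verdicts, and function name are hypothetical, and a real evaluation would use a held-out set far larger than six parts.

```python
def inspection_metrics(expert, model, defect_label="defect"):
    """Score model verdicts against expert verdicts on the same parts."""
    if len(expert) != len(model):
        raise ValueError("expert and model verdict lists differ in length")
    tp = fp = fn = tn = 0
    for e, m in zip(expert, model):
        e_defect, m_defect = e == defect_label, m == defect_label
        if e_defect and m_defect:
            tp += 1   # defect caught
        elif e_defect:
            fn += 1   # defect missed (an escape)
        elif m_defect:
            fp += 1   # good part rejected
        else:
            tn += 1   # good part accepted
    return {
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "false_reject_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

# Hypothetical verdicts on six held-out parts.
expert = ["defect", "defect", "ok", "ok", "ok", "defect"]
model = ["defect", "ok", "ok", "defect", "ok", "defect"]
print(inspection_metrics(expert, model))
```

Running the same function on the training set and on held-out data gives a rough overfitting signal: a large gap between the two suggests the model has memorized its training images.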

Common Pitfalls and Challenges

Imaging presents many challenges, so systems integrators and OEMs should consider a few of the most fundamental pitfalls and address them up front in system design. These include:

Ambient light: Illumination from sources other than the dedicated lighting components designed for an imaging system is considered ambient and can introduce inconsistency and failure into the system. Sunlight and even overhead illumination must be controlled either through shielding or optical filtering where possible. In one example, a change in color of the uniforms of manufacturing personnel near the inspection system caused additional reflected light that impacted inspection results. In most cases, it is relatively simple to mitigate ambient light in imaging system design.

Mechanical stability: Factory vibration can shake the optics in imaging systems loose, and changes in camera position, lighting components, or even lens settings can cause unreliable imaging.

Varying appearances: The materials, design, and overall appearance of the parts being inspected may change without the vision system owner being aware of it. For example, a manufacturing engineering team might switch to a cheaper metal alloy for a screw. Functionally, the part will work the same, but its appearance may be altered. Such external influences can cause a system’s performance to degrade, sometimes silently. Software that checks for this drift can signal to the operations team when vision system maintenance is needed.
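A drift check of this kind can start very simply. The sketch below compares the mean brightness of recent images against a baseline recorded at commissioning and flags a shift larger than a chosen number of standard errors. Mean brightness is only one possible statistic, and the threshold, sample values, and function name are assumptions; a production monitor would likely track several image statistics as well as the model's own confidence scores.

```python
from statistics import mean, stdev

def brightness_drift(baseline_means, recent_means, z_threshold=3.0):
    """Return (z_score, drifted) for recent images against a baseline.

    Each input is a list of per-image mean gray levels. The baseline
    needs at least two images so its spread can be estimated.
    """
    base_mu = mean(baseline_means)
    base_sigma = stdev(baseline_means)
    recent_mu = mean(recent_means)
    if base_sigma == 0:
        z = 0.0 if recent_mu == base_mu else float("inf")
    else:
        # Standard error of the mean for a batch of this size.
        standard_error = base_sigma / len(recent_means) ** 0.5
        z = abs(recent_mu - base_mu) / standard_error
    return z, z > z_threshold

# Hypothetical per-image mean gray levels.
baseline = [120, 122, 119, 121, 118, 120]   # recorded at commissioning
after_alloy_change = [131, 129, 130, 132]   # shinier screws reflect more light

z, drifted = brightness_drift(baseline, after_alloy_change)
print(f"z = {z:.1f}, drifted = {drifted}")
```

A flag from a check like this does not say what changed, only that the images no longer look like the ones the system was validated on, which is the cue to inspect the parts, lighting, and optics.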

Machine Vision and Deep Learning Evolved

A visual inspection system, whether traditional or deep learning-based, can help industries and companies of all types keep up with customer demand while ensuring product quality, improving productivity, and bringing down costs. Whether you are a company looking to automate more processes or an integrator or OEM facing the specification, design, and installation of your next system, keep in mind that all visual inspection systems require testing, iteration, and continuous improvement.

Following best practices and considering contrast, spatial resolution, image consistency, and exposure will aid in the design of an effective imaging system. On the deep learning side, factoring in the need for clean data, clear defect definitions, consistent tagging and labeling, and iterative model improvement will help produce a high-quality AI visual inspection system. With ongoing improvement, your visual inspection system will continue to add value and allow your business to grow into the future.