Leveraging embedded vision system performance for more than just vision

Brandon Treece

Machine vision has long been used in industrial automation systems to improve production quality and throughput by replacing manual inspection traditionally performed by humans. In applications ranging from pick-and-place and object tracking to metrology and defect detection, visual data increases the performance of the entire system, either by providing simple pass/fail information or by closing control loops.

The use of vision doesn't stop with industrial automation; we've all witnessed the mass incorporation of cameras into daily life, in computers, mobile devices, and especially automobiles. Only a few years ago, backup cameras were introduced in automobiles; now vehicles ship with numerous cameras that give drivers a full 360° view around the vehicle.

But perhaps the biggest technological advance in machine vision has been processing power. With processor performance roughly doubling every two years, and with the continued focus on parallel processing technologies such as multicore CPUs, GPUs, and FPGAs, vision system designers can now apply highly sophisticated algorithms to visual data and create more intelligent systems.

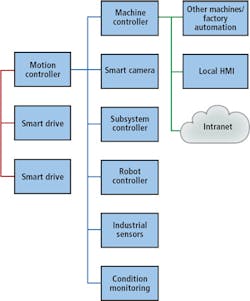

This increase in processing power opens up new opportunities beyond more intelligent or powerful algorithms. Consider the use case of adding vision to a manufacturing machine. These machines are traditionally designed as a network of intelligent subsystems that form a collaborative distributed system, which allows for a modular design (Figure 1).

However, as system performance increases, this hardware-centric approach becomes difficult to sustain, because the subsystems are often connected through a mix of time-critical and non-time-critical protocols. Linking them over these varied protocols creates bottlenecks in latency, determinism, and throughput.

For example, if a designer builds an application on this distributed architecture that requires tight integration between the vision and motion systems, as visual servoing does, major performance challenges can surface that were once hidden by limited processing capability. Furthermore, because each subsystem has its own controller, processing efficiency actually decreases: the total processing capacity spread across the system exceeds what any one subsystem needs, yet spare capacity in one controller cannot be used by another.

Finally, because of this distributed, hardware-centric approach, designers are forced to use disparate design tools for each subsystem: vision-specific software for the vision system, motion-specific software for the motion system, and so on. This is especially challenging for small design teams, where a handful of engineers, or even a single engineer, is responsible for many components of the design.

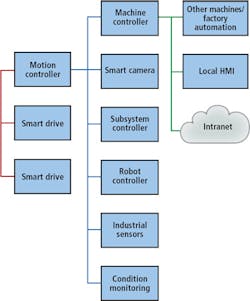

Fortunately, there is a better way to design these systems for advanced machines and equipment, one that simplifies complexity, improves integration, reduces risk, and decreases time to market. What if we shift our thinking away from a hardware-centric view and toward a software-centric design approach (Figure 2)? With programming tools that let a single design tool implement different tasks, designers can reflect the modularity of the mechanical system in their software.

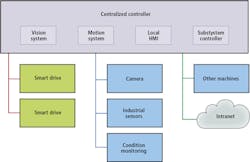

This allows designers to simplify the control system structure by consolidating different automation tasks, including visual inspection, motion control, I/O, and HMIs within a single powerful embedded system (Figure 3). This eliminates the challenges of subsystem communication because now all subsystems are running in the same software stack on a single controller. A high-performance embedded vision system is a great candidate to serve as this centralized controller because of the performance capabilities already being built into these devices.

Let's examine some benefits of this centralized processing architecture. Take, for example, a vision-guided motion application such as flexible feeding, in which a vision system provides guidance to the motion system. Here, parts arrive in random positions and orientations. At the beginning of the task, the vision system takes an image of the part to determine its position and orientation and passes this information to the motion system.

The motion system then uses the coordinates to move the actuator to the part and pick it up. It can also use this information to correct the part's orientation before placing it. With this implementation, designers can eliminate any fixtures previously used to orient and position the parts. This reduces costs and allows the application to adapt to new part designs with only software modifications.
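To make the hand-off concrete, here is a minimal Python sketch of what the vision system might pass to the motion system in flexible feeding. It assumes a hypothetical camera-to-stage calibration expressed as a 2×3 affine matrix (`CALIB`, rotation and scale only, no shear or mirroring); the names `Pose2D`, `pixel_to_stage`, `pick_pose`, and `place_correction` are illustrative and not part of any NI or LabVIEW API.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose2D:
    x: float      # stage position, assumed to be in mm
    y: float
    theta: float  # part orientation in stage coordinates, degrees

# Hypothetical calibration: maps pixel (u, v) to stage (x, y) as
# x = a*u + b*v + tx, y = c*u + d*v + ty. Real values would come from
# a calibration routine; these assume 0.1 mm/pixel and no rotation.
CALIB = ((0.1, 0.0, 50.0),
         (0.0, 0.1, 20.0))

def pixel_to_stage(u, v, calib=CALIB):
    (a, b, tx), (c, d, ty) = calib
    return a * u + b * v + tx, c * u + d * v + ty

def pick_pose(u, v, angle_img_deg, calib=CALIB):
    """Convert a part location found in the image into a pick pose."""
    x, y = pixel_to_stage(u, v, calib)
    # Rotation of the image x-axis relative to the stage x-axis
    # (valid for a rotation-plus-scale calibration without shear).
    cam_rot = math.degrees(math.atan2(calib[1][0], calib[0][0]))
    return Pose2D(x, y, angle_img_deg + cam_rot)

def place_correction(measured_deg, target_deg=0.0):
    """Shortest signed rotation, in (-180, 180], that brings the part to target."""
    delta = (target_deg - measured_deg) % 360.0
    if delta > 180.0:
        delta -= 360.0
    return delta

pose = pick_pose(400, 300, 30.0)
print(pose)                           # part at roughly (90, 50) mm, 30 degrees
print(place_correction(pose.theta))   # rotate by -30 degrees before placing
```

Because the pick pose and the orientation correction are computed in software, a new part design changes the vision step rather than requiring a new mechanical fixture.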

That said, a key advantage of the hardware-centric architecture described above is its scalability, which comes mainly from the Ethernet links between subsystems. Communication across those links requires special attention, however. As noted previously, the Ethernet link is nondeterministic, and its bandwidth is limited.

For most vision-guided motion tasks, where guidance is given only at the beginning of the task, this is acceptable, but in other situations the variation in latency can be a challenge. Moving to a centralized processing architecture for this design offers a number of advantages.
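One way to see why latency variation matters is to estimate how far a part moves during the uncertain portion of the delay. The Python sketch below assumes a part traveling at constant speed (for example, on a conveyor, a case the text does not specifically describe) and assumes a fixed delay can be compensated by aiming ahead, so only the spread between the fastest and slowest messages remains as position error. The function name and all numbers are hypothetical.

```python
def position_uncertainty_mm(speed_mm_s, latencies_s):
    """Position error that latency jitter leaves behind.

    A constant latency L can be compensated by aiming speed * L ahead,
    but the variation between messages cannot, so the residual error is
    speed * (max latency - min latency).
    """
    if not latencies_s:
        raise ValueError("need at least one latency sample")
    jitter_s = max(latencies_s) - min(latencies_s)
    return speed_mm_s * jitter_s

# Hypothetical latency samples for guidance messages over a network link.
samples = [0.010, 0.012, 0.018]   # seconds

# Guidance given once, while the part is at rest: jitter does not matter.
print(position_uncertainty_mm(0.0, samples))    # 0.0
# Part moving at 500 mm/s while guidance arrives: about 4 mm of uncertainty.
print(position_uncertainty_mm(500.0, samples))
```

The stationary case corresponds to guidance given only at the start of the task; the moving case is where nondeterministic links start to cost accuracy.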

First, development complexity is reduced because the vision and motion systems can be developed with the same software, so the designer doesn't need to be familiar with multiple programming languages or environments. Second, the potential performance bottleneck across the Ethernet network is removed, because data now passes only between loops within a single application rather than across a physical link.
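As a rough analogy for loops that exchange data inside one application, the Python sketch below runs a vision loop and a motion loop as two threads that share an in-memory queue instead of an Ethernet connection. The loop bodies are hypothetical stand-ins for image acquisition and axis commands. Python threads are not deterministic, so the sketch only illustrates the in-process data hand-off; timing guarantees would have to come from a real-time runtime.

```python
import queue
import threading

def vision_loop(results, n_frames):
    """Hypothetical vision loop: 'finds' a part in each frame."""
    for frame in range(n_frames):
        x, y = 10.0 * frame, 5.0 * frame   # stand-in for image processing
        results.put((frame, x, y))         # hand off in memory, no network
    results.put(None)                      # sentinel: no more frames

def motion_loop(results, moves):
    """Hypothetical motion loop: consumes each location as it arrives."""
    while True:
        item = results.get()
        if item is None:
            break
        _, x, y = item
        moves.append((x, y))               # stand-in for commanding the axes

def run(n_frames=5):
    # maxsize=1 makes the vision loop wait whenever the motion loop
    # has not yet taken the previous result.
    results = queue.Queue(maxsize=1)
    moves = []
    threads = [
        threading.Thread(target=vision_loop, args=(results, n_frames)),
        threading.Thread(target=motion_loop, args=(results, moves)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return moves

print(run(3))   # [(0.0, 0.0), (10.0, 5.0), (20.0, 10.0)]
```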

Because everything shares the same process, the entire system can run deterministically. This is especially valuable when bringing vision directly into the control loop, as in visual servoing applications. Here, the vision system continuously captures images of the actuator and the target part throughout the move until it is complete, and these images provide feedback on the success of the move. With this feedback, designers can improve the accuracy and precision of their existing automation without upgrading to high-performance motion hardware.
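The feedback idea behind visual servoing can be sketched with a one-dimensional simulation. The Python code below assumes a hypothetical actuator that consistently moves 5% farther than commanded (`scale_error`) and a camera that measures the remaining distance to the target on every cycle; a proportional correction (`gain`) then drives the error below a tolerance. All names and values are illustrative assumptions, and a real system would servo in two or more dimensions.

```python
def move_open_loop(start, target, scale_error=1.05):
    """One blind move: the actuator's error goes uncorrected."""
    return start + scale_error * (target - start)

def visual_servo(start, target, gain=0.5, tol=0.01, max_iters=100,
                 scale_error=1.05):
    """Repeatedly measure the error in the image and command a correction."""
    pos = start
    for i in range(max_iters):
        error = target - pos                 # what the camera sees this cycle
        if abs(error) <= tol:
            return pos, i
        pos += scale_error * gain * error    # actuator executes imperfectly
    return pos, max_iters

blind = move_open_loop(0.0, 10.0)
servo, cycles = visual_servo(0.0, 10.0)
print(f"open loop error: {abs(blind - 10.0):.3f}")
print(f"visual servo error: {abs(servo - 10.0):.3f} after {cycles} cycles")
```

With these values the remaining error shrinks by a factor of 1 − 0.5 × 1.05 = 0.475 each cycle, so the loop converges even though the actuator is miscalibrated, while a single open-loop move with the same actuator misses by 0.5 units.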

This raises the question: what does such a system look like? If designers are going to use a system that meets the computation and control needs of machine vision, along with seamless connectivity to other systems such as motion control, HMIs, and I/O, they need a hardware architecture that provides the performance, intelligence, and control capabilities each of these systems requires.

A good option for this type of system is a heterogeneous processing architecture that combines a processor and an FPGA with I/O. The industry has invested heavily in this type of architecture, as shown by the Xilinx (San Jose, CA, USA; www.xilinx.com) Zynq All-Programmable SoCs (which combine an ARM processor with Xilinx 7-Series FPGA fabric), Intel's multibillion-dollar acquisition of Altera, and other vision systems built on processor-plus-FPGA designs.

For vision systems specifically, an FPGA is especially beneficial because of its inherent parallelism: an algorithm can be split into thousands of operations that run simultaneously and remain completely independent of one another. But this architecture has benefits that go beyond vision; it also offers numerous benefits for motion control systems and I/O.
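The independence that makes vision algorithms a good fit for FPGAs can be illustrated in software. In the Python sketch below, a hypothetical binary threshold computes each output pixel from its own input pixel only, so image rows can be handed to separate workers in any order and reassembled without coordination. Python threads do not give true parallel speedup for this pure-Python loop; the sketch shows the decomposition, which an FPGA would instead implement as many copies of the same logic running at once.

```python
from concurrent.futures import ThreadPoolExecutor

def threshold_row(row, level=128):
    # Each output pixel depends only on the matching input pixel.
    return [255 if p >= level else 0 for p in row]

def threshold_image(image, level=128, workers=4):
    # Rows are independent, so they can be processed concurrently;
    # pool.map returns results in input order, preserving the image layout.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: threshold_row(row, level), image))

image = [
    [12, 200, 130],
    [128, 127, 255],
]
print(threshold_image(image))   # [[0, 255, 255], [255, 0, 255]]
```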

Processors and FPGAs can be used to perform advanced processing, computation, and decision making. Designers can connect to almost any sensor, actuator, or relay on any bus through analog and digital I/O, industrial protocols, or custom protocols. This architecture also addresses other requirements, such as timing and synchronization, as well as business challenges such as productivity. Everyone wants to develop faster, and this architecture eliminates the need for large, specialized design teams.

Unfortunately, although this architecture offers a lot of performance and scalability, the traditional approach to implementing it requires specialized expertise, especially for the FPGA. This introduces significant risk for designers and can make the architecture impractical or even impossible to use. However, with integrated software such as NI LabVIEW, designers can increase productivity and reduce risk by abstracting low-level complexity and integrating all of the technology they need into a single, unified development environment.

It's one thing to discuss theory, and another to see it put into practice. Master Machinery (Tucheng City, Taiwan; www.mmcorp.com.tw) builds semiconductor processing machines (Figure 4). This particular machine uses a combination of machine vision, motion control, and industrial I/O to take chips off a silicon wafer and package them. It is a perfect example of a machine that could use a distributed architecture like the one in Figure 1, where each subsystem is developed separately and then integrated through a network.

Typical machines of this kind in the industry produce approximately 2,000 parts per hour. Master Machinery, however, took a different approach. The company designed this machine with a centralized, software-centric architecture, incorporating its main machine controller, machine vision and motion systems, I/O, and HMI into a single controller, all programmed with LabVIEW. In addition to saving cost by not needing individual subsystems, the company saw the performance benefit of this approach: its machine produces approximately 20,000 parts per hour, 10X that of the competition.
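Expressed as average time per part, and assuming the quoted rates are sustained over a full hour, the difference works out as follows:

$$
t_{\text{part}} = \frac{3600\ \text{s}}{\text{parts per hour}}, \qquad
\frac{3600\ \text{s}}{2{,}000} = 1.8\ \text{s}, \qquad
\frac{3600\ \text{s}}{20{,}000} = 0.18\ \text{s}, \qquad
\frac{1.8\ \text{s}}{0.18\ \text{s}} = 10
$$

In other words, the machine has about 180 ms to handle each part, compared with 1.8 s for a typical machine.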

A key component of Master Machinery's success was the ability to combine numerous subsystems, specifically the machine vision and motion control systems, in a single software stack. This unified approach allowed Master Machinery to simplify not only the way it designs machine vision systems but also how it designs the entire machine.

Machine vision is a complex task that requires significant processing power. As Moore's law continues to add performance to processing elements such as CPUs, GPUs, and FPGAs, designers can use these components to develop highly sophisticated algorithms. They can also use this technology to increase the performance of other components in their design, especially in motion control and I/O.

As all of these subsystems increase in performance, the traditional distributed architecture used to build such machines comes under strain. Consolidating these tasks into a single controller with a single software environment removes bottlenecks from the design process, so designers can focus on their innovations rather than worrying about the implementation.

Brandon Treece, Senior Product Marketing Manager, National Instruments, Austin, TX, USA (www.ni.com)