July/August 2017 snapshots: Google Lens, Facebook's deep learning, thermal imaging, computer vision

Google Lens leverages computer vision and artificial intelligence to understand images

At Google's (Mountain View, CA, USA; www.google.com) I/O Developer Conference, held May 17-19, CEO Sundar Pichai announced a new technology called "Google Lens" that uses computer vision and artificial intelligence to enable a smartphone to understand what the user is looking at and help act on that information.

"So for example if you run into something and you want to know what it is, say a flower, you can invoke Google Lens from your Assistant, point your phone at it and we can tell you what flower it is," said Pichai at the conference. "Or, if you've ever been at a friend's place and you've crawled under a desk just to get the username and password from a Wi-Fi router, you can point your phone at it and we can automatically do the hard work for you."

He added, "Or, if you're walking in a street downtown and you see a set of restaurants across the way, you can point your phone, because we know where you are, and we have our Knowledge Graph, and we know what you're looking at, we can give you the right information in a meaningful way."

Pichai noted that Google was built because they "started understanding text and web pages, so the fact that computers can understand images and video has profound implications for our core mission."

Google Lens will first ship in Google Assistant and Photos, and will come to other products in the future. Scott Huffman, Vice President, Assistant, also provided some details on how Google Lens can be used.

"So, last time I traveled to Osaka, I came across a line of people waiting to try something that smelled amazing. Now, I don't speak Japanese so I couldn't read the sign out front. But Google Translate knows over a hundred languages, and my Assistant will help with visual translation. I just tap the Google Lens icon, point the camera and my Assistant can instantly translate them into English."

Lens will reportedly roll out this year. As with Google Glass, which failed to take off, seemingly due to privacy concerns, there are likely many people who are excited about Google Lens. On the other side, though, are those who may find it too intrusive as technology creeps further and further into people's everyday lives. It will be one of numerous interesting storylines to follow over the next several months with regard to the proliferation of artificial intelligence.

Computer vision enables new whiteboard sharing software for video conferencing

Panoramic imaging company Altia Systems (Cupertino, CA, USA; www.altiasystems.com) has partnered with Intel (Santa Clara, CA, USA; www.intel.com) to launch PanaCast Whiteboard, computer vision software that detects content on an existing whiteboard and displays it as an individual screen within a video conference.

PanaCast Whiteboard is designed to be a computer vision capability for the PanaCast 2 camera system from Altia Systems. PanaCast 2 is a panoramic camera system with three separate imagers and an adjustable field of view through a USB video class (UVC) PTZ (pan, tilt, zoom) command set. The camera has USB 2.0 and USB 3.0 interfaces and features a 180° wide by 54° tall field of view, enabling it to see an entire meeting space without the use of electromechanical PTZ cameras.

To capture images from all three imagers and provide panoramic video, the PanaCast features a patent-pending Dynamic Stitching technology, which analyzes the regions where adjacent images overlap. Once the geometric correction algorithm has finished correcting sharp angles, the Dynamic Stitching algorithm creates an energy cost function of the entire overlap region and comes up with stitching paths of least energy, which typically lie in the background, according to Altia Systems.
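Altia Systems has not published the details of Dynamic Stitching, so the following is only a minimal sketch of the general idea of a least-energy stitching path. It assumes the overlap region is available as two aligned grayscale views, uses the per-pixel disagreement between them as a stand-in energy function, and finds a top-to-bottom seam by dynamic programming. The function names and the energy definition are illustrative assumptions, not the company's method, and the geometric correction step is not modeled.

```python
def least_energy_seam(left, right):
    """Return one column index per row tracing a low-energy vertical seam.

    left and right are equally sized 2-D lists of grayscale values covering
    the same overlap region as seen by two neighboring imagers.
    """
    rows, cols = len(left), len(left[0])
    # Assumed energy: absolute disagreement between the two views per pixel.
    energy = [[abs(left[r][c] - right[r][c]) for c in range(cols)]
              for r in range(rows)]

    # cost[r][c] = cheapest total energy of any seam ending at (r, c),
    # where a seam may move at most one column between consecutive rows.
    cost = [energy[0][:]]
    for r in range(1, rows):
        prev = cost[-1]
        row = []
        for c in range(cols):
            lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
            row.append(energy[r][c] + min(prev[lo:hi + 1]))
        cost.append(row)

    # Backtrack from the cheapest cell in the last row.
    c = min(range(cols), key=lambda j: cost[-1][j])
    seam = [c]
    for r in range(rows - 1, 0, -1):
        lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
        c = min(range(lo, hi + 1), key=lambda j: cost[r - 1][j])
        seam.append(c)
    seam.reverse()
    return seam


def stitch_overlap(left, right, seam):
    """Take pixels left of the seam from `left`, the rest from `right`."""
    return [l_row[:c] + r_row[c:] for l_row, r_row, c in zip(left, right, seam)]


# Toy 3 x 4 overlap region; the two views agree along a winding path.
left = [[10, 10, 50, 90], [10, 10, 50, 90], [10, 10, 50, 90]]
right = [[30, 10, 50, 10], [30, 10, 80, 10], [30, 50, 50, 10]]
seam = least_energy_seam(left, right)
print(seam)                               # [1, 1, 2]
print(stitch_overlap(left, right, seam))
```

Because the seam follows pixels where the two views already agree, the join tends to fall in static background rather than across a person or object, which is consistent with the behavior Altia Systems describes.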

The computation is done in real time on a frame-by-frame basis to create the panoramic video. Each imager contributes a 1600 x 1200 pixel video frame, and joining the frames from the three imagers creates a 4800 x 1200 image. Each imager in the PanaCast 2 camera is a 3 MPixel sensor that can reach up to 30 fps in YUV422 and MJPEG video formats.
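The frame arithmetic can be checked in a few lines. The sketch below assumes a simple side-by-side join with no overlap removed and an uncompressed YUV422 stream at 2 bytes per pixel; whether the camera actually streams the full panorama uncompressed is not stated in the article.

```python
IMAGERS = 3
FRAME_W, FRAME_H = 1600, 1200      # per-imager frame size from the article
FPS = 30                           # maximum frame rate from the article
BYTES_PER_PIXEL_YUV422 = 2         # YUV 4:2:2 averages 16 bits per pixel

pano_w = IMAGERS * FRAME_W         # side-by-side join, overlap ignored
pano_pixels = pano_w * FRAME_H
bytes_per_frame = pano_pixels * BYTES_PER_PIXEL_YUV422
bytes_per_second = bytes_per_frame * FPS

print(pano_w, FRAME_H)             # 4800 1200
print(pano_pixels)                 # 5760000
print(bytes_per_second / 1e6)      # 345.6 (MB/s, uncompressed)
```

Under these assumptions the raw stream would be far above the 60 MB/s theoretical ceiling of USB 2.0's 480 Mbit/s signaling rate, which may be one reason a compressed MJPEG format is offered alongside YUV422.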

PanaCast Whiteboard is the result of computer vision algorithms initially developed by Intel and Altia Systems. The software reduces reflections, shadows, and glare to produce a crisp image, and is compatible with a number of video conferencing platforms, including Google Hangouts, Cisco WebEx, Microsoft Skype, and Zoom, per Altia Systems.

"Intel and Altia Systems have collaborated to deliver innovative computer vision algorithms that run on the Intel Core i-series processor family to detect whiteboards, rectify and enhance images to produce high-quality, orthogonal whiteboard views," said Praveen Vishakantaiah, VP and GM of Intel Client Computing Group R&D division.

Altia Systems President and CEO Aurangzeb Khan also commented: "The standard whiteboard is the simplest collaboration tool available for meetings and classroom settings," he said. "However, the rise of remote workers and education programs creates an urgent need for technology to keep the video collaboration process running smoothly. With PanaCast Whiteboard, people can brainstorm, host planning sessions, teach online courses and more from anywhere in the world, all while engaging your team and keeping the communication simple and natural."

PanaCast Whiteboard is compatible with PCs running quad-core Intel Core i7 processors and above, and can be viewed by participants on any video collaboration app via laptop, tablet, or phone.

"My clients are sometimes more interested in my whiteboard sketches than seeing my face," said Dean Heckler, President of PanaCast Whiteboard beta user Heckler Design. "When using PanaCast Vision, our whiteboard becomes an active meeting participant, creating another powerful communication tool for Heckler Design."

Stuart Evans, Distinguished Service Professor at Carnegie Mellon University, said: "With PanaCast, students feel like it's one classroom. They can glance at the panoramic video and see their classmates, no matter how far away they really are."

Facebook develops new technique for accelerating deep learning for computer vision

In a collaboration between the Facebook (Menlo Park, CA, USA; www.facebook.com) Artificial Intelligence Research and Applied Machine Learning groups, researchers have released a paper detailing a new way to train computer vision models that significantly shortens the time needed to train an image classification model.

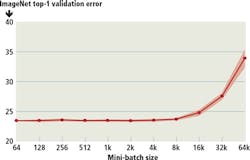

Facebook explains in the paper that deep learning techniques thrive with large neural networks and datasets, but these tend to involve longer training times that may impede research and development progress. Using distributed synchronous stochastic gradient descent (SGD) algorithms offered a potential solution to this problem by dividing SGD minibatches over a pool of parallel workers, but to make this method efficient, the per-worker workload must be large, which implies nontrivial growth in the SGD minibatch size, according to Facebook.
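As a rough illustration of the idea, and not Facebook's Caffe2 implementation, the sketch below splits one minibatch evenly across several simulated workers, has each compute a gradient on its shard, and averages the results before a single shared update. The one-parameter least-squares model, the function names, and the toy data are assumptions chosen to keep the example small.

```python
def gradient(w, batch):
    """Mean gradient of squared error (w*x - y)**2 over a batch of (x, y)."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def sync_sgd_step(w, minibatch, num_workers, lr):
    """One synchronous SGD step with the minibatch split across workers."""
    shard = len(minibatch) // num_workers
    assert shard * num_workers == len(minibatch), "minibatch must split evenly"
    # Each worker computes a gradient on its own shard (done serially here).
    worker_grads = [gradient(w, minibatch[i * shard:(i + 1) * shard])
                    for i in range(num_workers)]
    # Stand-in for an allreduce: average the per-worker gradients so every
    # worker applies the same update and the model copies stay identical.
    avg_grad = sum(worker_grads) / num_workers
    return w - lr * avg_grad


data = [(float(i), 3.0 * i) for i in range(1, 9)]    # toy data, y = 3x
w_single = sync_sgd_step(0.0, data, num_workers=1, lr=0.01)
w_sync = sync_sgd_step(0.0, data, num_workers=4, lr=0.01)
print(abs(w_sync - w_single) < 1e-12)                # True
```

With equal-sized shards, the averaged gradient matches the full-minibatch gradient, so adding workers only pays off if each one still has enough work to do. Dividing the paper's minibatch of 8192 over 256 GPUs, for example, leaves 32 images per GPU.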

In the paper, the researchers explained that on the ImageNet dataset, large minibatches cause optimization difficulties, but when these are addressed, the trained networks exhibit good generalization. Training on the ImageNet-1k dataset of more than 1.2 million images previously took multiple days, but Facebook has found a way to reduce this time to one hour while maintaining classification accuracy.

"They can say 'OK, let's start my day, start one of my training runs, have a cup of coffee, figure out how it did,'" Pieter Noordhuis, a software engineer on Facebook's Applied Machine Learning team, told VentureBeat (San Francisco, CA, USA; www.venturebeat.com). "And using the performance that [they] get out of that, form a new hypothesis, run a new experiment, and do that until the day ends. And using that, [they] can probably do six sequenced experiments in a day, whereas otherwise that would set them back a week."

Specifically, the researchers noted that with a large minibatch size of 8192, using 256 GPUs, they trained ResNet-50 in one hour while maintaining the same level of accuracy as a baseline with a minibatch size of 256. This was accomplished by using a linear scaling rule that adjusts the learning rate as a function of minibatch size, and by developing a new warmup scheme that overcomes optimization challenges early in training by gradually ramping up the learning rate from a small to a large value, which helps maintain accuracy.
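The two learning-rate ideas can be written down compactly. The sketch below is a minimal illustration rather than the paper's code: the base learning rate of 0.1 and the 100-step warmup length are placeholder assumptions, and the function names are invented for this example. Only the minibatch sizes of 256 and 8192 come from the article.

```python
def scaled_lr(base_lr, base_batch, batch):
    """Linear scaling rule: grow the learning rate in proportion to batch size."""
    return base_lr * batch / base_batch


def warmup_lr(step, warmup_steps, start_lr, target_lr):
    """Ramp linearly from start_lr to target_lr, then hold target_lr."""
    if step >= warmup_steps:
        return target_lr
    return start_lr + (target_lr - start_lr) * step / warmup_steps


BASE_LR = 0.1          # assumed learning rate for the 256-image baseline
WARMUP_STEPS = 100     # assumed warmup length, for illustration only

target = scaled_lr(BASE_LR, base_batch=256, batch=8192)   # 32x the base rate
for step in (0, 50, 100, 500):
    print(step, round(warmup_lr(step, WARMUP_STEPS, BASE_LR, target), 3))
```

Going from 256 to 8192 images multiplies the minibatch by 32, so the rule multiplies the learning rate by 32 as well. Starting training at the small baseline rate and climbing to the scaled rate avoids applying that large rate while the network's weights are still changing rapidly.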

With these techniques, noted the paper, a Caffe2-based system trained ResNet-50 with a minibatch size of 8192 on 256 GPUs in one hour, while matching small minibatch accuracy.

"Using commodity hardware, our implementation achieves 90% scaling efficiency when moving from 8 to 256 GPUs," notes the paper's abstract. "This system enables us to train visual recognition models on internet-scale data with high efficiency."

The team achieved these results with the aforementioned Caffe2, along with the Gloo library for collective communication, and Big Basin, which is Facebook's next-generation GPU server.
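The 90% scaling efficiency quoted from the abstract can be read as measured speedup divided by ideal linear speedup. The short sketch below shows that calculation; the throughput figures are hypothetical values picked to produce 90% and are not measurements from the paper.

```python
def scaling_efficiency(base_gpus, base_throughput, gpus, throughput):
    """Measured speedup divided by the ideal (linear) speedup."""
    ideal_speedup = gpus / base_gpus
    actual_speedup = throughput / base_throughput
    return actual_speedup / ideal_speedup


# Hypothetical throughputs in images per second, for illustration only.
eff = scaling_efficiency(base_gpus=8, base_throughput=1000.0,
                         gpus=256, throughput=28800.0)
print(round(eff, 2))   # 0.9
```

Moving from 8 to 256 GPUs is a 32x increase in hardware, so 90% efficiency corresponds to roughly a 28.8x increase in training throughput.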

In summary, according to Facebook's Lauren Rugani, the paper demonstrates how creative infrastructure design can contribute to more efficient deep learning at scale.

"With these findings, machine learning researchers will be able to experiment, test hypotheses, and drive the evolution of a range of dependent technologies - everything from fun face filters to 360 video to augmented reality," wrote Rugani.

Thermal imaging cameras help researchers understand hummingbird energy usage

At the Loyola Marymount University Center for Urban Resilience (Los Angeles, CA, USA; cures.lmu.edu), researchers are using thermal imaging cameras to better understand how hummingbirds can use so much energy with so little rest.

By understanding the physiological mechanisms hummingbirds use to cope with extreme energy requirements and limitations, researchers may be able to gain insight into broader, human medical applications such as the necessity to reduce oxygen and food consumption during long-term space travel, according to FLIR (Wilsonville, OR, USA; www.flir.com).

Hummingbirds need to maintain a high metabolism since they use energy at such extreme rates. Because of their small size, the birds consume the caloric equivalent of 300 hamburgers in nectar each day. Unless a female hummingbird is nesting, a nightly temporary hibernation known as torpor is vital to survival. Torpor involves a drastic reduction of body temperature, and nesting hummingbirds are unable to enter this state, as they must use their body heat to look after their eggs.

With body temperature being the primary indicator of torpidity, the researchers decided to use thermal imaging cameras to monitor the nests without disturbing them. The research team used a Vue Pro R thermal drone camera, which enabled the researchers to place the cameras very close to the birds in order to capture frequent, accurate, and non-contact temperature readings. Vue Pro R cameras feature uncooled VOx microbolometer thermal imagers in either 640 x 512 or 336 x 256 formats, and a 7.5 - 13.5 μm spectral range.

In addition to the Vue Pro R, the team placed a FLIR C2 handheld camera near the nest for additional monitoring. The C2 camera features an 80 x 60 uncooled microbolometer thermal imager, as well as a 640 x 480 visible color camera, along with FLIR's MSX (multispectral dynamic imaging) image processing enhancement, which enhances thermal images with visible light detail for extra perspective. The C2 features a spectral range of 7.5 - 14 μm.

Using these cameras, LMU researchers are now monitoring 26 nests daily to measure the energy use of female hummingbirds by thermally monitoring each nesting bird's state of torpidity.