Integration of vision in embedded systems

Embedded vision architectures enable smaller, more efficient vision solutions optimized for price and performance.

Dr. Thomas Rademacher

Embedded computer systems usually come into play when space is limited and power consumption has to be low. Typical examples are mobile devices, ranging from mobile test equipment in factory settings to dental scanners. Embedded vision is also a great solution for robotics, especially when a camera has to be integrated into the robot's arm.

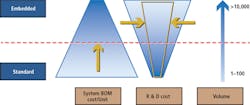

Furthermore, the embedded approach can reduce system costs compared with the classic PC-based setup. Let's say you spend $1,700 on a system with a classic camera, a lens, a cable and a PC. An embedded system with the same throughput would cost $300, because each piece of hardware is cheaper (Figure 1).

Whether for smart industrial wearables, automated parking systems or people-counting applications, several embedded system architectures are available for integrating cameras into an embedded vision system.

Camera integration into the embedded world

In the machine vision world, a typical camera integration uses a GigE or USB interface, which is more or less a plug-and-play solution connected to a PC (or IPC). Together with the manufacturer's software development kit (SDK), accessing the camera is easy, and this principle can be transferred to an embedded system (Figure 2).

When a single-board computer (SBC) is used, this basic integration principle remains the same (Figure 3). Low-cost and easy to obtain, SBCs contain all the parts of a computer on a single circuit board: SoC, RAM, storage slots and I/O ports (USB 3.0, GigE, etc.).

Popular single-board computers like Raspberry Pi or Odroid have compatible interfaces (USB/Ethernet). There are also industry-proven single-board computers available from companies such as Toradex (Horw, Switzerland; www.toradex.com) or Advantech (Taipei, Taiwan; www.advantech.com) that provide these standard interfaces.

The major difference lies in the processor types these single-board computers are equipped with. Although SBCs with x86-based processors are available, most use ARM-based processors because they typically consume less power.

More and more camera manufacturers also provide their software development kit (SDK) in a version that runs on ARM platforms, so that users can integrate a camera in the same familiar way as on a Windows PC.

In the best case, the SDK provides the same functionality and APIs (application programming interfaces) on both platforms, so that even parts of the application code can be reused. With such a setup, therefore, only a small additional integration effort is required compared with a standard PC-based vision system.
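
The code-reuse idea can be sketched as follows, under clearly stated assumptions: the application talks only to a small camera interface, and a platform-specific backend sits behind it. `Frame`, `CameraBackend`, `SimulatedCamera`, `cpu_family` and `mean_brightness` are hypothetical names invented for this illustration, not part of any vendor SDK, and the simulated backend only exists so that the sketch runs without hardware. In a real system, the backend would wrap the manufacturer's SDK, which is assumed here to behave the same on x86 and ARM.

```python
import platform
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Frame:
    width: int
    height: int
    pixels: bytes  # 8-bit mono, row-major


class CameraBackend(Protocol):
    """Hypothetical interface that the x86 and ARM backends are both assumed to offer."""

    def grab(self) -> Frame: ...


class SimulatedCamera:
    """Stand-in backend so the sketch runs without hardware; a real one would wrap the vendor SDK."""

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        self._count = 0

    def grab(self) -> Frame:
        # Horizontal gradient whose offset grows by one with every grabbed frame.
        self._count += 1
        data = bytes((x + self._count) % 256 for _ in range(self.height) for x in range(self.width))
        return Frame(self.width, self.height, data)


def cpu_family(machine: str) -> str:
    """Map a platform.machine() string to a coarse CPU family."""
    m = machine.lower()
    if m.startswith(("arm", "aarch64")):
        return "arm"
    if m in ("x86_64", "amd64", "i386", "i686", "x86"):
        return "x86"
    return "unknown"


def mean_brightness(camera: CameraBackend, frames: int) -> List[float]:
    """Application logic: identical on every platform because it only uses CameraBackend."""
    results = []
    for _ in range(frames):
        frame = camera.grab()
        results.append(sum(frame.pixels) / len(frame.pixels))
    return results


if __name__ == "__main__":
    print("CPU family:", cpu_family(platform.machine()))
    print(mean_brightness(SimulatedCamera(4, 2), frames=3))
```

Only the backend would change between a Windows PC and an ARM board; `mean_brightness` stands for the reusable part of the application. `platform.machine()` is the standard-library call that reports strings such as `x86_64`, `AMD64` or `aarch64`.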

Specialized embedded systems

For certain applications, embedded systems can be specialized even further when the processing hardware has to be stripped down even more. That is why many systems are based on a system on module (SoM). These very compact board computer modules contain only a processor (to be precise, typically a system on chip, SoC), microcontrollers, memory and other essential components.

Such a SoM needs to be mounted onto a carrier board, which, for example, carries the plugs needed for certain interfaces. With such a relatively cheap carrier board, the system can easily be customized for a specific application and its system requirements, yet, because the SoM is an off-the-shelf product, the whole setup can be kept low-priced.

Generally, this setup can also be equipped with a standard interface connector (e.g., USB). In this case, the plug-and-play benefits apply in the same way as for the single-board computer or even the PC-based machine vision system. However, this often conflicts with the idea of a very specific and lean system setup. In addition, because of space, weight or energy consumption requirements, a USB interface might not be appropriate, and a more direct camera-to-processor connection is of interest instead.

Furthermore, many embedded vision systems are based on (or include) an FPGA (field-programmable gate array) module. These devices are ideal for the computational work needed, for example, in stereo vision products or face detection applications. All these aspects are reasons why a direct camera-to-FPGA or camera-to-SoC connection might be required.
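
To illustrate the kind of computation meant here, the sketch below implements sum-of-absolute-differences (SAD) block matching along a single rectified scanline, a common textbook approach to computing stereo disparity. It is a generic example chosen for illustration, not the method of any particular product, and it is written in Python for readability rather than as FPGA logic. The point is its structure: every pixel runs the same small, fixed set of subtractions and comparisons, a regular workload that suits the parallel hardware pipelines of an FPGA.

```python
from typing import List, Sequence


def sad(a: Sequence[int], b: Sequence[int]) -> int:
    """Sum of absolute differences between two equally long windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def scanline_disparity(left: Sequence[int], right: Sequence[int],
                       max_disp: int, radius: int = 1) -> List[int]:
    """Per-pixel disparity for one rectified scanline using SAD block matching.

    For each pixel x in the left line, a window centred on x is compared with
    windows centred on x - d in the right line (d = 0..max_disp); the d with the
    smallest SAD wins. Pixels too close to the border keep disparity 0.
    """
    n = len(left)
    disparities = [0] * n
    for x in range(radius, n - radius):
        window = left[x - radius : x + radius + 1]
        best_d, best_cost = 0, None
        for d in range(max_disp + 1):
            xr = x - d
            if xr - radius < 0:
                break  # candidate window would leave the right image
            cost = sad(window, right[xr - radius : xr + radius + 1])
            if best_cost is None or cost < best_cost:
                best_d, best_cost = d, cost
        disparities[x] = best_d
    return disparities
```

On an FPGA, the inner loop over candidate disparities could be unrolled so that all candidates are evaluated in parallel for each incoming pixel.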

Special image data transfer

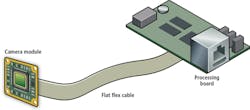

A direct camera-to-SoC connection for image data transfer can be achieved with an LVDS-based connection or via the MIPI CSI2 standard. Neither method is clearly standardized on the hardware side: no connectors are specified, not even the number of lanes within the cable. As a result, to connect a specific camera, a matching connector must usually be designed into the carrier board; it is not available in standardized form on an off-the-shelf single-board computer.
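
Because the lane count is not fixed by a connector standard, it has to be derived from the bandwidth the camera needs. A rough back-of-the-envelope estimate is sketched below. The overhead factor of 1.2 for blanking and framing and the per-lane rates used in the example are assumptions for illustration, not values taken from the LVDS or CSI2 specifications; a real design must use the figures of the specific sensor and SoC.

```python
import math


def required_lanes(width: int, height: int, fps: float, bits_per_pixel: int,
                   lane_rate_gbps: float, overhead: float = 1.2) -> int:
    """Rough estimate of the number of serial lanes a camera link needs.

    payload  = width * height * fps * bits_per_pixel   (bit/s)
    required = payload * overhead                      (assumed margin for blanking/framing)
    lanes    = ceil(required / usable rate per lane)
    """
    payload_bps = width * height * fps * bits_per_pixel
    required_bps = payload_bps * overhead
    return math.ceil(required_bps / (lane_rate_gbps * 1e9))


if __name__ == "__main__":
    # 1920 x 1080 at 60 fps, 10 bit/pixel: about 1.24 Gbit/s of pixel data before overhead.
    for rate in (0.5, 1.0, 1.5):
        print(f"{rate} Gbit/s per lane -> {required_lanes(1920, 1080, 60, 10, rate)} lane(s)")
```

The same camera may therefore need a different number of lanes depending on the receiver it is paired with, which is one reason the connector usually has to be designed for the specific camera.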

CSI2, a standard originating in the mobile device industry, describes both signal transmission and a software protocol. Some SoCs have CSI interfaces, and drivers are available for selected camera modules and dedicated SoCs. However, these drivers do not work in a unified manner, and there are no generic drivers. As a result, the driver may need to be modified individually, and the data connection to the driver can require further adaptation on the application software side before image data can be collected. CSI2 is therefore not a ready-to-use solution that works immediately after installation.
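
What such adaptation on the software side can involve is illustrated below with two small pieces of the CSI2 (MIPI CSI-2) protocol layer: decoding the 4-byte packet header of CSI-2 v1.x (a data identifier byte holding a 2-bit virtual channel and a 6-bit data type, a 16-bit word count sent least significant byte first, and an ECC byte) and unpacking RAW10 pixel data, where four 10-bit pixels are packed into five bytes. This is a simplified sketch: the ECC is not verified, only a handful of data type codes are listed, and whether an application ever sees packed RAW10 depends on the SoC's receiver and driver.

```python
from typing import List, NamedTuple

# A few CSI-2 data type codes (6-bit), listed for illustration only.
DATA_TYPES = {
    0x00: "Frame Start",
    0x01: "Frame End",
    0x2A: "RAW8",
    0x2B: "RAW10",
    0x2C: "RAW12",
}


class PacketHeader(NamedTuple):
    virtual_channel: int
    data_type: int
    word_count: int  # long packets: payload length in bytes; short packets: 16-bit data field
    ecc: int


def parse_header(header: bytes) -> PacketHeader:
    """Split a 4-byte CSI-2 (v1.x) packet header into its fields; the ECC is returned, not checked."""
    if len(header) != 4:
        raise ValueError("a CSI-2 packet header is 4 bytes long")
    data_id = header[0]
    return PacketHeader(
        virtual_channel=data_id >> 6,
        data_type=data_id & 0x3F,
        word_count=header[1] | (header[2] << 8),  # least significant byte first
        ecc=header[3],
    )


def unpack_raw10(payload: bytes) -> List[int]:
    """Unpack RAW10: every 5 bytes carry 4 pixels.

    Bytes 0-3 hold the 8 most significant bits of pixels 0-3; byte 4 holds the
    2 least significant bits of each pixel, pixel 0 in bits 1:0.
    """
    if len(payload) % 5:
        raise ValueError("RAW10 payload length must be a multiple of 5")
    pixels = []
    for i in range(0, len(payload), 5):
        lsbs = payload[i + 4]
        for p in range(4):
            pixels.append((payload[i + p] << 2) | ((lsbs >> (2 * p)) & 0x3))
    return pixels
```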

LVDS is a widely used connection for high-speed data transfer with defined signal transmission principles, but it has no standardized software protocol for image data transfer either. As a result, no standard drivers exist. Some manufacturers provide complementary systems, i.e., cameras with LVDS output based on a proprietary protocol and processing boards with matching drivers, which work together directly. The advantage is a complete solution with low integration effort, but the user is restricted to certain hardware.
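
The following sketch shows why a driver cannot be written without the protocol description. The framing it decodes is entirely hypothetical, invented for this example (a 3-byte sync code followed by a frame-start or line-start code, with a fixed number of pixel bytes per line); it is not the protocol of any real camera. The decoder skips over each line's payload instead of searching it, so pixel values that happen to look like a sync code are not misinterpreted.

```python
from typing import Iterator, List

# Entirely hypothetical framing, invented for this sketch:
#   SYNC + FRAME_START  begins a new frame
#   SYNC + LINE_START   is followed by exactly `width` pixel bytes
SYNC = b"\xff\x00\x00"
FRAME_START = 0x01
LINE_START = 0x02


def decode_frames(stream: bytes, width: int, height: int) -> Iterator[List[bytes]]:
    """Yield each complete frame (a list of `height` lines) from a deserialized byte stream."""
    frame: List[bytes] = []
    in_frame = False
    i = 0
    while True:
        i = stream.find(SYNC, i)
        if i < 0 or i + len(SYNC) >= len(stream):
            return  # no further sync code (or no code byte after it)
        code = stream[i + len(SYNC)]
        i += len(SYNC) + 1
        if code == FRAME_START:
            frame, in_frame = [], True  # any partial frame is discarded
        elif code == LINE_START and in_frame:
            line = stream[i : i + width]
            if len(line) < width:
                return  # buffer ends in the middle of a line
            frame.append(line)
            i += width  # skip the payload so pixel data is never scanned for sync codes
            if len(frame) == height:
                yield frame
                frame, in_frame = [], False
```

With a different vendor's framing, every constant and branch above would change, which is the practical consequence of a missing standard software protocol.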

Other manufacturers provide an openly documented LVDS-based camera output that can be freely integrated with any hardware. In this case, a driver must be created. The most practical place to carry out this signal processing is an FPGA. Such FPGA-based image grabbing logic can be programmed from scratch, but tools are also available that reduce the integration work.
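
One early task of such FPGA image grabbing logic is typically word alignment: the receiver gets a continuous bit stream per lane and has to find where each pixel word starts. A common approach, modelled below in Python rather than written as FPGA code, is to shift the word boundary one bit at a time (the "bit-slip" step offered by many FPGA deserializer primitives) until a known training pattern is received repeatedly. The 10-bit training word `0x3E0`, the MSB-first bit order and the requirement of three matching words are assumptions for illustration; a real camera's documentation defines these values.

```python
from typing import List, Optional


def to_bits(words: List[int], word_bits: int) -> List[int]:
    """Serialize words MSB first, as the transmitter is assumed to shift them out."""
    return [(w >> (word_bits - 1 - k)) & 1 for w in words for k in range(word_bits)]


def word_at(bits: List[int], start: int, word_bits: int) -> int:
    """Reassemble one word from `word_bits` consecutive bits, MSB first."""
    value = 0
    for b in bits[start : start + word_bits]:
        value = (value << 1) | b
    return value


def find_alignment(bits: List[int], training: int, word_bits: int,
                   repeats: int = 3) -> Optional[int]:
    """Return the bit offset at which `repeats` consecutive words equal the training pattern.

    Mimics bit-slip alignment: each of the `word_bits` possible offsets is tried,
    and None means the link could not be aligned.
    """
    for offset in range(word_bits):
        if offset + repeats * word_bits > len(bits):
            break
        if all(word_at(bits, offset + r * word_bits, word_bits) == training
               for r in range(repeats)):
            return offset
    return None


def deserialize(bits: List[int], offset: int, word_bits: int) -> List[int]:
    """Cut the aligned bit stream into words."""
    return [word_at(bits, i, word_bits)
            for i in range(offset, len(bits) - word_bits + 1, word_bits)]
```

The training word should differ from all of its own bit rotations (a single run of ones, as in `0x3E0`, has this property); otherwise more than one offset could match.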

For example, predeveloped IP cores are available for use on such an FPGA. For its dart board-level camera with LVDS interface, Basler (Ahrensburg, Germany; www.baslerweb.com) provides a development kit that includes a processing board with a reference implementation (FPGA programming), giving a direct example of how to integrate the vision device.

Camera configuration

Another aspect of these board-to-board connections is camera configuration. Control signals can be exchanged between the SoC and the camera via various bus systems, e.g., CAN, SPI or I²C. So far, no standard has been established for this functionality. Which imaging parameters can be controlled, and how, depends on the camera manufacturer. Even whether GenICam is supported depends on the manufacturer. The good news, however, is that most SoCs support all these bus systems. Thus, with the appropriate driver, software can directly access the camera to configure it and change imaging parameters.
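
At the lowest level, such a configuration access is just a few bytes on the bus. The sketch below assumes a hypothetical register layout (16-bit register addresses and 32-bit values, both sent big-endian) and a hypothetical device address; real cameras define their own layouts, which is exactly why the manufacturer's documentation or driver is needed. `FakeI2CBus` is an in-memory stand-in so the example runs without hardware; on Linux, a real implementation would typically go through the kernel's i2c-dev interface instead.

```python
from typing import Dict, List, Tuple


class FakeI2CBus:
    """In-memory stand-in for an I2C controller.

    Records every write as (device_address, bytes) and answers reads from a
    per-device register dictionary.
    """

    def __init__(self) -> None:
        self.log: List[Tuple[int, bytes]] = []
        self.registers: Dict[Tuple[int, int], int] = {}

    def write(self, device: int, data: bytes) -> None:
        self.log.append((device, data))
        reg = int.from_bytes(data[:2], "big")
        if len(data) > 2:
            self.registers[(device, reg)] = int.from_bytes(data[2:], "big")

    def write_read(self, device: int, data: bytes, length: int) -> bytes:
        reg = int.from_bytes(data[:2], "big")
        return self.registers.get((device, reg), 0).to_bytes(length, "big")


def write_register(bus: FakeI2CBus, device: int, reg: int, value: int) -> None:
    """Write a 32-bit value to a 16-bit register address (assumed big-endian layout)."""
    bus.write(device, reg.to_bytes(2, "big") + value.to_bytes(4, "big"))


def read_register(bus: FakeI2CBus, device: int, reg: int) -> int:
    """Send the register address, then read back 4 bytes in one combined transfer."""
    return int.from_bytes(bus.write_read(device, reg.to_bytes(2, "big"), 4), "big")


if __name__ == "__main__":
    bus = FakeI2CBus()
    write_register(bus, 0x36, 0x0100, 20000)  # hypothetical device and register addresses
    print(bus.log[0][1].hex(), read_register(bus, 0x36, 0x0100))
```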

An openly documented software protocol is also important for accessing the camera configuration. Basler's pylon SDK supports camera access via I²C (as part of the BCON for LVDS interface) and thus provides standardized APIs (e.g., in C++) that simplify configuration programming (Figure 4).
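
A standardized API mainly hides register addresses behind named, range-checked parameters. The sketch below shows that idea in miniature; it is not pylon's API. The parameter names follow GenICam's naming convention (`ExposureTime`, `Gain`), but the register addresses, limits and the `Camera` class are hypothetical, and the register space is a plain dictionary rather than an I²C connection. In GenICam, the mapping from feature names to registers is described in an XML file provided by the camera.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Feature:
    address: int  # hypothetical register address
    minimum: int
    maximum: int


# Hypothetical feature table; in GenICam, the camera's XML description file plays this role.
FEATURES: Dict[str, Feature] = {
    "ExposureTime": Feature(address=0x0100, minimum=10, maximum=1_000_000),  # microseconds
    "Gain": Feature(address=0x0104, minimum=0, maximum=480),  # raw gain steps (hypothetical)
}


class Camera:
    """Name-based, range-checked configuration on top of raw register access."""

    def __init__(self, registers: Dict[int, int]) -> None:
        # A plain dictionary stands in for the camera's register space on the bus.
        self._registers = registers

    def set(self, name: str, value: int) -> None:
        feature = FEATURES[name]  # unknown feature names raise KeyError
        if not feature.minimum <= value <= feature.maximum:
            raise ValueError(f"{name}={value} outside [{feature.minimum}, {feature.maximum}]")
        self._registers[feature.address] = value

    def get(self, name: str) -> int:
        return self._registers.get(FEATURES[name].address, 0)


if __name__ == "__main__":
    cam = Camera({})
    cam.set("ExposureTime", 5000)
    print(cam.get("ExposureTime"))
```

The application code only uses names such as `ExposureTime`; if a different camera places that parameter at another address, only the feature table changes.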

Conclusion

Embedded vision can be an interesting solution for certain applications; several applications based on GigE or, more typically, USB can be developed using single-board computers. Because this type of hardware is popular and available across a broad range of prices, performance levels and quality standards (consumer and business), it is a reasonable option in many cases.

For a more direct interface, LVDS- or CSI2-based camera-to-SoC connections are possible for image data transfer. However, no comprehensive industrial standard has been established that covers a broad variety of technological requirements or all platforms. Hence, both methods have limitations when it comes to creating easy integration solutions.

Adaptation and integration work is necessary, but companies provide drivers, sample kits, SDKs or other tools to make integration easier for the user. Each of these interfaces has advantages for particular applications and use cases. The camera configuration accompanying these interfaces is generally easier, since the bus standards and drivers already exist. With an SDK or other tools, integration and operation can be simplified even further.

Generally, for better integration of cameras with a direct connection to the SoC, the development and broad adoption of standards are essential. With generic drivers and standardized data APIs built on such standards, a true image processing pipeline working out of the box (without adaptive programming) could be achieved. This would make integrating vision technology into even the smallest and leanest embedded systems as easy as it already is for today's (I)PC-based machine vision.

Dr. Thomas Rademacher, Product Market Manager - Factory & Traffic, Basler, Ahrensburg, Germany (www.baslerweb.com)