LEADING EDGE VIEWS - Machine Vision Gets Moving: Part II

Ned Lecky

Determining the dynamic range of a vision system—the ratio between the largest and smallest possible values of the light that can be captured by the camera—is one of the key considerations for any developer designing a system to meet the exigencies of the transportation marketplace.

In transportation systems, vision systems are often expected to perform over a range of lighting similar to that handled by the human eye, a variation of approximately nine orders of magnitude.

Reaching nine orders of magnitude of light-intensity variation in a machine-vision system is challenging. Automatic exposure control, however, might provide an additional three orders of magnitude, automatic gain control another two, and an auto-iris lens with a motorized aperture another three.
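Because each mechanism multiplies the usable intensity ratio, the orders of magnitude quoted above simply add. The short tally below is only a sketch of that arithmetic; the dictionary and function names are illustrative, and treating the shortfall as something the sensor or other measures must cover is an assumption, not a figure from any particular camera.

```python
# Orders of magnitude quoted in the text for each mechanism.
CONTRIBUTIONS = {
    "automatic exposure control": 3,
    "automatic gain control": 2,
    "auto-iris lens": 3,
}

TARGET_ORDERS = 9  # approximate lighting range of the human eye, per the text


def total_orders(contributions):
    """Orders of magnitude add because the underlying ratios multiply."""
    return sum(contributions.values())


def remaining_orders(contributions, target=TARGET_ORDERS):
    """Orders of magnitude left for the sensor or other means (assumption)."""
    return target - total_orders(contributions)


if __name__ == "__main__":
    print(total_orders(CONTRIBUTIONS))      # 8
    print(remaining_orders(CONTRIBUTIONS))  # 1
```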

In the transportation segment, unlike in many machine-vision applications, being able to control the automatic gain of a camera is vital. Because the captured images tend to be split-contrast images comprising shadows and moving targets, a practical automatic gain implementation must be able to identify a specific area of interest in the image, which then dictates when and how quickly the gain is adjusted. Due to the application-specific nature of each system, custom gain solutions are almost inevitably required.
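One way to picture such a custom gain loop is shown below. It is a minimal sketch, not the method used in any specific system: the image is assumed to be a list of rows of 8-bit gray values, the area of interest is assumed to be a fixed rectangle, and the names `roi_mean`, `next_gain`, `target`, and `max_step` are all hypothetical. The `max_step` limit stands in for the speed of adjustment, and `max_gain` caps amplification.

```python
def roi_mean(image, roi):
    """Mean pixel value inside roi = (top, left, height, width)."""
    top, left, height, width = roi
    rows = image[top:top + height]
    pixels = [p for row in rows for p in row[left:left + width]]
    return sum(pixels) / len(pixels)


def next_gain(gain, image, roi, target=128.0, max_step=0.1,
              min_gain=1.0, max_gain=8.0):
    """Move the gain toward the value that brings the ROI mean to target.

    max_step limits the fractional change per frame (adjustment speed);
    min_gain and max_gain clamp the result.
    """
    mean = roi_mean(image, roi)
    # A completely dark ROI asks for as much gain as is allowed.
    desired = max_gain if mean <= 0 else gain * target / mean
    lower = gain * (1.0 - max_step)
    upper = gain * (1.0 + max_step)
    stepped = min(max(desired, lower), upper)
    return min(max(stepped, min_gain), max_gain)
```

Only the pixels inside the rectangle influence the result, so a deep shadow or a bright sky elsewhere in the frame does not drag the gain away from the target.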

Similarly, automatic exposure control is almost always needed to provide the necessary dynamic range. Because the maximum exposure time is a function of vehicle speed, it is important to calculate what part of a vehicle seems to be moving the fastest; typically, it is the part closest to the camera. That may be accomplished using a series of computational algorithms that may need to be changed from one vehicle to the next.
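The link between vehicle speed and maximum exposure time can be sketched with simple geometry: during the exposure, the target should move by no more than a chosen number of pixels. The function below assumes motion parallel to the image plane and a known field of view at the fastest-appearing (closest) part of the vehicle; the function name, the parameters, and the one-pixel blur budget are illustrative assumptions.

```python
def max_exposure_s(speed_m_s, fov_width_m, image_width_px, max_blur_px=1.0):
    """Longest exposure (seconds) that keeps motion blur within max_blur_px.

    speed_m_s      -- target speed across the field of view
    fov_width_m    -- field-of-view width at the target's distance
    image_width_px -- horizontal resolution of the sensor
    """
    if speed_m_s <= 0:
        return float("inf")  # a stationary target imposes no blur limit
    pixels_per_meter = image_width_px / fov_width_m
    return max_blur_px / (speed_m_s * pixels_per_meter)


if __name__ == "__main__":
    # Hypothetical case: 30 m/s vehicle, 4 m field of view, 640-pixel-wide image.
    print(max_exposure_s(30.0, 4.0, 640))  # 1/4800 s, roughly 0.0002 s
```

A closer part of the vehicle covers more pixels per meter, which is why it sets the tightest limit.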

Although auto-iris and automatic gain control are often used in these systems, their use does affect the quality of the acquired image. Therefore, it is necessary to limit the maximum exposure time of the camera to minimize image blurring. Limiting maximum gain in the camera can also help prevent graininess and noise on the image from analog amplifiers in the camera.

Another technique that can be employed is to record image-acquisition parameters such as aperture setting and exposure when the image is acquired, either by stamping them into the image or by building them into the image filename. That at least provides an opportunity to use the data to compensate for any quality issues in the image at some later date.
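As an illustration of the filename approach, the sketch below encodes exposure, gain, and aperture into a name and recovers them later. The naming scheme, the units, and both function names are invented for this example; a real system would follow its own conventions.

```python
import re
from datetime import datetime

# Hypothetical naming scheme: 20240102T030405_exp250us_gain6p0dB_f2p8.jpg
_PATTERN = re.compile(
    r"^(?P<stamp>\d{8}T\d{6})_exp(?P<exp>\d+)us_"
    r"gain(?P<gain>\d+p\d)dB_f(?P<f>\d+p\d)\.\w+$"
)


def build_image_filename(timestamp, exposure_us, gain_db, f_number, ext="jpg"):
    """Encode acquisition parameters in the filename ('p' replaces '.')."""
    stamp = timestamp.strftime("%Y%m%dT%H%M%S")
    gain_tag = f"{gain_db:.1f}".replace(".", "p")
    f_tag = f"{f_number:.1f}".replace(".", "p")
    return f"{stamp}_exp{exposure_us}us_gain{gain_tag}dB_f{f_tag}.{ext}"


def parse_image_filename(name):
    """Recover the acquisition parameters from a name built above."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized image filename: {name!r}")
    return {
        "timestamp": datetime.strptime(match["stamp"], "%Y%m%dT%H%M%S"),
        "exposure_us": int(match["exp"]),
        "gain_db": float(match["gain"].replace("p", ".")),
        "f_number": float(match["f"].replace("p", ".")),
    }
```

Keeping the parameters in the name means they survive even if the image is later converted to a format that drops embedded metadata.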

One further means of extending the dynamic range is to use an infrared (IR) filter designed to block IR wavelengths while passing visible light. Such IR filters can provide another factor of two in dynamic range, but because the filters cut down the amount of light that enters the camera, a mechanical changeover of the filter is required if the system is to operate at night.

The Bayer filters used on color cameras are IR-transparent, so without an IR filter, it is almost impossible to capture a respectable color image during daylight operation.

A camera with a built-in motorized IR filter will, however, allow a system to capture good color images during the day (see Fig. 1). Although there is no IR filter that will eliminate all the infrared radiation from the sun, the amount of IR can still be dramatically reduced relative to the IR from the system's strobe. At night, while illuminating a scene with monochromatic IR, or even simply very dim light, the filter can be automatically removed to allow as many photons of light to enter the camera as possible.

In many applications, developers might also consider using both an IR-compensated lens and a removable filter on their systems. At night, the IR-compensated lens will then focus IR light in the same way that it focuses visible light during the day, without the need to adjust the focus on the lens.

When lighting a scene with an IR strobe, it may be advantageous to use a long-pass filter, one that passes the longer IR wavelengths and blocks shorter ones, to eliminate as much visible light as possible so the camera effectively detects only the IR light from the strobe.

Selecting the appropriate enclosure for the vision system is also a key consideration, especially when one accounts for the extreme conditions to which many transportation systems are exposed. A multitude of off-the-shelf enclosures are large enough to house a strobe and a camera (see Fig. 2). There are also many vendors who will create a custom enclosure for a specific application to meet different environmental specifications.

Sensors and cameras

One important factor for any system integrator is selecting a camera with a sensor that offers an appropriate dynamic range, resolution, and speed. Here, the obvious decision to be made is whether to choose a camera based on a CCD device or a CMOS device.

Traditionally, CCD-based cameras have been considered to be more sensitive and provide a greater dynamic range than their CMOS counterparts. But although this was true in the early days of such devices, today's CMOS sensors continue to improve in quality and in dynamic range to the point where they can provide a suitable alternative to CCD devices.

In deciding between a CCD and a CMOS sensor, it is important to consider the nature of the application. Even if a CCD imager provides twice the sensitivity of its CMOS counterpart, doubling the sensitivity adds only about 0.3 of one order of magnitude of lighting variation.

This is a small contribution compared to the alternative schemes previously mentioned that can be employed to reach the nine orders of magnitude of light-intensity variation that may be required in an imaging system for the transportation marketplace.
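The comparison rests on the fact that an order of magnitude is a factor of ten, so a sensitivity ratio converts to orders of magnitude through a base-10 logarithm. A minimal check, with an illustrative function name:

```python
import math


def orders_of_magnitude(ratio):
    """Express an intensity or sensitivity ratio in orders of magnitude."""
    return math.log10(ratio)


if __name__ == "__main__":
    print(round(orders_of_magnitude(2), 2))     # 0.3  (doubled sensitivity)
    print(round(orders_of_magnitude(1000), 2))  # 3.0  (three orders of magnitude)
```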

The specific camera chosen for any task is dictated by the needs of the application. To determine the speed of a vehicle by performing frame-by-frame pattern recognition, a low-cost camera with VGA resolution running at a relatively high frame rate will suffice. Imaging the back of a vehicle to check whether a padlock is locked or is open, on the other hand, would require the use of a higher-resolution 2-Mpixel or 3-Mpixel color imager.

The choice of a wide-format or a standard 4:3 sensor is application-dependent as well. To image the back of a tractor trailer, a 4:3-format sensor would be suitable; a wide-format camera might be more appropriate to image the side of a very long vehicle, like a boxcar.

Image-processing systems in the transportation marketplace also demand a different style of lens from those used in industrial processes. For example, an industrial vision system might require a limited field of view, perhaps less than 1 in., so a 50-mm lens would suffice. A transportation system may demand a field of view greater than 12 ft, so a 4-mm wide-angle lens is more likely to be employed.
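The relationship between field of view and focal length can be estimated with the pinhole approximation, which holds when the working distance is much larger than the focal length. In the sketch below, the 4.8-mm sensor width (nominal for a 1/3-in. format) and the 10-ft working distance are assumptions chosen for illustration, as is the function name.

```python
def focal_length_mm(sensor_width_mm, working_distance_mm, fov_width_mm):
    """Pinhole estimate: focal length / distance = sensor width / field of view."""
    return sensor_width_mm * working_distance_mm / fov_width_mm


if __name__ == "__main__":
    FT_TO_MM = 304.8
    # Assumed: 4.8-mm-wide sensor, 10-ft working distance, 12-ft field of view.
    f = focal_length_mm(4.8, 10 * FT_TO_MM, 12 * FT_TO_MM)
    print(round(f, 1))  # 4.0
```

At the close working distances typical of the industrial case, the pinhole approximation becomes less accurate and a thin-lens calculation would be more appropriate.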

Both laser and acoustic sensors are also very popular in transportation applications. Like cameras, they can be used to detect the presence or absence of an object as well as to determine vehicle speed. As effective as they are, though, they also have drawbacks. For example, both types of sensors can be temperamental in difficult weather conditions. So while they can play a role in many transportation systems, their advantages, disadvantages, and cost must be carefully weighed before deployment.

Capturing color data

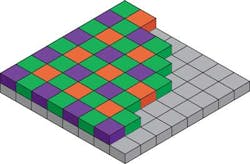

Capturing the color of vehicles is a goal of many image-processing systems in the transportation industry. Most of the sensors used in mid-range color cameras have a Bayer filter array that places red, green, and blue color filters over a square grid of photosensors (see Fig. 3). Since human vision is most sensitive to green light, in the middle of the visible spectrum, there are twice as many green filters as there are red or blue ones.

To obtain a full-color image, various demosaicing algorithms are used to interpolate a complete set of red, green, and blue values for each point, after which the data can be re-represented in some other format such as RGB, YUV, or subsampled YUV 4:1:1 or 4:2:2.
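To make the interpolation step concrete, the sketch below performs a very simple demosaic: each missing color at a pixel is taken as the mean of the same-color samples in the surrounding 3 x 3 window, which matches bilinear interpolation away from the image borders. The RGGB layout and the function names are assumptions; production cameras typically use more elaborate, edge-aware algorithms.

```python
def bayer_color(y, x):
    """Filter color at row y, column x, assuming an RGGB layout."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic(raw):
    """Return rows of (R, G, B) tuples from a raw mosaic at least 2 x 2 in size.

    Each missing color is the mean of the same-color samples in the
    surrounding 3 x 3 window; the color actually measured at a pixel is kept.
    """
    height, width = len(raw), len(raw[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for ny in range(max(0, y - 1), min(height, y + 2)):
                for nx in range(max(0, x - 1), min(width, x + 2)):
                    color = bayer_color(ny, nx)
                    sums[color] += raw[ny][nx]
                    counts[color] += 1
            pixel = {c: sums[c] / counts[c] for c in "RGB"}
            pixel[bayer_color(y, x)] = float(raw[y][x])  # keep measured sample
            row.append((pixel["R"], pixel["G"], pixel["B"]))
        out.append(row)
    return out
```

In any 2 x 2 cell of this layout, two of the four sites are green, which is the two-to-one ratio of green filters described above.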

In transportation systems, there may be as many as 60 different formats of color data being captured by various cameras. The color data are frequently converted into monochrome format, but the computational burden of converting and then storing large images at high speeds is often so great that many system integrators opt to use two cameras, one low-resolution monochrome camera and one high-resolution color camera, both pointing at the same target.

Enhancing the means by which images can be compressed is equally important because if the image data were to be stored in a raw form on a disk, in a matter of minutes, terabytes of disk space could be consumed. So while image processing is performed on an uncompressed image and important data extracted from it, any images that need to be stored are converted into JPEG format, after which they are stored on disk.
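How quickly raw storage fills depends on resolution, bytes per pixel, frame rate, and the number of cameras. The helper below is only a back-of-the-envelope sketch with illustrative names; the figures a given installation would plug in are not taken from this article.

```python
def raw_data_rate_bytes_per_s(width_px, height_px, bytes_per_pixel, fps,
                              cameras=1):
    """Uncompressed data rate for a set of identical cameras."""
    return width_px * height_px * bytes_per_pixel * fps * cameras


def minutes_to_fill(capacity_bytes, rate_bytes_per_s):
    """Minutes until a disk of the given capacity is full at that rate."""
    return capacity_bytes / rate_bytes_per_s / 60.0


if __name__ == "__main__":
    # Hypothetical: eight 1920 x 1080 color cameras, 3 bytes/pixel, 30 frames/s.
    rate = raw_data_rate_bytes_per_s(1920, 1080, 3, 30, cameras=8)
    print(rate)                         # bytes per second, uncompressed
    print(minutes_to_fill(1e12, rate))  # minutes to consume one terabyte
```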

Unfortunately, JPEG was designed to be a fast decompression algorithm, not a fast compressor; implementing high-performance compression requires a custom implementation of the JPEG compression algorithm that can be run many times over an image.

In Part III of the series, Ned Lecky will examine the selection of computers and software available for intelligent transportation systems.