EMBEDDED VISION: Low-cost embedded devices boost end-user applications

Today's smart cameras employ a number of different types of processors such as central processing units (CPUs), digital signal processors (DSPs), and field-programmable gate array (FPGA) cores. These devices allow smart cameras to take over much of the image processing formerly handled by complex systems configured with cameras, frame grabbers, and computers. To accomplish the task, developers require low-cost, low-power, and programmable image processors, a need that was highlighted at the September 2012 Embedded Vision Summit held in Boston, MA.

Organized by Jeff Bier, founder of the Embedded Vision Alliance (www.embedded-vision.com) and president of BDTI (www.bdti.com), the single-day Embedded Vision Summit was held in conjunction with UBM's Design East trade show and conference. The summit premiered with a full program of presentations and product demonstrations from many of the major processor vendors and their customers.

By their very nature, image-processing algorithms fall into two broad categories: those that can be implemented in a parallel fashion such as point and neighborhood operations, and those that require a global approach such as feature extraction and image segmentation algorithms.
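
To make the distinction concrete, the sketch below contrasts a point operation (thresholding) with a neighborhood operation (a 3x3 box blur). It is only an illustration under stated assumptions: the function names, the tiny test image, and the choice to copy border pixels unchanged are all hypothetical and do not come from any vendor's library.

```python
# Illustrative sketch: a point operation and a neighborhood operation on a
# small grayscale image stored as a list of rows (pixel values 0-255).

def threshold(image, level):
    """Point operation: each output pixel depends only on the same input pixel."""
    return [[255 if px >= level else 0 for px in row] for row in image]


def box_blur_3x3(image):
    """Neighborhood operation: each output pixel is the mean of a 3x3 window.

    Border pixels are copied unchanged to keep the sketch short (an assumption).
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            window_sum = sum(
                image[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
            out[y][x] = window_sum // 9  # integer mean of the nine neighbors
    return out


image = [
    [0, 0, 0, 0],
    [0, 90, 180, 0],
    [0, 180, 90, 0],
    [0, 0, 0, 0],
]
binary = threshold(image, 100)
blurred = box_blur_3x3(image)
```

In both functions every output pixel can be computed independently of the others, which is why such operations lend themselves to parallel or pipelined hardware. Global algorithms such as segmentation need information from across the whole image and do not decompose as neatly.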

In the past, point operations have been implemented in a pipelined fashion using FPGAs, whereas global transforms have fallen to CPUs, DSPs, and graphics processing units (GPUs). Recognizing the importance of imaging in embedded applications, semiconductor vendors such as Analog Devices (www.analog.com), ARM (www.arm.com), Texas Instruments (www.ti.com), and Xilinx (www.xilinx.com) now offer products that combine multiple processor types to meet these demands.

While multiprocessor products may be more complex to program, they will allow low-cost (sub-$50), high-volume systems to be developed. Each processor manufacturer has taken a different approach to helping developers optimize their image-processing code.

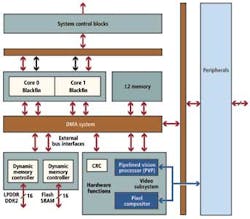

In the latest generation of Analog Devices' Blackfin processors, the dual-core ADSP-BF608 and ADSP-BF609 (500 MHz per core) incorporate a Pipelined Vision Processor (PVP) that comprises a set of configurable processing blocks designed to accelerate up to five concurrent image-processing algorithms (see Fig. 1). Targeted at large-volume imaging applications such as automotive driver assistance systems (ADAS) and security/surveillance systems, the PVP operates in conjunction with the Blackfin cores to optimize convolution and wavelet-based object detection and classification, as well as tracking and verification algorithms.

Rather than offer a pipelined image processor in its lineup, ARM has opted for a single-instruction-multiple-data (SIMD) approach with its Neon technology. This programmable approach is well suited to image-processing algorithms such as Bayer interpolation and image filtering because a single instruction can perform the same operation on many data elements at once, a good match for large datasets such as images.

A 128-bit SIMD architecture extension for the ARM Cortex-A series of processors, Neon features 32 registers, 64 bits wide (which can also be viewed as 16 registers, 128 bits wide). Of course, such SIMD designs require developers to vectorize their code if it is to run optimally.
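
As a rough illustration of what vectorizing means in practice, the sketch below works out how many elements fit in one 128-bit register and then processes a pixel buffer in register-sized chunks. It is plain Python that only mimics the data layout, not Neon code; the function names and the brightening operation are hypothetical choices made for the example.

```python
REGISTER_BITS = 128  # width of one quadword register, as described in the text


def lanes(element_bits):
    """Number of elements of the given width that fit in one 128-bit register."""
    return REGISTER_BITS // element_bits


def saturating_add_chunked(pixels, offset, element_bits=8):
    """Brighten 8-bit pixels one register-sized chunk at a time.

    Each loop iteration stands in for one vector load, one saturating add
    applied to every lane, and one vector store. Slicing handles a short
    final chunk here; real SIMD code needs an explicit scalar tail or padding.
    """
    n = lanes(element_bits)
    out = []
    for start in range(0, len(pixels), n):
        chunk = pixels[start:start + n]                      # "vector load"
        out.extend(min(px + offset, 255) for px in chunk)    # same op on every lane
    return out


row = [0, 100, 250] * 7           # 21 pixels: one full 16-lane chunk plus a tail of 5
brighter = saturating_add_chunked(row, 10)
```

With 8-bit pixels a 128-bit register holds 16 lanes, so the 21-pixel row above needs two chunk iterations instead of 21 scalar ones. That ratio is the theoretical gain developers are chasing when they restructure loops for SIMD.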

As Apical chief executive officer Michael Tusch (www.apical.co.uk) pointed out in his presentation "Harnessing Parallel Processing to Get from Algorithms to Embedded Vision" at the Embedded Vision Summit, applying wide registers to a simple RGB-to-luminance conversion should theoretically result in a speed increase of more than three times.

"In many cases, however," says Tusch, "memory management and dataflow bottlenecks can reduce this to just a 30% speed increase."

Texas Instruments' OMAP5430 integrates multiple processors, including two ARM Cortex-A15 processors, two ARM Cortex-M4 processors, graphics accelerators, and a vision processing unit, along with numerous I/O features. While such integration is impressive, at present the device is intended for high-volume mobile OEMs such as smartphone and tablet manufacturers and is not available through distributors, as the company readily points out on its web site.

In many of today's cameras and frame grabber boards, FPGAs and CPUs are paired to partition imaging functions. In embedded vision systems where power, weight, and cost must be minimized, devices that combine general-purpose processors and FPGA fabric on a single chip are now appearing.

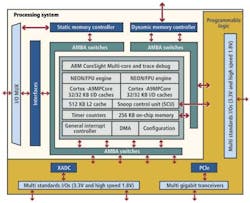

One such device, Xilinx's Zynq-7000 1-GHz system-on-a-chip (SoC), combines a dual-core ARM Cortex-A9 processor with 350k logic cells, sixteen 12.5-Gbit/sec serial transceivers, and 1334 GMACs peak DSP performance (see Fig. 2). The Zynq-7045 device pushes programmable system integration a step further by providing a hard PCIe Gen2 x8 block, with I/O that supports up to 1866 Mbits/sec for additional DDR3 memory interfaces and 1.6 Gbits/sec for LVDS interfaces in DDR mode.

The device is being used by OmniTek (www.omnitek.tv), a company specializing in the development of waveform monitors, video signal generators, and picture quality analyzers for SD, HD, and 3G video.

"Having an integrated solution with processor and programmable logic in a single device provides significant advantages over our existing two-chip design in terms of power reduction, size, and system performance," says Mike Hodson, OmniTek president.

"The added capability of operating the ARM processing system at 1 GHz provides us the headroom needed to run our video-processing GUI [graphical user interface] while simultaneously running several audio codecs and other real-time processing functions. The 12.5-GHz transceivers enable eight SDI video streams to be handled while also supporting 10G Ethernet for video over IP," explains Roger Fawcett, OmniTek managing director.