A spin-off of Montreal startup incubator TandemLaunch, AIRY3D (Montréal, QC, Canada; www.airy3d.com) takes an economical approach to 3D image sensor design. The company was founded in 2015 with the vision of enabling all machines to see the world in 3D by bringing 3D computer vision to any camera.

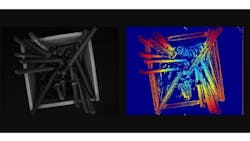

The company’s mission is to enable new capabilities, applications, and value in the computer vision ecosystem with unique approaches that transform generic 2D cameras into 3D cameras. With that in mind, AIRY3D developed DEPTHIQ, a passive, near-field, single-sensor, single-capture 3D solution that measures depth directly through diffraction, using an optical encoding transmissive diffraction mask (TDM) applied to a conventional 2D CMOS sensor. Together with image depth processing software, DEPTHIQ converts a 2D color or monochrome sensor into a 3D sensor that generates 2D images and inherently correlated depth maps, yielding depth per pixel (pictured above).

The following Q&A with Paul Gallagher, VP of Strategic Marketing, further describes the company’s technology and how and where it can benefit machine vision systems, and offers a look at the state of 3D imaging today.

What is unique about what you do?

AIRY3D has solved the major issues associated with deploying depth in high-volume, low-cost products: cost, power consumption, reliability, and manufacturing complexity. DEPTHIQ enables high-quality depth sensing at a much lower cost than conventional 3D technologies because it uses a single existing 2D CMOS image sensor to generate both the high-resolution 2D image and the 3D depth map. It does so with very lightweight processing: the physics of light diffraction supplies the disparity information on a pixel-by-pixel basis, which eliminates most of the computational burden.
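
To make “disparity” more concrete, here is a minimal sketch of how a per-pixel disparity value could map to depth under a generic thin-lens defocus model, the kind of geometry commonly used to describe phase-detection (dual-pixel) sensors. This is an illustrative assumption, not AIRY3D’s calibration: the function names, the lumped gain `k`, and the focus distance are all hypothetical. The model takes disparity to be proportional to the difference between inverse focus distance and inverse object distance, d = k·(1/z_focus − 1/z), so a point at the focus distance produces zero disparity and the sign tells whether a point is nearer or farther.

```python
import math


def disparity_from_depth(z: float, z_focus: float, k: float) -> float:
    """Disparity (pixels) of a point at depth z (metres), hypothetical thin-lens model.

    d = k * (1/z_focus - 1/z), where k (pixel-metres) lumps aperture size,
    lens-to-sensor distance and pixel pitch into one assumed calibration gain.
    """
    return k * (1.0 / z_focus - 1.0 / z)


def depth_from_disparity(d: float, z_focus: float, k: float) -> float:
    """Invert the model: 1/z = 1/z_focus - d/k."""
    inv_z = 1.0 / z_focus - d / k
    if inv_z <= 0.0:
        # Disparity at or beyond the value for a point at infinity: no finite depth.
        return math.inf
    return 1.0 / inv_z


if __name__ == "__main__":
    z_focus = 0.5  # assumed focus distance in metres
    k = 2.0        # assumed gain in pixel-metres (pixels per dioptre)
    for z in (0.25, 0.5, 1.0):
        d = disparity_from_depth(z, z_focus, k)
        z_back = depth_from_disparity(d, z_focus, k)
        print(f"z={z:.2f} m -> d={d:+.2f} px -> recovered z={z_back:.2f} m")
```

Under this model disparity is affine in inverse depth, so its sensitivity to depth (k/z²) falls off quadratically with distance, which is one way to see why this style of passive depth is positioned as a near-field technique. Disparity also saturates at k/z_focus as z grows without bound, which is why the inverse returns infinity at and beyond that value.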

What are the latest developments at AIRY3D?

To date, the DEPTHIQ design has been applied successfully to single-sensor 3D imaging solutions based on a wide range of CMOS image sensors. The TDM approach has been adapted both to backside-illuminated, high-volume, low-cost mobile sensors for smartphones and to frontside-illuminated, global-shutter, low-volume, high-performance machine vision sensors. TDM structures have been applied to sensors with pixel pitches from about 1 µm to more than 3 µm and pixel counts from about 2 to 20 MPixels.

AIRY3D is working to enable complete 3D solutions through efficient depth computation algorithms and integration with various embedded vision platforms.

What is your technology bringing to the 3D imaging arena? What problems does it solve?

Conventional depth technologies are expensive, space-consuming, power hungry, and limited in how they can be implemented for a given application. For these reasons, depth-based imaging has been able to address only the very high-end markets that can afford the burden of multiple components, extra processors, and significant implementation costs.

DEPTHIQ addresses these limitations of traditional depth solutions. It offers a significant cost advantage, lower power consumption, and a reduced computational load, and it can be designed more readily into very size-constrained applications or those where aesthetic concerns dominate.

Unlike traditional 3D approaches, DEPTHIQ needs only the 2D camera module, with no additional camera or module, and it works in sunlight, indoor, or near-infrared lighting without expensive specialty illumination.

By enabling faster, cheaper, and smaller 3D solutions for human and machine vision applications, DEPTHIQ can be deployed nearly anywhere a conventional camera is being used.

How is DEPTHIQ different from other 3D imaging solutions? How does it work?

DEPTHIQ adds a single mask step at the end of the sensor’s fabrication process to place an optical encoding TDM over the existing 2D image sensor. Only a few microns thick, the TDM is made of standard semiconductor materials and is customized for the sensor’s characteristics and for a variety of lenses, camera types, and working ranges. It maintains the low-light performance of the underlying camera and requires no changes to the lens or other camera module components of a deployed 2D imaging solution. Through diffraction, the TDM encodes the phase and direction of incoming light, which carry information about the depth of the light-emitting source, into the light’s intensity, generating unique, inherently compressed raw data sets.
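
The idea of encoding direction into intensity can be illustrated with a toy model of two neighbouring pixels whose responses shift in opposite directions as the angle of incoming light changes. This model is an assumption for illustration, not AIRY3D’s TDM design: each pixel receives half the light, modulated by ±m·sin(β·θ), where the modulation depth `m` and angular sensitivity `beta` are hypothetical values. The sketch shows how the sum of the pair can restore the ordinary 2D intensity while the brightness-normalized difference isolates the angle.

```python
import math
from typing import Tuple


def tdm_pixel_pair(intensity: float, angle_rad: float,
                   m: float = 0.3, beta: float = 8.0) -> Tuple[float, float]:
    """Toy response of two complementary angle-sensitive pixels.

    Hypothetical model: each pixel gets half the light, modulated in opposite
    directions by m * sin(beta * angle). Not actual TDM parameters.
    """
    s = m * math.sin(beta * angle_rad)
    return 0.5 * intensity * (1 + s), 0.5 * intensity * (1 - s)


def decode_pair(i_a: float, i_b: float,
                m: float = 0.3, beta: float = 8.0) -> Tuple[float, float]:
    """Recover (intensity, angle) from a pixel pair under the same toy model.

    The sum restores the 2D intensity; the normalized difference cancels the
    unknown brightness and leaves m * sin(beta * angle).
    """
    total = i_a + i_b
    if total <= 0.0:
        return 0.0, 0.0  # no light: angle undefined, report zero
    norm_diff = (i_a - i_b) / total
    # Clamp against rounding before inverting; valid while |beta * angle| <= pi/2.
    ratio = max(-1.0, min(1.0, norm_diff / m))
    return total, math.asin(ratio) / beta


if __name__ == "__main__":
    for brightness in (100.0, 1000.0):
        a, b = tdm_pixel_pair(brightness, 0.05)
        total, angle = decode_pair(a, b)
        print(f"I={brightness:.0f}: pixels=({a:.1f}, {b:.1f}) -> "
              f"intensity={total:.1f}, angle={angle:.3f} rad")
```

In this toy model, the same incident angle decodes identically whether the scene is dim or bright, which is the property that lets one raw capture carry both an image and a direction cue.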

DEPTHIQ’s lightweight image depth processing (IDP) software generates high-frame-rate sparse depth maps aligned with the RGB image data, as well as dense depth maps, while preserving the 2D image information, resolution, and quality. It does so with in-stream video processing, without the need for frame buffering. Moreover, the proprietary IDP computational imaging algorithm can be dropped into any image processing platform: CPU, GPU, DSP, FPGA, or ASIC.
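
In-stream processing means depth can be computed as rows arrive from the sensor instead of after a whole frame has been stored. The sketch below illustrates that general pattern only; it is not IDP. It assumes a hypothetical raw layout in which each row is a list of (i_a, i_b) pixel pairs, uses each pair’s brightness-normalized difference as a stand-in depth cue, and emits a sparse sample only where the cue is likely to be usable, here approximated by hypothetical brightness and local-contrast thresholds. The `to_depth` callable stands in for whatever calibration would map the cue to metres.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Row = List[Tuple[float, float]]   # hypothetical raw row: (i_a, i_b) pixel pairs
Sample = Tuple[int, int, float]   # (row index, column index, depth)


def stream_sparse_depth(rows: Iterable[Row],
                        to_depth: Callable[[float], float],
                        min_total: float = 20.0,
                        min_gradient: float = 5.0) -> Iterator[Sample]:
    """Yield sparse depth samples row by row, holding only one row in memory.

    Hypothetical rules: a pixel pair gets a depth value only if it is bright
    enough (sum >= min_total) and lies on local image structure (horizontal
    intensity gradient >= min_gradient), since uniform regions typically carry
    little depth cue for passive sensors.
    """
    for r, row in enumerate(rows):
        totals = [a + b for a, b in row]  # ordinary 2D intensity for this row
        for c in range(1, len(row) - 1):
            if totals[c] < min_total:
                continue
            gradient = abs(totals[c + 1] - totals[c - 1]) / 2.0
            if gradient < min_gradient:
                continue
            a, b = row[c]
            cue = (a - b) / totals[c]  # brightness-normalized depth cue
            yield r, c, to_depth(cue)


if __name__ == "__main__":
    # Synthetic row: flat region, an edge, flat region. All values are made up.
    rows = [[(10.0, 10.0)] * 4 + [(30.0, 20.0), (60.0, 40.0)] + [(60.0, 40.0)] * 4]
    for sample in stream_sparse_depth(rows, to_depth=lambda cue: 1.0 + cue):
        print(sample)
```

Because the function is a generator, a consumer receives samples for a row while later rows are still being read out, and memory use is bounded by a single row rather than a frame; a denser map would typically come from a separate fill-in step.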

In which areas or applications do you see the most growth?

DEPTHIQ brings depth to numerous use cases that want or need it but cannot deploy 3D solutions because of the cost, size, or power requirements of conventional depth technologies. We see an opportunity to accelerate adoption of high-end features such as object segmentation, facial and gesture recognition, and object detection and monitoring in security, industrial robotics, consumer electronics, and automotive applications.

What is your take on the current state of the machine vision market?

The machine vision market is strongly focused on deploying depth-based solutions to enhance detection, tracking, navigation, metrology, and isolation. This has not been easy, though.

Many traditional depth approaches require very tight alignment tolerances between the multiple components needed to generate the depth image. If those components move relative to each other, even slightly through thermal expansion of the platform they are mounted on, the system can report incorrect depth.
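
A short calculation shows why small mechanical shifts matter for multi-component systems. The sketch assumes a standard rectified two-camera stereo rig, where depth is z = f·B/d (focal length f in pixels, baseline B in metres, disparity d in pixels), and models misalignment as a small uncalibrated relative yaw rotation Δθ, which shifts every disparity by roughly f·Δθ pixels. The rig parameters and drift value are illustrative, not taken from any product.

```python
def stereo_depth(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from disparity for a rectified stereo pair: z = f * B / d (d > 0)."""
    return f_px * baseline_m / disparity_px


def depth_after_yaw_drift(z_true_m: float, f_px: float, baseline_m: float,
                          yaw_rad: float) -> float:
    """Depth the rig reports after an uncalibrated relative yaw of yaw_rad.

    Small-angle approximation: the rotation adds about f * yaw pixels to every
    disparity, while the depth formula still uses the original calibration.
    """
    true_disparity = f_px * baseline_m / z_true_m
    return stereo_depth(f_px, baseline_m, true_disparity + f_px * yaw_rad)


if __name__ == "__main__":
    f_px, baseline_m = 1000.0, 0.05  # illustrative rig: 1000 px focal length, 5 cm baseline
    yaw = 1e-4                       # 0.1 mrad of drift, e.g. from thermal warping
    for z in (0.5, 2.0, 5.0):
        z_rep = depth_after_yaw_drift(z, f_px, baseline_m, yaw)
        print(f"true {z:.1f} m -> reported {z_rep:.4f} m "
              f"(error {1000 * (z_rep - z):+.1f} mm)")
```

Under these assumptions the error grows roughly with the square of distance (Δz ≈ −z²·Δθ/B), so a 0.1 mrad drift that costs about 0.5 mm at half a metre costs about 8 mm at 2 m and about 5 cm at 5 m.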

Other approaches rely on active illumination, either to triangulate the object’s position, as in some stereo vision systems, or to measure the time it takes a pulse of light to travel from the source, bounce off an object, and be captured by the imager (time of flight). One issue with approaches that rely on special illumination is that they do not work well in sunlight, because the sun overwhelms the light from the source. Another issue is errors from what is known as multipath reflection, where light from the illuminator bounces off one object onto a second object before reaching the sensor.
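
The time-of-flight principle reduces to one formula, distance = c·t/2, and the same formula shows why a multipath return misleads the sensor: a longer indirect path is indistinguishable from a farther target. The sketch assumes an idealized pulsed system with a single return per pixel; real sensors typically receive a blend of direct and indirect light, and the scene distances used here are made up.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance(round_trip_s: float) -> float:
    """Distance to a target from the measured round-trip time: d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_time(path_length_m: float) -> float:
    """Time for light to travel a total path of path_length_m metres."""
    return path_length_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Direct return: target 1.5 m away, so the light travels 3.0 m in total.
    direct = round_trip_time(2 * 1.5)
    # Multipath return (hypothetical geometry): source -> object A (1.2 m)
    # -> target (0.9 m) -> sensor (1.5 m).
    indirect = round_trip_time(1.2 + 0.9 + 1.5)
    print(f"direct:   {tof_distance(direct):.2f} m")
    print(f"indirect: {tof_distance(indirect):.2f} m")
```

In this simplified case the indirect return reads as 1.8 m instead of 1.5 m. The formula also shows the timing demands involved: a 1 cm change in distance corresponds to only about 67 ps of round-trip time.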

Despite the industry’s strong desire to deploy depth broadly, dealing with these issues increases system cost and complexity, which limits where depth can be deployed. DEPTHIQ technology is poised to enable much broader deployment that addresses the needs of the industry.

What can we expect from AIRY3D in the near future?

DEPTHIQ is set to improve object segmentation in factory automation, metrology, and safety. Industrial applications enhanced with DEPTHIQ include robotic navigation, object avoidance, proximity and positioning, as well as optical inspection, dimensioning, and process control. Safety applications include non-contact button selection and safe distancing.

Do you have any new or exciting products on the horizon that you can talk about?

We have numerous projects underway with major sensor partners and customers and expect a significant number of announcements in the coming year.

About the Author

James Carroll

Former VSD Editor James Carroll joined the team in 2013. Carroll covered machine vision and imaging from numerous angles, including application stories, industry news, market updates, and new products. In addition to writing and editing articles, Carroll managed the Innovators Awards program and webcasts.