3D ultrasound-based imaging technique combines depth cameras and robotics

A new vision-based robotic 3D ultrasound system developed by researchers from the Technical University of Munich (München, Germany; www.tum.de/en), Zhejiang University (Hangzhou, China; www.zju.edu.cn/english), and Johns Hopkins University (Baltimore, Maryland, USA; www.jhu.edu) creates accurate 3D scans even if the target limb changes position after the scan begins.

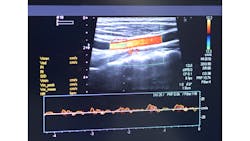

While 2D ultrasound imaging can provide images in real time even if the target moves, 3D ultrasound imaging is much more sensitive to movement. To image an entire artery, for example, a limb may need to be repositioned so the ultrasound probe can reach different parts of it. When the limb moves, the position and angle of each new 2D image no longer match those of the images acquired before the movement, which makes it difficult to stitch the images together into a 3D representation.
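For context, 3D ultrasound volumes are typically built by mapping every pixel of each 2D frame into a shared 3D coordinate frame using the probe pose recorded when that frame was captured. The minimal Python sketch below illustrates that mapping under assumed conventions (the image plane lies at z = 0 of its own frame, pixel spacing is known, and `pose` and `pixel_to_world` are hypothetical helper names); it is not the researchers' code. If the limb moves and nothing accounts for it, the recorded pose still places each pixel correctly relative to the robot, but the tissue under that pixel has shifted, so slices from before and after the movement no longer line up.

```python
import numpy as np


def pose(rotation_deg_about_z, translation_mm):
    """Build a 4x4 homogeneous transform: rotation about z, then translation (mm)."""
    a = np.deg2rad(rotation_deg_about_z)
    T = np.eye(4)
    T[:3, :3] = [[np.cos(a), -np.sin(a), 0.0],
                 [np.sin(a),  np.cos(a), 0.0],
                 [0.0,        0.0,       1.0]]
    T[:3, 3] = translation_mm
    return T


def pixel_to_world(u, v, spacing_mm, T_world_image):
    """Map pixel (u, v) of a 2D ultrasound frame into world coordinates (mm).

    Assumed convention (hypothetical): the frame lies in the z = 0 plane of
    its own image frame, with column u along x and row v along y.
    """
    p_image = np.array([u * spacing_mm[0], v * spacing_mm[1], 0.0, 1.0])
    return (T_world_image @ p_image)[:3]
```

Compounding a volume then amounts to applying `pixel_to_world` to every pixel of every frame with that frame's pose and accumulating the intensities in a voxel grid.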

Robotic ultrasound systems (RUSS) produce high-quality images by precisely controlling the contact force and orientation of the ultrasound probe. Combining robot arms with depth cameras to determine the optimal probe position further increases scan accuracy. Even so, such systems are still not effective for 3D imaging because they cannot account for movement of the scan target.
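One common way a depth camera can inform probe orientation is to fit a plane to the skin points around the target and align the probe with that plane's normal. The sketch below does this with principal component analysis; it is a generic technique assumed here for illustration, not a description of how any particular RUSS (including the one in this article) computes probe orientation, and `estimate_surface_normal` is a hypothetical name. Points are assumed to be in the camera frame, in millimeters.

```python
import numpy as np


def estimate_surface_normal(points, camera_pos=(0.0, 0.0, 0.0)):
    """Estimate the unit normal of a small patch of 3D skin points (N x 3).

    PCA: the eigenvector of the covariance matrix with the smallest
    eigenvalue is perpendicular to the best-fit plane. The sign is chosen
    so the normal points toward the camera.
    """
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    cov = np.cov((points - centroid).T)      # 3 x 3 covariance matrix
    _, eigvecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
    normal = eigvecs[:, 0]
    if np.dot(np.asarray(camera_pos, dtype=float) - centroid, normal) < 0:
        normal = -normal                     # flip to face the camera
    return normal / np.linalg.norm(normal)
```

The probe would then approach the skin along the negated normal; a real system would also have to cope with noisy depth data and choose the patch size carefully.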

The new system, described in the paper “Motion-Aware Robotic 3D Ultrasound” (bit.ly/VSD-MTN3D), combines RUSS with depth cameras to make 3D ultrasound imaging possible even when the target moves. The system uses an LBR iiwa 14 R820 robot arm from Kuka (Augsburg, Germany; www.kuka.com/en-us), a Cicada LX ultrasound machine from Cephasonics Ultrasound (Santa Clara, CA, USA; www.cephasonics.com), and an Azure Kinect 3D camera from Microsoft (Redmond, WA, USA; www.microsoft.com/en-us).

The process begins by drawing a red line on the patient’s skin to mark the path of the ultrasound probe during the scan. The Kinect camera images the line, and the software registers the path to calculate a trajectory for the robot arm. Two marker spheres with retroreflective layers are placed at either end of the red line. The spheres give the Kinect camera reliable position data and aid in extracting the region of interest and the trajectory, which helps the system account for changes in limb position during the scan.
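The article does not detail how the software extracts the drawn line. As an illustration only, the sketch below assumes the color and depth images are already registered pixel to pixel, that the camera's pinhole intrinsics (`fx`, `fy`, `cx`, `cy`) are known, and that simple color thresholds (the hypothetical `min_red` and `margin` values) isolate the red ink. Each red pixel is back-projected into 3D, the points are ordered along the line, and a few waypoints are sampled for the robot. The function name `red_line_trajectory` is hypothetical.

```python
import numpy as np


def red_line_trajectory(rgb, depth_mm, fx, fy, cx, cy,
                        n_waypoints=10, min_red=150, margin=60):
    """Turn a red line drawn on skin into ordered 3D waypoints (camera frame, mm).

    rgb:      (H, W, 3) uint8 color image
    depth_mm: (H, W) depth registered to the color image; 0 means no reading
    Thresholds are hypothetical values chosen for illustration.
    """
    r = rgb[..., 0].astype(int)
    g = rgb[..., 1].astype(int)
    b = rgb[..., 2].astype(int)
    mask = (r >= min_red) & (r - g >= margin) & (r - b >= margin) & (depth_mm > 0)
    v, u = np.nonzero(mask)                  # rows, columns of red pixels
    if u.size < 2:
        raise ValueError("red line not found")
    z = depth_mm[v, u].astype(float)
    # Pinhole back-projection of each red pixel into 3D.
    pts = np.column_stack([(u - cx) * z / fx, (v - cy) * z / fy, z])
    # Order points along the line's dominant direction (first principal axis).
    centered = pts - pts.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    pts = pts[np.argsort(centered @ vt[0])]
    # Pick evenly spaced samples along the ordered line as robot waypoints.
    idx = np.linspace(0, len(pts) - 1, n_waypoints).round().astype(int)
    return pts[idx]
```

Sorting by the first principal axis works for lines that run roughly straight along the limb; a strongly curved path would need a proper curve-ordering step. The retroreflective markers are not used in this sketch.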

Because the system assigns 3D coordinates to images during the scan, it can compensate for limb movement and stitch the images together as if the movement never took place, producing accurate 3D scan data. In testing, the system compensated for up to 40° of target rotation.
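The article does not spell out the compensation math. One standard approach, swapped in here as an assumption, is to treat the limb as rigid, estimate the transform between corresponding 3D points tracked before and after it moves (the Kabsch algorithm), and apply the inverse transform to image points acquired after the movement so every slice shares the original limb frame. The helper names are hypothetical. Kabsch needs at least three non-collinear correspondences; two marker positions alone leave rotation about the line joining them undetermined, so additional points (for example, from the depth point cloud) would be required.

```python
import numpy as np


def rigid_transform(src, dst):
    """Least-squares R, t with dst ≈ R @ src + t (Kabsch algorithm).

    src, dst: (N, 3) corresponding points, N >= 3 and not collinear.
    Assumption: the limb moves rigidly; not necessarily the paper's method.
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)      # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def undo_motion(points_after, R, t):
    """Map points acquired after the limb moved back into the pre-motion frame."""
    return (points_after - t) @ R            # row-wise R.T @ (p - t)


def rotation_angle_deg(R):
    """Magnitude of the rotation encoded by R, in degrees."""
    c = (np.trace(R) - 1.0) / 2.0
    return np.degrees(np.arccos(np.clip(c, -1.0, 1.0)))
```

The `rotation_angle_deg` helper shows how a rotation such as the 40° reported above can be read off the estimated matrix.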

The researchers proposed that a laser system could replace the drawn red line by projecting the trajectory onto the surface of the limb. And while the system was tested on simulated vascular scans, it could also be used for bone visualization.

About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.