Deep learning model identifies difficult-to-detect abnormalities in medical images

Researchers from the Skolkovo Institute of Science and Technology (Moscow, Russia; www.skoltech.ru), Institute of Computer Science at Goethe University Frankfurt (Frankfurt, Germany; www.goethe-university-frankfurt.de/), and Philips Research (Eindhoven, The Netherlands; www.philips.com) have created a new method for anomaly detection in medical images.

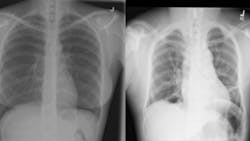

The complexity of medical images such as X-rays or lymph node scans presents a significant challenge for deep learning-based vision systems performing anomaly detection, because abnormalities in these images often strongly resemble normal images, according to the authors of the research paper, “Anomaly Detection in Medical Imaging With Deep Perceptual Autoencoders” (bit.ly/3Goojkv/).

Training conventional, supervised deep learning systems for anomaly detection would therefore require time-consuming and expensive dataset annotation by medical specialists. Abnormal cases are also much rarer than normal ones, so few labeled examples are available for such supervised training, which compounds the difficulty. To address this challenge, the researchers turned to autoencoders.

Autoencoders are a type of neural network (NN) that compress input data, in this case images, into a low-dimensional representation and then reconstruct the original input from that representation. Because the compressed representation cannot hold every detail, training forces the NN to keep only the most important features of the data needed to produce an approximate reconstruction of the original image.
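
To make the idea concrete, here is a minimal sketch, not the researchers' code: a toy linear autoencoder in plain Python that squeezes 4-pixel "images" through a code of a single number. The `LinearAutoencoder` class, the training data, and the settings are all hypothetical. Trained only on "normal" images that vary in brightness, it reconstructs those well but cannot reproduce a pattern it never saw, which is what makes reconstruction error usable for anomaly detection.

```python
import random


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LinearAutoencoder:
    """Toy autoencoder: d-pixel input -> k-number code -> d-pixel reconstruction."""

    def __init__(self, d, k, seed=0):
        rng = random.Random(seed)
        self.enc = [[rng.uniform(-0.1, 0.1) for _ in range(d)] for _ in range(k)]  # k x d
        self.dec = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(d)]  # d x k

    def reconstruct(self, x):
        return matvec(self.dec, matvec(self.enc, x))

    def reconstruction_error(self, x):
        """Mean squared error between the input and its reconstruction."""
        x_hat = self.reconstruct(x)
        return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

    def train_step(self, x, lr):
        """One gradient-descent step on the mean squared reconstruction error."""
        z = matvec(self.enc, x)
        x_hat = matvec(self.dec, z)
        g = [2 * (xh - xi) / len(x) for xh, xi in zip(x_hat, x)]  # dLoss/dx_hat
        # Gradient flowing back into the code, computed before the decoder changes.
        gz = [sum(self.dec[i][j] * g[i] for i in range(len(g))) for j in range(len(z))]
        for i, gi in enumerate(g):
            for j, zj in enumerate(z):
                self.dec[i][j] -= lr * gi * zj
        for j, gzj in enumerate(gz):
            for i, xi in enumerate(x):
                self.enc[j][i] -= lr * gzj * xi


def train_on_normal_images(steps=3000, lr=0.05, seed=0):
    """Train on hypothetical 'normal' images: uniform patches of varying brightness."""
    rng = random.Random(seed)
    ae = LinearAutoencoder(d=4, k=1, seed=seed)
    for _ in range(steps):
        brightness = rng.uniform(0.2, 1.0)
        ae.train_step([brightness] * 4, lr)
    return ae


ae = train_on_normal_images()
print(ae.reconstruction_error([0.6] * 4))               # small: resembles the training data
print(ae.reconstruction_error([1.0, -1.0, 1.0, -1.0]))  # large: a pattern never seen in training
```

A real medical-imaging autoencoder would use convolutional layers and nonlinearities; the linear toy keeps the arithmetic visible while showing the same train-on-normal, score-by-reconstruction principle.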

In their study, the researchers trained an autoencoder on normal images using perceptual loss, which measures how different a reconstruction is from the input image by comparing the features that a pretrained neural network extracts from each, rather than comparing raw pixel values. The same perceptual loss then served as the measure of how anomalous an image is. In this way, the model had more flexibility in determining what normal data, i.e., images without abnormalities, looked like. Therefore, any section of an image that still did not look normal, even when compared against such a flexible definition of a normal image, was more likely to be an anomaly.
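
Perceptual loss can be illustrated with a short, hedged sketch in plain Python. A real system would take features from a deep network pretrained on natural images; here a hypothetical `features` function built from three fixed 1-D filters followed by a ReLU stands in for that network, and the 4-pixel "images" are invented for illustration. The sketch only shows that two reconstructions with identical pixel error can differ in perceptual distance.

```python
def conv1d(signal, kernel):
    """Valid 1-D convolution (cross-correlation) of a pixel row with a small kernel."""
    n = len(kernel)
    return [sum(k * s for k, s in zip(kernel, signal[i:i + n]))
            for i in range(len(signal) - n + 1)]


# Hypothetical fixed filters standing in for a pretrained network's first layer:
# rising edge, falling edge, and local average.
FILTERS = ([-1.0, 1.0], [1.0, -1.0], [0.5, 0.5])


def features(img):
    """Stand-in feature extractor: filter responses passed through a ReLU."""
    feats = []
    for kernel in FILTERS:
        feats.extend(max(0.0, v) for v in conv1d(img, kernel))
    return feats


def pixel_distance(x, x_hat):
    """Plain mean squared error between pixels."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def perceptual_distance(x, x_hat):
    """Mean squared error between feature vectors instead of pixels."""
    fx, fy = features(x), features(x_hat)
    return sum((a - b) ** 2 for a, b in zip(fx, fy)) / len(fx)


original = [0.0, 0.0, 0.0, 0.0]
brighter = [0.5, 0.5, 0.5, 0.5]  # uniform brightness change
spot = [0.0, 1.0, 0.0, 0.0]      # small localized structure

print(pixel_distance(original, brighter), pixel_distance(original, spot))  # 0.25 0.25
print(perceptual_distance(original, brighter))  # ~0.083
print(perceptual_distance(original, spot))      # ~0.278
```

In an anomaly detector built along these lines, the score for an image would be the perceptual distance between the image and the autoencoder's reconstruction of it.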

The researchers also trained the autoencoder with progressive growing, a method that first teaches a model to recognize and reconstruct low-resolution images and then gradually increases the image resolution until the model works with high-resolution images. In this case, the method allowed the model to better learn the intricacies of the many variations found in “normal” images. This, in turn, made the model more sensitive to even very small anomalies only a few pixels in size.
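
The data side of progressive growing can be sketched in a few lines of plain Python. This is an illustrative assumption rather than the researchers' implementation: it only builds the sequence of training resolutions by repeated 2x2 average pooling, lowest resolution first, and omits the part where new network layers are added and blended in as the resolution increases. The function names and the 8x8 example image are hypothetical.

```python
def downsample(img):
    """Halve a square image's resolution with 2x2 average pooling.

    Assumes the side length is even (hypothetical simplification).
    """
    n = len(img) // 2
    return [[(img[2 * r][2 * c] + img[2 * r][2 * c + 1]
              + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4
             for c in range(n)]
            for r in range(n)]


def progressive_stages(images, min_size):
    """Return [(size, images_at_size), ...] from min_size up to full resolution.

    A progressively grown model would train on each stage in turn,
    lowest resolution first.
    """
    levels = [images]
    while len(levels[-1][0]) > min_size:
        levels.append([downsample(img) for img in levels[-1]])
    return [(len(batch[0]), batch) for batch in reversed(levels)]


# Hypothetical 8x8 "scan" whose pixel values increase left to right, top to bottom.
scan = [[float(8 * r + c) for c in range(8)] for r in range(8)]

for size, batch in progressive_stages([scan], min_size=2):
    print(size, batch[0][0][0])  # prints 2 13.5, then 4 4.5, then 8 0.0
```

Each coarse pixel is the mean of the region it covers, so early stages expose the overall layout of an image before later stages add fine detail.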

Finally, the researchers determined that including a limited number of carefully chosen annotated images of abnormalities when training the model allowed them to tune it for higher accuracy. These labeled examples allowed them to reject hyperparameter settings that clearly performed poorly at identifying anomalies.
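
One plausible way to use a handful of labeled abnormal images for tuning is sketched below in plain Python: score a small labeled validation set under each candidate setting and keep the setting whose scores best separate normal from abnormal images, measured here by ROC AUC. The AUC criterion, the candidate settings, the `score_image` function, and the validation data are all hypothetical and chosen for illustration; they are not taken from the paper.

```python
def roc_auc(normal_scores, anomaly_scores):
    """Chance that a random abnormal image outscores a random normal one (ties count half)."""
    wins = 0.0
    for a in anomaly_scores:
        for n in normal_scores:
            if a > n:
                wins += 1.0
            elif a == n:
                wins += 0.5
    return wins / (len(anomaly_scores) * len(normal_scores))


def select_hyperparameters(candidates, score_fn, normal_val, anomaly_val):
    """Keep the candidate whose anomaly scores best separate the labeled validation images."""
    best_cfg, best_auc = None, -1.0
    for cfg in candidates:
        auc = roc_auc([score_fn(cfg, img) for img in normal_val],
                      [score_fn(cfg, img) for img in anomaly_val])
        if auc > best_auc:
            best_cfg, best_auc = cfg, auc
    return best_cfg, best_auc


def score_image(cfg, per_pixel_error):
    """Hypothetical scorer: pool a per-pixel error map by its mean or its maximum."""
    if cfg["pool"] == "max":
        return max(per_pixel_error)
    return sum(per_pixel_error) / len(per_pixel_error)


# Hypothetical per-pixel reconstruction errors for a tiny labeled validation set.
normal_val = [[0.3, 0.3, 0.3, 0.3], [0.2, 0.25, 0.2, 0.25]]
anomaly_val = [[0.0, 0.0, 0.8, 0.0], [0.1, 0.6, 0.0, 0.0]]  # small, bright defects

best, auc = select_hyperparameters([{"pool": "mean"}, {"pool": "max"}],
                                   score_image, normal_val, anomaly_val)
print(best, auc)  # {'pool': 'max'} 1.0
```

Because the small defects barely move the image-wide average, mean pooling is rejected and max pooling is kept, a miniature version of how a few labeled cases can rule out poorly performing settings.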

To test the new method, the researchers used the model to detect metastases in lymph nodes using the Camelyon16 dataset (www.camelyon16.grand-challenge.org/) and a subset of the NIH dataset for the detection of abnormalities in chest X-rays (bit.ly/NIHCC-XRAY).

The researchers’ model achieved 93.4% success on the lymph node scan analysis and 92.6% success on the X-ray scan analysis, outperforming current state-of-the-art methods by 2.8% and 5.2%, respectively. The researchers have also made the source code for all their experiments publicly available.

About the Author

Dennis Scimeca

Dennis Scimeca is a veteran technology journalist with expertise in interactive entertainment and virtual reality. At Vision Systems Design, Dennis covered machine vision and image processing with an eye toward leading-edge technologies and practical applications for making a better world. Currently, he is the senior editor for technology at IndustryWeek, a partner publication to Vision Systems Design.