Imaging Technique Helps Surgeons Remove Cancerous Cells

Under the current standard of care for head-and-neck cancers, surgeons usually remove a margin of tissue around a tumor to help ensure they’ve caught all cancerous cells. In the process, however, they might remove more healthy tissue than is necessary, potentially leaving patients with debilitating problems eating, drinking, speaking, or breathing.

To improve medical outcomes for these patients, researchers at the Illinois Institute of Technology (Chicago, IL, USA; www.iit.edu) are working with surgical oncologists at the University of Groningen (Groningen, Netherlands; www.rug.nl). Using fluorescence-guided imaging, they want to evaluate tumors that have been surgically removed while patients are still in the operating suite.

The goal is to use the images of the tumors to determine if surgeons should remove more tissue from the patient during the same procedure. If the outer margins of the tumor are free of cancerous cells, there is less risk that problematic cells are still inside the patient.

If the methodology proves successful, it would be a significant change from current practice. This type of follow-up information usually isn’t available until after a patient is out of surgery and pathologists have had time to examine the tumors.

To achieve their goals, surgical oncologists specializing in head and neck cancers at the University of Groningen inject patients with a fluorescent marker before surgery. The marker attaches itself to cancerous cells, emitting a light signal that is visible under certain lighting conditions.

Guiding Fluorescent Imaging

It’s not a perfect solution, however. Fluorescent imaging uses low-energy light, which tends to be scattered rather than absorbed by tissue, according to Kenneth M. Tichauer, associate professor of biomedical engineering at the Illinois Institute of Technology. As a result, the method is not effective for detecting small groups of cancerous cells, which typically occur at the margins of the tumor. The technique also depicts cells only up to 1 mm below the surface of the tumor, whereas surgeons ideally would like to evaluate cells up to 5 mm below the surface.

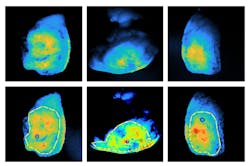

To solve these problems, Tichauer and his team developed a method that takes advantage of the scattering effect of fluorescent imaging and combines that with images taken at multiple apertures.

Why multiple apertures? “If something is deep and you take [a] picture with an open aperture or a closed aperture, you actually tend to get roughly the same looking image. But if it's close to the surface and you look at [it] with an open aperture and a closed aperture, one looks different than the other,” Tichauer says.

Based on the differences between images taken at different apertures, Tichauer and his research team developed algorithms to estimate how deep in the tissue the light-emitting cells are.
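The article does not describe the team's algorithms, so the following is only a minimal Python sketch of the general idea in Tichauer's quote: compare an open-aperture image with a closed-aperture image and treat a larger difference as a hint that the source is closer to the surface. The function names, the peak normalization, and the 3×3 intensity patches are all assumptions made up for illustration; turning such a score into a depth in millimeters would require a calibration that is not covered here.

```python
def normalize(image):
    """Scale a 2D list of non-negative intensities so its peak equals 1.0."""
    peak = max(max(row) for row in image)
    if peak <= 0:
        raise ValueError("image has no signal")
    return [[value / peak for value in row] for row in image]


def aperture_difference(open_img, closed_img):
    """Mean absolute difference between two peak-normalized images.

    Normalizing first removes the overall brightness change caused by
    closing the aperture, so only the change in shape is compared.
    """
    a = normalize(open_img)
    b = normalize(closed_img)
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same shape")
    total = sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    count = sum(len(row) for row in a)
    return total / count


# Hypothetical intensity patches, invented for this example only.
deep_open = [[2, 4, 2], [4, 8, 4], [2, 4, 2]]
deep_closed = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]      # dimmer, same shape
shallow_open = [[2, 4, 2], [4, 8, 4], [2, 4, 2]]
shallow_closed = [[0, 1, 0], [1, 4, 1], [0, 1, 0]]   # dimmer, different shape

deep_score = aperture_difference(deep_open, deep_closed)
shallow_score = aperture_difference(shallow_open, shallow_closed)
print(deep_score, shallow_score)
```

In this toy data the "deep" pair differs only in brightness, so its score is zero, while the "shallow" pair changes shape and scores higher. Real specimens would also need noise handling and image registration, which the sketch leaves out.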

The prototype microscopy vision system begins with a 780-nm LED light source (M780LP1) from Thorlabs (Newton, NJ, USA; www.thorlabs.com). On the LED, the team mounted other products from Thorlabs: an adjustable collimation adaptor (SM1U) and a bandpass excitation NIR filter centered at 780 nm (FBH05780-10).

The camera is a PCO.panda 4.2 from PCO Imaging (Kelheim, Germany; www.pco-tech.com). The team used a 24-mm Schneider High Resolution VIS-NIR Lens with an aperture range f/2–f/16 from Schneider Optics (Hauppauge, NY, USA; https://schneiderkreuznach.com/en). They mounted an emission filter from Thorlabs (FELH0800) between the camera and the lens.
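To give a sense of how far apart the two ends of that aperture range are, the short Python sketch below applies the standard f-number definition (f-number equals focal length divided by entrance-pupil diameter) to the 24-mm lens. The function names are illustrative, the calculation ignores lens transmission losses, and the article does not say which aperture settings the team actually used.

```python
import math

FOCAL_LENGTH_MM = 24.0  # lens focal length given in the article


def entrance_pupil_diameter_mm(focal_length_mm, f_number):
    """Entrance-pupil diameter from the definition N = f / D."""
    return focal_length_mm / f_number


def relative_light(f_number_a, f_number_b):
    """How many times more light aperture a admits than aperture b.

    Collected light scales with pupil area, i.e. with 1 / N squared.
    """
    return (f_number_b / f_number_a) ** 2


wide_mm = entrance_pupil_diameter_mm(FOCAL_LENGTH_MM, 2.0)
narrow_mm = entrance_pupil_diameter_mm(FOCAL_LENGTH_MM, 16.0)
ratio = relative_light(2.0, 16.0)
stops = math.log2(ratio)

print(f"f/2 pupil: {wide_mm} mm, f/16 pupil: {narrow_mm} mm")
print(f"f/2 admits {ratio}x the light of f/16 ({stops} stops)")
```

Under these assumptions the pupil shrinks from 12 mm at f/2 to 1.5 mm at f/16, a 64-fold (six-stop) drop in collected light, so exposure time or gain would likely need to change between the two settings.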

They attached this assembly to a light tube, which splits the light into two beams that strike the sample from opposite sides at 45° angles. The sample, or tumor, sits on a “stage” from Thorlabs that can be raised or lowered, depending on the thickness of the sample.

The team used MATLAB from MathWorks (Natick, MA, USA; www.mathworks.com) as the primary coding environment, explains Cody Rounds, a PhD candidate at the Illinois Institute of Technology. They used the software to control the stage, process the images, and perform calculations. “A GUI (graphical user interface) was put together using MATLAB to consolidate this all into a simple-to-use UI for the surgeons,” says Rounds.

The team tested the system on specimens from six patients during the tail end of a clinical trial headed by the University of Groningen. Tichauer says he is pleased with the results and hopes to publish information about the work in a peer-reviewed journal.

About the Author

Linda Wilson

Editor in Chief

Linda Wilson joined the team at Vision Systems Design in 2022. She has more than 25 years of experience in B2B publishing and has written for numerous publications, including Modern Healthcare, InformationWeek, Computerworld, Health Data Management, and many others. Before joining VSD, she was the senior editor at Medical Laboratory Observer, a sister publication to VSD.