Why You Don't Need Hardware Design Expertise To Develop Embedded Vision Systems

Why you don’t need hardware expertise to implement machine learning algorithms into your next embedded vision design

Traditional embedded vision applications are transforming dramatically with increasing demand for artificial intelligence (AI) in systems across all industries. These “machine learning-enhanced” embedded vision applications include collaborative robots (or “cobots”), sense-and-avoid drones, augmented reality, autonomous vehicles, automated surveillance and medical diagnostics. If you want to become the newest player in the AI game, choosing the right hardware platform is critical.

Many companies have turned to Xilinx All Programmable technology, which offers significant, concrete, and measurable advantages over alternative hardware options. Xilinx devices enable the fastest response time from sensors through efficient inference and control; they deliver reconfigurability to support the latest neural networks, algorithms and sensors; and they support any-to-any connectivity to the Internet (and to the cloud), to legacy networks, and to new machines.

But what if you aren’t a hardware design expert? Or maybe you have heard about the advantages of All Programmable technology, but are up against a significant barrier to adoption: lack of RTL and FPGA design experience and lack of support for software defined programming. The new Xilinx reVISION software defined stack for embedded vision breaks through this barrier.

Removing the Barrier with a Software Defined Development Flow

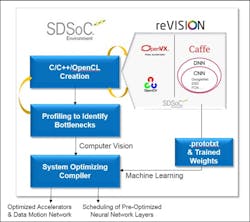

The Xilinx reVISION stack allows design teams without deep hardware expertise to combine efficient software and hardware implementations of machine-learning and computer-vision algorithms into highly responsive systems using a familiar software defined development flow. As shown in Figure 1, the reVISION development flow starts with a familiar, Eclipse-based development environment; the C, C++, and/or OpenCL programming languages; and the associated compilers, all incorporated into the Xilinx SDSoC development environment. You can target reVISION hardware platforms within the SDSoC environment, drawing from a pool of acceleration-ready computer vision libraries to quickly build your application. Soon, you'll also be able to use the Khronos Group's OpenVX framework.

Figure 1: The reVISION Software Defined Flow

For machine learning, you can use popular frameworks such as Caffe to train neural networks. Within a single Xilinx Zynq SoC or Zynq UltraScale+ MPSoC, you can use Caffe-generated .prototxt files to configure a software scheduler running on one of the device's ARM processors. That scheduler drives CNN inference accelerators that are pre-optimized for, and instantiated in, programmable logic. For computer vision and other algorithms, you can profile your code, identify bottlenecks, and then designate specific functions that should be hardware-accelerated to achieve performance goals. The Xilinx system-optimizing compiler then creates an accelerated implementation of your code, automatically including the required processor/accelerator interfaces (data movers) and software drivers.
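To make the "profile, find the bottleneck, then designate a function" loop concrete, here is a minimal sketch in plain, host-compilable C++. It is not the SDSoC API. In SDSoC, profiling and the selection of functions for hardware are handled by the tools themselves. The stage functions (`box_filter_3x3`, `frame_sum`) and the helpers (`time_ms`, `profile_pipeline`, `slowest_stage`) are hypothetical names chosen for illustration. The code simply times two pipeline stages with `std::chrono` and reports the slowest one as the first candidate to mark for acceleration.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical pipeline stage: 3x3 box filter over an 8-bit grayscale frame.
// Simple nested loops over a fixed-size neighborhood are the kind of code
// that high-level synthesis tools generally handle well.
// Border pixels are copied unchanged to keep the example short.
void box_filter_3x3(const std::vector<std::uint8_t>& in,
                    std::vector<std::uint8_t>& out, int width, int height) {
    assert(in.size() == static_cast<std::size_t>(width) * height);
    out.resize(in.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const int idx = y * width + x;
            if (y == 0 || x == 0 || y == height - 1 || x == width - 1) {
                out[idx] = in[idx];
                continue;
            }
            int sum = 0;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    sum += in[(y + dy) * width + (x + dx)];
            out[idx] = static_cast<std::uint8_t>(sum / 9);
        }
    }
}

// Hypothetical lightweight stage, e.g. a frame statistic for a control loop.
std::uint64_t frame_sum(const std::vector<std::uint8_t>& frame) {
    std::uint64_t total = 0;
    for (std::uint8_t p : frame) total += p;
    return total;
}

struct StageTiming {
    std::string name;
    double ms;
};

// Runs f once and returns the elapsed wall-clock time in milliseconds.
template <typename F>
double time_ms(F&& f) {
    const auto start = std::chrono::steady_clock::now();
    f();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}

// Times each stage once on the given frame.
std::vector<StageTiming> profile_pipeline(const std::vector<std::uint8_t>& frame,
                                          int width, int height) {
    std::vector<std::uint8_t> filtered;
    std::uint64_t sum = 0;
    std::vector<StageTiming> timings;
    timings.push_back({"box_filter_3x3", time_ms([&] {
                           box_filter_3x3(frame, filtered, width, height);
                       })});
    timings.push_back({"frame_sum", time_ms([&] { sum = frame_sum(filtered); })});
    (void)sum;  // result unused; only the timing matters here
    return timings;
}

// Returns the name of the slowest stage: the first candidate to designate
// for hardware acceleration.
std::string slowest_stage(const std::vector<StageTiming>& timings) {
    std::string name;
    double worst = -1.0;
    for (const auto& t : timings) {
        if (t.ms > worst) {
            worst = t.ms;
            name = t.name;
        }
    }
    return name;
}
```

On a real 640x480 frame, a call such as `slowest_stage(profile_pipeline(frame, 640, 480))` would typically point at the per-pixel filter rather than the single-pass sum. That is the kind of function the article describes handing to the system-optimizing compiler. Single-run timings are noisy, so a real profiling pass would average over many frames.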

The Xilinx reVISION stack is the latest in an evolutionary line of development tools for creating embedded-vision systems. Xilinx All Programmable devices have long been used to develop such vision-based systems because these devices can interface to any image sensor and connect to any network—which Xilinx calls any-to-any connectivity—and they provide the large amounts of high-performance processing horsepower that vision systems require to achieve real-time performance.

You can download a whitepaper and learn more in the reVISION Developer Zone.