Andrew Wilson, Editor

Ask anyone who has ever designed, purchased, built, installed or operated a machine vision system what they believe to be some of the most significant developments in the field and the answers will be extremely diverse. Indeed, this was just the case when, for this, our 200th Anniversary issue of Vision Systems Design, we polled many of our readers with just such a question.

Which particular individuals, companies and organizations, types of technologies, products and applications did they consider to have most significantly affected the adoption of machine vision and image processing systems?

After reviewing the answers to these questions, it became immediately apparent that the age of our audience played an important part in how their answers were formulated. Here, perhaps their (understandable) misconception was that machine vision and image processing are relatively new, dating back just half a century. In his book "Understanding and Applying Machine Vision," however, Nello Zuech points out that the concepts for machine vision are evident as far back as the 1930s, with Electronic Sorting Machines (then located in New Jersey) offering food sorters based on specific filters and photomultiplier detectors.

While it is true that machine vision systems have only been deployed for less than a century, some of the most significant inventions and discoveries that led to the development of such systems date back far longer. To thoroughly chronicle this, one could begin by highlighting the development of early Egyptian optical lens systems dating back to 700 BC, the introduction of punched paper cards in 1801 by Joseph Marie Jacquard that allowed a loom to weave intricate patterns automatically or Maxwell's 1873 unified theory of electricity and magnetism.

To encapsulate the history of optics, physics, chemistry, electronics, computer and mechanical design into a single article, however, would, of course, be a monumental task. So, rather than take this approach, this article will examine how the discoveries and inventions by early pioneers have impacted more recent inventions such as the development of solid-state cameras, machine vision algorithms, LED lighting and computer-based vision systems.

Along the way, it will highlight some of the people, companies and organizations that have made such products a reality. This article will, of necessity, be a rather more personal and opinionated piece and, as such, I welcome any additions that you feel have been omitted.

How our readers voted

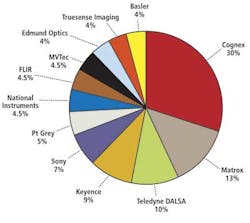

Vision Systems Design's marketing department received hundreds of responses to our questionnaire asking which companies, technologies, and individuals our readers thought had made the most important contributions to the field of machine vision. Not surprisingly, many of the companies our readers deemed to have made the greatest impact have existed for more than twenty years (Figure 1).

Of these, Cognex (Natick, MA; www.cognex.com) was mentioned more than any other company, probably due to its relatively long history, established product line and large installed customer base. Formed in 1981 by Dr. Robert J. Shillman, Marilyn Matz and William Silver, the company produces a range of hardware and software products including VisionPro software and its DataMan series of ID readers.

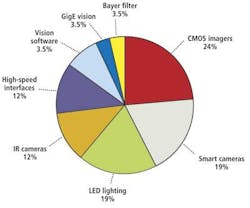

When asked what technologies and products have made the most impact on machine vision, readers' answers were rather more diverse (Figure 2). Interestingly, the emergence of CMOS image sensors, smart cameras and LED lighting, all relatively new developments in the history of machine vision, were recognized as some of the most important innovations.

Figure 2: Relatively new developments in CMOS imagers, smart cameras and LED lighting were deemed to be the most important technological and product innovations.

Capturing images

Although descriptions of the pinhole camera date back to as early as the 5th century BC, it was not until about 1800 that Thomas Wedgwood, the son of a famous English potter, attempted to capture images using paper or white leather treated with silver nitrate. Following this, Louis Daguerre and others demonstrated that a silver-plated copper plate exposed under iodine vapor would produce a coating of light-sensitive silver iodide on the surface, with the resultant fixed plate producing a replica of the scene.

These developments were followed by others in the mid-19th century, notably by Henry Fox Talbot in England, who showed that paper impregnated with silver chloride could be used to capture images. While this work would lead to the development of a multi-billion dollar photographic industry, it is interesting that, during the same time period, others were studying methods of capturing images electronically.

In 1857, Heinrich Geissler, a German physicist, developed a gas discharge tube filled with rarefied gases that would glow when a current was applied to the two metal electrodes at each end. Modifying this invention, Sir William Crookes discovered that streams of electrons could be projected towards the end of such a tube using a cathode-anode structure common in cathode ray tubes (CRTs).

In 1926, Alan Archibald Campbell-Swinton attempted to capture an image from such a tube by projecting an image onto a selenium-coated metal plate scanned by the CRT beam. Such experiments were commercialized by Philo Taylor Farnsworth who demonstrated a working version of such a video camera tube known as an image dissector in 1927.

These developments were followed by the introduction of the image Orthicon and Vidicon by RCA in 1939 and the 1950s, Philips' Plumbicon, Hitachi's Saticon and Sony's Trinicon, all of which use similar principles. These camera tubes, developed originally for television applications, were the first to find their way into cameras developed for machine vision applications.

Needless to say, being tube-based, such cameras were not exactly purpose-built for rugged applications susceptible to high levels of EMI. This was to change when, in 1969, Willard Boyle and George E. Smith, working at AT&T Bell Labs, showed how charge could be shifted along the surface of a semiconductor in what was known as a "charge bubble device". Although both were later awarded the Nobel Prize for the invention of the CCD concept, it was the English physicist Michael Tompsett, a former researcher at the English Electric Valve Company (now e2V; Chelmsford, England; www.e2v.com), who, in 1971 while working at Bell Labs, showed how the CCD could be used as an imaging device.

Three years later, the late Bryce Bayer, while working for Kodak, showed how by applying a checkerboard filter of red, green, and blue to an array of pixels on an area CCD array, color images could be captured using the device.
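The checkerboard idea can be sketched in a few lines of Python. This is an illustration only, not Kodak's implementation: the function names are hypothetical, and the RGGB starting corner is an assumption (other orderings of the same mosaic are also used). What it does show is the defining property of the Bayer pattern, namely that each pixel records just one color and that green is sampled twice as often as red or blue.

```python
# Illustrative sketch: sample a full-color image through an RGGB checkerboard
# so that each pixel keeps only one color channel. The RGGB ordering and all
# function names here are assumptions made for this example.

def bayer_channel(row, col):
    """Return the channel ('R', 'G' or 'B') an RGGB mosaic keeps at (row, col)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def to_bayer_mosaic(rgb_image):
    """Reduce rows of (r, g, b) tuples to one sampled value per pixel."""
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


def count_channels(height, width):
    """Count how many pixels of each color a height x width mosaic contains."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[bayer_channel(r, c)] += 1
    return counts


if __name__ == "__main__":
    # A uniform 4 x 4 test image in which every pixel is (R=10, G=20, B=30).
    image = [[(10, 20, 30)] * 4 for _ in range(4)]
    for mosaic_row in to_bayer_mosaic(image):
        print(mosaic_row)
    print(count_channels(4, 4))
```

Recovering a full-color image from such a mosaic requires interpolating the two missing channels at every pixel, a step that is not shown here.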

While the CCD transfers collected charge from each pixel during readout and erases the image, scientists at General Electric in 1972 developed an X-Y array of addressable photosensitive elements known as a charge injection device (CID). Unlike the CCD, the charge collected is retained in each pixel after the image is read and only cleared when charge is "injected" into the substrate. Using this technology, the blooming and smearing artifacts associated with CCDs are eliminated. Cameras based around this technology were originally offered by CIDTEC, now part of Thermo Fisher Scientific (Waltham, MA; www.thermoscientific.com).

While the term active pixel image sensor or CMOS image sensor was not to emerge for two decades, previous work on such devices dates back as far as 1967 when Dr. Gene Weckler described such a device in his paper "Operation of pn junction photodetectors in a photon flux integrating mode," IEEE J. Solid-State Circuits (http://bit.ly/12SnC7O).

Despite this, CMOS imagers were not to become widely adopted for the next thirty years, due in part to the variability of the CMOS manufacturing process. Today, however, many manufacturers of active pixel image sensors widely tout the performance of such devices as comparable to that of CCDs.

Building such devices is expensive, however, and, even with the emergence of "fabless" developers, just a handful of vendors currently offer CCD and CMOS imagers. Of these, perhaps the best known are Aptina (San Jose, CA; www.aptina.com), CMOSIS (Antwerp, Belgium; http://cmosis.com), Sony Electronics (Park Ridge, NJ; www.sony.com) and Truesense Imaging (Rochester, NY; www.truesenseimaging.com), all of whom offer a variety of devices in multiple configurations.

While the list of imager vendors may be small, the emergence of such devices has spawned literally hundreds of camera companies worldwide. While many target low-cost applications such as webcams, others such as Basler (Ahrensburg, Germany; www.baslerweb.com), Imperx (Boca Raton, FL; www.imperx.com) and JAI (San Jose, CA; www.jai.com) are firmly focused on the machine vision and image processing markets, often incorporating on-board FPGAs into their products.

Lighting and illumination

Although Thomas Edison is widely credited with the invention of the first practical electric light, it was Alessandro Volta, the inventor of the forerunner of today's storage battery, who noticed that when wires were connected to the terminals of such devices, they would glow.

In 1812, using a large voltaic battery, Sir Humphry Davy demonstrated that an arc discharge would occur and in 1860 Michael Faraday, an early associate of Davy's, demonstrated a lamp exhausted of air that used two carbon electrodes to produce light.

Building on these discoveries, Edison formed the Edison Electric Light Company in 1878 and demonstrated his version of an incandescent lamp just one year later. To extend the life of such incandescent lamps, Alexander Just and Franjo Hanaman developed and patented an electric bulb with a tungsten filament in 1904 while showing that lamps filled with an inert gas produce a higher luminosity than vacuum-based tubes.

Just as the invention of the incandescent lamp predates Edison, so too does the halogen lamp. As far back as 1882, chlorine was used to stop the blackening of the lamp and slow the thinning of the tungsten filament. However, it was not until Elmer Fridrich and Emmitt Wiley, working for General Electric in Nela Park, Ohio, patented a practical version of the halogen lamp in 1955 that such illumination devices became practical.

Like the invention of the incandescent lamp, the origins of the fluorescent lamp date back to the mid-19th century when, in 1857, Heinrich Geissler, a German physicist, developed a gas discharge tube filled with rarefied gases that would glow when a current was applied to the two metal electrodes at each end. As well as leading to the invention of commercial fluorescent lamps, this discovery would form the basis of tube-based image capture devices in the 20th century (see "Capturing images").

In 1896, Daniel Moore, building on Geissler's discovery, developed a fluorescent lamp that used nitrogen gas and founded his own companies to market them. After these companies were purchased by General Electric, Moore went on to develop a miniature neon lamp.

While incandescent and fluorescent lamps became widely popular in the 20th century, it would be research in electroluminescence that would form the basis of the introduction of solid-state LED lighting. While electroluminescence was discovered by Henry Round working at Marconi Labs in 1907, it was Oleg Losev who, in pioneering work in the mid-1920s, observed light emission from zinc oxide and silicon carbide crystal rectifier diodes when a current was passed through them (see "The life and times of the LED," http://bit.ly/o7axVN).

Numerous papers published by Mr. Losev constitute the discovery of what is now known as the LED. Like many other such discoveries, it would be years before such ideas could be commercialized. Indeed, it would not be until 1962 that Dr. Nick Holonyak, while working at General Electric and experimenting with GaAsP, produced the world's first practical red LED. One decade later, Dr. M. George Craford, a former graduate student of Dr. Holonyak, invented the first yellow LED. Further developments followed with the development of blue and phosphor-based white LEDs.

For the machine vision industry the development of such low-cost, long-life and rugged light sources has led to the formation of numerous lighting companies including Advanced illumination (Rochester, VT; www.advancedillumination.com), CCS America (Burlington, MA; www.ccsamerica.com), ProPhotonix (Salem, NH; www.prophotonix.com) and Spectrum Illumination (Montague, MI; www.spectrumillumination.com) that all offer LED lighting products in many different types of configurations.

Interface standards

The evolution of machine vision owes as much to the television and broadcast industry as it does to the development of digital computers. As programmable vacuum tube based computers were emerging in the early 1940s, engineers working on the National Television System Committee (NTSC) were formulating the first monochrome analog NTSC standard.

Adopted in 1941, this was modified in 1953, in what would become the RS-170a standard, to incorporate color while remaining compatible with the monochrome standard. Today, RS-170 is still being used in numerous digital CCD and CMOS-based cameras and frame grabber boards, allowing 525-line images to be captured and transferred at 30 frames/s.

Just as open computer bus architectures led to the development of both analog and digital camera interface boards, television standards committees followed an alternative path introducing high-definition serial digital interfaces such as SDI and HD-SDI. Although primarily developed for broadcast equipment, these standards are also supported by computer interface boards allowing HDTV images to be transferred to host computers.

To allow these computers to be networked together, Ethernet, originally developed at Xerox PARC in the mid-1970s, was formally standardized in 1985 and has become the de facto standard for local area networks. At the same time, serial buses such as FireWire (IEEE 1394), under development since 1986 by Apple Computer, were widely adopted in the mid-1990s by many machine vision camera companies.

Like FireWire, the Universal Serial Bus (USB), introduced in a similar time frame by a consortium of companies including Intel and Compaq, was also to become widely adopted by both machine vision camera companies and manufacturers of interface boards.

When first introduced, however, these standards could not support the higher bandwidths of machine vision cameras and, by their very nature, were non-deterministic. Because no high-speed point-to-point interface formally existed, the Automated Imaging Association (Ann Arbor, MI) formed the Camera Link committee in the late 1990s. Led by companies such as Basler and JAI, the well-known Camera Link standard was introduced in October 2000 (http://bit.ly/1cgEdKH).
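To put the bandwidth argument in numbers, the rough Python sketch below compares the raw data rate of a camera with the nominal signalling rate of an interface. The figures in the table are commonly quoted nominal rates (400 Mbit/s for IEEE 1394a, 480 Mbit/s for USB 2.0, and 2.04 Gbit/s for a Camera Link Base configuration at 85 MHz x 24 bits) and are not taken from this article. The function names and the example camera are hypothetical, and sustained throughput in practice is lower than these nominal values.

```python
# Rough sketch: does a camera's raw data rate fit within an interface's
# nominal signalling rate? All names and the example camera are assumptions
# made for illustration; protocol overhead is ignored.

NOMINAL_MBPS = {
    "IEEE 1394a (FireWire 400)": 400,
    "USB 2.0": 480,
    "Camera Link Base": 2040,
}


def camera_data_rate_mbps(width, height, bits_per_pixel, frames_per_second):
    """Raw, uncompressed image data rate in megabits per second."""
    return width * height * bits_per_pixel * frames_per_second / 1e6


def interfaces_that_fit(rate_mbps):
    """Names of the interfaces whose nominal rate is at least rate_mbps."""
    return [name for name, limit in NOMINAL_MBPS.items() if rate_mbps <= limit]


if __name__ == "__main__":
    # Hypothetical 1280 x 1024 camera, 8 bits per pixel, 100 frames/s.
    rate = camera_data_rate_mbps(1280, 1024, 8, 100)
    print(f"{rate:.1f} Mbit/s fits: {interfaces_that_fit(rate)}")
```

For the hypothetical camera above, the raw rate is a little over 1 Gbit/s, which exceeds both serial buses in the table but fits within a Camera Link Base configuration.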

Using a Muirhead wirephoto scanner, Dr. Sawchuk digitized the top third of a Playboy centerfold of Lena Soderberg from November 1972. Notable for its detail, color, and high and low-frequency regions, the image became very popular in numerous research papers published in the 1970s. So popular, in fact, that in May 1997, Miss Soderberg was invited to attend the 50th Anniversary IS&T conference in Boston (http://bit.ly/1bfOTD). Needless to say, in today's rather more "politically correct" world, the image seems to have fallen out of favor!

Because of the lack of processing power offered by von Neumann architectures, a number of companies introduced specialized image processing hardware in the mid-1980s. By incorporating proprietary and often parallel processing concepts, these machines were powerful but, at the same time, expensive.

Stand-alone systems by companies such as Vicom and Pixar were at the same time being challenged by modular hardware from companies such as Datacube, the developer of the first Q-bus frame grabber for Digital Equipment Corp (DEC) computers.

With the advent of PCs in the 1980s, board-level frame grabbers, processors and display controllers for the open architecture ISA bus began to emerge and, with them, software-callable libraries for image processing.

Today, with the emergence of the PC's PCI Express bus, off-the-shelf frame grabbers can be used to transfer images to the host PC at very high data rates using a number of different interfaces (see "Interface standards"). At the same time, the introduction of software packages from companies such as Microscan (Renton, WA; www.microscan.com), Matrox (Dorval, Quebec, Canada; www.matrox.com), MVTec (Munich, Germany; www.mvtec.com), Teledyne Dalsa (Waterloo, Ontario, Canada; www.teledynedalsa.com) and Stemmer Imaging (Puchheim, Germany; www.stemmer-imaging.de) makes it increasingly easy to configure even the most sophisticated image processing and machine vision systems.

Ten individuals our readers chose as pioneers in machine vision

Dr. Andrew Blake is a Microsoft Distinguished Scientist and the Laboratory Director of Microsoft Research Cambridge, England. He joined Microsoft in 1999 as a Senior Researcher to found the Computer Vision group. In 2008 he became a Deputy Managing Director at the lab, before assuming his current position in 2010. In 2011, he and colleagues at Microsoft Research received the Royal Academy of Engineering MacRobert Award for their machine learning contribution to Microsoft Kinect human motion-capture. For more information, go to: http://bit.ly/69Le5Z

Dr. William K. Pratt holds a Ph.D. in electrical engineering from the University of Southern California and has written numerous papers and books in the fields of communications, signal and image processing. He is perhaps best known for his book "Digital Image Processing" (Wiley; http://amzn.to/15sGYUd) and his founding of Vicom Systems in 1981. After joining Sun Microsystems in 1988, Dr. Pratt participated in the development of the Programmer's Imaging Kernel System (PIKS) application programming interface that was commercialized by PixelSoft (Los Altos, CA; http://pixelsoft.com) in 1993.

Mr. William Silver has been at the forefront of development in the machine vision industry for over 30 years. In 1981, he along with fellow MIT graduate student Marilyn Matz joined Dr. Robert J. Shillman, a former lecturer in human visual perception at MIT to co-found a start-up company called Cognex (Natick, MA; www.cognex.com). He was principal developer of the company's PatMax pattern matching technology and its normalized correlation search tool.

Mr. Bryce Bayer (1929-2012) will be long remembered for the filter that bears his name. After obtaining an engineering physics degree in 1951, Mr. Bayer worked as a research scientist at Eastman Kodak until his retirement in 1986. U.S. patent 3,971,065 awarded to Mr. Bayer in 1976 and titled simply "Color imaging array" is one of the most important innovations in image processing over the last 50 years. Mr. Bayer was awarded the Royal Photographic Society's Progress Medal in 2009 and the first Camera Origination and Imaging Medal from the SMPTE in 2012.

Mr. James Janesick is a distinguished scientist and author of numerous technical papers on CCD and CMOS devices, as well as several books on CCD devices including "Scientific Charge-Coupled Devices" (SPIE Press; http://bit.ly/1304e9I). Working at the Jet Propulsion Laboratory for over 20 years, Mr. Janesick developed many scientific ground and flight-based imaging systems. He received NASA medals for Exceptional Engineering Achievement in 1982 and 1992, was the recipient of the SPIE Educator Award (2004) and was named SPIE/IS&T Imaging Scientist of the Year (2007). In 2008 he was awarded the Electronic Imaging Scientist of the Year Award at the Electronic Imaging 2008 Symposium in recognition of his innovative work with electronic CCD and CMOS sensors.

Dr. Gene Weckler received a Doctor of Engineering from Stanford University and, in 1967, published the seminal "Operation of pn junction photodetectors in a photon flux integrating mode," IEEE J. Solid-State Circuits (http://bit.ly/12SnC7O). In 1971 he co-founded Reticon to commercialize the technology and, after a twenty-year career, co-founded Rad-icon in 1997 to commercialize the use of CMOS-based solid-state image sensors for X-ray imaging. Rad-icon was acquired by Teledyne DALSA (Waterloo, Ontario, Canada; www.teledynedalsa.com) in 2008. This year, he was awarded the Exceptional Lifetime Achievement Award by the International Image Sensor Society (http://bit.ly/123wpd1) for significant contributions to the advancement of solid-state image sensors.

Mr. Stanley Karandanis (1934-2007) was Director of Engineering at Monolithic Memories (MMI) when John Birkner and H.T. Chua invented the programmable array logic (PAL). Teaming with J. Stewart Dunn in 1979, Mr. Karandanis co-founded Datacube (http://bit.ly/qgzpl5) to manufacture the first commercially available single-board frame grabber for Intel's now-obsolete Multibus. After the (still existing) VMEbus was introduced by Motorola, Datacube developed a series of modular and expandable boards and processing modules known as MaxVideo and MaxModules. In recognition of his outstanding contributions to the machine vision industry, Mr. Karandanis received the Automated Imaging Achievement Award from the Automated Imaging Association (Ann Arbor, MI; www.visiononline.org) in 1999.

Dr. Rafael C. Gonzalez received a Ph.D. in electrical engineering from the University of Florida, Gainesville in 1970 and subsequently became a Professor at the University of Tennessee, Knoxville in 1984, founding the University's Image & Pattern Analysis Laboratory and the Robotics & Computer Vision Laboratory. In 1982, he founded Perceptics Corporation, a manufacturer of computer vision systems that was acquired by Westinghouse in 1989. Dr. Gonzalez is author of four textbooks in the fields of pattern recognition, image processing, and robotics including "Digital Image Processing" (Addison-Wesley Educational Publishers), which he co-authored with Dr. Paul Wintz. In 1988, Dr. Gonzalez was awarded the Albert Rose National Award for Excellence in Commercial Image Processing.

Dr. Azriel Rosenfeld (1931-2004) is widely regarded as one of the leading researchers in the field of computer image analysis. With a doctorate in mathematics from Columbia University in 1957, Dr. Rosenfeld joined the University of Maryland faculty where he became Director of the Center for Automation Research. During his career, he published over 30 books, making fundamental and pioneering contributions to nearly every area of the field of image processing and computer vision. Among his numerous awards is the IEEE's Distinguished Service Award for Lifetime Achievement in Computer Vision and Pattern Recognition.

Dr. Gary Bradski is a Senior Scientist at Willow Garage (Menlo Park, CA; www.willowgarage.com) and is a Consulting Professor in the Computer Sciences Department of Stanford University. With 13 issued patents, Dr. Bradski is responsible for the Open Source Computer Vision Library (OpenCV), an open source computer vision and machine learning software library built to provide a common infrastructure for computer vision applications in research, government and commercial applications. Dr. Bradski also organized the vision team for Stanley, the Stanford robot that won the DARPA Grand Challenge and founded the Stanford Artificial Intelligence Robot (STAIR) project under the leadership of Professor Andrew Ng.

Will machine vision replace human beings?

by John Salls, President, Vision ICS (Woodbury, MN; www.vision-ics.com)

Technology by its nature makes human beings more efficient. Automobiles replaced stable hands and horse trainers. Power tools make carpenters more efficient, yet nobody would suggest that we should eliminate nail guns or circular saws because they "replace people". Automation of any kind is the same, making people more efficient and providing them with goods that they can afford.

You could hire a professional baker to make a batch of waffles, freeze them, put them in a bag, put them in a box, and sell them. But it would cost $10-$20 a box, not the $3-$4 that we pay while still allowing the company that makes them to make a profit. To make them in quantity would take an army of bakers, not a half dozen semi-skilled laborers that run a production line.

If you happen to be a baker you might argue that automation replaces your task. However, as a society we are all better off as we have increased purchasing power and can afford to purchase products such as waffles, clothing, shoes, computers, homes, furniture, and automobiles.

If I had to have my computer built without automation it would be impossible to afford for anybody without a NASA budget. If you are that baker, open a high end bakery making special occasion cakes, get a job in a restaurant, or change careers.

At the turn of the last century, my ancestors were heavily invested in stables. The automobile destroyed their business. They could have complained about it and it still would not have diminished the effect of the introduction of the automobile or made their business survive. Instead we adapted and went into different businesses, we survived, and thrived.

I live in a home larger than my ancestors', have two trucks, multiple computers, a kitchen and garage full of gadgets, affordable clothing, plenty of food in my fridge, and live well largely thanks to automation. I think most of us can say that automation makes our lives better. Even our poor and unemployed live better as a result of automation. Food is fresher and safer. Goods are more abundant and cost less.

Featured articles from our last 200 issues

To achieve dynamic tracking of cranes, SmartCrane has designed an embedded vision system that combines a smart camera from Vision Components (Ettlingen, Germany; www.vision-components.com) with a tilt encoder and IMU to transmit positional information of the head block to the operator's console. See: http://bit.ly/MJ3AVq

To take foot measurements, Corpus.e developed a 3-D foot scanner that uses a USB 2.0 industrial camera from IDS Imaging Development Systems (Obersulm, Germany; www.ids-imaging.de). See: http://bit.ly/14Ilu32

Engineers at CMERP developed a machine-vision system that uses off-the-shelf imaging components from SVS-Vistek (Seefeld, Germany; www.svs-vistek.com) to measure fill levels on olive oil bottles as they pass along a production line. See: http://bit.ly/14Ilu32

Microscopic striations on the surface of fired bullets and cartridge cases are routinely used to associate a bullet with a particular weapon. To image these, Intelligent Automation developed a system using a frame grabber from The Imaging Source (Bremen, Germany; www.theimagingsource.com) capable of capturing the three-dimensional (3-D) topology of the bullet's surface. See: http://bit.ly/142VqDF

With pixel sizes of CCD and CMOS image sensors becoming smaller, system integrators must pay careful attention to their choice of optics. Read how Greg Hollows of Edmund Optics (Barrington, NJ; www.edmundoptics.com) and Stuart Singer of Schneider Optics (Hauppauge, NY; www.schneideroptics.com) explain how to choose the correct optics for your application. See: http://bit.ly/wHAy1h

Researchers at the Fraunhofer Institute for Integrated Circuits (Erlangen, Germany; www.iis.fraunhofer.de) developed a camera dubbed Polka that uses a conventional CMOS image sensor of 1120 × 512 pixels overlaid with a 2 × 2 matrix of polarizing filters to analyze the stress formed in molded glass and plastic products. See: http://bit.ly/xEqDMF
